Social Psychological Methods Outside the Laboratory

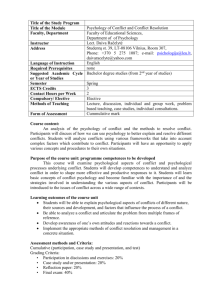
