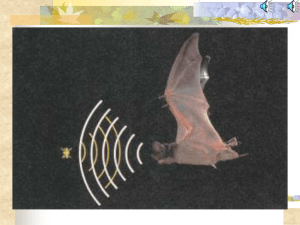

Auditory scene analysis by echolocation in bats

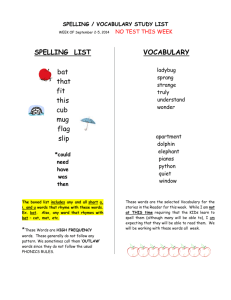

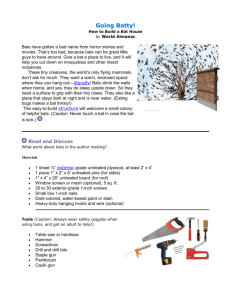

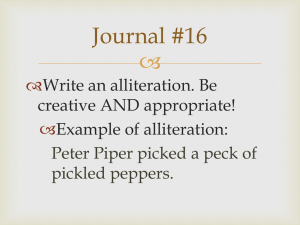

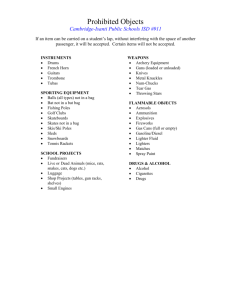
