Discovering Attribute and Entity Synonyms

Discovering Attribute and Entity Synonyms for

Knowledge Integration and Semantic Web Search

Hamid Mousavi¹, Shi Gao², Carlo Zaniolo³

Technical Report #130013

¹,²,³ Computer Science Department, UCLA

Los Angeles, USA

¹ hmousavi@cs.ucla.edu, ² gaoshi@cs.ucla.edu, ³ zaniolo@cs.ucla.edu

Abstract— There is growing interest in supporting semantic search on knowledge bases such as DBpedia, YaGo, FreeBase, and other similar systems, which play a key role in many semantic web applications. Although these systems often use the standard RDF format ⟨subject, attribute, value⟩, the sharing of their knowledge is hampered by the fact that various synonyms are frequently used to denote the same entity or attribute. Even an individual system may use alternative synonyms in different contexts, and polynyms are also a frequent problem. Recognizing such synonyms and polynyms is critical for improving the precision and recall of semantic search. Most previous efforts in this area have focused on entity synonym recognition, whereas attribute synonyms were neglected, and so was the use of context to select the appropriate synonym. For instance, the attribute ‘birthdate’ can be a synonym for ‘born’ when it is used with a value of type ‘date’; but if ‘born’ comes with values that indicate places, then ‘birthplace’ should be considered as its synonym. Thus, the context is critical for finding more specific and accurate synonyms.

In this paper, we propose new techniques to generate context-aware synonyms for the entities and attributes, which we use to reconcile knowledge extracted from various sources. To this end, we propose the Context-aware Synonym Suggestion System (CS³), which learns synonyms from text by using our NLP-based text mining framework, called SemScape, and also from existing evidence in the current knowledge bases. Using CS³ and our previously proposed knowledge extraction system IBminer, we integrate some of the publicly available knowledge bases into one of superior quality and coverage, called IKBstore.

I. INTRODUCTION

The importance of knowledge bases in semantic-web applications has motivated the endeavors of several important

projects that have created the public-domain knowledge bases

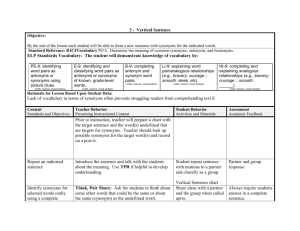

shown in Table I. The project described in this paper seeks

to integrate and extend these knowledge bases into a more

complete and consistent repository named Integrated Knowledge Base Store (IKBstore). IKBstore will provide much better

support for advanced web applications, and in particular for

user-friendly search systems that support Faceted Search [5]

and By-Example Structured Queries [6]. Our approach in

achieving this ambitious goal involves the four main tasks of:

A. Integrating existing knowledge bases by converting them into a common internal representation and storing them in IKBstore.

B. Completing the integrated knowledge base by extracting more facts from free text.

C. Generating a large corpus of context-aware synonyms that can be used to resolve inconsistencies in IKBstore and to improve the robustness of query answering systems.

D. Resolving incompleteness in IKBstore by using the synonyms generated in Task C.

At the time of this writing, Tasks A and B are completed, and the other tasks are well under way. The tools developed to perform Tasks B, C, and D, and the initial results they produced, will be demonstrated at the VLDB conference in Riva del Garda [20]. The rest of this section and the next section introduce the various intertwined aspects of these tasks, while the remaining sections provide in-depth coverage of the techniques used for synonym generation and the very promising experimental results they produced.

Task A was greatly simplified by the fact that many projects, including DBpedia [7] and YaGo [18], represent the information derived from the structured summaries of Wikipedia (a.k.a. InfoBoxes) by RDF triples of the form ⟨subject, attribute, value⟩, which specifies the value for an attribute (property) of a subject. This common representation facilitates the use of these knowledge bases by a roster of semantic-web applications, including queries expressed in SPARQL, and user-friendly search interfaces [5], [6]. However, the coverage and consistency provided by each individual system remain limited. To overcome these problems, this project is merging, completing, and integrating these knowledge bases at the semantic level.

Name               Size (MB)   Number of Entities (10^6)   Number of Triples (10^6)
ConceptNet [27]         3075                        0.30                        1.6
DBpedia [7]            43895                        3.77                        400
FreeBase [8]           85035                         ≈25                        585
Geonames [2]            2270                         8.3                         90
MusicBrainz [3]        17665                        18.3                       ≈131
NELL [10]               1369                        4.34                         50
OpenCyc [4]              240                        0.24                        2.1
YaGo2 [18]             19859                        2.64                        124

TABLE I. SOME OF THE PUBLICLY AVAILABLE KNOWLEDGE BASES

Task B is mainly to complete the initial knowledge base

using our knowledge extraction system called IBminer [22].

IBminer employs an NLP-based text mining framework, called

SemScape, to extract initial triples from the text. Then using

a large body of categorical information and learning from

matches between the initial triples and existing InfoBox items

in the current knowledge base, IBminer translates the initial

triples into more standard InfoBox triples.

The integrated knowledge base so obtained will represent

a big step forward, since it will (i) improve coverage, quality

and consistency of the knowledge available to semantic web

applications and (ii) provide a common ground for different contributors to improve the knowledge bases in a more

standard and effective way. However, a serious obstacle in achieving such a desirable goal is that different systems do not adhere to a standard terminology to represent their knowledge, and instead use a plethora of synonyms and polynyms.

Thus, we need to resolve synonyms and polynyms for the

entity names as well as the attribute names. For example,

by knowing ‘Johann Sebastian Bach’ and ‘J.S. Bach’ are

synonyms, the knowledge base can merge their triples and

associate them with a single name. As for polynyms, the problem is even more complex. Most of the time, one must rely on the context (or popularity) to decide the correct meaning of a vague term such as ‘JSB’, which may refer to ‘Johann Sebastian Bach’, ‘Japanese School of Beijing’, etc.
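The merging step mentioned above can be illustrated with a minimal Python sketch. It assumes a synonym table is already available; the table `ENTITY_SYNONYMS`, the function `merge_entity_synonyms`, and the sample triples are hypothetical names and data chosen for illustration, not part of IKBstore.

```python
from collections import defaultdict

# Hypothetical synonym table: alias -> canonical entity name (illustrative only).
ENTITY_SYNONYMS = {
    "J.S. Bach": "Johann Sebastian Bach",
}

def merge_entity_synonyms(triples, synonyms):
    """Group (subject, attribute, value) triples under one canonical subject name."""
    merged = defaultdict(set)
    for subject, attribute, value in triples:
        canonical = synonyms.get(subject, subject)  # unknown names stay unchanged
        merged[canonical].add((attribute, value))   # a set drops duplicate facts
    return dict(merged)

triples = [
    ("Johann Sebastian Bach", "occupation", "composer"),
    ("J.S. Bach", "birthdate", "1685"),
    ("J.S. Bach", "occupation", "composer"),
]
merged = merge_entity_synonyms(triples, ENTITY_SYNONYMS)
```

A plain lookup table like this cannot handle polynyms such as ‘JSB’, because the right canonical name depends on the context in which the term appears.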

Several efforts to find entity synonyms have been reported in

recent years [11], [12], [13], [15], [25]. However, the synonym

problem for attribute names has received much less attention,

although they can play a critical role in query answering. For

instance, the attribute ‘birthdate’ can be represented with terms

such as ‘date of birth’, ‘wasbornindate’, ‘born’, and ‘DoB’

in different knowledge bases, or even in the same one when

used in different contexts. Unless these synonyms are known,

a search for musicians born, say, in 1685 is likely to produce

a dismal recall.
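The effect on recall can be made concrete with a small Python sketch. It assumes a toy synonym table keyed by the attribute name and the type of its value; `ATTRIBUTE_SYNONYMS`, `value_type`, `normalize`, and `born_in` are hypothetical helpers for illustration, and the type check is deliberately naive.

```python
# Hypothetical context-aware synonym table: (attribute, value type) -> canonical attribute.
ATTRIBUTE_SYNONYMS = {
    ("born", "date"): "birthdate",
    ("born", "place"): "birthplace",
    ("date of birth", "date"): "birthdate",
    ("wasbornindate", "date"): "birthdate",
    ("DoB", "date"): "birthdate",
}

def value_type(value):
    """Toy type check: a four-digit string counts as a date (year), anything else as a place."""
    return "date" if value.isdigit() and len(value) == 4 else "place"

def normalize(triple):
    """Rewrite the attribute name to its canonical form for the given value type."""
    subject, attribute, value = triple
    canonical = ATTRIBUTE_SYNONYMS.get((attribute, value_type(value)), attribute)
    return (subject, canonical, value)

def born_in(triples, year):
    """Structured query: subjects whose 'birthdate' equals the given year."""
    return sorted({s for s, a, v in triples if a == "birthdate" and v == year})

triples = [
    ("Johann Sebastian Bach", "born", "1685"),
    ("Johann Sebastian Bach", "born", "Eisenach"),
    ("George Frideric Handel", "wasbornindate", "1685"),
    ("Domenico Scarlatti", "birthdate", "1685"),
]
raw_hits = born_in(triples, "1685")                                # one of three musicians
normalized_hits = born_in([normalize(t) for t in triples], "1685")  # all three musicians
```

Note that ‘born’ maps to ‘birthdate’ or ‘birthplace’ depending on the value, which is the kind of context-dependent choice discussed in this paper.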

To address the aforementioned issues, we propose our Context-aware Synonym Suggestion System (CS³ for short). CS³ mainly performs Tasks C and D by first extracting context-aware attribute and entity synonyms, and then using them to improve the consistency of IKBstore. CS³ learns attribute synonyms by matching morphological information in free text to the existing structured information. Similar to IBminer, CS³ takes advantage of a large body of categorical information available in Wikipedia, which serves as the contextual information. Then, CS³ improves the attribute synonyms so discovered by using triples with matching subjects and values but different attribute names. After unifying the attribute names in different knowledge bases, CS³ finds subjects with similar attributes and values as well as similar categorical information to suggest more entity synonyms. Through this process, CS³ uses several heuristics and takes advantage of currently existing interlinks such as DBpedia’s alias, redirect, externalLink, or sameAs links, as well as the interlinks provided by other knowledge bases.

In this paper, we describe the following contributions:

• The Context-aware Synonym Suggestion System (CS³), which generates synonyms for both entities and attributes in existing knowledge bases. CS³ uses free text and existing structured data to learn patterns for suggesting attribute synonyms. It also uses several heuristics to improve existing entity synonyms.

• Novel techniques to integrate several public knowledge bases and convert them into a general knowledge base. To this end, we initially collect the knowledge bases and integrate them by exploiting the subject interlinks they provide. Then, IBminer is used to find more structured information from free text to extend the coverage of the initial knowledge base. At this point, we use CS³ to resolve attribute synonyms on the integrated knowledge base, and to suggest more entity synonyms based on their context similarity and other evidence. This improves the performance of semantic search over our knowledge base, since more standard and specific terms are used for both entities and attributes.

• An implementation of our system and preliminary experiments on public knowledge bases, namely DBpedia and YaGo, and on text from Wikipedia pages. The initial results so obtained are very promising and show that CS³ improves the quality and coverage of the existing knowledge bases by applying synonyms in knowledge integration. The evaluation results also indicate that IKBstore can reach up to 97% accuracy.

The rest of the paper is organized as follows. In the next section, we explain the high-level tasks in IKBstore. Then, in Section III, we briefly discuss the techniques used by IBminer to generate structured information from text. In Section IV, we propose CS³ to learn context-aware synonyms. In Section V, we discuss how these subsystems are used to integrate our knowledge bases. The preliminary results of our approach are presented in Section VI. We discuss some related work in Section VII and conclude the paper in Section VIII.

II. THE BIG PICTURE

As already mentioned, the goal of IKBstore is to integrate

the public knowledge bases and create a more consistent and

complete knowledge base. IKBstore performs four tasks to achieve this goal. Here we elaborate on these four tasks in more detail:

Task A: Collecting publicly available knowledge bases, unifying the knowledge representation format, and integrating the knowledge bases using existing interlinks and structured information. Creating the initial knowledge base is actually a straightforward task (Subsection V-B), since many of the existing knowledge bases represent their knowledge in RDF format. Moreover, they usually provide information to interlink a considerable portion of their subjects to those in DBpedia. Thus, we use such information to create the initial integrated knowledge base. To ease our discussion, we refer to the initial integrated knowledge base as the initial knowledge base. Although this naive integration may improve the coverage of the initial knowledge base, it still needs considerable improvement in consistency and quality.

Task B: Completing the initial knowledge base using accompanying text. In order to do so, we employ the IBminer system [22] to generate structured data from the free text available at Wikipedia or similar resources. IBminer first generates semantic links between entity names in the text using the recently proposed text mining framework SemScape. Then, IBminer learns common patterns called Potential Matches (PMs) by matching the current triples in the initial knowledge base to the semantic links derived from free text. It then employs the PMs to extract more InfoBox triples from text. These newly found triples are then merged with the initial knowledge base to improve its coverage. Section III provides more information about this process.

Task C: Generating a large corpus of context-aware synonyms. Since IBminer learns by matching structured data to the morphological structure in the text, it may find more than one acceptable matching attribute name for a given link name from the text. This in fact implies possible attribute synonyms, and it is the main intuition that CS³ uses to learn attribute synonyms. Based on PM, CS³ creates the Potential Attribute Synonyms (PAS) structure, which is similar in nature to PM. However, instead of mapping link names into attribute names, PAS provides mappings between different attribute names based on the categorical information of the subject and the value. Similar to the case of IBminer, the categorical information serves as the contextual information and improves the final results of the generated attribute synonyms. As described in Section IV-A, CS³ improves PAS by learning from the triples with matching subjects and values but different attribute names in the current knowledge base. CS³ also recognizes context-aware entity synonyms by considering the categorical information and InfoBoxes of the entities (Subsection IV-B).

Task D: Realigning attribute and entity names to construct the final IKBstore. This is indeed the most important step in preparing the knowledge bases for structured queries. Here, we first use PAS to resolve attribute synonyms in the current knowledge base. Then, we use the entity synonyms suggested by CS³ to integrate entity synonyms and their InfoBoxes. This step is covered in Section V.

Applications: IKBstore can benefit a wide variety of applications, since it covers a large number of structured summaries represented with a standard terminology. Knowledge extraction and population systems such as IBminer [22] and OntoMiner [23], knowledge browsing tools such as DBpedia Live [1] and InfoBox Knowledge-Base Browser (IBKB) [20], and semantic web search such as Faceted Search [5] and By-Example Structured Queries [6] are three prominent examples of such applications. In particular for semantic web search, IKBstore improves the coverage and accuracy of structured queries due to its superior quality and coverage with respect to existing knowledge bases. Moreover, IKBstore can serve as a common ground for different contributors to improve the knowledge bases in a more standard and effective way. Using multiple knowledge bases in IKBstore can also be a good means for verifying the correctness of the current structured summaries as well as those generated from the text.

III. FROM TEXT TO STRUCTURED DATA

To perform the nontrivial task of generating structured data from text, we use our IBminer system [22]. Although IBminer’s process is quite complex, we can divide it into three high-level steps, which are elaborated in this section. The first step is to parse the sentences in the text and convert them to a more machine-friendly structure called TextGraphs, which contain grammatical and semantic links between entities mentioned in the text. As discussed in Subsection III-A, this step is performed by the NLP-based text mining framework SemScape [21], [22]. The second step is to learn a structure called Potential Match (PM). As explained in Subsection III-B, PM contains context-aware potential matches between semantic links in the TextGraphs and existing InfoBox items. As the third step, PM is used to suggest the final structured summaries (InfoBoxes) from the semantic links in the TextGraphs. This phase is described in Subsection III-C.

A. From Text to TextGraphs

To generate TextGraphs from text, we employ the SemScape system, which uses morphological information in the text to capture the categorical, semantic, and grammatical relations between words and terms in the text. To understand the general idea, consider the following sentence:

Motivating Sentence: “Johann Sebastian Bach (31 March 1685 - 28 July 1750) was a German composer, organist, harpsichordist, violist, and violinist of the Baroque Period.”

There are several entity names in this sentence (e.g. ‘Johann Sebastian Bach’, ‘31 March 1685’, and ‘German composer’). The first step in SemScape is to recognize these entity names. Thus, SemScape parses the sentence with the Stanford parser [19], which is a probabilistic parser. Using around 150 tree-based patterns (rules), SemScape finds such entity names and annotates nodes in the parse trees with the possible entity names they contain. These annotations are called MainParts (MPs), and the annotated parse tree is referred to as an MP Tree. With the nodes annotated with their MainParts, other rules do not need to know the underlying structure of the parse trees at each node. As a result, one can provide simpler, fewer, and more general rules to mine the annotated parse trees.

Next, SemScape uses another set of tree-based patterns to find grammatical connections between words and entity names in the parse trees and combine them into the TextGraph. One such TextGraph for our motivating sentence is shown in Figure 1. Currently SemScape contains more than 290 manually created rules to perform this step. Each link in the TextGraph is also assigned a confidence value indicating SemScape’s confidence in the correctness of the link. We should point out

that TextGraphs support nodes consisting of multiple nodes (and links) through their hyper-links, which mainly differentiates TextGraphs from similar structures such as dependency trees. For more details on the TextGraph generation phase, readers are referred to [22].

Fig. 1. Part of the TextGraph for our motivating sentence.

Although useful for some applications, the grammatical connections at this stage of the TextGraphs are not enough for IBminer to generate structured data. The reason is that IBminer needs connections between entity names, which we refer to as semantic links. Semantic links simply specify any relation between two entities¹. With this definition, most of the grammatical links in the TextGraphs are not semantic links. To generate more semantic links, IBminer uses a set of manually created graph-based patterns (rules) over the TextGraphs. One such rule is provided below:

¹ SemScape treats values as entities.

------------------------------ Rule 1. ------------------------------

SELECT ( ?1 ?3 ?2 )

WHERE

{

?1 "subj of" ?3.

?2 "obj of" ?3.

NOT("not" "prop of" ?3).

NOT("no" "det of" ?1).

NOT("no" "det of" ?2).

}

----------------------------------------------------------------------

As depicted in part a) of Figure 2, the pattern graph (WHERE clause of Rule 1) specifies two nodes with (variable) names ?1 and ?2, which are connected to a third node (?3) with subj of and obj of links, respectively. One possible match for this pattern in the TextGraph of our running example is depicted in part b) of Figure 2. It is worth mentioning that, due to the structure of the TextGraphs, matching multi-word entities to the variable names in the patterns is an easy task for IBminer. This is actually a challenging issue in works such as [30], which are based on dependency parse trees. Using the SELECT clause of Rule 1, the rule returns several triples for our running example, such as:

• ⟨‘johann sebastian bach’, ‘was’, ‘composer’⟩,

• ⟨‘sebastian bach’, ‘was’, ‘composer’⟩,

• ⟨‘bach’, ‘was’, ‘composer’⟩, etc.

The above triples are referred to as the initial triples. In IBminer, we have created 98 graph-based rules to capture semantic links (initial triples) from TextGraphs and add them to the same TextGraph. Some of the semantic links generated for our motivating sentence are shown in Figure 3. For the sake of simplicity, we do not depict grammatical links in this graph. We should restate that all the patterns discussed in this subsection are manually created, and readers should not confuse them with the automatically generated patterns that we discuss in the next subsections.

Fig. 2. Part a) shows the graph pattern for Rule 1, and part b) depicts one of the possible matches for this pattern.

B. Generating Potential Matches (PM)

To generate the final structured data (new InfoBox triples), IBminer learns an intermediate data structure called Potential Matches (PM) from the mapping between initial triples (semantic links in TextGraphs) and current InfoBox triples. For instance, consider the initial triple ⟨Bach, was, composer⟩ in Figure 3. Also assume that the current InfoBoxes include ⟨Bach, Occupation, composer⟩. This implies a simple pattern saying that the link name ‘was’ may be translated to the attribute name ‘Occupation’, depending on the context of the subject (‘Bach’ in this case) and the value (‘composer’). We refer to these matches as potential matches, and the goal here is to automatically generate and aggregate all potential matches.

To understand why we need to consider the context of the subject and the value, this time consider the two initial triples ⟨Bach, was, composer⟩ and ⟨Bach, was, German⟩ in the TextGraph in Figure 3. Obviously the link name ‘was’ should be interpreted differently in these two cases, since the former connects a ‘person’ to an ‘occupation’, while the latter is between a ‘person’ and a ‘nationality’. Now, consider two existing InfoBox triples ⟨Bach, occupation, composer⟩ and ⟨Bach, nationality, German⟩, which respectively match the two initial triples above. These two matches imply a simple pattern saying that the link name ‘was’ connecting ‘Bach’ and ‘composer’ should be interpreted as ‘occupation’, while it should be interpreted as ‘nationality’ if it is used between ‘Bach’ and ‘German’. To generalize these implications, instead of using the subject and value names, we use the categories they belong to in our patterns. For instance, knowing that ‘Bach’ is in category ‘Cat:Person’ and that ‘composer’ and ‘German’ are respectively in categories ‘Cat:Occupation in Music’ and ‘Cat:European Nationality’, we learn the following two patterns:

• ⟨Cat:Person, was, Cat:Occupation in Music⟩: occupation

• ⟨Cat:Person, was, Cat:European Nationality⟩: nationality

Here the pattern $\langle c_1, l, c_2 \rangle : \alpha$ indicates that the link named $l$, connecting a subject in category $c_1$ to an entity or value in

category $c_2$, may be interpreted as the attribute name $\alpha$. Note that for each triple with a matching InfoBox triple, we create several patterns, since the subject and the value usually belong to more than one (direct or indirect) category.

Fig. 3. Some semantic links for our motivating sentence.

More formally, let $\langle s, l, v \rangle$ be an initial triple in the TextGraph, which matches the InfoBox triple $\langle s, \alpha, v \rangle$ in the initial knowledge base. Also, let $s$ and $v$ respectively belong to category sets $C_s = \{c_{s1}, c_{s2}, \ldots\}$ and $C_v = \{c_{v1}, c_{v2}, \ldots\}$ according to the categorical information in Wikipedia. Later in this subsection, we discuss a simple yet effective technique for selecting a small set of related categories for a given subject or value. For each $c_s \in C_s$ and $c_v \in C_v$, IBminer creates the following tuple and adds it to PM:

$\langle c_s, l, c_v \rangle : \alpha$

Each tuple in PM is also associated with a confidence value $c$ (initialized to the confidence of the TextGraph’s semantic link) and an evidence frequency $e$ (initialized to 1). More matches for the same categories and the same link will increase the confidence and evidence count of the above potential match. The above potential match basically means that for an initial triple $\langle s', l, v' \rangle$ in which $s'$ belongs to category $c_s$ and $v'$ belongs to $c_v$, $\alpha$ may be a match for $l$ with confidence $c$ and evidence $e$.

Later, in Section IV, we show how CS³ uses the PM structure to build up a Potential Attribute Synonym structure to generate high-quality synonyms.

Selecting Best Categories: A very important issue in generating potential matches is the quality and quantity of the categories for the subjects and values. The direct categories provided for most of the subjects are too specific, and there are only a few subjects in each of them. As shown in Section VI, generating the potential matches over direct categories is not very helpful for generalizing the matches to new subjects. On the other hand, exhaustively adding all the indirect (or ancestor) categories will result in too many inaccurate potential matches. For instance, considering only four levels of categories in Wikipedia’s taxonomy, the subject ‘Johann Sebastian Bach’ belongs to 422 categories. In this list, there are some useful indirect categories such as ‘German Musicians’ and ‘German Entertainers’, as well as several categories which are either too general or inaccurate (e.g. ‘People by Historical Ethnicity’ and ‘Centuries in Germany’). Considering the same issue for the value part, hundreds of thousands of potential matches may be generated for a single subject. This issue not only wastes our resources, but also impacts the accuracy of the final results.

To address this issue, we use a flow-driven technique to rank all the categories to which subject $s$ belongs, and then select the best $N_C$ categories. The main intuition is to propagate flows or weights through different paths from $s$ to each category; the categories receiving more weight are considered to be more related to $s$. Now, with $L$ being the number of allowed ancestor levels, we create the categorical structure for $s$ up to $L$ levels. Starting with node $s$ as the root of this structure and assigning weight 1.0 to it, we iteratively select the closest node to $s$ that has not been processed yet, propagate its weight to its parent categories, and mark it as processed. To propagate the weight of node $c_i$ with $k$ ancestors, we increase the current weight of each of the $k$ ancestors by $w_i/k$, where $w_i$ is the current weight of node $c_i$. Although $w_i$ may change even after $c_i$ is processed, we do not re-process $c_i$ after any further updates to its weight. After propagating the weight to all the nodes, we select the top $N_C$ categories for generating potential matches and attribute synonyms.

C. Generating New Structured Summaries

To extract new structured summaries (InfoBox triples), IBminer uses PMs to translate the link names of the initial triples into the attribute names of InfoBoxes. Let $t = \langle s, l, v \rangle$ be the initial triple whose link ($l$) needs to be translated, and let $s$ and $v$ be listed in category sets $C_s = \{c_{s1}, c_{s2}, \ldots\}$ and $C_v = \{c_{v1}, c_{v2}, \ldots\}$ respectively, which are generated by our category selection algorithm. The key idea to translate $l$ is to take a consensus among all pairs of categories in $C_s$ and $C_v$ and all possible attributes to decide which attribute name is a possible match.

To this end, for each $c_s \in C_s$ and $c_v \in C_v$, IBminer finds all potential matches such as $\langle c_s, l, c_v \rangle : \alpha_i$. The resulting set of potential matches is then grouped by the attribute names, $\alpha_i$’s, and for each group we compute the average confidence value and the aggregate evidence frequency of the matches. IBminer uses two thresholds at this point to discard low-confidence (threshold $\tau_c$) or low-frequency (threshold $\tau_e$) potential matches, which are discussed in Section VI. Next, IBminer filters the remaining results by a very effective type-checking technique². At this point, if there exist one or more matches in this list, we pick the one with the largest evidence value, say $pm_{max}$, as the only attribute map and report the new InfoBox triple $\langle s, pm_{max}.\alpha_i, v \rangle$ with confidence $t.c \times pm_{max}.c$ and evidence $t_n.e$. Secondary possible matches are considered in the next section as attribute synonyms.
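The steps of Subsections III-B and III-C can be sketched end to end in Python. This is a minimal illustration under stated assumptions, not IBminer’s implementation: the function names (`rank_categories`, `add_match`, `translate`), the toy category graph, and the confidence values are hypothetical. The text does not say how the confidence of a PM tuple grows with new matches, so the sketch assumes a running average, and it omits the type-checking filter described in [22].

```python
from collections import defaultdict, deque

def rank_categories(subject, parents, max_levels, top_n):
    """Flow-driven ranking: push weight from the subject up to its ancestor categories."""
    weight = defaultdict(float)
    weight[subject] = 1.0
    depth = {subject: 0}
    queue = deque([subject])              # FIFO order processes the closest nodes first
    while queue:
        node = queue.popleft()            # each node is processed exactly once
        ups = parents.get(node, [])
        if depth[node] >= max_levels or not ups:
            continue
        share = weight[node] / len(ups)   # w_i / k for each of the k parent categories
        for cat in ups:
            weight[cat] += share
            if cat not in depth:
                depth[cat] = depth[node] + 1
                queue.append(cat)
    ranked = sorted((c for c in weight if c != subject), key=lambda c: (-weight[c], c))
    return ranked[:top_n]

def add_match(pm, subj_cats, link, val_cats, attribute, link_conf):
    """Record <cs, link, cv>: attribute for every category pair of a matched triple."""
    for cs in subj_cats:
        for cv in val_cats:
            rec = pm.setdefault((cs, link, cv), {}).setdefault(attribute, [0.0, 0])
            rec[0] += link_conf           # assumption: confidence is averaged over matches
            rec[1] += 1                   # evidence frequency e

def translate(pm, subj_cats, link, val_cats, tau_c, tau_e):
    """Pick the attribute with the largest aggregate evidence that passes both thresholds."""
    groups = defaultdict(lambda: [0.0, 0, 0])   # attribute -> [conf sum, records, evidence]
    for cs in subj_cats:
        for cv in val_cats:
            for attribute, (conf_sum, evidence) in pm.get((cs, link, cv), {}).items():
                group = groups[attribute]
                group[0] += conf_sum / evidence
                group[1] += 1
                group[2] += evidence
    candidates = [(ev, csum / n, attr) for attr, (csum, n, ev) in groups.items()
                  if csum / n >= tau_c and ev >= tau_e]
    if not candidates:
        return None
    ev, conf, attr = max(candidates)
    return attr, conf, ev

# Toy category graph (child -> parent categories); names are illustrative.
parents = {
    "Johann Sebastian Bach": ["German composers", "Baroque composers"],
    "German composers": ["German musicians", "Composers"],
    "Baroque composers": ["Composers"],
}
bach_cats = rank_categories("Johann Sebastian Bach", parents, max_levels=2, top_n=3)

pm = {}
add_match(pm, bach_cats, "was", ["Occupations in music"], "occupation", 0.9)
add_match(pm, bach_cats, "was", ["European nationalities"], "nationality", 0.8)

# Translate the link 'was' for a new subject that shares two of Bach's categories.
handel_cats = ["Composers", "Baroque composers"]
occupation_guess = translate(pm, handel_cats, "was", ["Occupations in music"], tau_c=0.5, tau_e=2)
nationality_guess = translate(pm, handel_cats, "was", ["European nationalities"], tau_c=0.5, tau_e=2)
no_guess = translate(pm, handel_cats, "was", ["Cities"], tau_c=0.5, tau_e=2)
```

In this toy graph ‘Composers’ is reachable through two paths and therefore collects the most weight, which is the behavior the flow-driven ranking is meant to produce. The confidence of a reported triple would then be the product of the initial triple’s confidence and the returned confidence.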

IV. C ONTEXT- AWARE S YNONYMS

Synonyms are terms describing the same concept, which

can be used interchangeably. According to this definition, no

2 See

5

[22] for details on the type-checking mechanism used by IBminer

UCLA Computer Science Department Technical Report #130013

synonym for αj (αi ) can be computed by Ni,j {Nj (Ni,j {Ni ).

Obviously this is not always a symmetric relationship (e.g.

‘born’ attribute is always a synonym for ‘birthdate’, but not the

other way around, since ‘born’ may also refer to ‘birthplace’

or ‘birthname’ as well). In other words having Ni and Ni,j

computed, we can resolve both synonyms and polynyms for

any given context (Cs and Cv ).

With the above intuition in mind, the goal in PAS is to

compute Ni and Ni,j . Next we explain how CS 3 constructs

PAS in one-pass algorithm which is essential for scaling up

our system. For each two records in PM such as ăcs , l, cv ą:

αi and ăcs , l, cv ą: αj respectively with evidence frequency

ei and ej (ei ď ej ), we add the following two records to PAS:

matter what context is used, the synonym for a term is fixed

(e.g. ‘birthdate’ and ‘date of birth’ are always synonyms).

However, the meaning or semantic of a term usually depends

on the context in which the term is used. The synonym

also varies as the context changes. For instance, in an article

describing IT companies, the synonym of the attribute name

‘wasCreatedOnDate’ most probably is ‘founded date’. In this

case, knowing that the attribute is used for the name of a

company is a contextual information that helps us find an

appropriate synonym for ‘wasCreatedOnDate’. However, if

this attribute is used for something else, such as an invention,

one can not use the same synonym for it.

Being aware of the context is even more useful for resolving

polynymous phrases, which are in fact much more prevalent

than exact synonyms in the knowledge bases. For example,

consider the entity/subject name ‘Johann Sebastian Bach’.

Due to its popularity, a general understanding is that the entity

is describing the famous German classical musician. However,

what if we know that for this specific entity the birthplace is in

‘Berlin’. This simple contextual information will lead us to the

conclusion that the entity is refereing to the painter who was

actually the grandson of the famous musician Johann Sebastian

Bach. A very similar issue exists for the attribute synonyms.

For instance considering attribute ‘born’, ‘birthdate’ can be

a synonym for ‘born’ when it is used with a value of type

‘date’; but if ‘born’ is used with values which indicate places,

then ‘birthplace’ should be considered as its synonym.

CS 3 constructs a structure called Potential Attribute Synonyms (PAS) to extract attribute synonym. In the generation

of PAS, CS 3 essentially counts the number of times each pair

of attributes are used between the same subject and value and

with the same corresponding semantic link in the TextGraphs.

The context in this case is considered to be the categorical

information for the subject and the value. These numbers

are then used to compute the probability that any given two

attributes are synonyms. Next subsection describes the process

of generating PAS. Later in Subsection IV-B, we will discuss

our approach to suggest entity synonyms and improve existing

ones.

ăcs , αi , cv ą: αj

ăcs , αj , cv ą: αi

Both records are inserted with the same evidence frequency

ei . Note that, if the records are already in the current PAS,

we increase their evidence frequency by ei . At the very same

time we also count the number of times each attribute is used

between a pair of categories. This is necessary for estimating

Ni and computing the final weights for the attribute synonyms.

That is for the case above, we add the following two PAS

records as well:

ăcs , αi , cv ą: ‘’ (with evidence ei )

ăcs , αj , cv ą: ‘’ (with evidence ej )

Improving PAS with Matching InfoBox Items: Potential attribute synonyms can also be derived from different knowledge bases that contain the same piece of knowledge under different attribute names. For instance, let ⟨J.S.Bach, birthdate, 1685⟩ and ⟨J.S.Bach, wasBornOnDate, 1685⟩ be two InfoBox triples indicating Bach’s birthdate. Since the subject and value parts of the two triples match, one may say that birthdate and wasBornOnDate are synonyms. To add these types of synonyms to the PAS structure, we follow the same idea explained earlier in this section. That is, consider two triples such as $\langle s, \alpha_i, v \rangle$ and $\langle s, \alpha_j, v \rangle$ in which $\alpha_i$ and $\alpha_j$ may be synonyms. Also, let $s$ and $v$ respectively belong to category sets $C_s = \{c_{s1}, c_{s2}, \ldots\}$ and $C_v = \{c_{v1}, c_{v2}, \ldots\}$. Thus, for all $c_s \in C_s$ and $c_v \in C_v$ we add the following triples to PAS:

A. Generating Attribute Synonyms

Intuitively, if two attributes (say ‘birthdate’ and ‘dateOfBirth’) are synonyms in a specific context, they should be

represented with the same (or very similar) semantic links in

the TextGraphs (e.g. with semantic links such as ‘was born

on’, ‘born on’, or ‘birthdate is’). In simpler words, we use

text as the witness for our attribute synonyms. Moreover, the

context, which is defined as the categories for the subjects

(and for the values), should be very similar for synonymous

attributes.

More formally, let attributes $\alpha_i$ and $\alpha_j$ be two matches for link $l$ in the initial triple $\langle s, l, v \rangle$. Let $N_{i,j}$ ($= N_{j,i}$) be the total number of times both $\alpha_i$ and $\alpha_j$ are the interpretation of the same link (in the initial triples) between category sets $C_s$ and $C_v$. Also, let $N_x$ be the total number of times $\alpha_x$ is used between $C_s$ and $C_v$. Thus the probability that $\alpha_i$ ($\alpha_j$) is a

$\langle c_s, \alpha_i, c_v \rangle : \alpha_j$ (with evidence 1)

$\langle c_s, \alpha_j, c_v \rangle : \alpha_i$ (with evidence 1)

Intuitively, this means that in the context (category pair) from $c_s$ to $c_v$, attributes $\alpha_i$ and $\alpha_j$ may be synonyms. Again, more examples for these categories and attributes increase the evidence, which in turn improves the quality of the final attribute synonyms. Much in the same way as when learning from initial triples, we count the number of times that an attribute is used between any possible pair of categories ($c_s$ and $c_v$) to estimate $N_i$.

Generating Final Attribute Synonyms: Once the PAS structure is built, it is easy to compute attribute synonyms as described earlier. Assume we want to find the best synonyms for attribute $\alpha_i$ in InfoBox triple $t = \langle s, \alpha_i, v \rangle$. Using PAS, for

all possible $\alpha_j$, all $c_s \in C_s$, and all $c_v \in C_v$, we aggregate the evidence frequency ($e$) of records such as $\langle c_s, \alpha_i, c_v \rangle : \alpha_j$ in PAS to compute $N_{i,j}$. Similarly, we compute $N_i$ by aggregating the evidence frequency ($e$) of all records of the form $\langle c_s, \alpha_i, c_v \rangle$ : ‘’. Finally, we accept attribute $\alpha_j$ as a synonym of $\alpha_i$ only if $N_{i,j}/N_i$ and $N_{i,j}$ are respectively above the predefined thresholds $\tau_{sc}$ and $\tau_{se}$. We study the effect of such thresholds in Section VI.
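The PAS bookkeeping and the final thresholding step can be illustrated with a minimal Python sketch. It is not the CS 3 implementation: it covers only the InfoBox-matching variant (evidence 1 per matching pair), and the function names, the in-memory dictionary layout, the `categories` lookup for both subjects and values, and the use of `>=` at the threshold boundary are all assumptions made for illustration.

```python
from collections import defaultdict


def build_pas(triples, categories):
    """Build a PAS-like table from InfoBox triples (s, attribute, v).

    pas[(cs, attr, cv)][other] holds the evidence that `other` is a synonym
    of `attr` in the context (cs, cv); the key "" holds the usage count of
    `attr` itself, which is later aggregated into N_i.
    `categories` is a hypothetical lookup: subject or value -> set of categories.
    """
    pas = defaultdict(lambda: defaultdict(int))
    attrs_by_pair = defaultdict(set)
    for s, attr, v in triples:
        attrs_by_pair[(s, v)].add(attr)
    for (s, v), attrs in attrs_by_pair.items():
        for cs in categories.get(s, ()):
            for cv in categories.get(v, ()):
                for a in attrs:
                    pas[(cs, a, cv)][""] += 1          # usage record
                    for b in attrs:
                        if b != a:
                            pas[(cs, a, cv)][b] += 1   # synonym record, evidence 1
    return pas


def attribute_synonyms(pas, attr, subj_cats, val_cats, tau_sc, tau_se):
    """Return attributes accepted as synonyms of `attr` in the given context."""
    n_i = 0
    n_ij = defaultdict(int)
    for cs in subj_cats:
        for cv in val_cats:
            for other, evidence in pas.get((cs, attr, cv), {}).items():
                if other == "":
                    n_i += evidence
                else:
                    n_ij[other] += evidence
    # Accept alpha_j when N_ij / N_i and N_ij both reach their thresholds
    # (boundary handling with >= is an assumption of this sketch).
    return {b for b, n in n_ij.items()
            if n_i > 0 and n / n_i >= tau_sc and n >= tau_se}


# Toy data; the category names are hypothetical.
example_triples = [
    ("J.S.Bach", "birthdate", "1685"),
    ("J.S.Bach", "wasBornOnDate", "1685"),
    ("J.S.Bach", "born", "Eisenach"),
    ("J.S.Bach", "birthplace", "Eisenach"),
]
example_categories = {
    "J.S.Bach": {"Cat:Composer"},
    "1685": {"Cat:Date"},
    "Eisenach": {"Cat:Place"},
}
example_pas = build_pas(example_triples, example_categories)
```

With this toy data, ‘born’ has ‘birthplace’ as a synonym only when the value category is a place, which mirrors the context-dependent behaviour described above.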

V. COMBINING KNOWLEDGE BASES

The IBminer and CS 3 systems allow us to integrate the

existing knowledge bases into one of superior quality and

coverage. In this section, we elaborate the steps of IKBstore

as introduced previously in Section II.

A. Data Gathering

We are currently in the process of integrating knowledge

bases listed in Table I, which include some domain specific knowledge bases (e.g. MusicBrainz [3], Geonames [2],

etc.), and some domain independent ones (e.g. DBpedia [7],

YaGo2 [18], etc.). Although most knowledge bases such as DBpedia, YaGo, and FreeBase already provide their knowledge in RDF, some of them may use other representations. Thus, for all knowledge bases, we convert their knowledge into ⟨Subject, Attribute, Value⟩ triples and store them in IKBstore.³ IKBstore is currently implemented over Apache Cassandra, which is designed for handling very large amounts of data. IKBstore recognizes three main types of information:

• InfoBox triples: These triples provide information on a known subject in the ⟨subject, attribute, value⟩ format. E.g. ⟨J.S. Bach, PlaceofBirth, Eisenach⟩, which indicates that the birthplace of the subject J.S. Bach is Eisenach. We refer to these triples as InfoBox triples.

• Subject/Category triples: They provide the categories that a subject belongs to, in the form subject/link/category, where link represents a taxonomical relation. E.g. ⟨J.S.Bach, is in, Cat:German Composers⟩, which indicates that the subject J.S.Bach belongs to the category Cat:German Composers.

• Category/Category triples: They represent taxonomical links between categories. E.g. ⟨Cat:German Composers, is in, Cat:German Musicians⟩, which indicates that the category Cat:German Composers is a sub-category of Cat:German Musicians.

Currently, we have converted all the knowledge bases listed in Table I into the above triple formats.
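As an illustration of the conversion step for a relational source (the case described in footnote 3), the following Python sketch turns table rows into ⟨subject, attribute, value⟩ triples. The `Triple` type, the `rows_to_triples` helper, the `key_column` parameter, and the `source` field are hypothetical names introduced here; the actual IKBstore schema on Cassandra is not described in this report.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    attribute: str
    value: str
    source: str  # name of the originating knowledge base (assumed field)


def rows_to_triples(rows, key_column, source):
    """Turn each non-key column of a relational row into one triple.

    `rows` is a list of dicts (column name -> value); `key_column` names the
    column holding the main entity of the row. Missing values are skipped.
    """
    triples = []
    for row in rows:
        subject = row[key_column]
        for column, value in row.items():
            if column != key_column and value is not None:
                triples.append(Triple(subject, column, str(value), source))
    return triples


example_rows = [{"name": "J.S. Bach", "PlaceofBirth": "Eisenach", "died": None}]
example_result = rows_to_triples(example_rows, key_column="name", source="MusicBrainz")
```

Each column name plays the role of the attribute, so a row with k non-empty columns besides the key yields k triples.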

IKBstore also preserves the provenance of each piece of

knowledge. In other words, for every fact in the integrated

knowledge base, we can track its data source. For the facts

derived from text, we record the article ID as the provenance. Provenance has several important applications such

as restricted search on specific sources, tracking erroneous

knowledge pieces to the emanating source, and better ranking

techniques based on reliability of the knowledge in each

source. In fact, we also annotate each fact with accuracy confidence and frequency values, based on the provenance of the

fact. To do so, each knowledge base is assigned a confidence

value. To compute the frequency and confidence of individual

facts, we respectively count the number of knowledge bases

B. Generating Entity Synonyms

There are several techniques to find entity synonyms.

Approaches based on the string similarity matching [24],

manually created synonym dictionaries [28], automatically

generated synonyms from click log [14], [15], and synonyms

generated by other data/text mining approaches [29], [23] are

only a few examples of such techniques. Although performing

very well on suggesting context-independent synonyms, they

do not explicitly consider the contextual information for suggesting more appropriate synonyms and resolving polynyms.

Much like context-aware attribute synonyms, in which the context of the subject and value used with an attribute plays a crucial role in the synonyms for that attribute, we can define context-aware entity synonyms. For each entity name, CS 3 uses the categorical information of the entity as well as all the InfoBox triples of the entity as the contextual information for that entity. Thus, to complement the existing entity synonym suggestion techniques, for any suggested pair of synonymous entities, we compute the entities’ context similarity to verify the correctness of the suggested synonym.

It is important to understand that this approach should be

used as a complementary technique over the existing ones for

two main reasons. First, context similarity of two entities does not always imply that they are synonyms, especially when many pieces of knowledge are missing for most entities in the current knowledge bases. Second, it is not feasible to compute the context similarity of all possible pairs of entities due to the large number of existing entities. In this work, we use the OntoMiner system [23] in addition to simple string matching techniques (e.g. exact string matching, having common words, and edit distance) to suggest initial possible synonyms.

Let ‘Johann Sebastian Bach’ and ‘J.S. Bach’ be two synonyms that two different knowledge bases use to denote the famous musician. Simple string matching would suggest these two entities as synonyms. We then compare their contextual information and find that they have many common attributes with similar values (e.g. the same values for the attributes occupation, birthdate, birthplace, etc.). They also both belong to many common categories (e.g. Cat:German musician, Cat:Composer, Cat:people, etc.). Thus we suggest them as entity synonyms with high confidence. However, consider the entities ‘Johann Sebastian Bach (painter)’ and ‘J.S. Bach’. Although the initial synonym suggestion technique may suggest them as synonyms, since their contextual information is quite different (i.e. they have different values for the common attributes occupation, birthplace, birthdate, deathplace, etc.), our system does not accept them as synonyms.
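The report does not give the exact context-similarity function, so the following Python sketch is only one plausible instantiation, stated under explicit assumptions: the context of an entity is a pair (set of categories, dict of InfoBox attribute to value), category overlap is measured with a Jaccard ratio, attribute agreement is the fraction of shared attributes with equal values, and the two scores are averaged with equal weight. The function name and the sample attribute values are hypothetical.

```python
def context_similarity(entity_a, entity_b):
    """Score in [0, 1] comparing two entity contexts.

    Each entity is (categories: set[str], infobox: dict[str, str]).
    Equal weighting of the two components is an assumption of this sketch.
    """
    cats_a, box_a = entity_a
    cats_b, box_b = entity_b
    union = cats_a | cats_b
    cat_sim = len(cats_a & cats_b) / len(union) if union else 0.0
    shared = box_a.keys() & box_b.keys()
    val_sim = (sum(box_a[k] == box_b[k] for k in shared) / len(shared)
               if shared else 0.0)
    return (cat_sim + val_sim) / 2


# Illustrative contexts only; values are placeholders, not data from the report.
musician = ({"Cat:German musician", "Cat:Composer", "Cat:people"},
            {"occupation": "composer", "birthplace": "Eisenach"})
js_bach = ({"Cat:German musician", "Cat:Composer", "Cat:people"},
           {"occupation": "composer", "birthplace": "Eisenach"})
painter = ({"Cat:Painter", "Cat:people"},
           {"occupation": "painter", "birthplace": "Berlin"})

same_score = context_similarity(musician, js_bach)
different_score = context_similarity(painter, js_bach)
```

A candidate pair proposed by string matching would then be kept only if its score exceeds a chosen cutoff; the cutoff itself is a tuning parameter not specified here.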

³ For instance, MusicBrainz uses a relational representation, for which we consider every column name, say $\alpha$, as an attribute connecting the main entity ($s$) of a row in the table to the value ($v$) of that row for column $\alpha$, and create the triple $\langle s, \alpha, v \rangle$.

including the fact and combine the confidence value of them

as explained in [22].

B. Initial Knowledge Integration

The aim of this phase is to find the initial interlinks between

subjects, attributes, and categories from the various knowledge

sources to eliminate duplication, align attributes, and reduce

inconsistency using only the existing interlinks. At the end

of this phase, we have an initial knowledge base which is

not quite ready for structured queries, but provides a better

starting point for IBminer to generate more structured data

and for CS 3 to resolve attribute and entity synonyms.

• Interlinking Subjects: Fortunately, many subjects in different knowledge bases have the same name. Moreover, DBpedia is interlinked with many existing knowledge bases, such as YaGo2 and FreeBase, which can serve as a source of subject interlinks. For the knowledge bases that do not provide such interlinks (e.g. NELL), in addition to exact matching, we parse the structured part of the knowledge base to derive candidate interlinks for existing entities, such as redirect and sameAs links in Wikipedia.

coverage of existing knowledge bases. These new triples

are then added to IKBstore. For each generated triple,

we also update the confidence and evidence frequency

in IKBstore. That is, if the triple is already in IKBstore, we only update its confidence and evidence frequency.

• Realigning attribute names: Next we employ CS 3 to learn PAS and generate synonyms for attribute names, and expand the initial knowledge base with more common and standard attribute names.

• Matching entity synonyms: This step merges entities based on the entity synonyms suggested by CS 3. For suggested synonymous entities such as $s_1$ and $s_2$, we aggregate their triples and use one common entity name, say $s_1$. The other subject ($s_2$) is considered a possible alias for $s_1$, which can be represented by the RDF triple $\langle s_1, \text{alias}, s_2 \rangle$.

• Integrating categorical information: Since we have merged subjects based on entity synonyms, the similarity scores of the categories may change, and thus we need to rerun the category integration described in Subsection V-B.

Currently the knowledge base integration is partially completed for DBpedia and YaGo2 as described in Section VI.
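The entity-merging step can be sketched in a few lines of Python. This is a simplified illustration, not the IKBstore procedure: triples are plain (subject, attribute, value) tuples held in a set, the function name `merge_entities` is hypothetical, and duplicate triples are simply collapsed rather than having their confidence and evidence frequency updated.

```python
def merge_entities(triples, keep, alias):
    """Rewrite every triple whose subject is `alias` to use `keep`,
    and record the alias relation as an additional triple."""
    merged = {(keep if s == alias else s, a, v) for s, a, v in triples}
    merged.add((keep, "alias", alias))
    return merged


example_kb = {
    ("Johann Sebastian Bach", "birthplace", "Eisenach"),
    ("J.S. Bach", "birthplace", "Eisenach"),
    ("J.S. Bach", "occupation", "Composer"),
}
merged_kb = merge_entities(example_kb, keep="Johann Sebastian Bach", alias="J.S. Bach")
```

After the merge, all knowledge about the musician is reachable under one subject name, while the alias triple preserves the other name for search.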

• Interlinking Attributes: As mentioned previously, attribute interlinks are completely ignored in current studies. In this phase, we only use exact matching for interlinking attributes.

• Interlinking Categories: In addition to exact matching, we compute the similarity of the categories in different knowledge bases based on their common instances. Consider two categories $c_1$ and $c_2$, and let $S(c)$ be the set of subjects in category $c$. The similarity function for category interlinks is defined as $\mathrm{Sim}(c_1, c_2) = |S(c_1) \cap S(c_2)| / |S(c_1) \cup S(c_2)|$. If $\mathrm{Sim}(c_1, c_2)$ is greater than a certain threshold, we consider $c_1$ and $c_2$ as aliases of each other, which simply means that if the instances of two categories are highly overlapping, they might represent the same category.

After retrieving these interlinks, we merge similar entities,

categories, and triples based on the retrieved interlinks. The

provenance information is generated and stored along with the

triples.
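The category similarity used for interlinking is the Jaccard ratio of the two instance sets, which can be sketched in Python as follows. The function names, the category identifiers, and the threshold value 0.7 are illustrative assumptions; the report does not state the threshold it uses.

```python
def category_similarity(subjects_1, subjects_2):
    """Sim(c1, c2) = |S(c1) ∩ S(c2)| / |S(c1) ∪ S(c2)|."""
    union = subjects_1 | subjects_2
    return len(subjects_1 & subjects_2) / len(union) if union else 0.0


def category_aliases(instances, threshold):
    """Return pairs of categories whose similarity is greater than `threshold`.

    `instances` maps a category name to its set of subjects.
    """
    names = sorted(instances)
    return [(a, b)
            for i, a in enumerate(names)
            for b in names[i + 1:]
            if category_similarity(instances[a], instances[b]) > threshold]


example_instances = {
    "dbp:German_composers": {"Bach", "Beethoven", "Brahms"},
    "yago:GermanComposers": {"Bach", "Beethoven", "Brahms", "Wagner"},
    "dbp:Painters": {"Bach (painter)"},
}
example_aliases = category_aliases(example_instances, threshold=0.7)
```

The all-pairs loop is quadratic in the number of categories; it is meant to show the definition, not an efficient strategy for a taxonomy the size of Wikipedia's.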

VI. EXPERIMENTAL RESULTS

In this section, we test and evaluate different steps of

creating IKBstore in terms of precision and recall. To this end,

we create an initial knowledge base using subjects listed in

Wikipedia for three specific domains (Musicians, Actors, and

Institutes). For these subjects, we add their related structured data from DBpedia and YaGo2 to our initial knowledge base. Then, to learn the PM and PAS structures, we use all of the Wikipedia long abstracts provided by DBpedia for the mentioned subjects. We should state that IBminer only uses the free text and thus can take advantage of any other source of textual information.

All the experiments are performed on a single machine with 16 cores at 2.27GHz and 16GB of main memory, running Ubuntu 12. On average, SemScape spends 3.07 seconds on generating initial semantic links for each sentence on a single CPU. That is, using 16 cores, we were able to generate initial semantic links for 5.2 sentences per second.

C. Final Knowledge Integration

Once the initial knowledge base is ready, we first employ

IBminer to extract more structured data from accompanying

text and then utilize CS 3 to resolve synonymous information

and create the final knowledge base. More specifically, we

perform the following steps in order to complete and integrate

the final knowledge base:

• Improving knowledge base coverage: Web documents contain numerous facts that are ignored by existing knowledge bases. Thus, we first enrich the knowledge base by employing our knowledge extraction system IBminer. As described in Section III, IBminer learns PM from free text and the initial knowledge base to derive more triples, which will greatly improve the

A. Initial Knowledge Base

To evaluate our system, we have created a simple knowledge

base which consists of three data sets for domains Musicians,

Actors, and Institutes (e.g. universities and colleges) from the

subjects listed in Wikipedia. These data sets do not share any

subject, and in total they cover around 7.9% of Wikipedia

subjects. To build each data set, we start from a general

category (e.g. Category:Musicians for Musicians data set) and

find all the Wikipedia subjects in this category or any of its

descendant categories up to four levels. Table II provides more

details on each data set.

| Data set Name | Subjects | InfoBox Triples | Subjects with Abstract | Subjects with InfoBox | Sentences per Abstract |
|---|---|---|---|---|---|
| Musicians | 65835 | 687184 | 65476 | 52339 | 8.4 |
| Actors | 52710 | 670296 | 52594 | 50212 | 6.2 |
| Institutes | 86163 | 952283 | 84690 | 54861 | 5.9 |

text (the long abstract in our case). To obtain a better estimate of recall, we only use those InfoBox triples which match at least one of our initial triples. In this way, we base the estimate on InfoBox triples which are most likely mentioned in the text. Nevertheless, this automatic technique provides an acceptable recall value which is enough for comparison and tuning purposes.
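The recall estimate described here can be sketched in Python as below. The sketch assumes that an InfoBox triple "matches" an initial triple when both share the same subject and value (the report does not spell out the matching rule), and the function name and sample triples are hypothetical.

```python
def estimate_recall(generated, infobox, initial):
    """Fraction of eligible InfoBox triples that were also generated.

    An InfoBox triple (s, attribute, v) is eligible only if some initial
    triple (s, link, v) shares its subject and value (assumed matching rule).
    """
    initial_pairs = {(s, v) for s, _, v in initial}
    eligible = {t for t in infobox if (t[0], t[2]) in initial_pairs}
    if not eligible:
        return 0.0
    return len(eligible & set(generated)) / len(eligible)


example_infobox = {
    ("J.S. Bach", "birthplace", "Eisenach"),
    ("J.S. Bach", "birthdate", "1685"),
    ("J.S. Bach", "imagesize", "200px"),  # not mentioned in text, so not eligible
}
example_initial = [
    ("J.S. Bach", "was born in", "Eisenach"),
    ("J.S. Bach", "was born in", "1685"),
]
example_generated = {("J.S. Bach", "birthplace", "Eisenach")}
example_recall = estimate_recall(example_generated, example_infobox, example_initial)
```

Restricting the denominator to eligible triples keeps InfoBox items that the text never mentions from lowering the estimate.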

TABLE II. DESCRIPTION OF THE DATA SETS USED IN OUR EXPERIMENTS.

Best Matches: To ease our experiments, we combine our two thresholds ($\tau_e$ and $\tau_c$) by multiplying them together into a single threshold called $\tau$ ($= \tau_e \times \tau_c$). Part a) of Figure 4 depicts the precision/recall diagram for different values of $\tau$ on the Musicians data set. As can be seen, for the first 15% of coverage, IBminer generates only correct information. For these cases $\tau$ is very high. According to this diagram, to reach 97% precision, which is higher than DBpedia’s precision, one should set $\tau$ to 6,300 ($\approx 0.12|T_m|$). For this case, as shown in Part c) of the figure, IBminer generates around 96.7K triples with 33.6% recall.

In our initial knowledge base, we currently use InfoBox

triples from DBpedia and YaGo2 for the mentioned subjects.

Using multiple knowledge bases improves the coverage of

our initial knowledge base. However in many cases they use

different terminologies for representing the attribute and entity

names. Although our initial knowledge base contains three

data sets and we have performed all four tasks on these data

sets, we only report the evaluation results for the Musicians

data set here. This is mainly due to the time-consuming

process of manually grading the results.

To evaluate the quality of the existing knowledge base, we randomly selected 50 subjects (which have both an abstract and an InfoBox) from the Musicians data set. For each subject, we

compared the information provided in its abstract and in its

InfoBox. For these 50 subjects, 1155 unique InfoBox triples

(and 92 duplicate triples) are listed in DBpedia. Interestingly,

only 305 of these triples are inferable from the abstract text

(only 26.4% of the InfoBoxes are covered by the accompanying text.) More importantly, we have found 47 wrong

triples (4.0%) and 146 (12.6%) triples which are not useful in

terms of main knowledge (e.g. triples specifying upload file

name, image name, format, file size, or alignment). Thus an

optimistic estimation is that at most 76% of facts provided

in DBpedia are useful for semantic-related applications. In this section, we refer to this portion of the knowledge base as the useful part. Moreover, the accuracy of DBpedia in this case is less than 96%.

Secondary Matches: For each best match found in the previous step, say $t = \langle s, \alpha_i, v \rangle$, we generate attribute synonyms for $\alpha_i$ using PAS. If any of the reported attribute synonyms is not in the list of possible matches for $t$ (see Subsection III-C), we ignore the synonym. Considering $\tau_e = 0.12|T_m|$, the precision and recall of the remaining synonyms are computed as in the best match case and depicted in part b) of Figure 4 (where the potential attribute synonym evidence count ($\tau_{se}$) decreases from right to left). As the figure indicates, for instance, to have 97% accuracy one needs to set $\tau_{se}$ to 12,000 ($\approx 0.23|T_m|$). We should add that although the synonym results for this case indicate only 3.6% coverage, the number of correct new triples that they generate is 53.6K, which is quite acceptable.

Part c) of Figure 4 summarizes the number of best match triples, the total number of generated items (both Best and Secondary Matches), and the total number of correct items for some possible values of $\tau$. For instance, for $\tau = 0.4|T_m|$, we reach up to 97% accuracy while the total number of generated results is more than 150K (96.7K + 53.6K). This indicates a 28.7% improvement in the coverage of the useful part of our initial knowledge base while the accuracy is above 97%. This is actually very impressive considering the fact that we only use the abstract of each page.

B. Completing Knowledge by IBminer

Using the Musicians data set and considering 40 categories ($N_C = 40$) and 4 levels ($L = 4$), we trained the Potential Match (PM) structure using the IBminer system. The total number of initial triples for this chunk of the data set is $|T_n| = 4.72$M, while 52.9K of them match existing InfoBoxes ($|T_m| = 52.9$K). Using PM and the initial triples, we generate the final InfoBox triples without setting any confidence or evidence frequency threshold (i.e. $\tau_e = 0$ and $\tau_c = 0.0$). Later in this section, we also analyze the effect of the choice of $N_C$ and $L$ on the results.

To estimate the accuracy of the final triples, we randomly

select 20K of the generated triples and carefully grade them

by matching against their abstracts. Many similar systems such

as [9], [17], [31] have also used manual grading due to the

lack of a good benchmark. As for the recall, we investigate

existing InfoBoxes and compute what portion of them is also

generated by IBminer. This gives only a pessimistic estimation

of the recall ability, since we do not know what portion of

the InfoBoxes in Wikipedia are covered or mentioned in the

C. The Impact of Category Selection

To understand the impact of the category selection technique, we repeat the previously mentioned experiment on the Musicians data set for different levels ($L$) and category numbers ($N_C$). Here, we only compare the results based on the recall estimation. First, we fix $N_C$ at 40 and change $L$ from 1 to 4. Part a) of Figure 5 depicts the estimated recall (only for the best matches) while PM’s frequency threshold ($\tau_e$) increases. Not surprisingly, as $L$ increases, the recall improves significantly, since more general categories are considered in PM construction. Next, we fix $L$ at 4 and change $N_C$ from 10 to 50; using more categories also improves the recall, as depicted in Part b) of the figure. The results

Fig. 4. Results for Musicians data set: a) Precision/Recall diagram for best matches, b) Precision/Recall diagram for attribute synonyms, and c) the size of

generated results for the test data set.

indicate that even with 10 categories, we may reach good recall relative to the other cases. This mainly demonstrates the effectiveness of our category selection technique. However, to have high recall for lower values of $N_C$, one might need to use very low values for $\tau_e$, which will result in lower accuracy.

In Part c) of Figure 5, we compare the time performance of

all the above cases. This diagram shows the average time spent

on generating final InfoBoxes for each abstract. As we increase the number of levels, the delay increases exponentially, since IBminer performs more database accesses to generate the category structure. However, the delay increases only linearly and marginally with the number of categories. This is mainly due to the fact that increasing the number of categories does not require any extra database access. The delay for generating PM follows the same trend.

VII. RELATED WORK

There have been several attempts to generate large-scale knowledge bases [4], [10], [27], [8], [7], [2], [3]. However, none of them provides an automatic technique for integrating existing knowledge bases. There are only a few recent efforts

on integrating knowledge bases in both domain-specific and

general topics. GeoWordNet [16] is an integrated knowledge

base of GeoNames [2], WordNet [28], and MultiWordNet [26].

However, the integration is based on a manually created concept mapping, which is time-consuming and error-prone for large scale knowledge base integration. In [18], Yago2

is integrated with GeoNames and structured information in

Wikipedia. The category (class) mapping in Yago2 is performed by simple string matching which is not reliable for

large taxonomies such as the one in Wikipedia. Recently, Wu

et al. proposed Probase [31] which aims to generate a general

taxonomy from web documents. Probase merged the concepts

and instances into a large general taxonomy. In taxonomy

integration, the concepts with similar context properties (e.g.

derived from the same sentence or with similar child nodes)

are merged.

Another line of related studies is synonym suggestion systems, which mostly focus on entity synonyms and ignore attribute synonyms. One of the early works in this area is WordNet [28], which provides manually defined synonyms for general words. Words and very general terms in WordNet are grouped into sets of synonyms called Synsets. Although WordNet

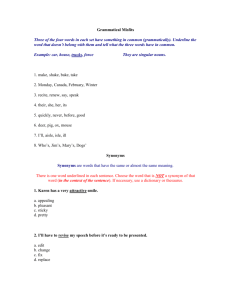

D. Completing Knowledge by Attribute Synonyms

In order to compute the precision and recall for the attribute synonyms generated by CS 3, we use the Musicians data set and construct the PAS structure as described in Section IV. Using PAS, we generated possible synonyms for 10,000 InfoBox items in our initial knowledge base. Note that these synonyms are for already existing InfoBoxes, which differentiates them from the secondary matches evaluated in Subsection VI-B. This generated more than 14,900 synonym items. Among the 10,000 InfoBox items, 1263 attribute synonyms were listed, and our technique generated 994 of them. We used these matches to estimate the recall of our technique for different frequency thresholds ($\tau_{cs}$), as shown in Figure 6. As for the precision estimation, we manually graded the generated synonyms.

As can be seen in Figure 6, CS 3 is able to find more than 74% of the possible synonyms with more than 92% accuracy. In fact, this is a very big step in improving structured query results, since it increases the coverage of the IKBstore by at least 88.3%. This in some sense improves the consistency of the knowledge base by providing more synonymous InfoBox triples. Together with the improvement we achieved by IBminer, we can state that our IKBstore doubles the size of the current knowledge bases while preserving (if not improving) their precision and improving their consistency.

Fig. 6. The Precision/Recall diagram for the attribute synonyms generated

for existing InfoBoxes in the Musicians data set.

Fig. 5. a) The impact of increasing level number on recall, b) The impact of increasing number of categories on recall, and c) InfoBox generation delay per

abstract.

contains 117,000 high quality Synsets, it is still limited to general words and misses most multi-word terms; as a result, it is not effective for use in large scale knowledge base integration systems. Another approach to extracting synonyms is based on approximate string matching (fuzzy string searching) [24]. This technique is effective in detecting certain kinds of format-related synonyms, such as normalization and misspelling. However, it cannot discover semantic synonyms.

To overcome the shortcomings of the aforementioned approaches, more automatic approaches for generating entity

synonyms from web scale documents were presented in recent

years. In [12], [13], Chaudhuri et al. proposed an approach

to generate a set of synonyms which are substrings of given

entities. This approach exploits the correlation between the

substrings and the entities in the web documents to identify

the candidate synonyms. In [14], [15], Cheng et al. used query click logs in web search engines to derive synonyms. First, according to the click logs, every entity is associated with a set of relevant web pages. Then, the queries which result in certain web pages are considered as possible synonyms for the associated entities of those pages. The synonyms are then refined by evaluating the click patterns of queries. Later, in [11], Chakrabarti et al. refined this approach by defining similarity functions which address the click-log sparsity problem and verify that the candidate synonym string is of the same class as the entity. In [29], the author proposed a machine learning algorithm, called PMI-IR, to mine synonyms from web documents. In PMI-IR, if two sets of documents are correlated, the words associated with the two sets are taken as candidate synonyms.

VIII. CONCLUSION

In this paper, we propose the IKBstore technique to integrate currently existing knowledge bases into a more complete and consistent knowledge base. IKBstore first merges existing knowledge bases using the interlinks they provide. Then, it employs the text-based knowledge discovery system IBminer to improve the coverage of the initially integrated knowledge bases by extracting knowledge from free text. Finally, IKBstore utilizes CS 3 to extract context-aware attribute and entity synonyms and uses them to reconcile the different terminologies used by different knowledge bases. In this way, IKBstore creates an integrated knowledge base which outperforms existing knowledge bases in terms of coverage, accuracy, and consistency. Our preliminary evaluation also supports this claim, as it shows that IKBstore doubles the coverage of the existing knowledge base while slightly improving accuracy and resolving many inconsistencies. The accuracy of the generated knowledge at this point is above 97%.

As for future work, we are currently expanding IKBstore and our goal is to cover all the subjects in Wikipedia. We are also working on improving the integration of the categorical and taxonomical information available in different knowledge bases.

REFERENCES

[1] Dbpedia live. http://live.dbpedia.org/.

[2] Geonames. http://www.geonames.org.

[3] Musicbrainz. http://musicbrainz.org.

[4] Opencyc. http://www.cyc.com/platform/opencyc.

[5] W. Abramowicz and R. Tolksdorf, editors. Business Information Systems, 13th International Conference, BIS 2010, Berlin, Germany, May 3-5, 2010. Proceedings, volume 47 of Lecture Notes in Business Information Processing. Springer, 2010.

[6] M. Atzori and C. Zaniolo. Swipe: searching wikipedia by example. In WWW (Companion Volume), pages 309–312, 2012.

[7] C. Bizer, J. Lehmann, G. Kobilarov, S. Auer, C. Becker, R. Cyganiak, and S. Hellmann. Dbpedia - a crystallization point for the web of data. J. Web Sem., 7(3):154–165, 2009.

[8] K. D. Bollacker, C. Evans, P. Paritosh, T. Sturge, and J. Taylor. Freebase: a collaboratively created graph database for structuring human knowledge. In SIGMOD, 2008.

[9] A. Carlson, J. Betteridge, B. Kisiel, B. Settles, E. H. Jr., and T. Mitchell. Toward an architecture for never-ending language learning. In AAAI, pages 1306–1313, 2010.

[10] A. Carlson, J. Betteridge, B. Kisiel, B. Settles, E. R. H. Jr., and T. M. Mitchell. Toward an architecture for never-ending language learning. In AAAI, 2010.

[11] K. Chakrabarti, S. Chaudhuri, T. Cheng, and D. Xin. A framework for robust discovery of entity synonyms. In Proceedings of the 18th ACM SIGKDD international conference on Knowledge discovery and data mining, KDD ’12, pages 1384–1392, New York, NY, USA, 2012. ACM.

[12] S. Chaudhuri, V. Ganti, and D. Xin. Exploiting web search to generate synonyms for entities. In Proceedings of the 18th international conference on World wide web, WWW ’09, pages 151–160, New York, NY, USA, 2009. ACM.

[13] S. Chaudhuri, V. Ganti, and D. Xin. Mining document collections to facilitate accurate approximate entity matching. Proc. VLDB Endow., 2(1):395–406, Aug. 2009.

[14] T. Cheng, H. W. Lauw, and S. Paparizos. Fuzzy matching of web queries to structured data. In ICDE, pages 713–716, 2010.

[15] T. Cheng, H. W. Lauw, and S. Paparizos. Entity synonyms

for structured web search. IEEE Trans. Knowl. Data Eng.,

24(10):1862–1875, 2012.

[16] F. Giunchiglia, V. Maltese, F. Farazi, and B. Dutta. Geowordnet:

A resource for geo-spatial applications. In ESWC (1), pages

121–136, 2010.

[17] J. Hoffart, F. M. Suchanek, K. Berberich, E. Lewis-Kelham,

G. de Melo, and G. Weikum. Yago2: exploring and querying

world knowledge in time, space, context, and many languages.

In WWW, pages 229–232, 2011.

[18] J. Hoffart, F. M. Suchanek, K. Berberich, and G. Weikum.

Yago2: A spatially and temporally enhanced knowledge base

from wikipedia. Artif. Intell., 194:28–61, 2013.

[19] D. Klein and C. D. Manning. Fast exact inference with a factored model for natural language parsing. In Advances in Neural Information Processing Systems 15 (NIPS), pages 3–10. MIT Press, 2003.

[20] H. Mousavi, S. Gao, and C. Zaniolo. Ibminer: A text mining

tool for constructing and populating infobox databases and

knowledge bases. VLDB (demo track), 2013.

[21] H. Mousavi, D. Kerr, and M. Iseli. A new framework for textual information mining over parse trees. CRESST Report 805. University of California, Los Angeles, 2011.

[22] H. Mousavi, D. Kerr, M. Iseli, and C. Zaniolo. Deducing infoboxes from unstructured text in wikipedia pages. CSD Technical Report #130001, UCLA, 2013.

[23] H. Mousavi, D. Kerr, M. Iseli, and C. Zaniolo. Ontoharvester: An unsupervised ontology generator from free text. CSD Technical Report #130003, UCLA, 2013.

[24] G. Navarro. A guided tour to approximate string matching.

ACM Comput. Surv., 33(1):31–88, Mar. 2001.

[25] P. Pantel, E. Crestan, A. Borkovsky, A.-M. Popescu, and V. Vyas. Web-scale distributional similarity and entity set expansion. In Proceedings of the 2009 Conference on Empirical Methods in Natural Language Processing: Volume 2, EMNLP ’09, pages 938–947, Stroudsburg, PA, USA, 2009. Association for Computational Linguistics.

[26] E. Pianta, L. Bentivogli, and C. Girardi. Multiwordnet: developing an aligned multilingual database. In Proceedings of the

First International Conference on Global WordNet, 2002.

[27] P. Singh, T. Lin, E. T. Mueller, G. Lim, T. Perkins, and W. L.

Zhu. Open mind common sense: Knowledge acquisition from

the general public. In Confederated International Conferences

DOA, CoopIS and ODBASE, London, UK, 2002.

[28] M. M. Stark and R. F. Riesenfeld. Wordnet: An electronic

lexical database. In Proceedings of 11th Eurographics Workshop

on Rendering. MIT Press, 1998.

[29] P. D. Turney. Mining the web for synonyms: PMI-IR versus LSA on TOEFL. In Proceedings of the 12th European Conference on Machine Learning, ECML ’01, pages 491–502, London, UK, 2001. Springer-Verlag.

[30] F. Wu and D. S. Weld. Open information extraction using

wikipedia. In ACL, pages 118–127, 2010.

[31] W. Wu, H. Li, H. Wang, and K. Q. Zhu. Probase: a probabilistic

taxonomy for text understanding. In SIGMOD Conference,

pages 481–492, 2012.
