Basic Statistics Formula Sheet

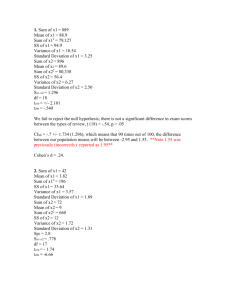

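Steven W. Nydick

May 25, 2012

This document is only intended to review basic concepts/formulas from an introduction to statistics course. Only mean-based procedures are reviewed, and emphasis is placed on a simple understanding of when to use each method. After reviewing and understanding this document, one should then learn about more complex procedures and methods in statistics. However, keep in mind the assumptions behind certain procedures, and know that statistical procedures are sometimes robust to data that do not exactly match the assumptions.

Descriptive Statistics

Elementary Descriptives (Univariate & Bivariate)

| Name | Population Symbol | Sample Symbol | Sample Calculation | Main Problems | Alternatives |
| --- | --- | --- | --- | --- | --- |
| Mean | $\mu$ | $\bar{x}$ | $\bar{x} = \frac{\sum x}{N}$ | Sensitive to outliers | Median, Mode |
| Variance | $\sigma_x^2$ | $s_x^2$ | $s_x^2 = \frac{\sum (x - \bar{x})^2}{N - 1}$ | Sensitive to outliers | MAD, IQR |
| Standard Dev | $\sigma_x$ | $s_x$ | $s_x = \sqrt{s_x^2}$ | Biased | MAD |
| Covariance | $\sigma_{xy}$ | $s_{xy}$ | $s_{xy} = \frac{\sum (x - \bar{x})(y - \bar{y})}{N - 1}$ | Outliers, uninterpretable units | Correlation |
| Correlation | $\rho_{xy}$ | $r_{xy}$ | $r_{xy} = \frac{s_{xy}}{s_x s_y}$; $r_{xy} = \frac{\sum (z_x z_y)}{N - 1}$ | Range restriction, outliers, nonlinearity | |
| z-score | $z_x$ | $z_x$ | $z_x = \frac{x - \bar{x}}{s_x}$; $\bar{z} = 0$; $s_z^2 = 1$ | Doesn't make distribution normal | |

Simple Linear Regression (Usually Quantitative IV; Quantitative DV)

| Equation | Part | Population Symbol | Sample Symbol | Sample Calculation | Meaning |
| --- | --- | --- | --- | --- | --- |
| Regular | Equation | $y_i = \alpha + \beta x_i + \epsilon_i$ | $y_i = a + b x_i + e_i$ | $\hat{y}_i = a + b x_i$ | Predict $y$ from $x$ |
| Regular | Slope | $\beta$ | $b$ | $b = \frac{s_{xy}}{s_x^2} = \frac{\sum (x - \bar{x})(y - \bar{y})}{\sum (x - \bar{x})^2}$ | Predicted change in $y$ for unit change in $x$ |
| Regular | Intercept | $\alpha$ | $a$ | $a = \bar{y} - b\bar{x}$ | Predicted $y$ for $x = 0$ |
| Standardized | Equation | $z_{y_i} = \rho_{xy} z_{x_i} + \epsilon_i$ | $z_{y_i} = r_{xy} z_{x_i} + e_i$ | $\hat{z}_{y_i} = r_{xy} z_{x_i}$ | Predict $z_y$ from $z_x$ |
| Standardized | Slope | $\rho_{xy}$ | $r_{xy}$ | $r_{xy} = \frac{s_{xy}}{s_x s_y} = b \frac{s_x}{s_y}$ | Predicted change in $z_y$ for unit change in $z_x$ |
| Standardized | Intercept | None | None | $0$ | Predicted $z_y$ for $z_x = 0$ is $0$ |
| | Effect Size | $\mathrm{P}^2$ | $R^2$ | $r_{\hat{y}y}^2 = r_{xy}^2$ | Variance in $y$ accounted for by regression line |

Inferential Statistics

t-tests (Categorical IV (1 or 2 Groups); Quantitative DV)

| Test | Statistic | Parameter | Standard Deviation | Standard Error | df | t-obt |
| --- | --- | --- | --- | --- | --- | --- |
| One Sample | $\bar{x}$ | $\mu$ | $s_x = \sqrt{\frac{\sum (x - \bar{x})^2}{N - 1}}$ | $\frac{s_x}{\sqrt{N}}$ | $N - 1$ | $t_{obt} = \frac{\bar{x} - \mu_0}{s_x / \sqrt{N}}$ |
| Paired Samples | $\bar{D}$ | $\mu_D$ | $s_D = \sqrt{\frac{\sum (D - \bar{D})^2}{N_D - 1}}$ | $\frac{s_D}{\sqrt{N_D}}$ | $N_D - 1$ | $t_{obt} = \frac{\bar{D} - \mu_{D0}}{s_D / \sqrt{N_D}}$ |
| Independent Samples | $\bar{x}_1 - \bar{x}_2$ | $\mu_1 - \mu_2$ | $s_p = \sqrt{\frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}}$ | $s_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}$ | $n_1 + n_2 - 2$ | $t_{obt} = \frac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)_0}{s_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}}$ |
| Correlation | $r$ | $\rho = 0$ | NA | NA | $N - 2$ | $t_{obt} = \frac{r}{\sqrt{\frac{1 - r^2}{N - 2}}}$ |
| Regression (FYI) | $a$ & $b$ | $\alpha$ & $\beta$ | $\hat{\sigma}_e = \sqrt{\frac{\sum (y - \hat{y})^2}{N - 2}}$ | $s_a$ & $s_b$ | $N - 2$ | $t_{obt} = \frac{a - \alpha_0}{s_a}$ & $t_{obt} = \frac{b - \beta_0}{s_b}$ |

t-tests Hypotheses/Rejection

| Question | One Sample | Paired Sample | Independent Sample | When to Reject |
| --- | --- | --- | --- | --- |
| Greater Than? | $H_0: \mu \le \#$; $H_1: \mu > \#$ | $H_0: \mu_D \le \#$; $H_1: \mu_D > \#$ | $H_0: \mu_1 - \mu_2 \le \#$; $H_1: \mu_1 - \mu_2 > \#$ | Extreme positive numbers; $t_{obt} > t_{crit}$ (one-tailed) |
| Less Than? | $H_0: \mu \ge \#$; $H_1: \mu < \#$ | $H_0: \mu_D \ge \#$; $H_1: \mu_D < \#$ | $H_0: \mu_1 - \mu_2 \ge \#$; $H_1: \mu_1 - \mu_2 < \#$ | Extreme negative numbers; $t_{obt} < -t_{crit}$ (one-tailed) |
| Not Equal To? | $H_0: \mu = \#$; $H_1: \mu \ne \#$ | $H_0: \mu_D = \#$; $H_1: \mu_D \ne \#$ | $H_0: \mu_1 - \mu_2 = \#$; $H_1: \mu_1 - \mu_2 \ne \#$ | Extreme numbers (negative and positive); $\lvert t_{obt} \rvert > \lvert t_{crit} \rvert$ (two-tailed) |

t-tests Miscellaneous

| Test | Confidence Interval: $\gamma\% = (1 - \alpha)\%$ | Unstandardized Effect Size | Standardized Effect Size |
| --- | --- | --- | --- |
| One Sample | $\bar{x} \pm t_{N-1;\,crit(2\text{-tailed})} \times \frac{s_x}{\sqrt{N}}$ | $\bar{x} - \mu_0$ | $\hat{d} = \frac{\bar{x} - \mu_0}{s_x}$ |
| Paired Samples | $\bar{D} \pm t_{N_D-1;\,crit(2\text{-tailed})} \times \frac{s_D}{\sqrt{N_D}}$ | $\bar{D}$ | $\hat{d} = \frac{\bar{D}}{s_D}$ |
| Independent Samples | $(\bar{x}_1 - \bar{x}_2) \pm t_{n_1+n_2-2;\,crit(2\text{-tailed})} \times s_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}$ | $\bar{x}_1 - \bar{x}_2$ | $\hat{d} = \frac{\bar{x}_1 - \bar{x}_2}{s_p}$ |

One-Way ANOVA (Categorical IV (Usually 3 or More Groups); Quantitative DV)

| Source | Sums of Sq. | df | Mean Sq. | F-stat | Effect Size |
| --- | --- | --- | --- | --- | --- |
| Between | $\sum_{j=1}^{g} n_j (\bar{x}_j - \bar{x}_G)^2$ | $g - 1$ | $SSB / df_B$ | $MSB / MSW$ | $\eta^2 = \frac{SSB}{SST}$ |
| Within | $\sum_{j=1}^{g} (n_j - 1) s_j^2$ | $N - g$ | $SSW / df_W$ | | |
| Total | $\sum_{i,j} (x_{ij} - \bar{x}_G)^2$ | $N - 1$ | | | |

1. We perform ANOVA rather than multiple t-tests because of family-wise error: the probability of rejecting at least one true $H_0$ during multiple tests.
2. The subscript $G$ denotes the "grand mean," or the average of all scores ignoring group membership.
3. $\bar{x}_j$ is the mean of group $j$; $n_j$ is the number of people in group $j$; $g$ is the number of groups; $N$ is the total number of "people".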
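The entries of the one-way ANOVA table (sums of squares, degrees of freedom, mean squares, the F statistic, and η²) can be computed directly from raw scores. The following is a minimal Python sketch using only the standard library. The function name `one_way_anova`, the dictionary keys, and the three small groups of scores are illustrative assumptions and are not part of the original sheet.

```python
from statistics import mean, variance


def one_way_anova(groups):
    """Compute the one-way ANOVA table entries for a list of groups of scores."""
    scores = [x for group in groups for x in group]
    g = len(groups)            # number of groups
    n_total = len(scores)      # N, total number of scores
    grand_mean = mean(scores)  # x-bar_G, ignoring group membership

    # SSB = sum over groups of n_j * (x-bar_j - x-bar_G)^2
    ssb = sum(len(group) * (mean(group) - grand_mean) ** 2 for group in groups)
    # SSW = sum over groups of (n_j - 1) * s_j^2 (sample variance, N - 1 denominator)
    ssw = sum((len(group) - 1) * variance(group) for group in groups)
    # SST = sum over all scores of (x_ij - x-bar_G)^2; it equals SSB + SSW
    sst = sum((x - grand_mean) ** 2 for x in scores)

    df_between, df_within = g - 1, n_total - g
    msb = ssb / df_between
    msw = ssw / df_within
    return {
        "SSB": ssb, "SSW": ssw, "SST": sst,
        "dfB": df_between, "dfW": df_within,
        "MSB": msb, "MSW": msw,
        "F": msb / msw,
        "eta_sq": ssb / sst,
    }


# Hypothetical scores for three groups of three people each.
groups = [[4, 5, 6], [6, 7, 8], [8, 9, 10]]
table = one_way_anova(groups)
print(f"F = {table['F']:.2f}, eta squared = {table['eta_sq']:.2f}")
# prints: F = 12.00, eta squared = 0.80
```

For these made-up scores, SSB = 24, SSW = 6, and SST = 30, so the between and within sums of squares add up to the total. Deciding whether to reject $H_0$ still requires comparing the obtained F to a critical value with $g - 1$ and $N - g$ degrees of freedom, which this sketch does not look up.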
One-Way ANOVA Hypotheses/Rejection

| Question | Hypotheses | When to Reject |
| --- | --- | --- |
| Is at least one mean different? | $H_0: \mu_1 = \mu_2 = \cdots = \mu_k$; $H_1$: At least one $\mu$ is different from at least one other $\mu$ | Extreme positive numbers; $F_{obt} > F_{crit}$ |

• Remember Post-Hoc Tests: LSD, Bonferroni, Tukey (what are the rank orderings of the means?)

Chi Square ($\chi^2$) (Categorical IV; Categorical DV)

| Test | Hypotheses | Observed | Expected | df | $\chi^2$ Stat | When to Reject |
| --- | --- | --- | --- | --- | --- | --- |
| Independence | $H_0$: Vars are Independent; $H_1$: Vars are Dependent | From Table | $N p_j p_k$ | (Cols - 1)(Rows - 1) | $\sum_{i=1}^{R} \sum_{j=1}^{C} \frac{(f_{O\,ij} - f_{E\,ij})^2}{f_{E\,ij}}$ | Extreme Positive Numbers; $\chi^2_{obt} > \chi^2_{crit}$ |
| Goodness of Fit | $H_0$: Model Fits; $H_1$: Model Doesn't Fit | From Table | $N p_i$ | Cells - 1 | $\sum_{i=1}^{C} \frac{(f_{O\,i} - f_{E\,i})^2}{f_{E\,i}}$ | Extreme Positive Numbers; $\chi^2_{obt} > \chi^2_{crit}$ |

1. Remember: the sum is over the number of cells/columns/rows (not the number of people)
2. For Test of Independence: $p_j$ and $p_k$ are the marginal proportions of variable $j$ and variable $k$ respectively
3. For Goodness of Fit: $p_i$ is the expected proportion in cell $i$ if the data fit the model
4. $N$ is the total number of people

Assumptions of Statistical Models

Correlation
1. Estimating: Relationship is linear
2. Estimating: No outliers
3. Estimating: No range restriction
4. Testing: Bivariate normality

Regression
1. Relationship is linear
2. Bivariate normality
3. Homoskedasticity (constant error variance)
4. Independence of pairs of observations

One Sample t-test
1. $x$ is normally distributed in the population
2. Independence of observations

Paired Samples t-test
1. Difference scores are normally distributed in the population
2. Independence of pairs of observations

Independent Samples t-test
1. Each group is normally distributed in the population
2. Homogeneity of variance (both groups have the same variance in the population)
3. Independence of observations within and between groups (random sampling & random assignment)

One-Way ANOVA
1. Each group is normally distributed in the population
2. Homogeneity of variance
3. Independence of observations within and between groups

Chi Square ($\chi^2$)
1. No small expected frequencies
   • Total number of observations at least 20
   • Expected number in any cell at least 5
2. Independence of observations
   • Each individual is only in ONE cell of the table

Central Limit Theorem

Given a population distribution with a mean $\mu$ and a variance $\sigma^2$, the sampling distribution of the mean using sample size $N$ (or, to put it another way, the distribution of sample means) will have a mean of $\mu_{\bar{x}} = \mu$ and a variance equal to $\sigma_{\bar{x}}^2 = \frac{\sigma^2}{N}$, which implies that $\sigma_{\bar{x}} = \frac{\sigma}{\sqrt{N}}$. Furthermore, the distribution will approach the normal distribution as $N$, the sample size, increases.

Possible Decisions/Outcomes

| | $H_0$ True | $H_0$ False |
| --- | --- | --- |
| Rejecting $H_0$ | Type I Error ($\alpha$) | Correct Decision ($1 - \beta$; Power) |
| Not Rejecting $H_0$ | Correct Decision ($1 - \alpha$) | Type II Error ($\beta$) |

Power Increases If: $N \uparrow$, $\alpha \uparrow$, $\sigma \downarrow$, Mean Difference $\uparrow$, or One-Tailed Test
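The Central Limit Theorem's claims about the mean and standard deviation of the sampling distribution can be checked with a small simulation. The sketch below is only an illustration under stated assumptions: the population is taken to be exponential with rate 1 (so $\mu = 1$ and $\sigma = 1$, and clearly non-normal), and the helper name `sample_means`, the sample size of 25, the number of samples, and the seed are all arbitrary choices that do not come from the original sheet.

```python
import math
import random
from statistics import fmean, stdev


def sample_means(sample_size, n_samples=5000, seed=0):
    """Draw n_samples samples of the given size and return their means."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    return [
        fmean([rng.expovariate(1.0) for _ in range(sample_size)])
        for _ in range(n_samples)
    ]


# Assumed population: exponential with rate 1, so mu = 1 and sigma = 1.
POP_MEAN = 1.0
POP_SD = 1.0
N = 25

means = sample_means(N)
observed_mean = fmean(means)          # should be close to mu
observed_se = stdev(means)            # should be close to sigma / sqrt(N)
predicted_se = POP_SD / math.sqrt(N)  # sigma / sqrt(N) = 0.2 here

print(f"mean of sample means: {observed_mean:.3f} (theory: {POP_MEAN:.3f})")
print(f"sd of sample means:   {observed_se:.3f} (theory: {predicted_se:.3f})")
```

With these settings the simulated values should land close to the theoretical ones, though not exactly on them, since the simulation itself is subject to sampling error. Rerunning with a larger `sample_size` shrinks the spread of the sample means in proportion to $1/\sqrt{N}$.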