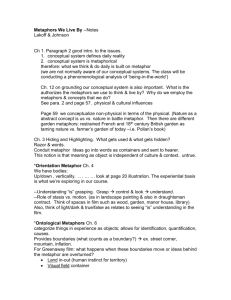

Computers as people: human interaction metaphors in human
