1 Language testing

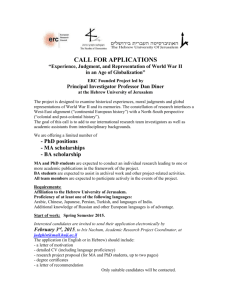


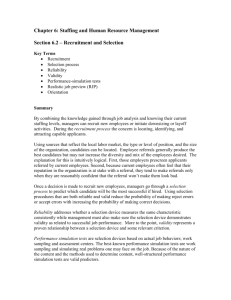

A test is a sample of an individual's behaviour/performance on the basis of which inferences are made about the more general underlying competence of that individual. Language tests are any kind of measurement or examination technique that aims to describe the test taker's foreign language proficiency, e.g. an oral interview, a listening comprehension task or free composition writing.

1. Kinds of tests and testing

Proficiency tests
Proficiency tests aim to measure students' L2 competence regardless of any training they previously had in the language. In these tests, designers specify what the candidates should be able to do to pass the test.

Achievement tests
Achievement tests assess whether learners have acquired the specific elements of language that they were taught in the language course they took part in. There are two types of achievement tests: final tests at the end of the course and progress tests during the course.

Direct versus indirect testing
• Direct tests: candidates are required to perform the skill the test intends to measure.
• Indirect tests: these aim to measure the skills that underlie performance in a particular task.

Diagnostic tests
• Diagnostic tests help identify learners' strengths and weaknesses in L2. Their main aim is to help teachers decide what needs to be taught to students.

Placement tests
• With the help of placement tests, students can be placed in the learning group that is appropriate for their level of competence.

Discrete point versus integrative testing
• In discrete point tests, every item focuses on one clear-cut segment of the target language without involving the others. Typical test format: written multiple-choice test.
• In integrative tests, candidates need to use a number of language elements at the same time to complete the test tasks. For example: essay writing, dictation, cloze test.

Norm-referenced tests
In norm-referenced tests, candidates' performance is assessed in comparison with that of the other candidates. For this reason, the cut-off points (the line between pass and fail) are determined after the test results have been obtained from the group of students, based on the distribution of the scores.

[Figure: frequency distribution of TOTAL scores (x-axis: TOTAL, y-axis: Frequency); Mean = 53.1, Std. Dev. = 11.96, N = 270]

Criterion-referenced tests
Criterion-referenced tests compare all the testees to a predetermined criterion. In such tests, everybody whose achievement comes up to the pre-set criterion receives a pass mark, while those below it fail. The criteria are often set in terms of tasks that students have to be able to perform (e.g. to interact with an interlocutor with ease; to ask for information and understand instructions).

Common European Framework of Reference for Languages
Proficient user
• C2: Can understand with ease virtually everything heard or read. Can summarise information from different spoken and written sources, reconstructing arguments and accounts in a coherent presentation. Can express him/herself spontaneously, very fluently and precisely, differentiating finer shades of meaning even in more complex situations.
• C1: Can understand a wide range of demanding, longer texts, and recognise implicit meaning. Can express him/herself fluently and spontaneously without much obvious searching for expressions. Can use language flexibly and effectively for social, academic and professional purposes. Can produce clear, well-structured, detailed text on complex subjects, showing controlled use of organisational patterns, connectors and cohesive devices.
Independent user
• B2: Can understand the main ideas of complex text on both concrete and abstract topics, including technical discussions in his/her field of specialisation. Can interact with a degree of fluency and spontaneity that makes regular interaction with native speakers quite possible without strain for either party. Can produce clear, detailed text on a wide range of subjects and explain a viewpoint on a topical issue giving the advantages and disadvantages of various options.
• B1: Can understand the main points of clear standard input on familiar matters regularly encountered in work, school, leisure, etc. Can deal with most situations likely to arise whilst travelling in an area where the language is spoken. Can produce simple connected text on topics which are familiar or of personal interest. Can describe experiences and events, dreams, hopes and ambitions and briefly give reasons and explanations for opinions and plans.
Basic user
• A2: Can understand sentences and frequently used expressions related to areas of most immediate relevance (e.g. very basic personal and family information, shopping, local geography, employment). Can communicate in simple and routine tasks requiring a simple and direct exchange of information on familiar and routine matters. Can describe in simple terms aspects of his/her background, immediate environment and matters in areas of immediate need.
• A1: Can understand and use familiar everyday expressions and very basic phrases aimed at the satisfaction of needs of a concrete type. Can introduce him/herself and others and can ask and answer questions about personal details such as where he/she lives, people he/she knows and things he/she has. Can interact in a simple way provided the other person talks slowly and clearly and is prepared to help.

Objective testing versus subjective testing
• The scoring of a task is objective if the rater does not have to make a judgement because the scoring is unambiguous. For example: a multiple-choice test.
• In subjective test tasks, raters have to make a judgement when assessing candidates' performance. For example: marking an essay.

Consider the tasks in the 199 exam:
1. C-test
2. Gap-fill task
3. Summary writing
Decide whether these tasks are direct or indirect, subjective or objective, integrative or discrete point tasks.

Reliability
Reliability is the extent to which a test is free of random measurement error and produces consistent results when administered under similar conditions. This means that a reliable test is not affected by circumstances outside the test (e.g. the people who administer and mark the test, the time and place of the test).
Types of reliability:
• internal consistency: whether the test items are related to each other and measure the same ability;
• parallel or alternate form reliability: how well parallel or alternate forms of the same test measure the same ability;
• test-retest reliability: whether test takers perform similarly each time they complete the test;
• intra-rater reliability: whether the same rater assesses the test takers' performance in the same way each time he/she evaluates the test;
• inter-rater reliability: whether two raters assess the test takers' performance in the same way.

Validity
Validity is the extent to which a test measures what it is supposed to measure and nothing else.
• content validity: whether the test measures the ability it intends to measure;
• concurrent validity: whether the test takers' performance in a test correlates with their results in a different type of test;
• predictive validity: whether the test results accurately predict future performance;
• construct validity: whether the test appropriately represents the theory of language competence it is based on;
• face validity: whether the test looks as if it measures what it is supposed to measure.

About the validity of the C-test: the item-related strategies used by the participants (type of strategy: percentage of total)
• Lexical: 12.41
• Syntactic: 9.97
• Morphological: 3.71
• Textual: 5.36
• Background knowledge: 0.83
• Translation: 6.48
• Counting the number of letters: 15.31
• No strategy used (automatically filled in): 45.87
• Total: 100
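The handout names the types of reliability but not how they are quantified. As a rough sketch, assuming two statistics that are commonly used for this purpose, Pearson's correlation coefficient (for test-retest, parallel-form, intra-rater and inter-rater reliability, and for concurrent validity when the second list of scores comes from a different test) and Cronbach's alpha (for internal consistency), the Python below computes both for small made-up data sets. The function names and the scores are illustrative only and are not part of the handout.

```python
from statistics import mean, pvariance


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of scores.

    Assumed use: the same candidates on two sittings (test-retest), on two
    forms of a test (parallel form), or the same scripts marked twice by one
    rater (intra-rater) or once each by two raters (inter-rater).
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two score lists of equal length (at least 2 scores)")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    ss_x = sum((x - mx) ** 2 for x in xs)
    ss_y = sum((y - my) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when one list has no variation")
    return cov / (ss_x * ss_y) ** 0.5


def cronbach_alpha(score_matrix):
    """Cronbach's alpha as an internal-consistency estimate.

    score_matrix[i][j] is candidate i's score on item j.
    """
    k = len(score_matrix[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item (column) across candidates.
    item_variances = [pvariance(item) for item in zip(*score_matrix)]
    # Variance of the candidates' total scores.
    total_variance = pvariance([sum(row) for row in score_matrix])
    if total_variance == 0:
        raise ValueError("alpha is undefined when all candidates have the same total")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Made-up scores for five candidates who took the same test twice.
first_sitting = [12, 15, 9, 18, 11]
second_sitting = [13, 14, 10, 17, 12]
print(round(pearson_r(first_sitting, second_sitting), 2))  # 0.98

# Made-up right/wrong (1/0) answers of four candidates on three items.
answers = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
print(round(cronbach_alpha(answers), 2))  # 0.75
```

Values close to 1 indicate consistent results; what counts as "reliable enough" depends on the stakes of the test and is not fixed by either formula.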
3. Types of frequently used objective test tasks

Multiple choice
A multiple-choice item consists of a stem:
1. He ______________ three letters since 9 o'clock.
and options, one of which is correct while the others are distractors:
A writes
B has written
C has been written
D had written

Cloze test
A cloze test is a continuous text in which every Nth word is mechanically deleted; N is usually between five and ten. The examinees have to fill in these blanks. It aims to test reading comprehension, syntax and vocabulary.

C-test
• In the C-test, the second half of every second word is left out. C-tests can provide a rough measure of learners' global level of proficiency.

Dictation
• The basis of the procedure is that each dictated chunk is long enough (10-25 words) to exceed the learner's short-term memory, so the forgotten items have to be filled in from the context and the learner's knowledge of the language.

Editing
The editing test is the reverse of the cloze test: extra words are inserted into a text, and testees are required to cross these out. For example: "extra words extra are inserted put placed gone into to a text, and testees are is required to crossing cross these out."

The oral proficiency interview
Ideally, the oral proficiency interview consists of four phases:
1. Warm-up: usually not marked;
2. Level check: getting an approximate idea of the learner's proficiency level and the topics he/she feels comfortable with;
3. Probes: actual rating starts only at this stage; the interviewee is pushed up to or beyond his/her level of competence;
4. Wind-up: rounding off the interview by returning to activities within the learner's ability so as not to send him/her away with a feeling of failure.

Matching
Candidates are given a list of possible answers, which they have to match with another list of words. For example:
Match the words on the left with those on the right to make other English words.
1 head        A partner
2 room        B wife
3 business    C master
4 house       D mate

Ordering
In ordering tasks, candidates have to put a group of words, sentences or paragraphs in order.
For example: Put the following words in order to complete the sentence: went yesterday I cinema friend to with.

Analysis of test results
The three simplest analyses of test results are the following:
1. Distribution curve – shows the number of students scoring within a particular range.
[Figure: frequency distribution of Score (x-axis: Score, 0-14); Mean = 8.3, Std. Dev. = 3.25, N = 61]
2. Facility value – expresses the proportion of students who responded correctly to an item. For example, if 100 students took a test and 54 of them got the item right, the facility value is 54/100 = 0.54.
3. Discrimination index – expresses how well an item discriminates between good and poor students. It ranges from 1 to -1.

Statistical features of good tests
• The distribution curve should be bell-shaped.
• Facility values should be between 0.3 and 0.7 (or 0.2-0.8 in more lenient approaches to test design).
• Discrimination indices should be above 0.4 (or above 0.3 in more lenient approaches to test design).

Washback
• Washback is the effect tests have on teaching and learning.
• Washback can be beneficial, for example if a previously neglected skill (e.g. listening) comes into the focus of teaching because a newly introduced test makes scores in this skill important in determining the candidates' grades.
• Washback can be negative, for example if most lesson time in secondary schools is spent practising multiple-choice tests.
• Tests have an effect on those who take them, the teachers who prepare students for them, the teaching materials (e.g. course books), society and the educational system.

1. Explain the difference between
a) proficiency and achievement tests;
b) diagnostic and placement tests;
c) direct and indirect tests;
d) subjective and objective tests;
e) norm-referenced and criterion-referenced tests;
f) integrative and discrete point tests.
2. What is reliability? List the various types of reliability.
3. What is validity? List the various types of validity.
4. What are the most frequently used objective test tasks?
5. What are the most frequent statistical measures of test performance?
6. What effects can tests have on teaching and learning?
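The facility value and discrimination index from "Analysis of test results" can be computed directly from a table of right/wrong answers. The Python below is a minimal sketch under stated assumptions: answers are coded 1 (correct) and 0 (wrong), and the discrimination index uses the upper-minus-lower-group method with the top and bottom thirds of candidates ranked by total score, a common convention that the handout itself does not specify. The function names and the sample data are hypothetical; only the guideline ranges come from "Statistical features of good tests".

```python
def facility_value(item_responses):
    """Proportion of candidates who got the item right (1 = correct, 0 = wrong)."""
    return sum(item_responses) / len(item_responses)


def discrimination_index(item_responses, total_scores, group_size=None):
    """Upper-minus-lower-group discrimination index (assumed method).

    Candidates are ranked by total test score; D is the number of correct
    answers in the top group minus that in the bottom group, divided by the
    group size. D = 1: only strong candidates got the item right; D = -1: the reverse.
    """
    n = len(item_responses)
    if group_size is None:
        group_size = max(1, n // 3)  # assumed default: top and bottom thirds
    if not 1 <= group_size <= n // 2:
        raise ValueError("group_size must be between 1 and half the number of candidates")
    # Candidate indices from highest to lowest total (ties keep input order).
    ranked = sorted(range(n), key=lambda i: total_scores[i], reverse=True)
    upper_correct = sum(item_responses[i] for i in ranked[:group_size])
    lower_correct = sum(item_responses[i] for i in ranked[-group_size:])
    return (upper_correct - lower_correct) / group_size


def meets_guidelines(fv, d, lenient=False):
    """Check an item against the guideline ranges for good tests."""
    fv_min, fv_max, d_min = (0.2, 0.8, 0.3) if lenient else (0.3, 0.7, 0.4)
    return fv_min <= fv <= fv_max and d > d_min


# The worked example: 54 of 100 students answer the item correctly.
print(facility_value([1] * 54 + [0] * 46))  # 0.54

# Made-up data for six candidates: total test scores and answers to one item.
totals = [14, 12, 10, 8, 5, 3]
item = [1, 1, 1, 0, 1, 0]
fv = facility_value(item)             # 4 / 6
d = discrimination_index(item, totals)  # (2 - 1) / 2
print(round(fv, 2), d, meets_guidelines(fv, d))  # 0.67 0.5 True
```

Comparing the top and bottom groups keeps the index between -1 and 1, matching the range given in the handout; other definitions, such as a point-biserial correlation between the item and the total score, would give somewhat different values.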