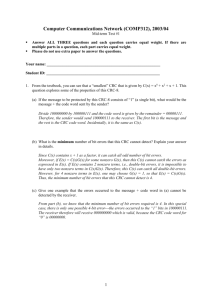

Lecture 7. TCP mechanisms for:

- data transfer control / flow control
- error control
- congestion control

Graphical examples (Java applets) of several algorithms at: http://www.ce.chalmers.se/~fcela/tcp-tour.html

G.Bianchi, G.Neglia, V.Mancuso

Data transfer control over TCP: a double-faced issue

- Bulk data transfer
  - HTTP, FTP, …
  - Goal: attempt to send data as fast as possible
  - Problem: sender may transmit faster than the receiver can accept
    - → Flow control
- Interactive
  - TELNET, RLOGIN, ...
  - Goal: attempt to send data as soon as possible
  - Problem: efficiency vs. interactivity trade-off
    - → The tinygrams issue! (1 byte of payload per segment, against 20 bytes of TCP header + 20 bytes of IP header)

TCP pipelining

[Figure: pipelined transmission with W=6]

- More than 1 segment “flying” in the network
- Transfer efficiency increases with W

$$
\text{thr} = \min\left(C,\ \frac{W \cdot MSS}{RTT + MSS/C}\right)
$$

- So, why an upper limit on W?

Why flow control?

- Limited receiver buffer
  - If MSS = 2 KB = 2048 bytes
  - and receiver buffer = 8 KB = 8192 bytes,
  - then W must be less than or equal to 4 x MSS
- A possible implementation:
  - During connection setup, exchange the W value.
  - DOES NOT WORK. WHY?

Window-based flow control

- Receiver buffer capacity varies with time!
  - Upon application process read() [asynchronous, not depending on OS, not predictable]

[Figure: receiver buffer filled from IP and drained by application process read(); the receiver window is the spare room]

- MSS = 2 KB = 2048 bytes
- Receiver buffer capacity = 10 KB = 10240 bytes
- TCP data stored in buffer: 3 segments
- Receiver window = spare room: 10 - 6 = 4 KB = 4096 bytes
  - Then, at this time, W must be less than or equal to 2 x MSS

[Figure: TCP header layout: source port, destination port; 32-bit sequence number; 32-bit acknowledgement number; header length, 6-bit reserved field, flags (URG, ACK, PSH, RST, SYN, FIN), window size; checksum, urgent pointer]

- Window size field: used to advertise the receiver’s remaining storage capacity
  - 16-bit field, on every packet
  - Measurement unit: bytes, from 0 (included) to 65535
  - Sender rule: LastByteSent - LastByteAcked <= RcvWindow
  - W=2048 means:
    - “I can accept another 2048 bytes beyond the ack”, i.e. bytes [ack, ack+W-1]
    - Also means: the sender may have 2048 bytes outstanding (in multiple segments)

What is flow control needed for?

- Window flow control guarantees that the receiver buffer is able to accept the outstanding segments.
- When the receiver buffer is full, just send back win=0
- In essence, flow control guarantees that the transmission bit rate never exceeds the receiver rate
  - on average!
  - Note that the instantaneous transmission rate is arbitrary…
  - … and that the receiver rate is discretized (application reads)

Sliding window

Dynamic window-based flow control reduces to a pure sliding window when the receiver application is very fast in reading data…

[Figure: a window of W=3 segments “sliding” forward over sequence numbers 1 to 9 as segments S=4 to S=7 are sent]

Dynamic window - example

- TCP connection setup. Exchanged parameters: MSS=2K, sender ISN=2047, WIN=4K (carried by the receiver’s SYN-ACK). Receiver buffer (4K) is EMPTY.
- Sender application does a 2K write; sender transmits 2K, seq=2048.
- Receiver buffer (4K) now holds 2K; receiver replies Ack=4096, win=2048.
- Sender application does a 3K write; sender transmits 2K, seq=4096.
- Receiver buffer is FULL (4K); receiver replies Ack=6144, win=0. Sender is blocked.
- Receiver application does a 2K read; the buffer holds 2K again; receiver sends Ack=6144, win=2048.
- Sender unblocks and may send the last 1K: 1K, seq=6144.

Performance: bounded by receiver buffer size

- Up to 1992, common operating systems had transmitter and receiver buffers defaulting to 4096 bytes
  - e.g. SunOS 4.1.3
- Way suboptimal over Ethernet LANs
  - Raising the buffer to 16384 bytes gives a 40% throughput increase (Papadopoulos & Parulkar, 1993)
  - e.g. Solaris 2.2 default
- Most socket APIs allow apps to set (increase) socket buffer sizes
  - But the theoretical maximum remains W = 65535 bytes…

Maximum achievable throughput

[Figure: maximum achievable throughput (Mbps, axis from 1 to 1000) versus RTT (0 to 500 ms) for W = 65535 bytes, assuming an infinite-speed line]

Window Scale Option

- Appears in the SYN segment
  - Operates only if both peers understand the option
- Allows client and server to agree on a different W scale
  - Specified in terms of a bit shift b (from 0 to 14)
  - Maximum window: 65535 * 2^b
  - b=14 means max W = 1,073,725,440 bytes!!

Blocked sender deadlock problem

[Figure: the receiver buffer (4K) is FULL and the sender is BLOCKED; after an application read the receiver sends an ACK with WIN=2K, which is lost (X); the sender REMAINS BLOCKED FOREVER!!]

Since the window-update ACK does not carry data, no ack from the sender is expected, so the receiver never learns that the update was lost.
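As a rough numeric check of the maximum-throughput and Window Scale slides earlier in this section, the sketch below evaluates the window-limited bound W / RTT (infinite-speed line, as in the plot) and the largest window for a given shift count. The function names are hypothetical, and accepting a shift of 0 is an assumption taken from the TCP window scale specification rather than from the slides.

```python
def window_limited_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound W / RTT in bits per second, assuming an infinite-speed line."""
    return window_bytes * 8 / rtt_s


def max_scaled_window(shift: int) -> int:
    """Largest advertisable window, in bytes, for a Window Scale shift count."""
    # Assumption: valid shift counts are 0..14 (0 means "no scaling").
    if not 0 <= shift <= 14:
        raise ValueError("shift count must be between 0 and 14")
    return 65535 << shift


if __name__ == "__main__":
    for rtt_ms in (1, 10, 100, 500):
        mbps = window_limited_throughput_bps(65535, rtt_ms / 1000) / 1e6
        print(f"RTT={rtt_ms:>3} ms  W=65535 bytes  ->  {mbps:8.2f} Mbps")
    print("max window with shift 14:", max_scaled_window(14), "bytes")
```

With the unscaled 65535-byte window the bound drops quickly as RTT grows, which is the motivation for the Window Scale option.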
Solution: Persist timer

- When win=0 (blocked sender), the sender starts a “persist” timer
  - Initially 500 ms (but depends on the implementation)
- When the persist timer elapses AND no segment has been received during this time, the sender transmits a “probe”
  - Probe = 1-byte segment; makes the receiver reannounce the next byte expected and the window size
    - This feature is necessary to break the deadlock
    - If the receiver is still full, it rejects the byte
    - Otherwise it acks the byte and sends back the actual win
- Persist time management (exponential backoff):
  - Doubles every time no response is received
  - Maximum = 60 s

Interactive applications

- Ideal rlogin operation: 4 transmitted segments per 1 byte!!!!!
- Header overhead: 20 bytes TCP header + 20 bytes IP header + 1 data byte

[Figure: CLIENT keystroke sends a data byte to the SERVER; the server returns an ack of the data byte and then the echo of the data byte; the client displays the byte and returns an ack of the echoed byte]

Interactive apps create some tricky situations….

The silly window syndrome

SCENARIO: a bulk data source sends over a TCP connection to an interactive user who reads one byte at a time; the receive buffer is full.

The silly window syndrome

- Fill up the buffer until win=0
- Network loaded with tinygrams (40 bytes of header + 1 byte of payload!!)

[Figure: the buffer is FULL; the application reads 1 byte and the receiver sends Ack=X, win=1; the sender transmits 1 byte; the buffer is FULL again and the receiver sends Ack=X+1, win=0. This repeats forever!]
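Returning to the persist timer described earlier in this section, its exponential backoff can be sketched as follows. This is a minimal illustration, assuming the 500 ms initial value and 60 s cap quoted on the slide; the function names are hypothetical, and real stacks choose their own initial value.

```python
from itertools import islice
from typing import Iterator, List


def persist_intervals(initial_s: float = 0.5, max_s: float = 60.0) -> Iterator[float]:
    """Yield successive persist-timer intervals: doubled each time, capped at max_s."""
    interval = initial_s
    while True:
        yield min(interval, max_s)
        interval = min(interval * 2, max_s)


def first_intervals(n: int, initial_s: float = 0.5, max_s: float = 60.0) -> List[float]:
    """Return the first n intervals between unanswered window probes."""
    return list(islice(persist_intervals(initial_s, max_s), n))


if __name__ == "__main__":
    elapsed = 0.0
    for i, interval in enumerate(first_intervals(9), start=1):
        elapsed += interval
        print(f"probe {i}: sent after waiting {interval:5.1f} s (total {elapsed:6.1f} s)")
```

Each yielded value is the wait before the next 1-byte probe, as long as the previous probes got no response that reopens the window.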
Silly window solution

- Problem discovered by David Clark (MIT), 1982
- Easily solved, by preventing the receiver from sending a window update for 1 byte
- Rule: send a window update when:
  - the receiver buffer can handle a whole MSS, or
  - half of the receive buffer has emptied (if that is smaller than the MSS)
- The sender may also apply the rule
  - by waiting before sending data when win is low

Nagle’s algorithm (RFC 896, 1984)

- NAGLE RULE: a TCP connection can have only ONE SMALL outstanding segment
- Self-clocking algorithm:
  - on LANs, plenty of tinygrams
  - on slow WANs, data aggregation

[Figure: 1-byte segment, WAIT for ack, 2-byte segment, WAIT for ack, 3-byte segment]

Comments about Nagle’s algorithm

- Over Ethernet:
  - about 16 ms round trip time
  - Nagle’s algorithm starts operating when the user types more than 60 characters per second (!!!)
- Disabling Nagle’s algorithm
  - a feature offered by some TCP APIs
    - set TCP_NODELAY
  - example: mouse movement over an X Window terminal

PUSH flag

[Figure: TCP header layout with the PSH flag]

- Used to notify:
  - the TCP sender to send data
    - but for this a header flag is NOT needed! A “push” type indication in the TCP sender API is sufficient
  - the TCP receiver to pass received data to the application

Urgent data

[Figure: TCP header layout with the URG flag and urgent pointer]

- URG on: notifies the receiver that “urgent” data has been placed in the segment.
- When URG is on, the urgent pointer contains the position of the last byte of urgent data
  - or the one after the last, as some buggy implementations do??
  - and the first? No way to specify it!
- Receiver is expected to pass all data up to the urgent pointer to the app
  - Interpretation of urgent data is left to the app
- Typical usage: Ctrl-C (interrupt) in rlogin and telnet; abort in FTP
- Urgent data is a second exception to the blocked sender

TCP Error control

TCP: a reliable transport

- TCP is a reliable protocol
  - All data sent are guaranteed to be received
  - Very important feature, as IP is an unreliable network layer
- Employs positive acknowledgement
  - Cumulative ack
  - Selective ack may be activated when both peers implement it (use option)
- Does not employ negative ack
  - Error discovery via timeout (retransmission timer)
  - But “implicit NACK” is available

Error discovery via retransmission timer expiration

[Figure: timeline: send segment; retransmission timer waits for acknowledgement; re-send segment]

Fundamental problem: setting the retransmission timer right!

Lost data == lost ack

[Figure: whether DATA is lost or the ack is lost, the retransmission timer expires and DATA is retransmitted]

… although a lost ack may be discovered via subsequent acks

[Figure: DATA 1 and DATA 2 are sent, each with its own retransmission timer; Ack 1 is lost, but Ack 2 confirms that Data 1 & 2 are OK]

Retransmission timer setting

RTO = retransmission TimeOut

[Figure: TOO SHORT: the RTO expires and DATA is retransmitted, then the ack arrives (“Too late!!!!”). TOO LONG: DATA is lost and the sender waits for the whole RTO before retransmitting (“Might have txed!!!!”)]

- TOO SHORT: unnecessary retransmission occurs, loading the Internet with unnecessary packets
- TOO LONG: throughput impairment when packets are lost

Retransmission timer setting

- Cannot be fixed by protocol!
- Two reasons:
  - Different network scenarios have very different performance
    - LANs (short RTTs)
    - WANs (long RTTs)
  - The same network has time-varying performance (on a very fast time scale)
    - when congestion occurs (RTT grows) and disappears (RTT drops)

Adaptive RTT setting

- Proposed in RFC 793
- Based on dynamic RTT estimation
  - Sender samples the time between sending SEQ and receiving ACK (M)
  - Estimates RTT (R) by low-pass filtering M (autoregressive, 1 pole)
    - $R = \alpha R + (1-\alpha) M$, with $\alpha = 0.9$
  - Sets $RTO = \beta R$, with $\beta = 2$ (recommended)

Problem: constant value β=2

- SCENARIO 1: lightly loaded long-distance communication
  - Propagation = 100
  - Queueing = mean 5, most in range 0-10
  - RTO = 2 x measured_RTT ~ 2 x 105 = 210: TOO LARGE!
- SCENARIO 2: mildly loaded short-distance communication
  - Propagation = 1
  - Queueing = mean 10, most in range 0-50
  - RTO = 2 x measured_RTT ~ 2 x 11 = 22: WAY TOO SMALL!

Problem: constant value β=2

- SCENARIO 3: slow speed links

[Figure: transmission delay (0 to 600 ms) versus packet size (0 to 2500 bytes) on a 28.8 Kbps link]

Natural variation of packet sizes causes a large variation in RTT! (From RFC 1122: utilization on a 9.6 kbps link can improve from 10% up to 90% with the adoption of Jacobson’s algorithm.)

Jacobson RTO (1988)

Idea: make it depend on the measured variance!

$$
\begin{aligned}
Err &= M - A \\
A &:= A + g \cdot Err \\
D &:= D + h \,(|Err| - D) \\
RTO &= A + 4D
\end{aligned}
$$

- g = gain (1/8)
  - Conceptually equivalent to 1-α, but set to a slightly different value
- D = mean deviation
  - Conceptually similar to the standard deviation, but cheaper (does not require a square root computation)
  - h = 1/4
- Jacobson’s implementation: based on integer arithmetic (very efficient)

Guessing right? Karn’s problem

[Figure: two scenarios in which the RTO expires and DATA is retransmitted before an ack arrives; the sample M is ambiguous because the ack may refer either to the original transmission or to the retransmission]
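The two RTO estimators introduced above (the RFC 793 filter with a fixed β and Jacobson’s mean-deviation variant) can be compared with a short sketch. This is a floating-point illustration, not the integer-arithmetic implementation mentioned on the slide; the class names, the choice to seed A and R with the first sample, and the initial D = M/2 are assumptions, as are the sample values, which only loosely mimic Scenario 2.

```python
class Rfc793Estimator:
    """Smoothed RTT with a fixed multiplier: R = alpha*R + (1-alpha)*M, RTO = beta*R."""

    def __init__(self, first_sample: float, alpha: float = 0.9, beta: float = 2.0):
        self.alpha = alpha
        self.beta = beta
        self.r = first_sample  # assumption: seed the filter with the first sample

    def update(self, m: float) -> float:
        """Feed one RTT sample M and return the new RTO."""
        self.r = self.alpha * self.r + (1 - self.alpha) * m
        return self.beta * self.r


class JacobsonEstimator:
    """Mean plus mean-deviation estimator: RTO = A + 4D."""

    def __init__(self, first_sample: float, g: float = 1 / 8, h: float = 1 / 4):
        self.g = g
        self.h = h
        self.a = first_sample      # assumption: A starts at the first sample
        self.d = first_sample / 2  # assumption: D starts at half the first sample

    def update(self, m: float) -> float:
        """Feed one RTT sample M and return the new RTO."""
        err = m - self.a
        self.a += self.g * err
        self.d += self.h * (abs(err) - self.d)
        return self.a + 4 * self.d


if __name__ == "__main__":
    # Hypothetical RTT samples in ms: propagation 1 plus bursty queueing delay.
    samples_ms = [6, 11, 3, 51, 9, 2, 31, 8]
    fixed = Rfc793Estimator(first_sample=11)
    adaptive = JacobsonEstimator(first_sample=11)
    for m in samples_ms:
        print(f"M={m:>2}  RFC 793 RTO={fixed.update(m):6.1f}  Jacobson RTO={adaptive.update(m):6.1f}")
```

Running it shows how the Jacobson RTO widens after a large sample, while the fixed-β RTO moves only with the smoothed mean.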
Solution to Karn’s problem

- Very simple: DO NOT update the RTT estimate when a segment has been retransmitted because of RTO expiration!
- Instead, use exponential backoff
  - Double the RTO for every subsequent expiration for the same segment
    - When at 64 s, stay there
    - Persist for up to 9 minutes, then reset

Need for implicit NACKs

- TCP does not support negative ACKs
- This can be a serious drawback
  - Especially in the case of a single packet loss
- An RTO expiration is necessary before the lost packet is retransmitted
  - As well as the following ones!!
- ISSUE: is there a way to have NACKs in an implicit manner????

[Figure: segments Seq=50, Seq=100, Seq=150, Seq=200, Seq=250, Seq=300, Seq=350 are sent; only after the RTO expires is Seq=100 retransmitted]

The Fast Retransmit Algorithm

- Idea: use duplicate ACKs!
  - The receiver responds with an ACK every time it receives an out-of-order segment
  - ACK value = next byte expected, i.e. it acknowledges the last segment correctly received in order
- FAST RETRANSMIT algorithm:
  - If 3 duplicate acks are received for the same segment, assume that the next segment has been lost. Retransmit it right away.
  - Helps if a single packet is lost. Not very effective with multiple losses
- And then? A congestion control issue…

[Figure: Seq=50 is acknowledged with ack=100; Seq=100 is lost; the following segments (Seq=150, …) each trigger a duplicate ack=100; on the third duplicate, fast retransmit (FR) resends Seq=100 well before the RTO would expire]
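The duplicate-ACK rule above can be sketched as a small simulation. It is a simplified model under stated assumptions: all segments have the same length (50 bytes, matching the figure’s sequence numbers), the receiver sends one cumulative ACK per arriving segment, and the function names are hypothetical. It does not model timers or congestion control.

```python
from typing import Iterable, List

DUP_ACK_THRESHOLD = 3  # three duplicate acks trigger fast retransmit


def cumulative_acks(arrivals: Iterable[int], first_expected: int, seg_len: int) -> List[int]:
    """Receiver side: one cumulative ack (next byte expected) per arriving segment."""
    expected = first_expected
    buffered = set()
    acks = []
    for seq in arrivals:
        buffered.add(seq)
        # Advance over every in-order segment that is now available.
        while expected in buffered:
            buffered.remove(expected)
            expected += seg_len
        acks.append(expected)
    return acks


def fast_retransmits(acks: Iterable[int]) -> List[int]:
    """Sender side: sequence numbers resent on the third duplicate ack."""
    retransmitted = []
    last_ack = None
    dup_count = 0
    for ack in acks:
        if ack == last_ack:
            dup_count += 1
            if dup_count == DUP_ACK_THRESHOLD:
                retransmitted.append(ack)  # the ack value names the missing segment
        else:
            last_ack = ack
            dup_count = 0
    return retransmitted


if __name__ == "__main__":
    # Seq=100 is lost at first and arrives only after being retransmitted.
    arrivals = [50, 150, 200, 250, 100]
    acks = cumulative_acks(arrivals, first_expected=50, seg_len=50)
    print("acks sent by receiver:", acks)
    print("fast retransmissions: ", fast_retransmits(acks))
```

Note that the first ack=100 is not a duplicate; the counter starts with the second one, so the retransmission fires on the fourth ack carrying the same value.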