An Approach to Minimising Academic Misconduct and Plagiarism at

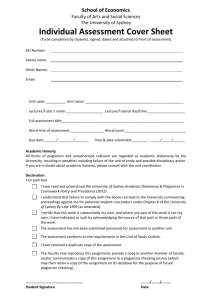

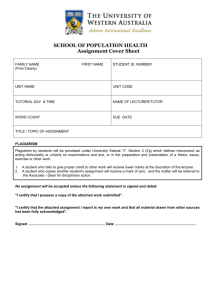

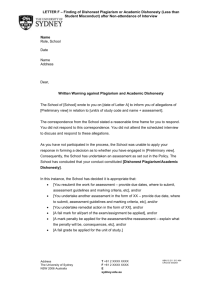

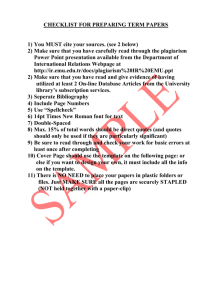

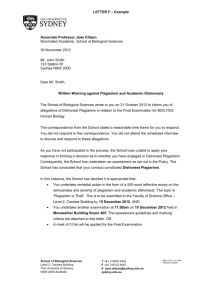
