Internet Worm Detection and Classification with Data Mining

advertisement

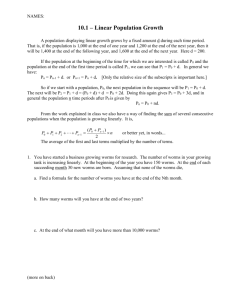

Internet Worm Detection and Classification with Data Mining Approaches

Narubordee Sarnsuwan1, Naruemon Wattanapongsakorn1,* and Chalermpol Charnsripinyo2

1 Computer Engineering Department, King Mongkut’s University of Technology Thonburi, 126 Pracha-Utid, Tung-Kru, Bangkok 10140 Thailand
2 Network Technology Laboratory, National Electronics and Computer Technology Center, Klong Luang, Pathumthani, 10120 Thailand
*Corresponding author: naruemon@cpe.kmutt.ac.th

Abstract

Many current malware trends target network end-points, which exhibit diverse behaviors. In this paper, we present techniques to detect and classify many types of Internet worm at the network end-point using three data mining approaches: Bayesian network, C4.5 Decision tree and Random forest. We use port and protocol profiles to train and test our detection models. Our results show that the detection rates for classifying and detecting known worms are at least 98.5%. The unknown worm detection rate is about 97% with Decision tree and Random forest, and 80% with Bayesian network.

Key Words: Internet worm, Data mining, Bayesian network, Decision tree, Random forest, Network endpoint

1. Introduction

An Internet worm is a malicious code or program that exploits security holes on a network without human interaction. Internet worms are self-propagating and spread quickly [1]. The first Internet worm was released in 1988 and infected over a hundred hosts. Since then, the threat of Internet worms has grown and caused increasing damage to network systems. Many of the proposed detection approaches rely on signatures for misuse detection, and these cannot detect unknown/new worms. Most commercial programs are based on the signature approach. In that approach, a worm signature becomes available only several days or weeks after the worm attacks the network system. Moreover, signature extraction requires expert knowledge.
Thus, network anomaly detection and real-time detection have become great challenges. Network anomaly detection requires profiles of both benign and infected behavior, and it can deal with unknown worms. However, the normal behavior of some applications (e.g., peer-to-peer protocols) is difficult to define and handle, so this approach tends to have a high false detection rate. Recently, it has been reported [2, 3] that the network endpoint is a more efficient place to set up a shield for detecting viruses and worms. The authors in [2] proposed using Kullback-Leibler (K-L) divergence to characterize the frequency of the source and destination ports that worms use to propagate. Most worms spread on a few fixed ports used by vulnerable services.

In this paper, we propose techniques to detect and classify Internet worms using data mining approaches without feature extraction [4]. We consider source ports, destination ports and the protocols through which worms attempt to propagate [2]. The source ports, destination ports and protocols of normal and worm behavior are used to train the data mining models. These models then classify known and unknown worms with a high detection rate and a low false alarm rate. Our approach focuses on detection at the network end-point and needs neither feature extraction nor K-L divergence. On known worms, it achieves a detection rate over 99% with a false alarm rate around 1%. On unknown worms, it achieves a detection rate over 94% with a false alarm rate close to 0%.

The remainder of this paper is organized as follows. In Section 2, we present related approaches to Internet worm detection. In Section 3, we describe the worms used in our experiments and their characteristics. In Section 4, we describe the datasets, and in Section 5, we give details of our detection models. Then, we provide experimental results and conclusions in Sections 6 and 7, respectively.

2. Related Work

Misuse detection is signature-based network intrusion detection, as implemented by systems such as Snort and Bro [5].
Misuse detection must have the signature or pattern of each attack before it can classify data and detect that attack. Thus, the misuse approach cannot detect unknown/new attacks, because it has no information about or experience with them. An Internet worm can propagate very quickly on a network before a human expert can extract its signature. Therefore, misuse detection alone is not sufficient.

Unlike misuse detection, anomaly detection requires a model of normal network behavior, against which anomalous behavior in the network traffic is compared. This approach can detect unknown/new types of worm. However, it is complicated to differentiate normal from abnormal network traffic, and some benign traffic may be falsely classified as an attack. Examples of network activities that are difficult to classify are peer-to-peer protocols and some media applications [2].

Khayam et al. [2] proposed K-L divergence measures to characterize perturbations in the source and destination ports that worms use to propagate. They observed abnormal behavior from the K-L divergence results. They then trained a Support Vector Machine (SVM) on only those fixed ports, using multiple random instances of the benign traffic profile. They obtained good results, with a detection rate over 90% for every end-point and a false alarm rate close to 0. However, they relied on the fixed ports obtained from the K-L divergence results to train and test the SVM. The report in [3] describes the trend of attacks that focus on network end-points and presents efficient solutions for detecting malware at network end-points.

A data mining approach is a good choice for detecting unknown Internet worms. Siddiqui et al. [6] proposed detecting unknown worms by extracting features from clean and infected programs. They built a data mining model by training on these features. Their unknown malware detection reached an overall accuracy around 95.6% with a false positive rate around 3.8%.
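To make the K-L divergence idea of Khayam et al. [2] concrete, the following Python sketch compares the port-frequency distribution of a traffic window against a benign baseline. It is a minimal illustration under our own assumptions, not the procedure of [2]. The helper names `port_distribution` and `kl_divergence`, the smoothing constant `eps` and the example port lists are all hypothetical.

```python
import math
from collections import Counter


def port_distribution(ports, support, eps=1e-6):
    """Relative frequency of each port in `support`, smoothed by `eps`.

    The smoothing (an assumption of this sketch) keeps every probability
    above zero, so the logarithm in the divergence is always defined.
    """
    counts = Counter(ports)
    total = sum(counts[p] for p in support) + eps * len(support)
    return {p: (counts[p] + eps) / total for p in support}


def kl_divergence(p, q):
    """D_KL(P || Q) = sum over x of P(x) * log(P(x) / Q(x)), in nats."""
    return sum(p[x] * math.log(p[x] / q[x]) for x in p)


if __name__ == "__main__":
    # Hypothetical destination ports seen in a benign window, and in a window
    # where an infected host additionally probes TCP port 445 many times.
    benign = [80, 443, 53, 80, 443, 80, 123, 53]
    suspect = benign + [445] * 20
    support = sorted(set(benign) | set(suspect))
    baseline = port_distribution(benign, support)
    print(kl_divergence(port_distribution(suspect, support), baseline))  # large: port mix shifted
    print(kl_divergence(baseline, baseline))  # 0.0: no perturbation
```

A spike in the divergence flags a window whose port mix departs from the baseline. As described above, [2] then trains its SVM on the fixed ports behind such perturbations.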
Other data mining approaches [7] extract features of malware behavior and build a model by training on these features. The authors compared various data mining techniques for detecting unknown worms. Their results showed an average detection rate over 90% and a false alarm rate around 6.67% with n-gram extraction. Although feature extraction gives a high detection rate, in some cases [4] feature extraction such as n-gram extraction takes a long time and consumes a large amount of memory. For example, n-gram extraction alone, with n equal to 1, 2 and 3 using integer arrays, consumed 1KB, 256KB and 64MB of memory, respectively. This figure does not include the memory consumed in building the model.

3. Worm Lists

In this section, we describe the worms used in our experiments. Table 1 shows several characteristics of each worm, including its port profile and the number of scans per second it uses to infect new hosts.

Table 1. Worm Characteristics

| Worm | Scans per second | Ports |
|---|---|---|
| CodeRedII | 4.95 | TCP 80 |
| Zotob.G | 39.34 | TCP 135, 445; UDP 137 |
| SoBig.E | 21.57 | TCP 135; UDP 53 |
| Sdbot-AFR | 28.26 | TCP 445 |
| Rbot-AQJ | 0.68 | TCP 139, 769 |
| Rbot.CCC | 9.7 | TCP 139, 445 |
| Forbot-FU | 32.53 | TCP 445 |
| Blaster | 10.5 | TCP 135, 4444; UDP 69 |

CodeRedII uses a buffer overflow to exploit a vulnerability in Microsoft IIS web servers. After the worm propagates to a host, it launches a DoS attack and provides a backdoor to attackers. The worm then looks for new hosts to infect on TCP port 80.

SoBig.E is attached to email or spam mail from bil@Microsoft.com and support@yahoo.com. If a user opens the attached file, the worm starts its process. It spreads to other hosts on TCP port 135 and UDP port 53.

Zotob.G exploits a buffer overflow vulnerability in MS Windows Plug and Play. It provides backdoors to attackers on TCP ports 135 and 445 and UDP port 137.
Rbot.AQJ provides backdoors and allows attackers to remotely access the vulnerable Windows computer via IRC channels, using TCP ports 139 and 769. Rbot.CCC also provides backdoors and allows attackers to remotely access the vulnerable Windows computer via IRC channels. However, it propagates on ports 139 and 445.

Forbot-FU propagates to other Windows hosts with Trojan/Optix. It exploits a buffer overflow vulnerability in Windows and provides a backdoor to attackers on TCP port 445.

Blaster exploits a buffer overflow vulnerability in DCOM RPC on Windows XP and Windows 2000 by connecting to TCP ports 135 and 4444 and UDP port 69. The worm downloads and executes a copy of itself. It then launches a DoS attack, a SYN flood against destination port 80, to prevent patch updates.

Sdbot-AFR exploits a buffer overflow vulnerability in Windows and, like Forbot-FU, provides a backdoor to attackers on TCP port 445. The two worms differ in their scan rates (Table 1).

4. Data Sets

We use input datasets from [8], which were collected from 13 different network end-points over a 12-month period. Each network end-point behaves differently from the others. Actual worms (i.e., Zotob.G, Forbot-FU, Sdbot-AFR, Blaster, Rbot.CCC and Rbot.AQJ) and a simulated worm (i.e., CodeRedII) were installed on each end host. The datasets were collected using the “argus” program.
Each instance of the dataset has 7 attributes, as follows:
• Session id: 20-byte SHA-1 hash of the concatenated hostname and remote IP address
• Direction: one-byte flag indicating outgoing unicast, incoming unicast, outgoing broadcast or incoming broadcast packets
• Protocol: transport-layer protocol of the packet
• Source port: source port of the packet
• Destination port: destination port of the packet
• Timestamp: millisecond-resolution time of session initiation in UNIX time format
• Virtual key code: one-byte virtual key code that identifies whether the data is normal or worm traffic

The datasets from [8] are separated into several categories: normal data and each type of worm. In addition, the datasets from each end-point are collected into a separate group, so the 13 end-points give 13 groups. An example of the datasets is shown in Table 2.

Table 2. Example of a data profile from [8]

| Session ID | Direction | Protocol | Src Port | Des Port | Time Stamp | Key code |
|---|---|---|---|---|---|---|
| SHA-1 code | 4 | 6 | 8704 | 60419 | 1145362465 | 0 |
| SHA-1 code | 3 | 6 | 80 | 1113 | 1148139379 | d9 |

In Table 2, the Direction column is a one-byte flag represented by an integer: 1 represents “incoming broadcast”, 2 “outgoing broadcast”, 3 “outgoing unicast” and 4 “incoming unicast”. The Protocol column represents the transport-layer protocol as an integer; for example, 6 represents “TCP” and 17 represents “UDP”. The Key code column is a one-byte virtual key code that identifies the data type: “d9” represents worm behavior and all other values represent normal behavior. We selected the datasets from [8] because they were collected from network end-points such as homes, offices and universities, and thus exhibit various behaviors. In addition, some end-points run peer-to-peer applications.
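The record layout and codes above can be illustrated with a short Python sketch. It decodes one row and applies the Worm/Normal relabelling of the key code. We assume each row has already been split into the seven fields of Table 2, since the raw file format of [8] is not described here. `Profile`, `parse_row` and `describe` are hypothetical helpers. Only the listed direction and protocol codes are mapped. Code 3 is read as "outgoing unicast" because 2 already denotes outgoing broadcast and only outgoing unicast remains among the four direction categories.

```python
from dataclasses import dataclass

# Direction and protocol codes as described for the dataset of [8].
# 3 = "outgoing unicast" is inferred: it is the only one of the four
# direction categories not already taken by codes 1, 2 and 4.
DIRECTION = {1: "incoming broadcast", 2: "outgoing broadcast",
             3: "outgoing unicast", 4: "incoming unicast"}
PROTOCOL = {6: "TCP", 17: "UDP"}


@dataclass
class Profile:
    direction: int
    protocol: int
    src_port: int
    dst_port: int
    timestamp: int
    label: str  # "Worm" or "Normal"


def parse_row(fields):
    """Parse [session_id, direction, protocol, src, dst, timestamp, key_code].

    The session id is dropped. Key code "d9" becomes "Worm"; any other
    key code becomes "Normal".
    """
    _session, direction, protocol, src, dst, ts, key = fields
    label = "Worm" if key.strip().lower() == "d9" else "Normal"
    return Profile(int(direction), int(protocol), int(src), int(dst), int(ts), label)


def describe(p):
    """Human-readable summary of one decoded profile."""
    direction = DIRECTION.get(p.direction, f"direction {p.direction}")
    protocol = PROTOCOL.get(p.protocol, f"protocol {p.protocol}")
    return f"{direction} {protocol} {p.src_port}->{p.dst_port} [{p.label}]"


if __name__ == "__main__":
    rows = [["<sha1>", "4", "6", "8704", "60419", "1145362465", "0"],
            ["<sha1>", "3", "6", "80", "1113", "1148139379", "d9"]]
    for row in rows:
        print(describe(parse_row(row)))
    # incoming unicast TCP 8704->60419 [Normal]
    # outgoing unicast TCP 80->1113 [Worm]
```

Dropping the session id mirrors the column selection used for our datasets, where only Direction, Protocol, the two ports, Time Stamp and Key Code are kept.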
Normal-class data use many port numbers. These include well-known ports (0-1023) such as 22, 53, 80, 123, 135, 137, 138, 443, 445, 993 and 995. They also include ports above 1023 (registered ports 1024-49151 and dynamic ports 49152-65535) used by specific applications such as online games and peer-to-peer applications. Moreover, the well-known ports are assigned by the Internet Corporation for Assigned Names and Numbers (ICANN) and are typically usable only by privileged users, whereas the higher ports are not restricted in this way. We downloaded the datasets from the website [8] and selected only the Direction, Protocol, Src Port, Des Port, Time Stamp and Key Code columns for our datasets. In addition, we changed the worm key code from “d9” to “Worm” and the normal data key code to “Normal”. We built datasets for 3 experimental cases by sampling all datasets from the 13 end-points. The preprocessed datasets for each case are explained next.

First case: We make training and testing datasets that contain normal data and all types of worm (i.e., Zotob.G, CodeRedII, Blaster, Rbot.CCC, Rbot.AQJ, Sdbot-AFR, SoBig.E and Forbot-FU). The training dataset has 750 normal profiles and 150 profiles for each worm type. With 8 worm types, the total number of worm profiles used for training is 1200. The testing dataset has 1750 normal profiles and 350 profiles for each worm type. We consider 9 output classes. The classification model is presented in Section 5.

Second case: The training dataset has 750 normal profiles and 1200 worm profiles. The testing dataset has 1750 normal profiles and 2800 worm profiles. The worm class combines all worm types (i.e., Zotob.G, CodeRedII, Blaster, Rbot.CCC, Rbot.AQJ, Sdbot-AFR, SoBig.E and Forbot-FU profiles). These datasets therefore have 2 classes in total: normal and worm.

Third case: We make 2 datasets.
The training dataset is composed of 750 normal profiles and 1200 worm profiles sampled from 7 worm types. The eighth worm type is excluded and used as the unknown worm. The testing dataset is composed of 1750 normal profiles and 2800 worm profiles, including profiles of the unknown/untrained worm type (8 worm types in total). There are 2 output classes: normal and worm. In this case, we perform 8 experiments, considering one unknown worm type at a time. For example, in the first experiment, we make a training dataset without the Blaster worm and then include the Blaster worm in the testing dataset. In the next experiment, a different worm type is excluded from the training dataset but included for testing. After completing these 8 experiments, we average their detection results. The details of the datasets used in our experiments are shown in Table 3.

Table 3. Training and testing datasets

| Case | Training: Normal | Training: Worm | Testing: Normal | Testing: Worm | Output |
|---|---|---|---|---|---|
| 1 | 750 | 150 x 8 | 1750 | 350 x 8 | 9 classes |
| 2 | 750 | 1200 (8 types) | 1750 | 2800 (8 types) | 2 classes |
| 3 (8 experiments) | 750 | 1200 (7 types) | 1750 | 2800 (8 types) | 2 classes |

5. Worm Detection Models

We compare three well-known data mining models for Internet worm detection and classification: C4.5 Decision tree, Random forest and Bayesian network. We train these models to detect and classify worms using port and protocol profiles, as shown in Figure 1.

Figure 1. Our worm detection model

5.1. C4.5 Decision tree

The C4.5 Decision tree algorithm builds a tree using a divide-and-conquer strategy. A Decision tree approximates discrete-valued target functions and can avoid over-fitting on large datasets. The tree is produced from a training/learning dataset and is built from rules created during training. These rules are then used to predict and classify new samples. To classify an unknown instance, the Decision tree starts at the root and traverses to a leaf node, where the classification or prediction result is given.

5.2. Random forest

Random forest is an effective classification tool because it resists over-fitting and runs fast on large datasets with many features. In addition, Random forest is robust to noise. Each tree is built from a sample of the training dataset drawn with replacement; about one third of the data is left out of each sample and not used to train that tree. The model can estimate the importance of the features used in classification, and its trees are grown without pruning from the training dataset.

5.3. Bayesian Network

A Bayesian network combines a graphical model with a probabilistic model. It consists of nodes (states) whose dependencies are quantified by probabilities. The Bayesian network learns causal relations from the training dataset to predict or classify unknown instances. Moreover, it can avoid over-fitting with large data.

We provide solutions to classify various types of worms in the first case, to distinguish known worms from normal data in the second case, and to detect unknown worms in the third case.

First case: To show the detection and classification accuracy, we train our model on a training dataset with 8 types of worms and normal data. The model then classifies the data type, as shown in Figure 2.

Figure 2. Case 1: normal and worm classification

Second case: To distinguish known worms from normal data, we train our model on a combination of the 8 worm types and the normal data. The model then classifies data as normal or worm, as shown in Figure 3.

Third case: To detect unknown worms, we use 2 datasets to train and test our model. The training and testing datasets each have 2 classes in the class attribute: normal and worm. The training dataset contains 7 types of worms and the testing dataset contains 8 types of worms.
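The case 3 protocol, 8 leave-one-worm-out experiments, can be sketched in Python as follows. This is an illustrative sketch, not our experimental code. `leave_one_worm_out` is a hypothetical helper, and profiles can be any hashable records. Table 3 gives only totals, so two details are assumptions. First, the test set takes 350 profiles per worm type (2800 / 8, as in case 1). Second, the 1200 training worm profiles are drawn from the pooled remaining profiles of the 7 known types.

```python
import random

WORMS = ["Zotob.G", "CodeRedII", "Blaster", "Rbot.CCC",
         "Rbot.AQJ", "Sdbot-AFR", "SoBig.E", "Forbot-FU"]


def leave_one_worm_out(pools, unknown, seed=0, n_train_normal=750,
                       n_train_worm=1200, n_test_normal=1750,
                       n_test_per_worm=350):
    """Return (train, test) lists of (profile, label) for one case-3 experiment.

    `pools` maps "Normal" and every name in WORMS to a list of profiles.
    The `unknown` worm type appears only in the test set.
    """
    rng = random.Random(seed)
    # Shuffled copy of every pool, so the slices below are disjoint random samples.
    shuffled = {name: rng.sample(pool, len(pool)) for name, pool in pools.items()}

    normal = shuffled["Normal"]
    if len(normal) < n_train_normal + n_test_normal:
        raise ValueError("not enough normal profiles")
    train = [(p, "Normal") for p in normal[:n_train_normal]]
    test = [(p, "Normal") for p in normal[n_train_normal:n_train_normal + n_test_normal]]

    leftovers = []
    for name in WORMS:
        profiles = shuffled[name]
        # Every worm type, including the unknown one, contributes to the test set.
        test += [(p, "Worm") for p in profiles[:n_test_per_worm]]
        if name != unknown:
            # Only the 7 known types supply training profiles.
            leftovers += profiles[n_test_per_worm:]
    train += [(p, "Worm") for p in rng.sample(leftovers, n_train_worm)]

    rng.shuffle(train)
    rng.shuffle(test)
    return train, test


if __name__ == "__main__":
    # Synthetic pools: each profile is just a (source, index) tuple.
    pools = {"Normal": [("Normal", i) for i in range(3000)]}
    pools.update({w: [(w, i) for i in range(600)] for w in WORMS})
    for unknown in WORMS:  # the 8 experiments of case 3
        train, test = leave_one_worm_out(pools, unknown)
        print(unknown, len(train), len(test))
```

Taking each test slice before drawing training profiles keeps the training and testing sets disjoint. This guarantees that the held-out worm is never seen during training.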
All models are trained with the same training dataset and tested with the same testing dataset.

Figure 3. Cases 2 & 3: worm detection

6. Experimental Results

In our experiments, we measure and compare the performance of each detection and classification model using four performance parameters: True Positive (TP), False Positive (FP), True Negative (TN) and False Negative (FN). These parameters are defined in Table 4 and described as follows:
• True Positive (TP): the algorithm classifies a profile as Worm and the actual data is Worm
• False Positive (FP): the algorithm classifies a profile as Worm but the actual data is Normal
• True Negative (TN): the algorithm classifies a profile as Normal and the actual data is Normal
• False Negative (FN): the algorithm classifies a profile as Normal but the actual data is Worm

Table 4. Performance parameters for classification

| Result \ Actual | Worm | Normal |
|---|---|---|
| Worm | TP: True Positive | FP: False Positive |
| Normal | FN: False Negative | TN: True Negative |

The overall accuracy is

$$\text{Overall accuracy} = \frac{TP + TN}{TP + FP + TN + FN}$$

To calculate the detection rate, let $N_{Worm}$ be the total number of Worm profiles and $N_{Normal}$ the total number of Normal profiles in the testing dataset. Then

$$\text{Detection rate} = \frac{N_{Worm} \times TP + N_{Normal} \times TN}{N_{Worm} + N_{Normal}}$$

Here TP and TN are expressed as rates, as reported in Tables 5 and 6. TP is the fraction of Worm profiles classified as Worm, and TN is the fraction of Normal profiles classified as Normal.

Our models classify and detect known and unknown worms with high detection rates, without feature extraction and without using fixed ports from K-L divergence. The results of cases 1 and 2 are shown in Tables 5 and 6, where the detection rates of all models are over 98%. For the unknown worms in case 3, our algorithms achieve an overall detection rate over 91%. In particular, the Decision tree detects unknown worms with an average detection rate over 97%. Random forest and Bayesian network achieve average detection rates over 96% and 80%, respectively, across all experiments.
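The overall-accuracy and detection-rate formulas can be sketched in Python as below. The helper names are ours. The counts are hypothetical, chosen only to match the test-set sizes in Table 3 (2800 Worm and 1750 Normal profiles). The sketch assumes that overall accuracy is computed on raw counts and the detection rate on per-class rates. It also makes one consequence of the definitions explicit. When TP and TN are rates, $N_{Worm} \times TP$ and $N_{Normal} \times TN$ recover the raw counts of correctly classified profiles, so the detection rate equals the overall accuracy computed on raw counts.

```python
def class_rates(tp, fp, tn, fn):
    """Convert raw confusion-matrix counts into per-class rates (fractions)."""
    n_worm, n_normal = tp + fn, tn + fp
    return {"TP": tp / n_worm, "FN": fn / n_worm,
            "TN": tn / n_normal, "FP": fp / n_normal}


def overall_accuracy(tp, fp, tn, fn):
    """(TP + TN) / (TP + FP + TN + FN), applied here to raw counts."""
    return (tp + tn) / (tp + fp + tn + fn)


def detection_rate(tp_rate, tn_rate, n_worm, n_normal):
    """Class-size-weighted detection rate, with TP and TN given as rates."""
    return (n_worm * tp_rate + n_normal * tn_rate) / (n_worm + n_normal)


if __name__ == "__main__":
    # Hypothetical counts for a test set with 2800 Worm and 1750 Normal profiles.
    tp, fn, tn, fp = 2744, 56, 1729, 21
    r = class_rates(tp, fp, tn, fn)
    dr = detection_rate(r["TP"], r["TN"], tp + fn, tn + fp)
    print(f"TP={r['TP']:.3f} TN={r['TN']:.3f} detection rate={dr:.4f}")
    print(abs(dr - overall_accuracy(tp, fp, tn, fn)) < 1e-12)  # True
```

With rates, FP = 1 - TN and FN = 1 - TP, which matches how the FP and FN columns of Tables 5 and 6 complement the TN and TP columns.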
Table 5. Results of case 1 (worm classification)

| Model | Detection rate (%) | TP (%) | TN (%) | FP (%) | FN (%) |
|---|---|---|---|---|---|
| Bayesian Network | 98.50 | 98.1 | 99.0 | 1.0 | 1.9 |
| Decision tree C4.5 | 99.00 | 99.2 | 99.1 | 0.9 | 0.8 |
| Random forest | 99.50 | 99.6 | 99.2 | 0.8 | 0.4 |

Table 6. Results of case 2 (known worm detection)

| Model | Detection rate (%) | TP (%) | TN (%) | FP (%) | FN (%) |
|---|---|---|---|---|---|
| Bayesian Network | 99.2 | 99.1 | 99.4 | 0.6 | 0.9 |
| Decision tree C4.5 | 98.7 | 98.4 | 98.9 | 1.1 | 1.6 |
| Random forest | 99.0 | 98.4 | 99.3 | 0.7 | 1.6 |

Table 7 presents the detection results for the unknown worms considered in case 3.

Comparing the three approaches, Random forest has the best detection rate in Case 1, while Bayesian network has the highest detection rate in Case 2. In Case 3, Decision tree has the highest detection rate. In this last case, the experiments with Sdbot-AFR and Zotob.G are problematic because these worms have port profiles similar to those in the normal profiles. Bayesian network cannot handle this problem, but Decision tree and Random forest can. Some other worms also have port profiles similar to those in the normal port profiles, but all models handle them. This is shown in Table 7, which gives the detection rate of each model in the 8 experiments. All models except Bayesian network can both classify many types of worms and detect unknown worms using port and protocol profiles at network end-points. In our experiments, training and testing each model took less than 1 second and consumed about 5 MB of memory. This is because our models use only a few attributes and do not use feature extraction.

7. Conclusion

In this paper, we present three simple and efficient data mining techniques for Internet worm detection and classification: Bayesian network, C4.5 Decision tree and Random forest. We also compare the performance of each data mining model in various cases.
We found that all of our models except the Bayesian network model are efficient at detecting unknown worms. They are good candidates for real-time worm detection because the models can be built quickly while consuming little memory, and they use only port and protocol profiles for training and testing. In particular, the Decision tree model is suitable in all the cases we investigated, while the other models are suitable only in some cases. Some worms have port profiles similar to those in the normal data profiles, which may make worm detection difficult. From our experiments, the Bayesian network model is not suitable for unknown worm detection.

Table 7. Results of case 3 (unknown worm detection), detection rate (%)

| Model | CodeRedII | Zotob.G | SoBig.E | Sdbot-AFR | Rbot-AQJ | Rbot.CCC | Forbot-FU | Blaster | Average |
|---|---|---|---|---|---|---|---|---|---|
| Bayesian Network | 96.4 | 40.3 | 95.8 | 38.3 | 94.3 | 99.2 | 98.7 | 81.3 | 80.5 |
| Decision tree C4.5 | 99.3 | 98.6 | 98.8 | 99.0 | 94.4 | 93.8 | 98.8 | 98.2 | 97.6 |
| Random forest | 86.5 | 94.5 | 97.9 | 99.5 | 98.8 | 99.3 | 98.9 | 98.7 | 96.8 |
| Average | 94.1 | 77.8 | 97.5 | 78.9 | 95.8 | 97.4 | 98.8 | 92.7 | 91.6 |

8. References

[1] N. Weaver, V. Paxson, S. Staniford and R. Cunningham, "Taxonomy of Computer Worms," Proc. ACM Workshop on Rapid Malcode (WORM03), 2003, pp. 11-18.
[2] S. A. Khayam, H. Radha and D. Loguinov, "Worm Detection at Network Endpoints Using Information-Theoretic Traffic Perturbations," IEEE International Conference on Communications (ICC), 2008, pp. 1561-1565.
[3] Symantec, "Symantec Internet Security Threat Report XI: Trends for July-December 07," 2007.
[4] O. Sharma, M. Girolami and J. Sventek, "Detecting Worm Variants Using Machine Learning," Proc. ACM CoNEXT Conference, 2007.
[5] C. Smith, A. Matrawy, S. Chow and B. Abdelaziz, "Computer Worms: Architecture, Evasion Strategies, and Detection Mechanisms," Journal of Information Assurance and Security, 2009, pp. 69-83.
[6] M. Siddiqui, M. C. Wang and J. Lee, "Detecting Internet Worms Using Data Mining Techniques," Cybernetics and Information Technologies, Systems and Applications (CITSA), 2008.
[7] X. Wang, W. Yu, A. Champion, X. Fu and D. Xuan, "Detecting Worms via Mining Dynamic Program Execution," Security and Privacy in Communications Networks and the Workshops 2007, Nice, France, June 24, 2008.
[8] Wireless and Secure Networks (WiSNet) Research Lab at the NUST School of Electrical Engineering and Computer Science (SEECS), http://wisnet.seecs.edu.pk/