Towards a balanced social psychology

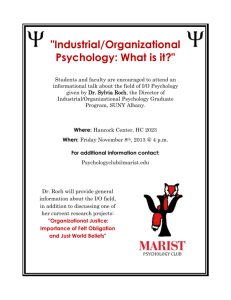
