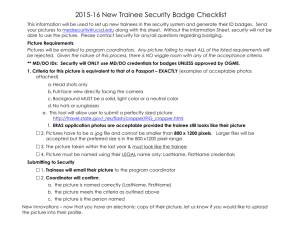

“It’s training wot done it”
