3 - Northumbria University

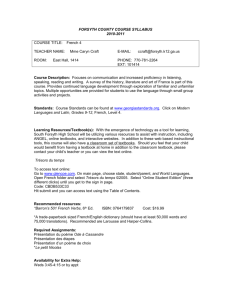
