- Disability Research Institute

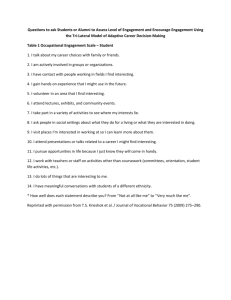
