Types of Research Design - Oncourse


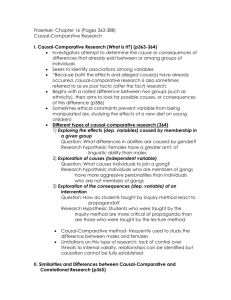

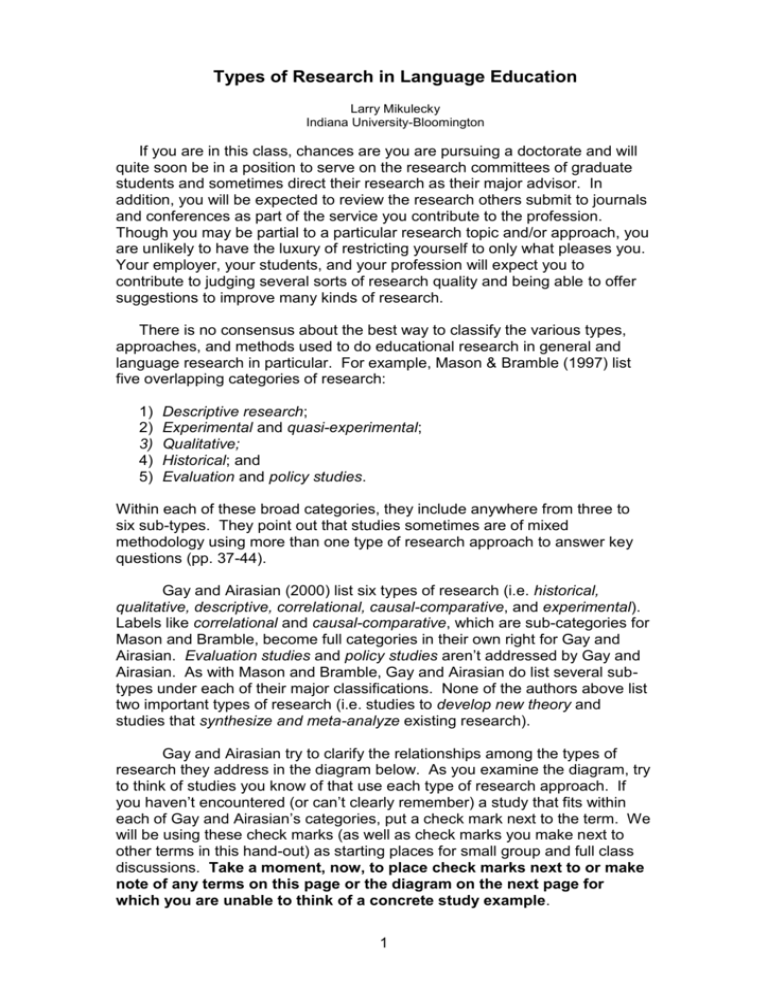

Types of Research in Language Education

Larry Mikulecky
Indiana University-Bloomington

If you are in this class, chances are you are pursuing a doctorate and will quite soon be in a position to serve on the research committees of graduate students and sometimes direct their research as their major advisor. In addition, you will be expected to review the research others submit to journals and conferences as part of the service you contribute to the profession. Though you may be partial to a particular research topic and/or approach, you are unlikely to have the luxury of restricting yourself to only what pleases you. Your employer, your students, and your profession will expect you to judge the quality of several sorts of research and to offer suggestions for improving many kinds of research.

There is no consensus about the best way to classify the various types, approaches, and methods used to do educational research in general and language research in particular. For example, Mason & Bramble (1997) list five overlapping categories of research:

1) Descriptive research;
2) Experimental and quasi-experimental;
3) Qualitative;
4) Historical; and
5) Evaluation and policy studies.

Within each of these broad categories, they include anywhere from three to six sub-types. They point out that studies are sometimes of mixed methodology, using more than one type of research approach to answer key questions (pp. 37-44).

Gay and Airasian (2000) list six types of research (i.e. historical, qualitative, descriptive, correlational, causal-comparative, and experimental). Labels like correlational and causal-comparative, which are sub-categories for Mason and Bramble, become full categories in their own right for Gay and Airasian. Evaluation studies and policy studies aren’t addressed by Gay and Airasian. Like Mason and Bramble, Gay and Airasian list several subtypes under each of their major classifications.
None of the authors above lists two important types of research (i.e. studies to develop new theory and studies that synthesize and meta-analyze existing research).

Gay and Airasian try to clarify the relationships among the types of research they address in the diagram below. As you examine the diagram, try to think of studies you know of that use each type of research approach. If you haven’t encountered (or can’t clearly remember) a study that fits within each of Gay and Airasian’s categories, put a check mark next to the term. We will be using these check marks (as well as check marks you make next to other terms in this hand-out) as starting places for small group and full class discussions. Take a moment, now, to place check marks next to or make note of any terms on this page or the diagram on the next page for which you are unable to think of a concrete study example.

Sutter (1998) takes another approach to describing the various sorts of educational research when he writes:

“Labelling educational research as a “type” is not as important as understanding the implications of a study’s most distinctive features. I believe that describing the distinctive features of educational research and thus avoiding contrived artificial typologies, captures the complexity and does not place imposing restraints on researchable questions.” (p. 86).

He goes on to suggest that there are six distinguishing features that should be taken into account. These are:

1) Quantitative versus Qualitative,
2) Descriptive versus Inferential,
3) True Experiment versus Quasi-experimental,
4) Causal Comparative versus Correlational,
5) Single-Subject versus Group, and
6) Teacher (sometimes called action research) versus Traditional.

Sutter’s distinguishing characteristics cover the categories and subcategories of the authors discussed earlier. They also introduce a new research type (i.e. teacher or action research).
In this course and throughout your professional life you will be expected to read critically and provide feedback about all sorts of research. As you do this, three questions remain central. These are:

1) What kind of evidence is sufficient and can be trusted for this kind of study?
2) How far can one extend beyond the evidence in this study in drawing conclusions and making recommendations?
3) Does this study link to previous research and push the edge of knowledge?

Throughout this semester, you will be examining several sorts of research and providing feedback as if you had already completed your doctorate. This will occur in several ways.

1) After seeing modelling in class of how to review manuscripts, you will review manuscripts as if you were a journal reviewer or a faculty member providing professional feedback on the research of a student or colleague. Some of the material you will review is already published and some is in manuscript form and hasn’t yet been published.
2) You will compare the strengths and weaknesses you identify in your reviews with those identified by other students and also by actual journal reviewers. In addition, you (with 1-2 other students) will both present someone else’s doctoral dissertation to the class and sometimes function as a review committee, asking questions about the dissertation and making suggestions.
3) You will design a small pilot study that may serve as a steppingstone to your dissertation. This study should link to and go beyond existing research. Examples are: A) developing and trying out a means for gathering evidence; B) doing some initial analysis of data to see if full analysis is warranted; or C) identifying where the weaknesses are in previous studies and suggesting ways to overcome these weaknesses. You will be presenting the results of your pilot study to a small group of your colleagues in this course during the last two class sessions.
Glossary of Some Key Research Terms*

Action research/Teacher research: These studies usually involve a teacher gathering evidence to study her or his own teaching, to improve skills, or to understand the learning process of students. Such research is mainly for the teacher’s own use, though it can be more widely useful if it connects to previous research and extends our conceptual knowledge. This research is sometimes criticized for having inadequate research design and lacking trustworthy evidence.

Case Study: These studies involve collecting data from several sources over time, generally from only one or a small number of cases. They usually provide rich detail about those cases, of a predominantly qualitative nature.

Causal-comparative research: These studies attempt to establish cause-effect relationships among the variables of the study. The attempt is to establish that values of the independent variable have a significant effect on the dependent variable (the outcome variable of the study). This type of research usually involves group comparisons. For example, a causal-comparative study might attempt to identify factors related to the drop-out problem in a particular high school using data from records over the past ten years, or to investigate similarities and differences between such groups as smokers and nonsmokers, readers and nonreaders, or delinquents and non-delinquents, using available data. In causal-comparative research the independent variable is not under the experimenter’s control; that is, the experimenter can't randomly assign the subjects to a group, but has to take the values of the independent variable as they come.

Correlational research: These studies attempt to determine whether, and to what degree, a relationship exists between two or more quantifiable (numerical) variables.
However, it is important to remember that just because there is a significant relationship between two variables it does not follow that one variable causes the other. When two variables are correlated you can use the relationship to predict a subject's value on one variable if you know that subject's value on the other variable. Correlation implies prediction but not causation. The investigator frequently uses the correlation coefficient to report the results of correlational research. Multiple regression studies determine the ability of several different variables, taken together and in various sequences, to predict a new variable (e.g. study time, quality of instruction, and home background used to predict language learning gains).

Descriptive research: These studies involve collecting data in order to test hypotheses or answer questions regarding the subjects of the study. In contrast with the qualitative approach, the data are numerical. The data are typically collected through a questionnaire, an interview, or through observation. In descriptive research, the investigator reports the numerical results for one or more variables on the subjects of the study. Examples include public opinion surveys, fact-finding surveys, job descriptions, surveys of the literature, documentary analyses, anecdotal records, critical incident reports, test score analyses, normative data, and descriptions of the type and age of computers in rural schools. Policy makers often rely on this type of research to inform and justify their decisions.

* Many of these definitions draw upon course material developed by Jonathan Plucker at Indiana University and John Wasson at Morehead State University.

Evaluation: This might be an evaluation of a curriculum innovation or organisational change. An evaluation can be formative (designed to inform the process of development) or summative (to judge the effects). Often an evaluation will have elements of both.
If an evaluation relates to a situation in which the researcher is also a participant, it may be described as “teacher research” or “action research”. Evaluations will often make use of case study and survey methods, and a summative evaluation will ideally also use experimental methods. For an evaluation to “push the edges of knowledge” it must go beyond “Did this work when we tried it?” to provide information that extends our knowledge of “why” and “how” something worked.

Experimental Research: These studies investigate possible cause-and-effect relationships by exposing one or more experimental groups to one or more treatment conditions and comparing the results to one or more control groups not receiving the treatment (random assignment being essential). Issues of generalisability (often called ‘external validity’) are usually important in an experiment, so the same attention must be given to sampling, response rates and instrumentation as in a survey (see below). It is also important to establish causality (‘internal validity’) by demonstrating the initial equivalence of the groups (or attempting to make suitable allowances), presenting evidence about how the different interventions were actually implemented, and attempting to rule out any other factors that might have influenced the result. In education, these studies are difficult to implement because of logistical problems and sometimes ethical problems.

Qualitative approach: Qualitative approaches involve the collection of extensive narrative data in order to gain insights into phenomena of interest. Data analysis includes the coding of the data and production of a verbal synthesis (inductive process). Trustworthiness is usually achieved by supporting conclusions with information triangulated from several different sources and/or the same source over time. Inter-rater agreement about what goes into qualitative categories is usually called for.
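
As an illustration of how inter-rater agreement might be checked, the sketch below compares the category codes two raters assigned to the same ten excerpts. It reports simple percent agreement and Cohen's kappa, one common chance-corrected statistic; the glossary itself does not prescribe a particular statistic, so the choice of kappa, the function name, the category labels, and the data are all assumptions made up for this example.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Return (observed agreement, Cohen's kappa) for two raters.

    Each rater assigns exactly one category to each item, and both lists
    must cover the same items in the same order.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must code the same non-empty set of items.")
    n = len(rater_a)

    # Proportion of items on which the two raters chose the same category.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Agreement expected by chance, from each rater's category frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if expected == 1.0:
        # Both raters used a single identical category for every item.
        return observed, 1.0
    return observed, (observed - expected) / (1 - expected)


# Hypothetical codes for ten classroom-talk excerpts (illustrative only).
rater_a = ["question", "feedback", "question", "praise", "feedback",
           "question", "praise", "feedback", "question", "feedback"]
rater_b = ["question", "feedback", "question", "praise", "question",
           "question", "praise", "feedback", "feedback", "feedback"]

agreement, kappa = cohens_kappa(rater_a, rater_b)
print(f"Percent agreement: {agreement:.2f}")
print(f"Cohen's kappa:     {kappa:.2f}")
```

With these made-up codes the raters agree on eight of ten excerpts, but kappa is lower than the raw agreement because some of that agreement would be expected by chance alone.
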

Quantitative approach: Quantitative approaches involve the collection of numerical data in order to explain, predict, and/or control phenomena of interest. Data analysis is mainly statistical (deductive process). Trustworthiness is usually achieved by establishing several sorts of validity and reliability for the measures being used to create the numerical data.

Quasi-experimental research: These studies are not able to make use of random assignment, though control groups are usually expected. Attempts are made to demonstrate that the treatment and control groups are somewhat equivalent and to statistically account for beginning differences if the groups aren’t equivalent. Less credible quasi-experiments do not include control groups and simply document change for participants receiving a particular treatment. As with true experiments, there is an expectation that acceptable validity and reliability have been established for the information collected.

Research synthesis and meta-analysis: A research synthesis is an attempt to summarise or comment on what is already known about a particular topic. The researcher brings different sources together, synthesising and analysing critically, thereby creating new knowledge (i.e. the studies together tell us more than individual studies alone). High quality studies go to great lengths to ensure that all relevant sources (whether published or not) have been included. Details of the search strategies used and the criteria for inclusion must be made clear. A systematic review will often make a quantitative synthesis of the results of all the studies, for example by meta-analysis (i.e. using statistical techniques to compile data from similar studies).

Survey: An empirical study that involves collecting information from a larger number of cases, perhaps using questionnaires. A survey might also make use of already available data, collected for another purpose.
A survey may be cross-sectional (data collected at one time) or longitudinal (collected over a period). Because of the larger number of cases, a survey will generally involve some quantitative analysis. Issues of generalisability are usually important in presenting survey results, so it is vital to report how samples were chosen and what response rates were achieved, and to comment on the validity and reliability of any instruments used.

References

Gay, L. & Airasian, P. (2000). Educational Research: Competencies for Analysis and Application, Sixth Edition. Merrill/Prentice Hall.
Mason, E. & Bramble, W. (1997). Research in Education and the Behavioral Sciences. Madison, WI: Brown and Benchmark.
Sutter, W. N. (1998). Primer of Educational Research. Boston, MA: Allyn and Bacon.