Multiple Regression (I)

advertisement

Multiple Regression (I)

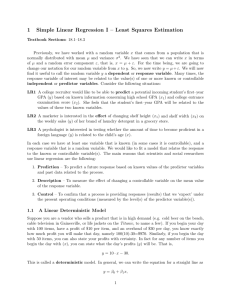

6.1 Multiple Regression Models

Let Y = (Y1 , · · · , Yn ) be the response (or dependent) variable. Let Xj = (X1j , · · · , Xnj ) for

j = 1, · · · , p − 1 be the predictor (or covariate, independent)variable. In a multiple regression

model, we assume

• The relation between response and predictor variables is linear.

• Predictor variables are not random.

• Response variable is random.

• The error term is identically independently normally distributed (iid).

• The expected value of the the error term is 0.

The model can be written as

Yi =β0 + β1 Xi1 + β2 Xi2 + · · · + βp Xi,p−1 + ϵi

=β0 +

p−1

∑

(1)

Xij βj + ϵi

j=1

where ϵi ∼iid N (0, σ 2 ).

Model (1) can include:

• Categorical predictor variables. A dummy variable may be defined as

{

1 if the i-th observation is female

.

0 if the i-th observation if male

We need to use k − 1 dummy variables if a categorical predictor variable has k levels.

Xij =

• Ordinal predictor variables. If the level of a categorical predictor variable can be rank,

we can generally define the value of a predictor variable from 1 to k for low level to high

level of the predictor variable.

• Polynomial regression. The model can be written as

2

Yi =β0 + β1 Xi1 + β2 Xi1

+ ϵi

=β0 + β1 Zi1 + β2 Zi2 + ϵi .

• Transformation. The model may also be the form of

log Yi = β0 +

p−1

∑

βj Xij + ϵi .

j=1

The Box-Cox transformation may also be used as

p−1

∑

Yiλ − 1

= β0 +

βj Xij + ϵi ,

λ

j=1

where the conventional values of λ are λ = 2, 1, 0.5, 0, −0.5, −1.0, −2.

1

• Interaction effects. The model may also be

Yi =β0 + β1 Xi1 + β2 Xi2 + β3 Xi1 Xi2 + ϵi .

• Combination of Cases. A model may contain the combination of the above cases.

6.2-6.6 General Linear Regression Model in Matrix Terms

Let

1 X11

Y1

1 X21

Y2

Y =

..

.. , X =

..

.

.

.

Yn

1 Xn1

···

···

..

.

X1,p−1

ϵ1

X2,p−1

ϵ2

, and ϵ = ..

..

.

.

.

· · · Xn,p−1

(2)

ϵn

Then, the matrix expression of the model is

Y = Xβ + ϵ,

where Y is an n-dimensional random vector, X is an n × p matrix, β is a p-dimension parameter

defined in (2), ϵ ∼ N (0, σ 2 I) is an error term and σ 2 is an unknown parameter. Assume the rank

of X is p.

We have shown

β̂ = (X ′ X)−1 X ′ Y.

Thus,

E(β̂) = E[(X ′ X)−1 X ′ Y ] = (X ′ X)−1 X ′ E(Y ) = (X ′ X)−1 X ′ (Xβ) = β,

and

Cov(β̂, β̂) = (X ′ X)−1 X ′ Cov(Y, Y )X(X ′ X)−1 = σ 2 (X ′ X)−1 .

The model residual is

ei = Yi − Ŷi = Yi − (β̂0 +

p−1

∑

β̂j Xij ).

i=1

Let e = (e1 , · · · , en )′ . Then

e = [I − X(X ′ X)−1 X ′ ]Y = (I − H)Y,

where H = X(X ′ X)−1 X ′ .

The estimator of σ 2 is

σ̂ 2 =

SSE

1

=

Y ′ [I − X(X ′ X)−1 X ′ ]Y,

n−p

n−p

where

SSE =

n

∑

(Ŷi − Yi )2 = Y [I − X(X ′ X)−1 X ′ ]Y.

i=1

2

Comment: σ̂ is not an MLE of σ 2 , but it is UMVU(Uniform Minimim Variance Unbiased)

estimator. The MLE is SSE/n.

The Analysis of Variable Table may be given as

2

Source

df

SS

Regression p − 1 SSR

Error

n − p SSE

Total

n−1

MS

M SR =

M SE =

F-value

p-value

MSR/MSE P [Fp−1,n−p > M SR/M SE]

SSR

p−1

SSE

n−p

SST

where SSR may be partitioned. The p-value tests

H0 : β1 = · · · = βp−1 = 0.

In addition, we can use the

SSR

SSE

=1−

.

SST

SST

The value of R2 tell us how much variation of the data can be interpreted by the linear function.

Its value is always between 0 and 1. If the value is large, then more variation is interpreted by the

linear function.

There is an adjusted R2 given by

R2 =

n−1

(1 − R2 )

n−p

2

Radj

=1−

which can be used the same way as R2 .

We have the following important properties:

•

E(β̂) = β

•

β̂j − βj

s(β̂j )

∼ tn−p .

Therefore, the (1 − α)-level confidence interval for βj is

β̂j ± tα/2,n−p s(β̂j ).

To test

H0 : βj = 0,

we can look at the p-value of βj given by

P [|tn−p | > |

β̂j

s(β̂j )

|].

If p-value is small, then we reject H0 .

• To compute the joint confidence interval of (βj1 , · · · , βjk ) , we can use the Bonferroni interval

as

α

β̂j ± t 2k

,n−p s(β̂j ).

3

•

SSE ∼ σ 2 χ2n−p .

Therefore, the (1 − α)-level confidence interval for σ 2 is

[

SSE

χ2α/2,n−p

,

SSE

χ21−α/2,n−p

].

6.7 Estimation of Mean Response and Prediction of New Observation

Let x0 = (x01 , · · · , x0,p−1 ) be a new observation of predictor variables. Then, the prediction of

the response is

ŷ0 = β̂0 +

p−1

∑

β̂j x0j .

j=1

The variance of the mean of the response is

1

′

−1 x01

(1,

x

,

·

·

·

,

x

)(X

X)

V (ŷ0 ) = SSE

01

0,p−1

.. .

.

x0,p−1

The 1 − α level confidence interval for the mean response is

√

ŷ0 ± tα/2,n−p V (ŷ0 )

and the 1 − α level confidence interval for the prediction of the observed value is

√

ŷ0 ± tα/2,n−p V (ŷ0 ) + M SE.

6.8 Diagnostics and Remedial Measures

We have the following methods.

• Scatter plot: plot response vs predictor variables.

• Residual plot: plot residual vs predicted values.

• Normal plot: plot normal CDF vs residuals

• Test for constancy of error variance.

– Modified Levene test. Divide residual into two groups with n1 and n2 observations,

respectively, where n = n1 + n2 . Let ẽ1 and ẽ2 be the medians of the residuals in the

two groups. Let

di1 = |ei1 − ẽ1 |, di2 = |ei2 − ẽ2 |.

Use the pooled t-test statistic

d¯1 − d¯2

,

t∗L = √

s 1/n1 + 1/n2

where

∑

∑

(di1 − d¯1 )2 + (di2 − d¯2 )2

s =

.

n−2

We reject constancy of variance if |t∗L | > tα/2,n−2 .

2

4

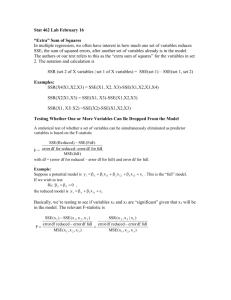

Table 1: Model Summaries for Dwaine Studio Example, SST = 26196.

Model

S=N

S=I

S=I+N

R2

0.8922

0.6986

0.9167

SSE

2824.40

7896.43

2180.92

Intercept is always included in the model.

– Breusch-Pagan test. Fit a model

log e2i = γ0 + γ1 Xi

and test H0 : γ1 = 0.

• F-test for lack of fit. The method is useful in ANOVA model because it needs replicated

values of independent variables.

6.9 Examples-Multiple Regression with Two Predictor Variables

Example of the textbook. The example is from Section 6.9 in the textbook. In this example,

Dwaine Studio company operates portrait studios in 21 cities of medium size. The studios specialize in portraits of children. It is considered an expansion and wish to investigate whether the

sales(S)(in thousand dollars) in a community can be predicted from the number of persons(N)(in

thousand persons) aged 10 or younger and the per capita disposable personal income(I)(in thousand dollars) in the community. The summary of the SAS output are given in Table 1.

The plots do not show us any significantly abnormal. In the model S = I +N , all the predictors

are significant (p-values less than 0.05). Thus the regression model S = I + N is the final fitted

model.

5