C. Pang's Elementary Statistics Notes & Worksheets

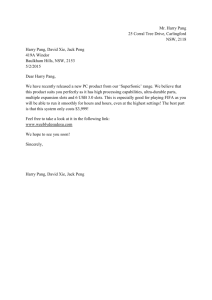

advertisement