Cholesky-Based Reduced-Rank Square

advertisement

Cholesky-Based Reduced-Rank Square-Root Kalman Filtering

J. Chandrasekar, I. S. Kim, D. S. Bernstein, and A. Ridley

Abstract— We consider a reduced-rank square-root Kalman

filter based on the Cholesky decomposition of the state-error

covariance. We compare the performance of this filter with

the reduced-rank square-root filter based on the singular value

decomposition.

I. I NTRODUCTION

The problem of state estimation for large-scale systems has

gained increasing attention due to computationally intensive

applications such as weather forecasting [1], where state

estimation is commonly referred to as data assimilation.

For these problems, there is a need for algorithms that are

computationally tractable despite the enormous dimension

of the state. These problems also typically entail nonlinear

dynamics and model uncertainty [2], although these issues

are outside the scope of this paper.

One approach to obtaining more tractable algorithms is

to consider reduced-order Kalman filters. These reducedcomplexity filters provide state estimates that are suboptimal

relative to the classical Kalman filter [3–7]. Alternative

reduced-order variants of the classical Kalman filter have

been developed for computationally demanding applications

[8–11], where the classical Kalman filter gain and covariance

are modified so as to reduce the computational requirements.

A comparison of several techniques is given in [12].

A widely studied technique for reducing the computational

requirements of the Kalman filter for large scale systems is

the reduced-rank filter [13–16]. In this method, the errorcovariance matrix is factored to obtain a square root, whose

rank is then reduced through truncation. This factorizationand-truncation method has direct application to the problem

of generating a reduced ensemble for use in particle filter

methods [17, 18].

Reduced-rank filters are closely related to the classical

factorization techniques [19, 20], which provide numerical

stability and computational efficiency, as well as a starting

point for reduced-rank approximation.

The primary technique for truncating the error-covariance

matrix is the singular value decomposition (SVD) [13–18],

wherein the singular values provide guidance as to which

components of the error covariance are most relevant to the

accuracy of the state estimates. Approximation based on the

SVD is largely motivated by the fact that error-covariance

This research was supported by the National Science Foundation, under

grants CNS-0539053 and ATM-0325332 to the University of Michigan, Ann

Arbor, USA.

J. Chandrasekar, I. S. Kim, and D. S. Bernstein are with Department of

Aerospace Engineering, and A. Ridley is with Department of Atmospheric,

Oceanic and Space Sciences, University of Michigan, Ann Arbor, MI,

dsbaero@umich.edu

truncation is optimal with respect to approximation in unitarily invariant norms, such as the Frobenius norm. Despite

this theoretical grounding, there appear to be no theoretical

criteria to support the optimality of approximation based on

the SVD within the context of recursive state estimation. The

difficult is due to the fact that optimal approximation depends

on the dynamics and measurement maps in addition to the

components of the error covariance.

In the present paper we begin by observing that the

Kalman filter update depends on the combination of terms

Ck Pk , where Ck is the measurement map and Pk is the error

covariance. This observation suggests that approximation of

Ck Pk may be more suitable than approximation based on

Pk alone.

To develop this idea, we show that approximation of Ck Pk

leads directly to truncation based on the Cholesky decomposition. Unlike the SVD, however, the Cholesky decomposition does not possess a natural measure of magnitude that is

analogous to the singular values arising in the SVD. Nevertheless, filter reduction based on the Cholesky decomposition

provides state-estimation accuracy that is competitive with,

and in many cases superior to, that of the SVD. In particular,

we show that, in special cases, the accuracy of the Choleskydecomposition-based reduced-rank filter is equal to the accuracy of the full-rank filter, and we demonstrate examples

for which the Cholesky-decomposition-based reduced-rank

filter provides acceptable accuracy, whereas the SVD-based

reduced-rank filter provides arbitrarily poor accuracy.

A fortuitous advantage of using the Cholesky decomposition in place of the SVD is the fact that the Cholesky

decomposition is computationally less expensive than the

SVD, specifically, O(n3 /6) [21], and thus an asymptotic

computational advantage over SVD by a factor of 12. An

additional advantage is that the entire matrix need not be

factored; instead, by arranging the states so that those states

that contribute directly to the measurement correspond to

the initial columns of the lower triangular square root, then

only the leading submatrix of the error covariance must

be factored, yielding yet further savings over the SVD.

Once the factorization is performed, the algorithm effectively

retains only the initial “tall” columns of the full Cholesky

factorization and truncates the “short” columns.

II. T HE K ALMAN

FILTER

Consider the time-varying discrete-time system

xk+1 = Ak xk + Gk wk ,

(2.1)

yk = Ck xk + Hk vk ,

(2.2)

where xk ∈ Rnk , wk ∈ Rdw , yk ∈ Rpk , vk ∈ Rdv , and

Ak , Gk , Ck , and Hk are known real matrices of appropriate

sizes. We assume that wk and vk are zero-mean white

processes with unit covariances. Define Qk , Gk GT

k and

Rk , Hk HkT , and assume that Rk is positive definite for

all k > 0. Furthermore, we assume that wk and vk are

uncorrelated for all k > 0. The objective is to obtain an

estimate of the state xk using the measurements yk .

The Kalman filter provides the optimal minimum-variance

estimate of the state xk . The Kalman filter can be expressed

in two steps, namely, the data assimilation step, where the

measurements are used to update the states, and the forecast

step, which uses the model. These steps can be summarized

as follows:

Data Assimilation Step

Kk = Pkf CkT (Ck Pkf CkT + Rk )−1 ,

Pkda

xda

k

=

=

Pkf

xfk

− Pkf CkT (Ck Pkf CkT +

+ Kk (yk − Ck xfk ).

(2.3)

−1

Rk )

Ck Pkf ,

(2.4)

(2.5)

Forecast Step

xfk+1 = Ak xda

k ,

f

Pk+1

Pkf

=

Ak Pkda AT

k

n×n

The matrices

∈ R

and

error covariances, that is,

Pkda

(2.6)

+ Qk .

∈ R

n×n

(2.7)

are the state-

da T

Pkf = E[efk (efk )T ], Pkda = E[eda

k (ek ) ],

given by

P = Un Σn UnT ,

where Un is orthogonal. For q 6 n, let ΦSVD (P, q) ∈ Rn×q

denote the SVD-based rank-q approximation of the square

1/2

root Σq of P given by

ΦSVD (P, q) , Uq Σq1/2 .

The following standard result shows that SS , where S ,

ΦSVD (P, q), is the best rank-q approximation of P in the

Frobenius norm.

Lemma III.1. Let P ∈ Rn×n be positive semidefinite,

and let σ1 · · · > σn be the singular values of P . If S =

ΦSVD (P, q), then

min

rank(P̂ )=q

2

kP − P̂ k2F = kP − SS T k2F = σq+1

+ · · · + σn2 . (3.4)

The data assimilation and forecast steps of the SVD-based

rank-q square-root filter are given by the following steps:

Data Assimilation step

−1

f

f

,

(3.5)

CkT + Rk

Ks,k = P̂s,k

CkT Ck P̂s,k

−1

da

f

f

P̃s,k

= P̂kf − P̂kf CkT Ck P̂s,k

CkT + Rk

Ck P̂s,k

, (3.6)

f

f

xda

s,k = xs,k + Ks,k (yk − Ck xs,k ),

In the following sections, we consider reduced-rank

square-root filters that propagate approximations of a squareroot of the error covariance instead of the actual error

covariance.

III. SVD-BASED R EDUCED -R ANK S QUARE -ROOT

F ILTER

Note that the Kalman filter uses the error covariances

Pkda and Pkf , which are updated using (2.4) and (2.7). For

computational efficiency, we construct a suboptimal filter that

uses reduced-rank approximations of the error covariances

Pkda and Pkf . Specifically, we consider reduced-rank approximations P̂kda and P̂kf of the error covariances Pkda and Pkf

such that kPkda − P̂kda kF and kPkf − P̂kf kF are minimized,

where k · kF is the Frobenius norm. To achieve this approximation, we compute singular value decompositions of the

error covariances at each time step.

Let P ∈ Rn×n be positive semidefinite, let σ1 > · · · > σn

be the singular values of P , and let u1 , . . . , un ∈ Rn be

corresponding orthonormal eigenvectors. Next, define Uq ∈

Rn×q and Σq ∈ Rq×q by

σ1

..

U q , u 1 · · · u q , Σq ,

. (3.1)

.

σq

With this notation, the singular value decomposition of P is

(3.7)

where

(2.8)

(2.9)

(3.3)

T

where

da

efk , xk − xfk , eda

k , xk − xk .

(3.2)

f

f

S̃s,k

, ΦSVD (P̃s,k

, q),

(3.8)

f

P̂s,k

(3.9)

,

f

f

S̃s,k

(S̃s,k

)T .

Forecast step

xfs,k+1 = Ak xda

s,k ,

f

P̃s,k+1

=

da T

Ak P̂s,k

Ak

(3.10)

+ Qk ,

(3.11)

where

da

da

S̃s,k

, ΦSVD (P̃s,k

, q),

(3.12)

da

P̂s,k

(3.13)

,

da

da T

S̃s,k

(S̃s,k

) ,

f

P̃s,0

and

is positive semidefinite.

Next, define the forecast and data assimilation error cof

da

variances Ps,k

and Ps,k

of the SVD-based rank-q square-root

filter by

f

Ps,k

, E (xk − xfs,k )(xk − xfs,k )T ,

(3.14)

da

da

da T

Ps,k , E (xk − xs,k )(xk − xs,k ) .

(3.15)

Using (2.1), (3.7) and (3.10), it can be shown that

da

f

T

Ps,k

= (I − Ks,k C)Ps,k

(I − Ks,k C)T + Ks,k Rk Ks,k

, (3.16)

f

da T

Ps,k+1

= Ak Ps,k

Ak + Qk .

(3.17)

T

T

f

f

f

da

da

da

Note that S̃s,k

S̃s,k

6 P̃s,k

and S̃s,k

S̃s,k

6 P̃s,k

.

f

f

Hence, even if P̃s,0

= Ps,0

, it does not necesarily follow that

f

f

da

da

P̃s,k = Ps,k and P̃s,k = Ps,k

for all k > 0. Therefore, since

f

Ks,k does not use the true error covariance Ps,k

, the SVDbased rank-q square-root filter is generally not equivalent

to the Kalman filter. However, under certain conditions,

the SVD-based rank-q square-root filter is equivalent to the

Kalman filter. Specifically, we have the following result.

f

f

Proposition III.1. Assume that P̃s,k

= Ps,k

and

f

da

da

f

f

rank(Ps,k ) 6 q. Then, P̃s,k = Ps,k and P̃s,k+1 = Ps,k+1 . If,

f

f

f

in addition, Ps,k

= Pkf , then Ks,k = Kk and Ps,k+1

= Pk+1

.

Proof. Since rank(P̃kf ) 6 q, it follows from Lemma III.1

that

T

f

f

f

f

S̃s,k

= P̃s,k

.

(3.18)

P̂s,k

= S̃s,k

Hence, it follows from (3.5) that

f

f

CkT + Rk

Ks,k = Ps,k

CkT Ck Ps,k

while substituting (3.18) into (3.6) yields

−1

,

(3.19)

da

f

f

f

f

P̃s,k

= P̃s,k

− P̃s,k

CkT (Ck P̃s,k

CkT + Rk )−1 Ck P̃s,k

. (3.20)

f

f

Next, substituting (3.19) into (3.16) and using P̃s,k

= Ps,k

in the resulting expression yields

da

da

P̃s,k

= Ps,k

.

(3.21)

f

Since rank(P̃s,k

)

da

rank(P̃s,k ) 6 q, and

da

P̂s,k

6 q, it follows from (3.20) that

therefore Lemma III.1 implies that

T

da

da

da

= S̃s,k

S̃s,k

= P̃s,k

.

(3.22)

Hence, it follows from (3.11), (3.17), and (3.21) that

f

f

P̃s,k+1

= Ps,k+1

.

(3.23)

Finally, it follows from (2.3) and (3.19) that

Ks,k = Kk .

(3.24)

Therefore, (2.4) and (3.16) imply that

da

Ps,k

=

Pkda .

(3.25)

Hence, it follows from (2.7), (3.17) and (3.25) that

f

f

Ps,k+1

= Pk+1

.

f

f

Corollary III.1. Assume that xfs,0 = xf0 , P̃s,0

= Ps,0

, and

rank(P0f ) 6 q. Furthermore, assume that, for all k > 0,

rank(Ak ) + rank(Qk ) 6 q. Then, for all k > 0, Ks,k = Kk

and xfs,k = xfk .

xfs,0

Since P ∈ Rn×n is positive semidefinite, the Cholesky

decomposition yields a lower triangular Cholesky factor L ∈

Rn×n of P that satisfies

LLT = P.

Partition L as

L=

IV. C HOLESKY-D ECOMPOSITION -BASED

R EDUCED -R ANK S QUARE -ROOT F ILTER

The Kalman filter gain Kk depends on a particular subspace of the range of the error covariance. Specifically,

Kk depends only on Ck Pkf and not on the entire error

covariance. We thus consider a filter that uses reduced-rank

approximations P̂kda and P̂kf of the error covariances Pkda

and Pkf such that kCk (Pkda − P̂kda )kF and kCk (Pkf − P̂kf )kF

are minimized. To achieve this minimization, we compute a

Cholesky decomposition of both error covariances at each

time step.

L1

···

Ln

n

,

where, for i = 1, . . . , n, Li ∈ R has real entries

T

Li = 01×(i−1) Li,1 · · · Li,n−i+1

.

(4.2)

(4.3)

Truncating the last n − q columns of L yields the reducedrank Cholesky factor

ΦCHOL (P, q) , L1 · · · Lq ∈ Rn×q .

(4.4)

Lemma IV.1. Let P ∈ Rn×n be positive definite, define

S , ΦCHOL (P, q) and P̂ , SS T , and partition P and P̂

as

P1

P12

P̂1

P̂12

, (4.5)

P =

, P̂ =

(P12 )T P2

(P̂12 ) P̂2

where P1 , P̂1 ∈ Rq×q and P2 , P̂2 ∈ Rr×r . Then, P̂1 = P1

and P̂12 = P12 .

Proof. Let L be the Cholesky factor of P . It follows from

(4.3) that Li LT

∈ Rn has the structure

i

0i−1

0(i−1)×(n−i+1)

Li LT

=

,

(4.6)

i

0(n−i+1)×(i−1)

Xi

where Xi ∈ R(n−i+1)×(n−i+1) . Therefore,

n

X

0q×q 0q×r

T

Li Li =

,

0r×q

Yr

(4.7)

i=q+1

where Yr ∈ Rr×r . Since

P =

n

X

Li LT

i ,

(4.8)

i=1

it follows from (4.4) that

P = P̂ +

xf0 ,

Proof. Since

=

(2.8) and (3.14) imply that

f

f

Ps,0

= P0f . It follows from (3.16) that if rank(Ps,k

) 6

da

q, then rank(Ps,k ) 6 q, and hence (3.17) implies that

f

rank(Ps,k+1

) 6 q. Therefore, using Proposition III.1 and

induction, it follows that Ks,k = Kk for all k > 0. Therefore,

(2.5), (2.6), (3.7) and (3.10) imply that xfs,k = xfk for all

k > 0.

(4.1)

n

X

Li LT

i .

(4.9)

i=q+1

Substituting (4.7) into (4.9) yields P̂1 = P1 and P̂12 = P12 .

Lemma IV.1 implies that, if S = ΦCHOL (P, q), then the

first q rows and columns of SS T and P are equal.

The data assimilation and forecast steps of the Choleskybased rank-q square-root filter are given by the following

steps:

Data Assimilation step

“

”−1

f

f

Kc,k = P̂c,k

CkT Ck P̂c,k

CkT + Rk

,

“

”−1

da

f

f

f

f

P̃c,k

= P̂c,k

− P̂c,k

CkT Ck P̂c,k

CkT + Rk

Ck P̂c,k

,

xda

c,k

=

xfc,k

+ Kc,k (yk −

Ck xfc,k ),

(4.10)

(4.11)

(4.12)

where

f

f

S̃c,k

, ΦSVD (P̃c,k

, q),

(4.13)

f

P̂c,k

(4.14)

,

f

f

S̃c,k

(S̃s,k

)T .

Forecast step

xfc,k+1 = Ak xda

c,k ,

(4.15)

f

da T

P̃c,k+1

= Ak P̂c,k

Ak + Qk ,

(4.16)

where

da

da

S̃c,k

, ΦSVD (P̃c,k

, q),

(4.17)

da

P̂c,k

(4.18)

,

da

da T

S̃c,k

(S̃c,k

) ,

f

and P̃c,0

is positive semidefinite.

Next, define the forecast and data assimilation error cof

da

variances Pc,k

and Pc,k

, respectively, of the Cholesky-based

rank-q square-root filter by

h

i

f

, E (xk − xfc,k )(xk − xfc,k )T ,

(4.19)

Pc,k

h

i

da

da T

Ps,k

, E (xk − xda

,

(4.20)

c,k )(xk − xc,k )

f

da

that is, Pc,k

and Pc,k

are the error covariances when the

Cholesky-based rank-q square-root filter is used. Using (2.1),

(4.12) and (4.15), it can be shown that

da

Pc,k

f

Pc,k

=

=

f

(I − Kc,k C)Pc,k

(I

da T

Ak Pc,k

Ak + Qk .

T

T

Kc,k Rk Kc,k

,

− Kc,k C) +

(4.21)

(4.22)

T

T

da

f

da

f

S̃c,k

6 P̃kda .

S̃c,k

6 P̃kf and S̃c,k

Note that S̃c,k

f

f

Hence, even if P̃c,0

= Pc,0

, the Cholesky-based rank-q

square-root filter is generally not equivalent to the Kalman

filter. However, in certain cases, the Cholesky-based rank-q

square root filter is equivalent to the Kalman filter.

Proposition IV.1. Let Ak and Ck have the structure

A1,k

0

Ak =

, Ck = Ip 0 ,

(4.23)

A21,k A2,k

f

where A1,k ∈ Rp×p and A2,k ∈ Rr×r , partition Pkf and P̃c,k

as

Pkf =

»

f

P1,k

f

P21,k

f

)T

(P21,k

f

P2,k

f

f

P1,k

, P̃c,1,k

–

f

, P̃c,k

=

»

f

P̃c,1,k

f

P̃c,21,k

f

(P̃c,21,k

)T

f

P̃c,2,k

–

, (4.24)

f

f

where

∈ R

and P2,k

, P̃c,2,k

∈ Rr×r , and

f

f

f

f

assume that q = p, P̃c,1,k = P1,k , and P̃c,21,k = P21,k

. Then,

f

f

f

f

Kc,k = Kk , P̃c,1,k+1 = P1,k+1 , and P̃c,21,k+1 = P21,k+1 .

Proof. Let

p×p

have entries

da

da T

P1,k (P21,k

)

=

,

da

da

P21,k

P2,k

(4.25)

da

da

where P1,k

∈ Rp×p is positive semidefinite and P2,k

∈

r×r

R . It follows from (2.4) that

da

f

f

f

f

P1,k

= P1,k

− P1,k

(P1,k

+ Rk )−1 P1,k

,

(4.26)

da

P21,k

(4.27)

=

f

P21,k

−

f

f

P21,k

(P1,k

−1

+ Rk )

(Q21,k )T

Q2,k

.

(4.31)

f

da

f

Define P̂c,k

and P̂c,k

by (4.14) and (4.18), and let P̂c,k

da

and P̂c,k have entries

f

P̂c,k

=

–

» da

–

f

f

da

P̂c,1,k

(P̂c,21,k

)T

P̂c,1,k (P̂c,21,k

)T

da

, P̂c,k

= da

, (4.32)

f

f

da

P̂c,21,k P̂c,2,k

P̂c,21,k P̂c,2,k

»

da

f

where P̂1,k

, P̂1,k

∈ Rp×p are positive semidefinite and

da

f

r×r

P̂2,k , P̂2,k ∈ R . Substituting (4.32) into (4.10) yields

"

#

f

P̂1,k

f

Kc,k =

(P̂1,k

+ Rk )−1 .

(4.33)

f

P̂21,k

f

f

Since Sc,k

= ΦCHOL (P̃c,k

, q) and we assume that q =

f

f

f

f

p, P̃c,1,k = P1,k , and P̃c,21,k

= P21,k

, it follows from

Lemma IV.1 that

f

f

f

f

= Pc,21,k

.

, P̂c,21,k

P̂c,1,k

= Pc,1,k

(4.34)

Hence, Kc,k = Kk .

Next, substituting (4.14) into (4.11) yields

da

f

f

f

f

P̃c,k

= P̂c,k

− P̂c,k

CkT (Ck P̂c,k

CkT + Rk )−1 Ck P̂c,k

. (4.35)

da

Let P̃c,k

have entries

"

da

P̃c,k

=

da

P̃c,1,k

da

P̃c,21,k

da

(P̃c,21,k

)T

da

P̃c,2,k

#

,

(4.36)

da

da

where P̃c,1,k

∈ Rp×p is positive semidefinite and P̃c,2,k

∈

r×r

R . Therefore, it follows from (4.23) and (4.32) that

da

f

f

f

f

P̃c,1,k

= P̂c,1,k

− P̂c,1,k

(P̂c,1,k

+ Rk )−1 P̂c,1,k

,

(4.37)

da

P̃c,21,k

(4.38)

=

f

P̂c,21,k

−

f

f

P̂c,21,k

(P̂c,1,k

−1

+ Rk )

f

P̂c,1,k

.

Substituting (4.34) into (4.37) and using (4.25) and (4.26)

yields

da

da

da

da

P̃c,1,k

= P1,k

, P̃c,21,k

= P21,k

.

Moreover, since

Lemma IV.1 that

da

S̃c,k

=

da

ΦCHOL (P̃c,k

, q),

(4.39)

it follows from

da

da

da

da

P̂c,1,k

= P̃c,1,k

, P̂c,21,k

= P̃c,21,k

.

(4.40)

It follows from (4.16) and (4.23) that

Pkda

Pkda

where Qk has entries

Q1,k

Qk =

Q21,k

f

P1,k

.

Substituting (4.23) into (2.3) yields

f P1,k

f

Kk =

(P1,k

+ Rk )−1 .

f

P21,k

(4.28)

Furthermore, (2.7) and (4.23) imply that

f

da T

P1,k+1

= A1,k P1,k

A1,k + Q1,k ,

(4.29)

f

da

da T

P21,k+1

= A2,k P21,k

AT

1,k + A21,k P1,k A1,k + Q21,k ,

(4.30)

da T

f

P̃c,1,k+1

= A1,k P̂1,k

A1,k + Q1,k ,

(4.41)

f

da

da T

P̃c,21,k+1

= A2,k P̂21,k

AT

1,k + A21,k P̂1,k A1,k + Q21,k .

(4.42)

Therefore, (4.29), (4.39), and (4.40) imply that

f

f

f

f

P̃c,1,k+1

= P1,k+1

, P̃c,21,k+1

= P21,k+1

.

Corollary IV.1. Assume that Ak and Ck have the strucf

f

f

f

ture in (4.23). Let q = p, P̃c,1,0

= P1,0

, P̃c,21,0

= P21,0

,

f

f

and xc,0 = x0 . Then for all k > 0, Kc,k = Kk , and hence

xfc,k = xfk .

Proof. Using induction and Proposition IV.1 yields

Kc,k = Kk for all k > 0. Hence, it follows from (2.5),

(2.6), (4.12), and (4.15) that xfc,k = xfk for all k > 0.

for i = 1, . . . , r − 1. Using (4.11) in (5.12) yields

V. L INEAR T IME -I NVARIANT S YSTEMS

In this section, we consider a linear time-invariant system

and hence assume that, for all k > 0, Ak = A, Ck = C,

Gk = G, Hk = H, Qk = Q, and Rk = R. Furthermore,

we assume that p < n and (A, C) is observable so that the

observability matrix O ∈ Rpn×n defined by

C

CA

O,

(5.1)

..

.

CAn−1

has full column rank. Next, without loss of generality we

consider a basis such that

In

O=

.

(5.2)

0(p−1)n×n

Therefore, (5.1) and (5.2) imply that, for i ∈ {1, . . . , n} such

that ip 6 n,

CAi−1 = 0p×p(i−1) Ip 0p×(n−pi) .

(5.3)

The following result shows that the Cholesky decomposition based rank-q square-root filter is equivalent to the

optimal Kalman filter for a specific number of time steps.

Proposition V.1. Let r > 0 be an integer such that 0 <

q = pr < n. Then, for all k = k0 , . . . , k0 + r − 1, Kc,k =

Kk .

f

P̂c,k+1

(Ai−1 )T C T

(5.13)

h

i

f

f

T

f

T

−1

f

i T T

= A P̂c,k − P̂c,k C (C P̂c,k C + R) C P̂c,k (A ) C

+ Q(Ai−1 )T C T ,

Therefore, (5.6) implies that, for all i = 1, . . . , r − 1,

P̂ f c,k+1 (Ai−1 )T C T

(5.14)

f

i T T

f

T

= ψ P̂c,k (A ) C , P̂c,k C , A, Q, C, R, i .

Hence, it follows from (5.5)-(5.7) that for i = 1, . . . , r − 1,

f

P̂k+i

CT

f

Since P̃c,k

= Pkf0 , it follows from Lemma IV.1 that for

0

i = 1, . . . , r,

f

CAi−1 P̂c,k

= CAi−1 Pkf0 .

0

Hence, it follows from (5.7) and (5.15) that for

1, . . . , r − 1,

P̂kf0 +i C T = Pkf0 +i C T .

Hence, for i = 1, . . . , r − 1,

“

”

f

Pk+1

(Ai−1 )T C T = ψ Pkf (Ai )T C T , Pkf C T , A, Q, C, R, i , (5.5)

where

ψ(X, Y, A, Q, C, R, i)

(5.6)

= AX − AY (CY + R)

i−1 T

CX + Q(A

T

) C .

Therefore,

P f k+i C T

=

i =

(5.17)

Kc,k0 +i = Kk0 +i .

f

However, in general Pc,k

6= Pkf for k = k0 , . . . , k0 + r − 1,

Kc,k .

VI. E XAMPLES

= APkf AT − APkf C T (CPkf C T + R)−1 CPkf AT + Q. (5.4)

−1

(5.16)

Finally, (2.3) and (4.10) imply that for i = 0, . . . , r − 1

Proof. Substituting (2.4) into (2.7) yields

f

Pk+1

(5.15)

f

f

f

= ϕ(P̂c,k

(Ai )T C T , P̂c,k

(Ai−1 )T C T , . . . , P̂c,k

C T , A, Q, C, i).

(5.7)

We compare performance of the SVD-based and

Cholesky-based reduced-rank square-root Kalman filters using a compartmental model [22] and 10-DOF mass-springdamper system.

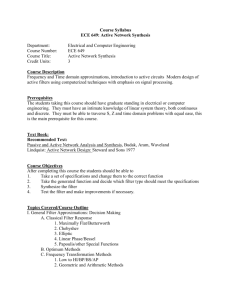

A schematic diagram of the compartmental model is

shown in Fig 1. The number n of cells is 20 with one state

per cell. All ηii and all ηij (i 6= j) are set to 0.1. We assume

that the state of the 9th cell is measured, and disturbances

enter all cells so that the disturbance covariance Q has full

rank.

ϕ(Pkf (Ai )T C T , Pkf (Ai−1 )T C T , . . . , Pkf C T , A, Q, C, i).

f

da

and P̂c,k

by

Define P̂c,k

f

f

f

da

da

da T

P̂c,k

, S̃c,k

(S̃c,k

)T , P̂c,k

, S̃c,k

(S̃c,k

) .

(5.8)

It follows from Lemma IV.1 and (5.3) that for all k > 0 and

i = 1, . . . , r,

da

da

f

f

. (5.9)

= CAi−1 P̃c,k

, CAi−1 P̂c,k

= CAi−1 P̃c,k

CAi−1 P̂c,k

Note that

f

da T

P̃c,k+1

= AP̂c,k

A + Q.

(5.10)

Multiplying (5.10) by (Ai−1 )T C T yields

f

da

P̃c,k+1

(Ai−1 )T C T = AP̂c,k

(Ai )T C T + Q(Ai−1 )T C T . (5.11)

Substituting (4.40) into (5.11) yields

f

da

P̂c,k+1

(Ai−1 )T C T = AP̃c,k

(Ai )T C T + Q(Ai−1 )T C T , (5.12)

Fig. 1.

Compartmental model involving interconnected subsystems.

We simulate three cases of disturbance covariance and

compare costs J , E(eT

k ek ) in Figure 3 where (a) shows

the cost when Q = I, ((b) shows costs when Q is diagonal

with entries

(

Qi,i =

4.0, if i = 9,

1.0, else,

(6.1)

and (c) shows costs when Q is diagonal with entries

(

0.25, if i = 9,

Qi,i =

(6.2)

1.0, else,

We reduce the rank of the square-root covariance to 2 in

all three cases. In (a) and (b) of Figure 3, the Choleskybased reduced-rank square-root Kalman filter exhibits almost

the same performance as the optimal filter whereas the

SVD-based reduced-rank square-root Kalman filter shows

degraded performance in (a). Meanwhile, in (c), the SVDbased has a large transient and large steady-state offset

from the optimal, whereas the Cholesky-based reduced-rank

square-root Kalman filter behaves close to the full-order

filter.

The mass-spring-damper model is shown in Figure 2. The

total number of masses is 10 with two states (displacement

and velocity) per mass. For i = 1, . . . , 10, mi = 1 kg,

and kj = 1 N/m, cj = 0.01 Ns/m, j = 1, . . . , 11. We

assume that the displacement of the 5th mass is measured and

disturbances enter all states so that disturbance covariance Q

has full rank.

Fig. 2.

Mass-spring-damper system.

We simulate three cases of disturbance covariance and

compare costs J in (a),(b) and (c) of Figure 4. (a) shows

costs when Q = I, (b) shows costs when Q is given by

(6.1), and (c) shows costs when Q is given by (6.2). We

reduce the rank of square-root covariance to 2 in all three

cases. In (a) and (b), the costs for the Cholesky-based and

SVD-based reduced-rank square-root Kalman filters are close

to each other but larger than the optimal. Meanwhile, in (c),

the SVD-based shows unstable behavior, while the Choleskybased filter remains stable and nearly optimal.

VII. C ONCLUSIONS

We developed a Cholesky decomposition method to obtain

reduced-rank square-root Kalman filters. We showed that

the SVD-based reduced-rank square-root Kalman filter is

equivalent to the optimal filter when the reduced-rank is

equal to or greater than the rank of error-covariance, while

the Cholesky-based is equivalent to the optimal when the

system is lower triangular block-diagonal according to the

observation matrix C which has the form [Ip×p 0] and p

is equal to the reduced-rank q. Furthermore, the Choleskybased rank-q square root filter is equivalent to the optimal

Kalman filter for a specific number of time steps. In general

cases, the Cholesky-based does not always perform better

that the SVD-based and vice versa. Finally, using simulation

examples, we showed that the Cholesky-based exhibits more

stable performance than the SVD-based filter, which can

become unstable when the strong disturbances enter the

system states that are not measured.

6

10

Optimal KF

RRSqrKF−Chol

RRSqrKF−SVD

R EFERENCES

5

10

4

J

10

3

10

2

10

1

10

50

100

150

200

250

time index

300

350

400

(a)

6

10

Optimal KF

RRSqrKF−Chol

RRSqrKF−SVD

5

10

4

J

10

3

10

2

10

1

10

50

100

150

200

250

time index

300

350

400

(b)

6

10

Optimal KF

RRSqrKF−Chol

RRSqrKF−SVD

5

10

4

10

J

[1] M. Lewis, S. Lakshmivarahan, and S. Dhall, Dynamic Data Assimilation: A Least Squares Approach. Cambridge, 2006.

[2] G. Evensen, Data Assimilation: The Ensemble Kalman Filter.

Springer, 2006.

[3] D. S. Bernstein, L. D. Davis, and D. C. Hyland, “The optimal projection equations for reduced-order, discrete-time modelling, estimation

and control,” AIAA J. Guid. Contr. Dyn., vol. 9, pp. 288–293, 1986.

[4] D. S. Bernstein and D. C. Hyland, “The optimal projection equations

for reduced-order state estimation,” IEEE Trans. Autom. Contr., vol.

AC-30, pp. 583–585, 1985.

[5] W. M. Haddad and D. S. Bernstein, “Optimal reduced-order observerestimators,” AIAA J. Guid. Contr. Dyn., vol. 13, pp. 1126–1135, 1990.

[6] P. Hippe and C. Wurmthaler, “Optimal reduced-order estimators in the

frequency domain: The discrete-time case,” Int. J. Contr., vol. 52, pp.

1051–1064, 1990.

[7] C.-S. Hsieh, “The Unified Structure of Unbiased Minimum-Variance

Reduced-Order Filters,” in Proc. Contr. Dec. Conf., Maui, HI, December 2003, pp. 4871–4876.

[8] J. Ballabrera-Poy, A. J. Busalacchi, and R. Murtugudde, “Application

of a reduced-order kalman filter to initialize a coupled atmosphereocean model: Impact on the prediction of el nino,” J. Climate, vol. 14,

pp.1720–1737, 2001.

[9] S. F. Farrell and P. J. Ioannou, “State estimation using a reduced-order

Kalman filter,” J. Atmos. Sci., vol. 58, pp. 3666–3680, 2001.

[10] P. Fieguth, D. Menemenlis, and I. Fukumori, “Mapping and PseudoInverse Algorithms for Data Assimilation,” in Proc. Int. Geoscience

Remote Sensing Symp., 2002, pp. 3221–3223.

[11] L. Scherliess, R. W. Schunk, J. J. Sojka, and D. C. Thompson,

“Development of a physics-based reduced state kalman filter for the

ionosphere,” Radio Science, vol. 39-RS1S04, 2004.

[12] I. S. Kim, J. Chandrasekar, H. J. Palanthandalam-Madapusi, A. Ridley,

and D. S. Bernstein, “State Estimation for Large-Scale Systems Based

on Reduced-Order Error-Covariance Propagation,” in Proc. Amer.

Contr. Conf., New York, June 2007.

[13] S. Gillijns, D. S. Bernstein, and B. D. Moor, “The Reduced Rank

Transform Square Root Filter for Data Assimilation,” Proc. of the 14th

IFAC Symposium on System Identification (SYSID2006),Newcastle,

Australia, vol. 11, pp. 349–368, 2006.

[14] A. W. Heemink, M. Verlaan, and A. J. Segers, “Variance Reduced

Ensemble Kalman Filtering,” Monthly Weather Review, vol. 129, pp.

1718–1728, 2001.

[15] D. Treebushny and H. Madsen, “A New Reduced Rank Square Root

Kalman Filter for Data Assimilation in Mathematical Models,” Lecture

Notes in Computer Science, vol. 2657, pp. 482–491, 2003.

[16] M. Verlaan and A. W. Heemink, “Tidal flow forecasting using reduced rank square root filters,” Stochastics Hydrology and Hydraulics,

vol. 11, pp. 349–368, 1997.

[17] J. L. Anderson, “An Ensemble Adjustment Kalman Filter for Data

Assimilation,” Monthly Weather Review, vol. 129, pp. 2884–2903,

2001.

[18] M. K. Tippett, J. L. Anderson, C. H. Bishop, T. M. Hamill, and J. S.

Whitaker, Monthly Weather Review, vol. 131, pp. 1485–1490, 2003.

[19] G. J. Bierman, Factorization Methods for Discrete Sequential Estimation. reprinted by Dover, 2006.

[20] M. Morf and T. Kailath, “Square-root algorithms for least-squares

estimation,” IEEE Trans. Autom. Contr., vol. AC-20, pp. 487–497,

1975.

[21] G. W. Stewart, “Matrix Algorithms Volume 1: Basic Decompositions,”

SIAM, 1998.

[22] D. S. Bernstein and D. C. Hyland. Compartmental modeling and

second-moment analysis of state space systems. SIAM J. Matrix Anal.

Appl., 14(3):880–901, 1993.

3

10

2

10

1

10

50

100

150

200

250

time index

300

350

400

(c)

Fig. 3. Time evolutions of the cost J , E(eT

k ek ) for the compartmental

system. For this example, R = 10−4 , P0 = 102 I, the rank of reduced

rank filters is fixed at 2 and energy measurement is taken at compartment 9.

Disturbances enter compartments 1,2,. . .,20. In (a), the cost J is when

disturbance covariance is Q = I20×20 , (b) is for the case when Q(9,9)

is changed to 4.0 and (c) is for the case when Q(9,9) is changed to

0.25.

6

10

Optimal KF

RRSqrKF−Chol

RRSqrKF−SVD

5

J

10

4

10

3

10

100

200

300

400 500 600

time index

700

800

900 1000

(a)

6

10

Optimal KF

RRSqrKF−Chol

RRSqrKF−SVD

5

J

10

4

10

3

10

100

200

300

400 500 600

time index

700

800

900 1000

700

800

900 1000

(b)

20

10

Optimal KF

RRSqrKF−Chol

RRSqrKF−SVD

15

J

10

10

10

5

10

0

10

100

200

300

400 500 600

time index

(c)

Fig. 4. Time evolutions of the cost J = E(eT

k ek ) of the mass-springdamper system. Here R = 10−4 , P0 = 1002 I, the rank of reduced rank

filters is fixed at 2, and a displacement measurement of m5 is available.

Velocity and force disturbances enter mass 1,2,. . .,10. (a) shows the cost J

when disturbance covariance is Q = I20×20 while (b) is for the case when

Q(9,9) is changed to 4.0 and (c) is for the case when Q(9,9) is changed to

0.25.