Fundamenta Informaticae 125 (2013) 71–94

DOI 10.3233/FI-2013-853

IOS Press

A Complete Generalization of Atkin’s Square Root Algorithm

Armand Stefan Rotaru∗

Institute of Computer Science, Romanian Academy

Carol I no. 8, 700505 Iasi, Romania

armand.rotaru@iit.academiaromana-is.ro

Sorin Iftene

Department of Computer Science, Alexandru Ioan Cuza University

General Berthelot no. 16, 700483 Iasi, Romania

siftene@info.uaic.ro

Abstract. Atkin’s algorithm [2] for computing square roots in $\mathbb{Z}_p^*$, where $p$ is a prime such that $p \equiv 5 \bmod 8$, has been extended by Müller [15] for the case $p \equiv 9 \bmod 16$. In this paper we extend Atkin’s algorithm to the general case $p \equiv 2^s + 1 \bmod 2^{s+1}$, for any $s \ge 2$, thus providing a complete solution for the case $p \equiv 1 \bmod 4$. Complexity analysis and comparisons with other methods are also provided.

Keywords: Square Roots, Efficient Computation, Complexity

1. Introduction

Computing square roots in finite fields is a fundamental problem in number theory, with major applications related to primality testing [3], factorization [17] or elliptic point compression [10]. In this paper we consider the problem of finding square roots in $\mathbb{Z}_p^*$, where $p$ is an odd prime. We remark that, using Hensel’s lemma and the Chinese remainder theorem, the problem of finding square roots modulo any composite number can be reduced to the case of a prime modulus, by considering its prime factorization (for more details, see [4]).

∗ Address for correspondence: Institute of Computer Science, Romanian Academy, Carol I no. 8, 700505 Iasi, Romania

A.S. Rotaru and S. Iftene / A Complete Generalization of Atkin’s Square Root Algorithm

According to Bach and Shallit [4, Notes on Chapter 7, page 194] and Lemmermeyer [13, Exercise 1.16, page 29], Lagrange was the first to derive an explicit formula for the case $p \equiv 3 \bmod 4$, in 1769. According to the same sources ([4, Exercise 1, page 188] and [13, Exercise 1.17, page 29]), the case $p \equiv 5 \bmod 8$ was solved by Legendre in 1785. Atkin [2] also found a simple solution for the case $p \equiv 5 \bmod 8$ in 1992. In 2004, Müller [15] extended Atkin’s algorithm to the case $p \equiv 9 \bmod 16$ and left the further development of Atkin’s algorithm as an open problem. In this paper we extend Atkin’s algorithm to the case $p \equiv 2^s + 1 \bmod 2^{s+1}$, for any $s \ge 2$, thus providing a complete solution for the case $p \equiv 1 \bmod 4$. Müller’s algorithm and our generalization use quadratic non-residues and are therefore probabilistic algorithms. We remark that several deterministic approaches for computing square roots modulo a prime $p$ have also been presented in the literature. Schoof [19] proposed an impractical deterministic algorithm of complexity $O((\log_2 p)^9)$. Sze [21] has recently developed a deterministic algorithm for computing square roots which is efficient (its complexity is $\tilde{O}((\log_2 p)^2)$) only for certain primes $p$.

The paper is structured as follows. Section 2 is dedicated to some mathematical preliminaries on

quadratic residues and square roots. Section 3 presents Atkin’s algorithm and its extension (Müller’s

algorithm), both based on computing square roots of −1 modulo p. We present our generalization in

Section 4. Its performance, efficient implementation and comparisons with other methods are presented

in Section 5. In the last section we briefly discuss the conclusions of our paper and the possibility of

adapting our algorithm for other finite fields.

2. Mathematical Background

In this section we will present some basic facts on quadratic residues and square roots. For simplicity of

notation, from this point forward we will omit the modular reduction, but the reader must be aware that

all computations are performed modulo p if not explicitly stated otherwise.

Let $p$ be a prime and $a \in \mathbb{Z}_p^*$. We say that $a$ is a quadratic residue modulo $p$ if there exists $b \in \mathbb{Z}_p^*$ with the property $a = b^2$. Otherwise, $a$ is a quadratic non-residue modulo $p$. It is easy to see that the product of two residues is a residue and that the product of a residue with a non-residue is a non-residue.

If $b^2 = a$ then $b$ will be referred to as a square root of $a$ (modulo $p$) and we will simply denote this fact by $b = \sqrt{a}$. We remark that if $a$ is a quadratic residue modulo $p$, $p$ prime, then $a$ has exactly two square roots: if $b$ is a square root of $a$, then $p - b$ is the other one. In particular, 1 has the square roots 1 and $-1$ (in this case, $-1$ will be regarded as being $p - 1$) or, equivalently, $a^2 = 1 \Leftrightarrow (a = 1 \lor a = -1)$.

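The Legendre symbol of $a$ modulo $p$, denoted as $\left(\frac{a}{p}\right)$, is defined to be equal to $\pm 1$ depending on whether $a$ is a quadratic residue modulo $p$. More exactly,

$$\left(\frac{a}{p}\right) = \begin{cases} 1, & \text{if } a \text{ is a quadratic residue modulo } p; \\ -1, & \text{otherwise.} \end{cases}$$

Euler’s criterion states that, for any prime $p$ and $a \in \mathbb{Z}_p^*$, the following relation holds:

$$a^{\frac{p-1}{2}} = \left(\frac{a}{p}\right).$$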

Euler’s criterion provides a method of computing the Legendre symbol of $a$ modulo $p$ using an exponentiation modulo $p$, whose complexity is $O((\log_2 p)^3)$. There are faster methods for evaluating the Legendre symbol; see, for example, [8], which presents algorithms of complexity $O\left(\frac{(\log_2 p)^2}{\log_2 \log_2 p}\right)$ for computing the Jacobi symbol (the Jacobi symbol is a generalization of the Legendre symbol to arbitrary moduli).

Another useful property is that $\left(\frac{2}{p}\right) = (-1)^{\frac{p^2-1}{8}}$, which implies that 2 is a quadratic residue modulo $p$ if and only if $p \equiv \pm 1 \bmod 8$.

If $p$ is prime, $p \equiv 3 \bmod 4$, and $a \in \mathbb{Z}_p^*$ is a quadratic residue modulo $p$ then $b = a^{\frac{p+1}{4}}$ is a square root of $a$ modulo $p$. Indeed, in this case, $b^2 = a^{\frac{p+1}{2}} = a \cdot a^{\frac{p-1}{2}} = a \cdot \left(\frac{a}{p}\right) = a \cdot 1 = a$. Thus, in this case, finding square roots modulo $p$ requires only a single exponentiation modulo $p$. In the next sections we will focus on the case $p$ prime, $p \equiv 1 \bmod 4$.
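As a minimal illustration of the two facts above, the following Python sketch evaluates the Legendre symbol through Euler’s criterion and computes a square root for $p \equiv 3 \bmod 4$ with a single modular exponentiation. The function names `legendre` and `sqrt_3_mod_4` are chosen here for illustration only and do not appear in the paper.

```python
def legendre(a, p):
    """Legendre symbol of a modulo an odd prime p, via Euler's criterion."""
    r = pow(a, (p - 1) // 2, p)
    return -1 if r == p - 1 else r  # r is 1 or p - 1 for a in Z_p^*


def sqrt_3_mod_4(a, p):
    """Square root of a quadratic residue a modulo a prime p = 3 (mod 4)."""
    if p % 4 != 3:
        raise ValueError("p must be congruent to 3 modulo 4")
    if legendre(a, p) != 1:
        raise ValueError("a must be a quadratic residue modulo p")
    return pow(a, (p + 1) // 4, p)  # b = a^((p+1)/4)


b = sqrt_3_mod_4(13, 23)
print(b, (b * b) % 23)  # prints: 6 13
```

The other square root is $p - b$, here $23 - 6 = 17$.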

3. Square Root Algorithms based on Computing $\sqrt{-1}$

In this section we present two methods for computing square roots for the cases p ≡ 5 mod 8 and

p ≡ 9 mod 16, both based on computing square roots of −1 modulo p.

3.1. Atkin’s Algorithm

Let $p$ be a prime such that $p \equiv 5 \bmod 8$ and $a$ a quadratic residue modulo $p$. Atkin’s idea [2] is to express $\sqrt{a}$ as $\sqrt{a} = \alpha a(\beta - 1)$ where $\beta^2 = -1$ and $2a\alpha^2 = \beta$. Indeed, in this case, $(\alpha a(\beta - 1))^2 = a(-2a\alpha^2\beta) = a(-\beta^2) = a$. Moreover, in order to easily determine $\alpha$, it will be convenient that $\beta$ has the form $\beta = (2a)^k$, with $k$ odd. Thus, the major challenge is to find $\sqrt{-1}$ of the mentioned form.

By Euler’s criterion, the relation $(2a)^{\frac{p-1}{2}} = -1$ holds ($a$ is a quadratic residue, but 2 is a quadratic non-residue, therefore $2a$ is a quadratic non-residue), so we can choose $\beta$ as $\beta = (2a)^{\frac{p-1}{4}}$ and $\alpha$ as $\alpha = (2a)^{\frac{\frac{p-1}{4} - 1}{2}} = (2a)^{\frac{p-5}{8}}$.

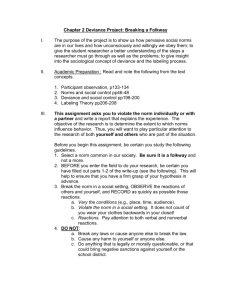

The resulting algorithm is presented in Figure 1.

Atkin’s Algorithm(p, a)
input:  $p$ prime such that $p \equiv 5 \bmod 8$,
        $a \in \mathbb{Z}_p^*$ a quadratic residue;
output: $b$, a square root of $a$ modulo $p$;
begin
  1. $\alpha := (2a)^{\frac{p-5}{8}}$;
  2. $\beta := 2a\alpha^2$;
  3. $b := \alpha a(\beta - 1)$;
  4. return $b$
end.

Figure 1: Atkin’s algorithm

Atkin’s algorithm requires one exponentiation (in Step 1) and four multiplications (two multiplications in Step 2 and two multiplications in Step 3).
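The steps of Figure 1 translate almost line by line into code. The following Python sketch is one possible rendering; the function name `atkin_sqrt` and the input checks are additions made here for illustration and are not part of the paper.

```python
def atkin_sqrt(a, p):
    """Square root of a quadratic residue a modulo a prime p = 5 (mod 8)."""
    if p % 8 != 5:
        raise ValueError("p must be congruent to 5 modulo 8")
    if pow(a, (p - 1) // 2, p) != 1:
        raise ValueError("a must be a quadratic residue modulo p")
    alpha = pow(2 * a, (p - 5) // 8, p)   # Step 1: alpha = (2a)^((p-5)/8)
    beta = 2 * a * alpha * alpha % p      # Step 2: beta = 2a*alpha^2, beta^2 = -1
    return alpha * a * (beta - 1) % p     # Step 3: b = alpha*a*(beta - 1)


b = atkin_sqrt(3, 13)
print(b, b * b % 13)  # prints: 9 3
```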

3.2. Müller’s Algorithm

Let $p$ be a prime such that $p \equiv 9 \bmod 16$ and $a$ a quadratic residue modulo $p$. Müller [15] has extended Atkin’s algorithm by expressing $\sqrt{a}$ as $\sqrt{a} = \alpha a d(\beta - 1)$ where $\beta^2 = -1$ and $2ad^2\alpha^2 = \beta$. Indeed, in this case, $(\alpha a d(\beta - 1))^2 = a(-2ad^2\alpha^2\beta) = a(-\beta^2) = a$. Moreover, in order to easily determine $\alpha$, it will be convenient that $\beta$ has the form $\beta = (2ad^2)^k$, with $k$ odd.

By Euler’s criterion, the relation $(2a)^{\frac{p-1}{2}} = 1$ holds ($a$ and 2 are quadratic residues, therefore $2a$ is a quadratic residue). We have two cases:

(I) $(2a)^{\frac{p-1}{4}} = -1$: in this case we can choose $\beta$ as $\beta = (2a)^{\frac{p-1}{8}}$ and $\alpha$ as $\alpha = (2a)^{\frac{\frac{p-1}{8} - 1}{2}} = (2a)^{\frac{p-9}{16}}$ ($d = 1$);

(II) $(2a)^{\frac{p-1}{4}} = 1$: in this case we need a quadratic non-residue $d$. By Euler’s criterion, $d^{\frac{p-1}{2}} = -1$ and, thus, $(2ad^2)^{\frac{p-1}{4}} = -1$, so we can choose $\beta$ as $\beta = (2ad^2)^{\frac{p-1}{8}}$ and $\alpha$ as $\alpha = (2ad^2)^{\frac{\frac{p-1}{8} - 1}{2}} = (2ad^2)^{\frac{p-9}{16}}$.

The above presentation is in fact a slightly modified variant of the original one: for Case (I), Müller used an arbitrary residue $d$. Kong et al. [11] have remarked that using $d = 1$ in this case leads to an important improvement in the performance of Müller’s original algorithm, by requiring only one exponentiation for half of the squares in $\mathbb{Z}_p^*$ (Case (I)) and two for the rest (Case (II)).

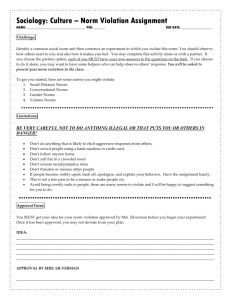

The resulting algorithm is presented in Figure 2.

In case $(2a)^{\frac{p-1}{4}} = -1$, Müller’s algorithm requires one exponentiation (Step 1) and five multiplications (two multiplications in Step 2, one multiplication in Step 3 and two multiplications in Step 4). In case $(2a)^{\frac{p-1}{4}} = 1$, Müller’s algorithm, besides the operations in Steps 1-3, requires one more exponentiation (Step 8) and eight more multiplications (one multiplication in Step 8, four multiplications in Step 9 and three multiplications in Step 10). Additionally, Step 7 requires, on average, two quadratic character evaluations (generate randomly $d \in \mathbb{Z}_p^*$ until $\left(\frac{d}{p}\right) = -1$; because half of the elements are quadratic non-residues, two generations are required on average). It is interesting to remark that Ankeny [1] has proven that, by assuming the Extended Riemann Hypothesis (ERH), the least quadratic non-residue modulo $p$ is in $O((\log_2 p)^2)$. As a consequence, in this case, the presented probabilistic algorithm for finding a quadratic non-residue can be transformed into a deterministic polynomial time algorithm of complexity $O((\log_2 p)^4)$.
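The two cases of Müller’s algorithm (with $d = 1$ in Case (I), as in the variant described above) can be sketched in Python as follows. The function names are illustrative. As an assumption made here for reproducibility, the quadratic non-residue is found by trying $d = 2, 3, \ldots$ with Euler’s criterion, whereas the paper generates $d$ at random.

```python
def find_nonresidue(p):
    """Smallest quadratic non-residue modulo an odd prime p (deterministic search)."""
    d = 2
    while pow(d, (p - 1) // 2, p) != p - 1:
        d += 1
    return d


def muller_sqrt(a, p):
    """Square root of a quadratic residue a modulo a prime p = 9 (mod 16)."""
    if p % 16 != 9:
        raise ValueError("p must be congruent to 9 modulo 16")
    if pow(a, (p - 1) // 2, p) != 1:
        raise ValueError("a must be a quadratic residue modulo p")
    alpha = pow(2 * a, (p - 9) // 16, p)          # Step 1
    beta = 2 * a * alpha * alpha % p              # Step 2: beta = (2a)^((p-1)/8)
    if beta * beta % p == p - 1:                  # Case (I): beta^2 = -1
        return alpha * a * (beta - 1) % p
    d = find_nonresidue(p)                        # Case (II)
    alpha = alpha * pow(d, (p - 9) // 8, p) % p   # alpha = (2ad^2)^((p-9)/16)
    beta = 2 * a * d * d * alpha * alpha % p      # now beta^2 = -1
    return alpha * a * d * (beta - 1) % p
```

For example, `muller_sqrt(a, 41)` returns a value whose square is congruent to `a` modulo 41 for every quadratic residue `a`.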

4. A Complete Generalization of Atkin’s Square Root Algorithm

In this section we extend Atkin’s algorithm to the case $p \equiv 2^s + 1 \bmod 2^{s+1}$, for any $s \ge 2$, thus providing a complete solution for the case $p \equiv 1 \bmod 4$. For any prime $p$, with $p \equiv 1 \bmod 4$, we can express $p - 1$ as $p - 1 = 2^s t$, where $s \ge 2$ and $t$ is odd. If we write $t$ as $t = 2t' + 1$, we obtain that $p = 2^{s+1} t' + 2^s + 1$, which implies that $p \equiv 2^s + 1 \bmod 2^{s+1}$.

Müller’s Algorithm(p, a)
input:  $p$ prime such that $p \equiv 9 \bmod 16$,
        $a \in \mathbb{Z}_p^*$ a quadratic residue;
output: $b$, a square root of $a$ modulo $p$;
begin
  1.  $\alpha := (2a)^{\frac{p-9}{16}}$;
  2.  $\beta := 2a\alpha^2$;
  3.  if $\beta^2 = -1$
  4.    then $b := \alpha a(\beta - 1)$;
  5.    else
  6.      begin
  7.        generate $d$, a quadratic non-residue modulo $p$;
  8.        $\alpha := \alpha d^{\frac{p-9}{8}}$;
  9.        $\beta := 2ad^2\alpha^2$;
  10.       $b := \alpha a d(\beta - 1)$;
  11.     end
  12. return $b$
end.

Figure 2: Müller’s algorithm

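We will express $\sqrt{a}$ as $\sqrt{a} = \alpha a(\beta - 1)d^{\mathit{norm}}$, where $\beta^2 = -1$, $d$ is a quadratic non-residue modulo $p$, $\mathit{norm} \ge 0$, and $2ad^{2 \cdot \mathit{norm}}\alpha^2 = \beta$. Indeed, in this case, $(\alpha a(\beta - 1)d^{\mathit{norm}})^2 = a(-2ad^{2 \cdot \mathit{norm}}\alpha^2\beta) = a(-\beta^2) = a$. Moreover, in order to easily determine $\alpha$, it will be convenient that $\beta$ has the form $\beta = (2ad^{2 \cdot \mathit{norm}})^k$, with $k$ odd.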

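The key point of our generalization is

Base Case: $(2ad^{2 \cdot \mathit{norm}})^{\frac{p-1}{2^{s-1}}} = -1$, for some $\mathit{norm} \ge 0$.

In this case, because $\frac{p-1}{2^s}$ is odd, we can choose $\beta$ as $\beta = (2ad^{2 \cdot \mathit{norm}})^{\frac{p-1}{2^s}}$ and $\alpha$ as

$$\alpha = (2ad^{2 \cdot \mathit{norm}})^{\frac{\frac{p-1}{2^s} - 1}{2}} = (2ad^{2 \cdot \mathit{norm}})^{\frac{p-(2^s+1)}{2^{s+1}}} = (2ad^{2 \cdot \mathit{norm}})^{\frac{t-1}{2}}.$$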

In contrast to Müller’s impractical attempt of further generalizing Atkin’s approach ([15, Remark 2]),

we focus on finding an adequate value for norm, the exponent of d such that the Base Case is satisfied.

In order to derive the value of norm, we use the following results:

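Theorem 4.1. Let $p$ be an odd prime, $p - 1 = 2^s t$ ($s \ge 3$, $t$ odd), $a$ a quadratic residue modulo $p$, and $d$ a quadratic non-residue modulo $p$. Then, for all $1 \le i \le s - 1$, the following statement holds:

$$(\exists\, \mathit{norm}' \in \mathbb{N})\left((2ad^{2 \cdot \mathit{norm}'})^{\frac{p-1}{2^i}} = 1\right) \Rightarrow (\exists\, \mathit{norm} \in \mathbb{N})\left((2ad^{2 \cdot \mathit{norm}})^{\frac{p-1}{2^{s-1}}} = -1\right)$$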

Proof:

We use induction on $i$.

Initial Case: For $i = s - 1$ the reasoning is very simple. If there is a natural number $\mathit{norm}'$ such that $(2ad^{2 \cdot \mathit{norm}'})^{\frac{p-1}{2^{s-1}}} = 1$ then, using that $d^{\frac{p-1}{2}} = -1$ (or, equivalently, $(d^{2^{s-2}})^{\frac{p-1}{2^{s-1}}} = -1$), we obtain that $(2ad^{2 \cdot \mathit{norm}'} d^{2^{s-2}})^{\frac{p-1}{2^{s-1}}} = -1$, and, furthermore, $(2ad^{2 \cdot (\mathit{norm}' + 2^{s-3})})^{\frac{p-1}{2^{s-1}}} = -1$. Thus, we may choose $\mathit{norm} = \mathit{norm}' + 2^{s-3}$.

Inductive Case: Let us consider an arbitrary number $i$, $1 \le i < s - 1$. We assume that the statement holds for the case $i + 1$ and we will prove it for the case $i$.

If there is a natural number $\mathit{norm}'$ such that $(2ad^{2 \cdot \mathit{norm}'})^{\frac{p-1}{2^i}} = 1$, or, equivalently, $\left((2ad^{2 \cdot \mathit{norm}'})^{\frac{p-1}{2^{i+1}}}\right)^2 = 1$, then $(2ad^{2 \cdot \mathit{norm}'})^{\frac{p-1}{2^{i+1}}} = \pm 1$. We have two cases:

– If $(2ad^{2 \cdot \mathit{norm}'})^{\frac{p-1}{2^{i+1}}} = 1$ then, using the inductive hypothesis, we directly obtain that $(\exists\, \mathit{norm} \in \mathbb{N})\left((2ad^{2 \cdot \mathit{norm}})^{\frac{p-1}{2^{s-1}}} = -1\right)$;

– If $(2ad^{2 \cdot \mathit{norm}'})^{\frac{p-1}{2^{i+1}}} = -1$ then, using that $d^{\frac{p-1}{2}} = -1$ (or, equivalently, $(d^{2^i})^{\frac{p-1}{2^{i+1}}} = -1$), we obtain that $(2ad^{2 \cdot \mathit{norm}'} d^{2^i})^{\frac{p-1}{2^{i+1}}} = 1$, and, furthermore, $(2ad^{2 \cdot (\mathit{norm}' + 2^{i-1})})^{\frac{p-1}{2^{i+1}}} = 1$. Finally, using the inductive hypothesis, we obtain that the required statement holds.

□

The previous theorem leads to the following:

Corollary 4.2. Let $p$ be an odd prime, $p - 1 = 2^s t$ ($s \ge 2$, $t$ odd), $a$ a quadratic residue modulo $p$, and $d$ a quadratic non-residue modulo $p$. Then there exists $\mathit{norm} \in \mathbb{N}$ such that

$$(2ad^{2 \cdot \mathit{norm}})^{\frac{p-1}{2^{s-1}}} = -1.$$

Proof:

For $s = 2$, we obtain directly $\mathit{norm} = 0$, because in this case 2 is a quadratic non-residue modulo $p$ and the relation $(2a)^{\frac{p-1}{2}} = -1$ holds.

For $s \ge 3$, 2 is a quadratic residue and thus, we have $(2a)^{\frac{p-1}{2}} = 1$. Using Theorem 4.1, for $i = 1$ ($\mathit{norm}' = 0$) we obtain that there is $\mathit{norm} \in \mathbb{N}$ such that

$$(2ad^{2 \cdot \mathit{norm}})^{\frac{p-1}{2^{s-1}}} = -1.$$

□

Therefore, all other possible cases can be recursively reduced to the Base Case as presented above.

In order to further clarify the points made so far, we will now give an algorithmic description of our generalization. We will use a special subroutine named FindPlace (presented in Figure 3), in which, starting with certain values for $a$ and $\mathit{norm}$ that satisfy $(2ad^{2 \cdot \mathit{norm}})^{\frac{p-1}{2^i}} = 1$, for some $i$, we will search for a place $j$ as close as possible to $s - 1$ such that $\mathit{temp} = (2ad^{2 \cdot \mathit{norm}})^{\frac{p-1}{2^j}} = \pm 1$.

Furthermore, we will also formulate Base Case as a subroutine in Figure 4.

Finally, the main part of our algorithm is presented in Figure 5.

FindPlace(a, norm)
begin
  1. if $\mathit{norm} = 0$ then $\mathit{temp} := (2a)^t$
  2.   else $\mathit{temp} := (2ad^{2 \cdot \mathit{norm}})^t$;
  3. $j := s$;
  4. repeat
  5.   $j := j - 1$;
  6.   $\mathit{temp} := \mathit{temp}^2$;
  7. until ($\mathit{temp} = 1 \lor \mathit{temp} = -1$)
  8. return $(j, \mathit{temp})$
end.

Figure 3: FindPlace Subroutine

BaseCase(a, norm)
begin
  1. $\alpha := (2ad^{2 \cdot \mathit{norm}})^{\frac{t-1}{2}}$;
  2. $\beta := (2ad^{2 \cdot \mathit{norm}})\alpha^2$;
  3. $b := \alpha a(\beta - 1)d^{\mathit{norm}}$;
  4. return $b$
end.

Figure 4: BaseCase Subroutine

Remark 4.3. For the clarity of the presentation, we believe it is also necessary to make some comments

and prove some statements on the Generalized Atkin Algorithm and its subroutines:

1. The variable norm contains the current value of the normalization exponent.

2. Some useful properties of the subroutine FindPlace are presented next:

(a) If the output value $j$ of the subroutine FindPlace is not equal to $s - 1$, then the corresponding value $\mathit{temp}$ will be $-1$.

Proof:

Because $j < s - 1$, at least two iterations of the repeat-until loop have been performed (because initially $j = s$ and then $j$ is decremented in each iteration). If we assume by contradiction that the final value of $\mathit{temp}$ is 1, then the previous value of $\mathit{temp}$ satisfies $\mathit{temp} = \pm 1$ (because $\mathit{temp} := \mathit{temp}^2$ in Step 6), and, thus, the algorithm had to terminate at the previous iteration.

□

(b) Let $(j, \mathit{temp})$ and $(j', \mathit{temp}')$ be the outputs of two consecutive calls of the subroutine FindPlace. Then $j < j'$.

Generalized Atkin Algorithm(p, a)
input:  $p$ prime such that $p \equiv 1 \bmod 4$,
        $a \in \mathbb{Z}_p^*$ a quadratic residue;
output: $b$, a square root of $a$ modulo $p$;
begin
  1.  determine $s \ge 2$ and $t$ odd such that $p - 1 = 2^s t$;
  2.  generate $d$, a quadratic non-residue modulo $p$;
  3.  $\mathit{norm} := 0$;
  4.  $(j, \mathit{temp})$ := FindPlace(a, norm);
  5.  while ($j < s - 1$)
  6.  begin
  7.    $\mathit{norm} := \mathit{norm} + 2^{j-2}$;
  8.    $(j, \mathit{temp})$ := FindPlace(a, norm);
  9.  end
  10. if ($\mathit{temp} = -1$) then BaseCase(a, norm)
  11. if ($\mathit{temp} = 1$) then
  12. begin
  13.   $\mathit{norm} := \mathit{norm} + 2^{s-3}$;
  14.   BaseCase(a, norm);
  15. end
end.

Figure 5: Generalized Atkin Algorithm

Proof:

Let us first point out that $j < s - 1$ (otherwise, if $j = s - 1$, there will not be another call of FindPlace, since the algorithm will end with a call of BaseCase), which implies that $j + 1 \le s - 1$. Therefore, we obtain $\mathit{temp} = (2ad^{2 \cdot \mathit{norm}})^{\frac{p-1}{2^j}} = -1$. Furthermore, we have $(2ad^{2 \cdot \mathit{norm}'})^{\frac{p-1}{2^j}} = 1$, which implies that $(2ad^{2 \cdot \mathit{norm}'})^{\frac{p-1}{2^{j+1}}} = \pm 1$, leading to $j + 1 \le j'$ (because $j'$ is the greatest element not exceeding $s - 1$ such that $(2ad^{2 \cdot \mathit{norm}'})^{\frac{p-1}{2^{j'}}} = \pm 1$).

□

3. If p ≡ 5 mod 8, i.e., s = 2, then FindPlace will be called exactly once (with a and norm = 0)

and it will output j = s − 1 = 1 and temp = −1 - in this case, the subroutine BaseCase will

directly lead to the final result (no normalization is required). Thus, we have obtained Atkin’s

algorithm as a particular case of our algorithm.

4. If p ≡ 9 mod 16, i.e., s = 3, then FindPlace will be called exactly once (with a and norm = 0)

and it will output j = s − 1 = 2 and temp = ±1. Two subcases are possible:

• In case temp = −1, the subroutine BaseCase will lead directly to the final result (no normalization is required);

• In case $\mathit{temp} = 1$, the normalization exponent will be updated as $\mathit{norm} = 0 + 2^{3-3} = 1$ and the subroutine BaseCase will be called. Consequently, the final result will be computed as $b := \alpha a(\beta - 1)d^1$ (Step 3 of BaseCase).

Thus, we have obtained Müller’s algorithm as a particular case of our algorithm.
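The interplay of FindPlace, BaseCase and the main loop of Figure 5 can be sketched in Python as follows. This is a direct, unoptimized rendering under a few assumptions made here: the names `find_nonresidue` and `generalized_atkin_sqrt` are illustrative, the non-residue $d$ is found by a deterministic search instead of random generation, and an input check on $a$ is added.

```python
def find_nonresidue(p):
    """Smallest quadratic non-residue modulo an odd prime p (deterministic search)."""
    d = 2
    while pow(d, (p - 1) // 2, p) != p - 1:
        d += 1
    return d


def generalized_atkin_sqrt(a, p):
    """Square root of a quadratic residue a modulo a prime p = 1 (mod 4)."""
    if p % 4 != 1:
        raise ValueError("p must be congruent to 1 modulo 4")
    if pow(a, (p - 1) // 2, p) != 1:
        raise ValueError("a must be a quadratic residue modulo p")
    s, t = 0, p - 1
    while t % 2 == 0:                 # Step 1: p - 1 = 2^s * t, t odd
        s, t = s + 1, t // 2
    d = find_nonresidue(p)            # Step 2

    def find_place(norm):
        base = 2 * a * pow(d, 2 * norm, p) % p
        temp = pow(base, t, p)
        j = s
        while True:                   # repeat ... until temp = +-1
            j -= 1
            temp = temp * temp % p
            if temp == 1 or temp == p - 1:
                return j, temp

    def base_case(norm):
        base = 2 * a * pow(d, 2 * norm, p) % p
        alpha = pow(base, (t - 1) // 2, p)
        beta = base * alpha * alpha % p          # beta^2 = -1
        return alpha * a * (beta - 1) * pow(d, norm, p) % p

    norm = 0
    j, temp = find_place(norm)
    while j < s - 1:                  # here temp = -1 (Remark 4.3, item 2(a))
        norm += 1 << (j - 2)
        j, temp = find_place(norm)
    if temp == 1:
        norm += 1 << (s - 3)
    return base_case(norm)


b = generalized_atkin_sqrt(2564, 12289)
print(b * b % 12289)  # prints: 2564
```

Each call to `find_place` recomputes its exponentiation from scratch, which mirrors the initial algorithm rather than the improved version of Section 5.2.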

5. Efficient Implementation and Performance Analysis

We start with the average-case and worst-case complexity analysis of our initial algorithm and then we discuss several improvements for efficient implementation. Finally, we present several comparisons with the most important generic square root computing methods, namely Tonelli-Shanks and Cipolla-Lehmer.

5.1. Average-Case and Worst-Case Complexity Analysis

We will consider the cases $s \ge 4$ (for $s = 2$ and $s = 3$ we obtain Atkin’s algorithm and Müller’s algorithm, respectively, whose complexities have been discussed in Section 3). Our algorithm determines the value of $\mathit{norm}$ by calling the subroutine FindPlace for each 1 digit in the binary expression of $\mathit{norm}$. Therefore, the algorithm makes $Hw(\mathit{norm})$ calls to FindPlace, where $Hw(x)$ denotes the Hamming weight of $x$ (i.e., the number of 1’s in the binary representation of $x$).

Let E denote one exponentiation, M - one multiplication, and S - one squaring (all these operations

are performed modulo p). Our subroutines will involve:

• FindPlace - if the output is (j, temp) then at most 2E+1M+(s − j) S;

• BaseCase - at most 3E+6M+1S.

We exclude the complexity of generating a quadratic non-residue $d$. All the other computations can be considered negligible (if $\mathit{norm}$ is represented in base 2 then the step $\mathit{norm} := \mathit{norm} + 2^{j-2}$ implies only setting a certain bit to 1). In the average case, we have $Hw(\mathit{norm}) = \frac{s-2}{2}$, which means that our algorithm will include $\frac{s-2}{2}$ calls to FindPlace and a call to BaseCase. Thus, the total number of operations is, on average, the following:

$$\begin{aligned}
&\frac{s-2}{2}(2E + 1M) + \frac{\sum_{j=2}^{s-1}(s-j)}{2} S + 3E + 6M + 1S \\
&= (s-2)E + \frac{s-2}{2} M + \frac{(s-1)(s-2)}{4} S + 3E + 6M + 1S \\
&= (s+1)E + \frac{s+10}{2} M + \frac{s^2 - 3s + 6}{4} S
\end{aligned}$$

In contrast, in the worst case, $\mathit{norm}$ will have $Hw(\mathit{norm}) = s - 2$ (i.e., all the bits of $\mathit{norm}$ will be equal to 1), resulting in $(s - 2)$ calls to FindPlace and a call to BaseCase. The total number of operations now becomes the following:

$$\begin{aligned}
&(s-2)(2E + 1M) + \sum_{j=2}^{s-1}(s-j) S + 3E + 6M + 1S \\
&= 2(s-2)E + (s-2)M + \frac{(s-1)(s-2)}{2} S + 3E + 6M + 1S \\
&= (2s-1)E + (s+4)M + \frac{s^2 - 3s + 4}{2} S
\end{aligned}$$

Once more, we do not count the generation of a quadratic non-residue $d$. Consequently, both the average-case and the worst-case complexity of our initial algorithm are in $O((\log_2 p)^4)$.
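The closed forms above can be checked numerically. The following Python sketch sums the operation counts term by term, following the accounting used in this section, so that they can be compared with the simplified expressions. The function names `average_counts` and `worst_counts` are illustrative and not part of the paper.

```python
from fractions import Fraction


def average_counts(s):
    """(E, M, S) counts in the average case, summed term by term."""
    calls = Fraction(s - 2, 2)                                # Hw(norm) on average
    squarings = Fraction(sum(s - j for j in range(2, s)), 2)  # j = 2, ..., s-1
    return (2 * calls + 3, calls + 6, squarings + 1)          # plus BaseCase: 3E+6M+1S


def worst_counts(s):
    """(E, M, S) counts in the worst case, summed term by term."""
    calls = s - 2                                             # all bits of norm are 1
    squarings = sum(s - j for j in range(2, s))
    return (2 * calls + 3, calls + 6, squarings + 1)


print(average_counts(12))  # (Fraction(13, 1), Fraction(11, 1), Fraction(57, 2))
print(worst_counts(12))    # (23, 16, 56)
```

For $s = 12$, this gives $13E + 11M + 28.5S$ on average and $23E + 16M + 56S$ in the worst case, in agreement with the closed forms.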

5.2. More Efficient Implementation

It is obvious that several steps of our algorithm (especially the steps that involve exponentiations) can be performed much more efficiently than in their raw implementation. A first solution is to precompute several powers of $d$, to keep track of $d^{\mathit{norm}}$ and $(d^{\mathit{norm}})^{\frac{t-1}{2}}$ as $\mathit{norm}$ is updated, and to efficiently recompute the value of $\mathit{temp}$ from Step 2 of FindPlace by using the previous values. Moreover, in this case, the final exponentiations (from BaseCase) can also be performed efficiently.

We will now examine the computations behind our algorithm more closely, in order to point out possible improvements, if precomputations can be afforded. We begin by defining the elements $D_j$, $0 \le j \le s - 1$, $D_j = D^{2^j}$, $D = d^t$ and $A_j$, $0 \le j \le s - 2$, $A_j = A^{2^j}$, $A = (2a)^t$. Let us denote $A_j \cdot D^{\mathit{temp\_norm}}$ by $\langle j, \mathit{temp\_norm} \rangle$, where $\mathit{temp\_norm}$ is in binary form. In our algorithm, we determine the value of $\mathit{norm} = (f_{s-3} \ldots f_0)_2$ by successively computing the digits $f_0, f_1, \ldots, f_{s-3}$, so that:

$$\begin{aligned}
T_{s-2} &= \langle s-2,\ f_0 \overbrace{00\ldots00}^{s-1\ 0\text{'s}} \rangle = 1 \\
T_{s-3} &= \langle s-3,\ f_1 f_0 \underbrace{00\ldots00}_{s-2\ 0\text{'s}} \rangle = 1 \\
&\ \ \vdots \\
T_2 &= \langle 2,\ f_{s-4} \ldots f_1 f_0 000 \rangle = 1 \\
T_1 &= \langle 1,\ f_{s-3} \ldots f_1 f_0 00 \rangle = -1
\end{aligned}$$

We remark that the last element $T_1$ is exactly the element from the Base Case: $(2ad^{2 \cdot \mathit{norm}})^{\frac{p-1}{2^{s-1}}}$.
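The digit-by-digit determination of $\mathit{norm}$ from the conditions $T_{s-2} = 1, \ldots, T_2 = 1, T_1 = -1$ can be sketched in Python as follows. This is the naive version, in which every $T_j$ is recomputed with full exponentiations, before any of the savings discussed next. The function name `norm_bits` is illustrative, and the quadratic non-residue $d$ is assumed to be supplied by the caller.

```python
def norm_bits(a, p, d):
    """Determine norm bit by bit from T_{s-2}, ..., T_2, T_1 (naive version).

    Assumes p = 1 (mod 4) prime, a a quadratic residue, d a non-residue.
    """
    s, t = 0, p - 1
    while t % 2 == 0:
        s, t = s + 1, t // 2
    A = pow(2 * a, t, p)          # A = (2a)^t
    D = pow(d, t, p)              # D = d^t
    norm = 0
    for j in range(s - 2, 0, -1):
        # T_j = A_j * D^(known bits of norm followed by j + 1 zero bits)
        T = pow(A, 1 << j, p) * pow(D, norm << (j + 1), p) % p
        want = p - 1 if j == 1 else 1
        if T != want:
            # Setting f_{s-2-j} multiplies T_j by D_{s-1} = -1.
            norm |= 1 << (s - 2 - j)
    return norm


print(norm_bits(2564, 12289, 19))  # prints: 705
```

The value 705 is the one obtained for $p = 12289$, $a = 2564$, $d = 19$ in Example 5.1.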

The reader will notice that for any $0 \le j \le s - 2$, we would compute $T_j = A_j \cdot D^{(f_{s-2-j} \ldots f_1 f_0 0 \ldots 0)_2}$ in a naive manner, by multiplying $A_j$ with all the $(2^i)$-th powers of $D$ corresponding to the 1 bits from $f_{s-2-j} \ldots f_1 f_0 0 \ldots 0$. In order to reduce the number of modular multiplications, let us choose a fixed, small integer $k$, with $k \ge 1$, and consider the $k$ terms $T_j, T_{j-1}, \ldots, T_{j-k+1}$, where $j - k + 1 > 1$. We obtain the following sequence:

$$\begin{aligned}
T_j &= \langle j,\ f_{s-2-j} f_{s-3-j} \ldots f_1 f_0 \underbrace{0\ldots0}_{j+1\ 0\text{'s}} \rangle = 1 \\
T_{j-1} &= \langle j-1,\ f_{s-2-j+1} f_{s-2-j} f_{s-3-j} \ldots f_1 f_0 \underbrace{0\ldots0}_{j\ 0\text{'s}} \rangle = 1 \\
&\ \ \vdots \\
T_{j-k+2} &= \langle j-k+2,\ f_{s-2-j+k-2} \ldots f_{s-2-j} f_{s-3-j} \ldots f_1 f_0 \underbrace{0\ldots0}_{j-k+3\ 0\text{'s}} \rangle = 1 \\
T_{j-k+1} &= \langle j-k+1,\ f_{s-2-j+k-1} \ldots f_{s-2-j} f_{s-3-j} \ldots f_1 f_0 \underbrace{0\ldots0}_{j-k+2\ 0\text{'s}} \rangle = 1
\end{aligned}$$

Importantly, the sequence $f_{s-3-j} \ldots f_1 f_0$ appears in all of the above terms. Let us denote the term $D^{f_{s-3-j} \ldots f_1 f_0 \overbrace{00\ldots00}^{j-k+2\ 0\text{'s}}}$ by $\mathit{aux}$.

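We notice that $T_j, T_{j-1}, \ldots, T_{j-k+1}$ can be computed in the following way, once we have determined $T_{j+1}$ (and, implicitly, $f_{s-3-j}, \ldots, f_1, f_0$):

$$\begin{aligned}
T_j &= A_j \cdot \underbrace{D_{s-1}^{f_{s-2-j}}}_{S_0} \cdot \mathit{aux}^{2^{k-1}} \\
T_{j-1} &= A_{j-1} \cdot \underbrace{D_{s-1}^{f_{s-2-j+1}}}_{S_1} \cdot \underbrace{D_{s-2}^{f_{s-2-j}}}_{S_0} \cdot \mathit{aux}^{2^{k-2}} \\
&\ \ \vdots \\
T_{j-k+2} &= A_{j-k+2} \cdot \underbrace{D_{s-1}^{f_{s-2-j+k-2}}}_{S_{k-2}} \cdots \underbrace{D_{s-k+2}^{f_{s-2-j+1}}}_{S_1} \cdot \underbrace{D_{s-k+1}^{f_{s-2-j}}}_{S_0} \cdot \mathit{aux}^{2} \\
T_{j-k+1} &= A_{j-k+1} \cdot \underbrace{D_{s-1}^{f_{s-2-j+k-1}}}_{S_{k-1}} \cdot \underbrace{D_{s-2}^{f_{s-2-j+k-2}}}_{S_{k-2}} \cdots \underbrace{D_{s-k+1}^{f_{s-2-j+1}}}_{S_1} \cdot \underbrace{D_{s-k}^{f_{s-2-j}}}_{S_0} \cdot \mathit{aux}
\end{aligned}$$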

We added underbraces with subscripts to the terms in order to highlight the fact that it is useful to see terms which have the same exponent as being part of a larger set. We will show how we can efficiently generate the powers of $\mathit{aux}$ and the sets $S_w$, for $0 \le w \le k - 1$.

Firstly, we compute $\mathit{aux}$ in the regular manner, and then $\mathit{aux}^{2^i}$, for $1 \le i \le k - 1$, through $k - 1$ modular squarings. This way, we use only $k - 1$ modular squarings, instead of $(k - 1) \cdot Hw(f_{s-3-j} \ldots f_1 f_0)$ regular modular multiplications.

Secondly, we still have to compute the sets of terms $S_w = \{ D_{s-k+w+z}^{f_{s-2-j+w}} \mid 0 \le z \le k - 1 - w \}$, for $0 \le w \le k - 1$. Intuitively, the set $S_w$ contains all the terms located $w$ positions below the main diagonal, for $0 \le w \le k - 1$. For each $w$, if $f_{s-2-j+w} = 0$ (as $T_{s-2-j+w} = 1$), we do not have to compute anything because $S_w = \{1\}$, while if $f_{s-2-j+w} = 1$ (as $T_{s-2-j+w} = -1$), each set can be easily generated by taking $D_{s-k+w}$ and applying $k - w - 1$ modular squarings.

Inner Loop
  1. compute $\mathit{aux}$
  2. set $T_{j-k+1} := A_{j-k+1} \cdot \mathit{aux}$;
  3. for $w = 0$ to $k - 1$ do:
  4.   determine $f_{s-2-j+w}$
  5.   update $T_{j-k+1}$ by setting $T_{j-k+1} := T_{j-k+1} \cdot D_{s-k+w}$
  6.   for $i = 2$ to $k - w - 1$ do:
  7.     update $T_{j-k+i}$ by setting $T_{j-k+i} := T_{j-k+i-1}^2$

Figure 6: Inner Loop

Thirdly, by storing the terms Tj , Tj−1 , ..., Tj−k+1 we can efficiently combine the two aforementioned

improvements as presented in Figure 6.

For each value of w between 0 and k − 1, running the inner loop generates both the necessary powers

of aux and the set Sw . Once the outer loop is completed, we have determined k new digits from the

binary representation of norm. We repeat this procedure until we know all the bits of norm.

Finally, we can also simplify the last computations of our initial algorithm. The standard procedure would be to first calculate the terms $\alpha = (2ad^{2 \cdot \mathit{norm}})^{\frac{t-1}{2}}$ and $\beta = (2ad^{2 \cdot \mathit{norm}})\alpha^2$, and then to generate the square root $b = \alpha a(\beta - 1)d^{\mathit{norm}}$. However, if we elaborate the expression of $b$ we obtain

$$\begin{aligned}
b &= a \alpha d^{\mathit{norm}} (\beta - 1) \\
&= a (2a)^{\frac{t-1}{2}} \left(d^{2 \cdot \mathit{norm}}\right)^{\frac{t-1}{2}} d^{\mathit{norm}} \left((2a)^t (d^{2 \cdot \mathit{norm}})^t - 1\right) \\
&= a (2a)^{\frac{t-1}{2}} d^{t \cdot \mathit{norm}} \left((2a)^t (d^{t \cdot \mathit{norm}})^2 - 1\right)
\end{aligned}$$

Once we have computed $(2a)^{\frac{t-1}{2}}$, we can then easily modify the final run of the inner loop in order to generate $D^{\mathit{norm}} = (d^t)^{\mathit{norm}}$ and to compute the value of $b$. When combined, our suggestions lead to a significantly improved version of our initial algorithm. The precomputation stage is as follows:

Precomputation(p, a, k)
input:  $p$ prime such that $p \equiv 1 \bmod 4$, $p - 1 = 2^s t$, $t$ odd;
        $k$, $1 \le k \le s$, a precomputation parameter;
output: $D_j$, $0 \le j \le s - 1$, $D_j = D^{2^j}$, $D = d^t$, $d$ quadratic non-residue,
        $\mathit{auxA} = (2a)^{\frac{t-1}{2}}$, $A = (2a)^t$ and $A_{s-1-k \cdot i}$, $1 \le i \le q$,
        where $q = \lfloor \frac{s-2}{k} \rfloor$, $A_{s-1-k \cdot i} = (2a)^{t 2^{s-1-k \cdot i}}$
begin
  1. generate and store $d$ (by any means available);
  2. compute and store $D$ and $D_j$, $0 \le j \le s - 1$
     (by square-and-multiply exponentiation);
  3. compute and store $\mathit{auxA}$, $A$ and $A_{s-1-k \cdot i}$, $1 \le i \le q$
     (by square-and-multiply exponentiation);
end

Figure 7: Precomputation Subroutine
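A minimal Python sketch of the precomputation stage is given below. It relies on Python’s built-in `pow` for modular exponentiation instead of an explicit square-and-multiply routine, and it takes the non-residue $d$ as a parameter instead of generating it. Both choices, as well as the name `precompute` and the dictionary used for the stored powers of $A$, are assumptions made here for illustration.

```python
def precompute(p, a, k, d):
    """Return (D, auxA, A, stored_A) as described in Figure 7.

    D[j] = D^(2^j) for 0 <= j <= s-1 with D = d^t;
    stored_A maps the index s-1-k*i to A^(2^(s-1-k*i)) for 1 <= i <= q.
    """
    s, t = 0, p - 1
    while t % 2 == 0:
        s, t = s + 1, t // 2
    D = [pow(d, t, p)]
    for _ in range(s - 1):
        D.append(D[-1] * D[-1] % p)          # successive squarings of D
    aux_a = pow(2 * a, (t - 1) // 2, p)      # auxA = (2a)^((t-1)/2)
    A = aux_a * aux_a * (2 * a) % p          # A = (2a)^t
    q = (s - 2) // k
    stored_A = {s - 1 - k * i: pow(A, 1 << (s - 1 - k * i), p)
                for i in range(1, q + 1)}
    return D, aux_a, A, stored_A


D, aux_a, A, stored_A = precompute(12289, 2564, 3, 19)
print(D[:3], aux_a, A, sorted(stored_A))
# prints: [6859, 3589, 2049] 5128 8835 [2, 5, 8]
```

When $p$ is fixed, the list `D` depends only on $p$ and $d$ and could be cached across calls, while the values derived from `a` must be recomputed for each input.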

We have precomputed all the required powers of $D$, but only certain powers of $A$. It is not necessary to keep all the powers of $A$, since the missing powers can be generated as they are needed. This is because the algorithm behind the third improvement uses only one stored power of $A$ and implicitly employs $k - 1$ other powers of $A$, which are kept only for the duration of the outer loop. We have also computed the term $\mathit{auxA} = (2a)^{\frac{t-1}{2}}$, which is part of the final improvement. Moreover, we assume that we have enough memory capacity to store $k$ numbers, namely $ACC_h$, where $1 \le h \le k$. These numbers are exactly $T_j, T_{j-1}, \ldots, T_{j-k+1}$, as used in the description of Inner Loop.

The main part of our improved algorithm is presented in Figure 8. Before running the actual algorithm, the Precomputation subroutine must be called. Note, however, that if p is a priori known, Steps

1 and 2 from Precomputation need to be performed only once (and this may be done in advance),

while Step 3 must be repeated for each a.

Improved Generalized Atkin Algorithm(p, a, k)
input:  $p$ prime such that $p \equiv 1 \bmod 4$, $p - 1 = 2^s t$, $t$ odd;
        $a \in \mathbb{Z}_p^*$ a quadratic residue;
output: $b$, a square root of $a$ modulo $p$;
begin
  1.  $\mathit{step} := s - 2$;
  2.  $\mathit{auxnorm} := 0 = (e_{s-1} \ldots e_0)_2$; (the final $\mathit{auxnorm}$ is $4 \cdot \mathit{norm}$)
  3.  $q := \lfloor \frac{s-2}{k} \rfloor$;
  4.  $\mathit{rem} := (s - 2) \bmod k + 1$;
  5.  for $i = 1$ to $q$ do
  6.  begin
  7.    Complete Accumulator Update(step, k);
  8.    Complete Inner Loop(step, k);
  9.  end
  11. Final Accumulator Update and Inner Loop(step, k, rem);
  12. $b := a \cdot \mathit{auxA} \cdot \mathit{auxACC} \cdot (A \cdot \mathit{auxACC}^2 - 1)$;
  13. return $b$
end.

Figure 8: Improved Generalized Atkin Algorithm

The first two subroutines correspond to the Inner Loop (described in Figure 6) in the following manner:

• Complete Accumulator Update (presented in Figure 9) implements Steps 1 and 2, computing $\mathit{aux}$ and $T_{j-k+1}$.

• Complete Inner Loop (presented in Figure 10) implements the loop in Steps 3 through 7, computing the bits $f_{s-2-j}, \ldots, f_{s-3-j+k}$.

Final Accumulator Update and Inner Loop (presented in Figures 11 and 12) is an incomplete combination of a Complete Accumulator Update and a Complete Inner Loop, for determining the remaining bits of $\mathit{norm}$, since $s - 2$ may not be an exact multiple of $k$. Moreover, a slight adjustment is made in order to obtain the term $\mathit{auxACC} = D^{\mathit{norm}} = (d^t)^{\mathit{norm}}$. We consider the case $s = 2$ separately and set $\mathit{auxACC} := 1$ since this case does not fit in the general framework. For $s > 2$, the last part of this subroutine (Steps 15-34) computes the term $T_1$, which must be treated individually, as $T_1 = -1$ while all other $T_i$’s are equal to 1, for $2 \le i \le s - 2$.

Complete Accumulator Update(step, k)
begin
  1. $ACC_1 := A_{\mathit{step}-k+1} \cdot \prod_{j=\mathit{step}+2}^{s-1} (D_{j-k})^{e_j}$;
  2. for $j = 2$ to $k$ do $ACC_j := ACC_{j-1}^2$;
end

Figure 9: Complete Accumulator Update Subroutine

Complete Inner Loop(step, k)
begin
  1.  for $j = k$ downto 1 do
  2.  begin
  3.    $\mathit{auxnorm} := \mathit{auxnorm}/2$;
  4.    if $ACC_j = -1$ then
  5.    begin
  6.      $ACC_1 := ACC_1 \cdot D_{s-j}$;
  7.      for $h = 2$ to $j - 1$ do $ACC_h := ACC_{h-1}^2$;
  8.      $e_{s-1} := 1$;
  9.    end
  10.   $\mathit{step} := \mathit{step} - 1$;
  11. end
end

Figure 10: Complete Inner Loop Subroutine

Final Accumulator Update and Inner Loop(step, k, rem)
begin
  1.  $\mathit{auxACC} := \prod_{j=\mathit{step}+2}^{s-1} (D_{j-\mathit{step}-2})^{e_j}$;
  2.  $ACC_1 := A \cdot \mathit{auxACC}^2$;
  3.  for $j = 2$ to $\mathit{rem}$ do $ACC_j := ACC_{j-1}^2$;
  4.  for $j = \mathit{rem} - 1$ downto 2 do
  5.  begin
  6.    $\mathit{auxnorm} := \mathit{auxnorm}/2$;
  7.    if $ACC_j = -1$ then
  8.    begin
  9.      $\mathit{auxACC} := \mathit{auxACC} \cdot D_{s-1-j}$;
  10.     $ACC_1 := A \cdot \mathit{auxACC}^2$;
  11.     for $h = 2$ to $j - 1$ do $ACC_h := ACC_{h-1}^2$;
  12.     $e_{s-1} := 1$;
  13.   end
  14. end
  15. if $\mathit{rem} = 1$ then
  16.   if $s = 2$ then $\mathit{auxACC} := 1$;
  17.   else
  18.     if $e_{s-1} = 0$ then
  19.     begin
  20.       $\mathit{auxACC} := \mathit{auxACC} \cdot D_{s-3}$;
  21.       $e_{s-1} := 1$;
  22.     end
  23.     else begin
  24.       $\mathit{auxACC} := \mathit{auxACC} \cdot D_{s-3} \cdot D_{s-2} \cdot D_{s-1}$;
  25.       $e_{s-1} := 0$;
  26.     end

Figure 11: Final Accumulator Update and Inner Loop Subroutine

  27. else begin
  28.   $\mathit{auxnorm} := \mathit{auxnorm}/2$;
  29.   if $ACC_2 = 1$ then
  30.   begin
  31.     $\mathit{auxACC} := \mathit{auxACC} \cdot D_{s-3}$;
  32.     $e_{s-1} := 1$;
  33.   end
  34. end
end

Figure 12: Final Accumulator Update and Inner Loop Subroutine (continued)

Example 5.1 illustrates the application of our improved algorithm.

Example 5.1. Let us consider $p = 12289$ ($s = 12$, $t = 3$) and $a = 2564$ (2564 is a quadratic residue modulo 12289). We choose $d = 19$, $k = 3$ (therefore, $q = 3$), and obtain the following values:

| $i$ | $D_i$ | $A_i$ |
|-----|-------|-------|
| 0 | 6859 | 8835 |
| 1 | 3589 | 9786 |
| 2 | 2049 | 9908 |
| 3 | 7852 | 3932 |
| 4 | 12280 | 1062 |
| 5 | 81 | 9545 |
| 6 | 6561 | 8668 |
| 7 | 10643 | 11567 |
| 8 | 5736 | 5146 |
| 9 | 4043 | 10810 |
| 10 | 1479 | 12288 |
| 11 | 12288 | - |

However, we will only store the $D_i$'s, for $0 \le i \le 11$, as well as $A_0$, $A_2$, $A_5$, $A_8$ and $aux_A = 5128$. We obtain $step = 10$, $aux_{norm} = 0$ and $rem = 2$.

For $i = 1$, we update the accumulators so that $ACC_1 = A_8 = 5146$, $ACC_2 = A_9 = 10810$ and $ACC_3 = A_{10} = -1$.

Entering the Complete Inner Loop, we have:

• since $ACC_3 = -1$, we have $ACC_1 = ACC_1 \cdot D_9 = 1$ and $ACC_2 = ACC_1^2 = 1$. Moreover, $e_{11} = 1$, $aux_{norm} = 0/2 + 2048 = 2048$ and $step = 9$;

• since $ACC_2 = 1$, we get $aux_{norm} = 2048/2 = 1024$ and $step = 8$;

• since $ACC_1 = 1$, we get $aux_{norm} = 1024/2 = 512$ and $step = 7$;

For $i = 2$, we update the accumulators so that $ACC_1 = 1$, $ACC_2 = 1$ and $ACC_3 = 1$.

Entering the Complete Inner Loop, we have:


• since $ACC_3 = 1$, we get $aux_{norm} = 512/2 = 256$ and $step = 6$;

• since $ACC_2 = 1$, we get $aux_{norm} = 256/2 = 128$ and $step = 5$;

• since $ACC_1 = 1$, we get $aux_{norm} = 128/2 = 64$ and $step = 4$;

For $i = 3$, we update the accumulators so that $ACC_1 = 8246$, $ACC_2 = 1479$ and $ACC_3 = -1$.

Entering the Complete Inner Loop, we have:

• since $ACC_3 = -1$, we have $ACC_1 = ACC_1 \cdot D_9 = 10810$ and $ACC_2 = ACC_1^2 = -1$. Furthermore, $e_{11} = 1$, $aux_{norm} = 64/2 + 2048 = 2080$ and $step = 3$;

• since $ACC_2 = -1$, we have $ACC_1 = ACC_1 \cdot D_{10} = 1$. Furthermore, $e_{11} = 1$, $aux_{norm} = 2080/2 + 2048 = 3088$ and $step = 2$;

• since $ACC_1 = 1$, we get $aux_{norm} = 3088/2 = 1544$ and $step = 1$;

We now perform the Final Accumulator Update and Inner Loop. Thus, we obtain $aux_{ACC} = D_0 \cdot D_6 \cdot D_7 = 1490$, $ACC_1 = A \cdot aux_{ACC}^2 = -1$ and $ACC_2 = ACC_1^2 = 1$. Since $ACC_2 = 1$, we obtain $e_{11} = 1$, $aux_{norm} = 1544/2 + 2048 = 2820$ (thus, $norm = 2820/4 = 705$) and $aux_{ACC} = aux_{ACC} \cdot D_9 = 2460$. The final computation gives us $b = a \cdot aux_A \cdot aux_{ACC} \cdot (A \cdot aux_{ACC}^2 - 1) = 2564 \cdot 5128 \cdot 2460 \cdot (10810 - 1) = 253$.
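The values used in Example 5.1 can be cross-checked with a few lines of Python. This is only a sanity-check sketch, not the improved algorithm itself. It assumes that $D_i = d^{t \cdot 2^i} \bmod p$ and that $A_i = (2a)^{t \cdot 2^i} \bmod p$; the second formula is an assumption inferred from the tabulated values rather than taken from this section. The variable names are illustrative.

```python
# Parameters of Example 5.1
p, a, d = 12289, 2564, 19
s, t = 12, 3
assert p == (1 << s) * t + 1  # p = 2^s * t + 1

# D_i = d^(t * 2^i) mod p, for 0 <= i <= s - 1
D = [pow(d, t << i, p) for i in range(s)]

# Assumption (inferred from the table): A_i = (2a)^(t * 2^i) mod p,
# for 0 <= i <= s - 2
A = [pow(2 * a, t << i, p) for i in range(s - 1)]

# The square root reported at the end of the example
b = 253
is_root = (b * b) % p == a

for i in range(s):
    a_i = A[i] if i < s - 1 else "-"
    print(i, D[i], a_i)
print("b is a square root of a:", is_root)
```

The printed rows can be compared line by line with the table of Example 5.1; the last line confirms that the reported $b$ squares to $a$ modulo $p$.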

5.3. Average-Case and Worst-Case Complexity Analysis for the Improved Algorithm

In the average case, we obtain the following complexities, based on the fact that norm has around s/2

bits equal to 1 in its representation:

• If p is a priori known, Precomputation takes 1E for the terms involving A (the computation of

the terms involving D can be performed in advance). If p is not a priori known, Precomputation

takes 2E, which means 1E for terms involving D and 1E for the terms involving A.

• Complete Accumulator Update takes $\frac{s}{4}$M and $(k-1)$S (since, on average, we use $s/2$ bits from norm, each of which can be 0 or 1, with equal probability).

• Complete Inner Loop takes $\frac{k}{2}$M + $\frac{k(k-1)}{4}$S (since we use $k$ bits from norm, each of which can be 0 or 1, with equal probability).

• Final Accumulator Update and Inner Loop takes $2 \cdot \frac{k}{2}$M + $\frac{s}{4}$M + $(k-1)$S + $\frac{k}{2}$M + $\frac{k(k-1)}{4}$S.

• The final computation of b takes 4M + 1S.

The estimate does not include the generation of a quadratic non-residue $d$. In general, the computation takes about

\[ 2E + \frac{s}{k}\left(\frac{s}{4}M + kS + \frac{k}{2}M + \frac{k(k-1)}{4}S\right) + (k+4)M + 1S. \]

This value is around

\[ 2E + \frac{3s}{4}S + \frac{s}{2}M + \frac{1}{4}\left(\frac{s^2}{k}\right)M + \frac{1}{4}(sk)S + kM. \]

Taking $k = \lceil \sqrt{s} \rceil$ (the optimal choice) leaves us with

\[ 2E + \frac{3s}{4}S + \frac{s}{2}M + \frac{1}{4}(s\lceil \sqrt{s} \rceil)M + \frac{1}{4}(s\lceil \sqrt{s} \rceil)S + \lceil \sqrt{s} \rceil M. \]

If $p$ is a priori known, we obtain

\[ 1E + \frac{3s}{4}S + \frac{s}{2}M + \frac{1}{4}(s\lceil \sqrt{s} \rceil)M + \frac{1}{4}(s\lceil \sqrt{s} \rceil)S + \lceil \sqrt{s} \rceil M. \]

In this case, we need $s$ precomputed elements and memory for just $2\lceil \sqrt{s} \rceil$ additional elements.

Moving on to the worst case, we consider the fact that norm’s binary representation has roughly s

bits which are equal to 1. This results in the following complexities:

• Precomputation - same as for the average case.

• Complete Accumulator Update takes $\frac{s}{2}$M and $(k-1)$S (since, on average, we use $s/2$ bits from norm, and all of norm's bits are equal to 1).

• Complete Inner Loop takes $k$M + $\frac{k(k-1)}{2}$S (since we use $k$ bits from norm, and all of norm's bits are equal to 1).

• Final Accumulator Update and Inner Loop takes $2k$M + $\frac{s}{2}$M + $(k-1)$S + $k$M + $\frac{k(k-1)}{2}$S.

• The final computation of b takes 4M + 1S, same as for the average case.

Again, we exclude the generation of a quadratic non-residue $d$. The computation takes at most about

\[ 2E + \frac{s}{k}\left(\frac{s}{2}M + kS + kM + \frac{k(k-1)}{2}S\right) + (2k+4)M + 1S. \]

This value is approximately

\[ 2E + \frac{s}{2}S + sM + \frac{1}{2}\left(\frac{s^2}{k}\right)M + \frac{1}{2}(sk)S + 2kM. \]

If we set $k = \lceil \sqrt{s} \rceil$ (the optimal choice), we have

\[ 2E + \frac{s}{2}S + sM + \frac{1}{2}(s\lceil \sqrt{s} \rceil)M + \frac{1}{2}(s\lceil \sqrt{s} \rceil)S + 2\lceil \sqrt{s} \rceil M. \]

If $p$ is a priori known, Precomputation takes only 1E, and we obtain

\[ 1E + \frac{s}{2}S + sM + \frac{1}{2}(s\lceil \sqrt{s} \rceil)M + \frac{1}{2}(s\lceil \sqrt{s} \rceil)S + 2\lceil \sqrt{s} \rceil M. \]

As in the average case, we need $s$ precomputed elements and memory for just $2\lceil \sqrt{s} \rceil$ additional elements. Consequently, both the average-case and the worst-case complexity of our improved algorithm are in $O((\log_2 p)^{3.5})$.
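The general cost expressions of this section are easy to evaluate for concrete parameters. The sketch below encodes the average-case and worst-case expressions as read from the text, excluding the exponentiations E, and returns the number of regular multiplications (M) and squarings (S). The function names are illustrative, and exact rationals are used because $s/k$ need not be an integer.

```python
from fractions import Fraction
from math import isqrt


def ceil_sqrt(s):
    """Smallest integer r with r * r >= s."""
    r = isqrt(s)
    return r if r * r == s else r + 1


def average_cost(s, k):
    """(M, S) counts from the average-case expression, without the E terms:
    (s/k) * ((s/4)M + kS + (k/2)M + (k(k-1)/4)S) + (k+4)M + 1S."""
    rounds = Fraction(s, k)
    m = rounds * (Fraction(s, 4) + Fraction(k, 2)) + (k + 4)
    sq = rounds * (k + Fraction(k * (k - 1), 4)) + 1
    return m, sq


def worst_cost(s, k):
    """(M, S) counts from the worst-case expression, without the E terms:
    (s/k) * ((s/2)M + kS + kM + (k(k-1)/2)S) + (2k+4)M + 1S."""
    rounds = Fraction(s, k)
    m = rounds * (Fraction(s, 2) + k) + (2 * k + 4)
    sq = rounds * (k + Fraction(k * (k - 1), 2)) + 1
    return m, sq


if __name__ == "__main__":
    s = 16
    k = ceil_sqrt(s)
    print("average:", average_cost(s, k))
    print("worst:  ", worst_cost(s, k))
```

For instance, with $s = 16$ and $k = 4$ the average-case expression evaluates to 32M + 29S and the worst-case expression to 60M + 41S, in addition to the exponentiations.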

5.4. Comparisons with Other Methods

In this section we will compare our algorithm with the most important square root algorithms, namely Tonelli-Shanks and Cipolla-Lehmer. After a short overview of these algorithms, we will put forward a computational comparison of the three algorithms.

5.4.1. Tonelli-Shanks Algorithm

The Tonelli-Shanks algorithm ([22], [20]) reduces the problem of computing a square root to another famous problem, namely the discrete logarithm problem: given a finite cyclic group $G$, a generator $\alpha$ of it, and an arbitrary element $\beta \in G$, determine the unique $k$, $0 \le k \le |G| - 1$, such that $\beta = \alpha^k$. The element $k$ will be referred to as the discrete logarithm of $\beta$ in base $\alpha$, denoted by $k = \log_\alpha \beta$. Although this problem is considered intractable in general, if the order of the group is smooth, i.e., its prime factors do not exceed a given bound, there is an efficient algorithm due to Pohlig and Hellman [16].

Let us consider an odd prime $p$, $p = 2^s t + 1$, with $s \ge 2$ and $t$ odd, a quadratic residue $a$ and a quadratic non-residue $d$ (modulo $p$). The Tonelli-Shanks algorithm is based on the following simple facts:

1. Let $\alpha = d^t$. Then $|\langle \alpha \rangle| = 2^s$, or, equivalently, $ord(\alpha) = 2^s$, where $\langle \alpha \rangle$ denotes the subgroup induced by $\alpha$, and $ord(\alpha)$ represents the order of $\alpha$ (in $Z_p^*$).

2. Let $\beta = a^t$. Then $\beta \in \langle \alpha \rangle$ and $\log_\alpha \beta$ is even (this discrete logarithm is considered with respect to the subgroup induced by $\alpha$).

Thus, if we can determine $k$ such that $\beta = \alpha^k$, then $\sqrt{a}$ can be computed as $\sqrt{a} = a^{\frac{t+1}{2}} (d^{-1})^{\frac{k}{2} t}$. Indeed, $\left(a^{\frac{t+1}{2}} (d^{-1})^{\frac{k}{2} t}\right)^2 = a^{t+1} (d^{kt})^{-1} = a^{t+1} a^{-t} = a$.

Thus, the difficult part is finding $k$, the discrete logarithm of $\beta$ in base $\alpha$ (in the subgroup $\langle \alpha \rangle$ of order $2^s$). Tonelli and Shanks compute the element $k$ bit by bit. Lindhurst [14] has proven that the Tonelli-Shanks algorithm requires on average two exponentiations, $\frac{s^2}{4}$ multiplications, and two quadratic character evaluations, with the worst-case complexity $O((\log_2 p)^4)$.
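The discrete-logarithm view described above can be sketched directly in Python. This is a minimal illustration under stated assumptions, not the optimized procedure analysed by Lindhurst: it assumes that $a$ is a quadratic residue and that a quadratic non-residue $d$ is supplied by the caller, and it recovers the bits of $k$ from the least significant one upwards. The function name is hypothetical.

```python
def sqrt_via_dlog(a, p, d):
    """Square root of a quadratic residue a modulo an odd prime p,
    given a quadratic non-residue d, via beta = alpha^k with
    alpha = d^t and beta = a^t, where p - 1 = 2^s * t and t is odd."""
    # Write p - 1 = 2^s * t with t odd.
    s, t = 0, p - 1
    while t % 2 == 0:
        s += 1
        t //= 2

    alpha = pow(d, t, p)               # element of order 2^s
    beta = pow(a, t, p)                # lies in the subgroup <alpha>
    alpha_inv = pow(alpha, p - 2, p)   # inverse by Fermat's little theorem

    # Recover k bit by bit. Invariant: gamma = beta * alpha^(-k), where k
    # holds the bits found so far, so the exponent of gamma is a multiple
    # of 2^i at the start of iteration i.
    k = 0
    gamma = beta
    for i in range(s):
        if pow(gamma, 1 << (s - 1 - i), p) != 1:
            k |= 1 << i
            gamma = gamma * pow(alpha_inv, 1 << i, p) % p

    # k is even because a is a quadratic residue.
    d_inv = pow(d, p - 2, p)
    return pow(a, (t + 1) // 2, p) * pow(d_inv, (k // 2) * t, p) % p


if __name__ == "__main__":
    p, a, d = 12289, 2564, 19   # parameters of Example 5.1
    r = sqrt_via_dlog(a, p, d)
    print(r, (r * r) % p == a)
```

Each recovered bit costs a chain of squarings of length up to $s$, which is the source of the quadratic dependence on $s$ mentioned in the text.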

Bernstein [5] has proposed a method of computing $w$ bits of $k$ at a time. His algorithm involves an exponentiation and $\frac{s^2}{2w^2}$ multiplications, with a precomputation phase that additionally requires two quadratic character evaluations on average, an exponentiation, and about $2^w \frac{s}{w}$ multiplications, producing a table with $2^w \frac{s}{w}$ precomputed powers of $\alpha$.

5.4.2. Cipolla-Lehmer Algorithm

The following square root algorithm is due to Cipolla [6] and Lehmer [12]. Cipolla's method is based on arithmetic in quadratic extension fields, which is briefly recalled below.

Let us consider an odd prime $p$ and a quadratic residue $a$ modulo $p$. We first generate an element $z \in Z_p^*$ such that $z^2 - a$ is a quadratic non-residue. The extension field $Z_p(\sqrt{z^2 - a})$ is constructed as follows:

• its elements are pairs $(x, y) \in Z_p^2$;

• the addition is defined as $(x, y) + (x', y') = (x + x', y + y')$;

• the multiplication is defined as $(x, y) \cdot (x', y') = (xx' + yy'(z^2 - a), xy' + x'y)$;

• the additive identity is $(0, 0)$, and the multiplicative identity is $(1, 0)$;

• the additive inverse of $(x, y)$ is $(-x, -y)$ and its multiplicative inverse is $(x(x^2 - y^2(z^2 - a))^{-1}, -y(x^2 - y^2(z^2 - a))^{-1})$.

Cipolla has remarked that a square root of $a$ can be computed using the fact that

\[ (z, 1)^{\frac{p+1}{2}} = (\sqrt{a}, 0), \]

and his method requires two quadratic character evaluations on average and at most $6 \log_2 p$ multiplications ([7, page 96]).
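Cipolla's identity translates almost literally into code. The sketch below uses the pair representation and the multiplication rule listed above, finds $z$ by a simple linear search with Euler's criterion (an illustrative choice, not part of the original description), and raises $(z, 1)$ to the power $\frac{p+1}{2}$ by square-and-multiply. It assumes that $a$ is a non-zero quadratic residue modulo an odd prime $p$; the function name is hypothetical.

```python
def cipolla_sqrt(a, p):
    """Square root of a non-zero quadratic residue a modulo an odd prime p,
    computed as the first coordinate of (z, 1)^((p+1)/2)."""
    a %= p

    # Search for z such that w = z^2 - a is a quadratic non-residue
    # (Euler's criterion: w^((p-1)/2) = -1 mod p).
    z = 1
    while pow((z * z - a) % p, (p - 1) // 2, p) != p - 1:
        z += 1
    w = (z * z - a) % p

    def mul(u, v):
        # (x, y) * (x', y') = (x x' + y y' w, x y' + x' y)
        x1, y1 = u
        x2, y2 = v
        return ((x1 * x2 + y1 * y2 * w) % p, (x1 * y2 + x2 * y1) % p)

    # Right-to-left square-and-multiply in the extension field.
    result, base, e = (1, 0), (z, 1), (p + 1) // 2
    while e:
        if e & 1:
            result = mul(result, base)
        base = mul(base, base)
        e >>= 1

    x, y = result
    if y != 0:
        raise ValueError("a is not a quadratic residue modulo p")
    return x


if __name__ == "__main__":
    p, a = 12289, 2564   # parameters of Example 5.1
    r = cipolla_sqrt(a, p)
    print(r, (r * r) % p == a)
```

The second coordinate of the result vanishes exactly because the square root of a quadratic residue already lies in the base field.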

Lehmer's method is based on evaluating Lucas sequences. Let us consider the sequence $(V_k)_{k \ge 0}$ defined by $V_0 = 2$, $V_1 = z$, and $V_k = zV_{k-1} - aV_{k-2}$, for all $k \ge 2$, where $z \in Z_p^*$ is generated such that $z^2 - 4a$ is a quadratic non-residue. Lehmer has proved that

\[ \sqrt{a} = \frac{1}{2} V_{\frac{p+1}{2}}, \]

and his method requires two quadratic character evaluations on average and about $4.5 \log_2 p$ multiplications ([18]). Müller [15] has proposed an improved variant that requires only $2 \log_2 p$ multiplications, which will be referred to as the Improved Cipolla-Lehmer.
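Lehmer's formula can be illustrated with a straightforward binary ladder for $V_n$. The sketch below is not Müller's improved variant and makes no attempt to match the operation counts quoted above. It relies on the standard doubling identities $V_{2k} = V_k^2 - 2a^k$ and $V_{2k+1} = V_k V_{k+1} - z a^k$, and it finds $z$ by linear search with Euler's criterion. Both function names are hypothetical.

```python
def lucas_v(n, z, a, p):
    """V_n mod p for V_0 = 2, V_1 = z, V_k = z*V_{k-1} - a*V_{k-2}."""
    vk, vk1, qk = 2, z % p, 1          # (V_k, V_{k+1}, a^k) with k = 0
    for bit in bin(n)[2:]:             # bits of n, most significant first
        v2k = (vk * vk - 2 * qk) % p           # V_{2k}
        v2k1 = (vk * vk1 - z * qk) % p         # V_{2k+1}
        if bit == "0":                         # k -> 2k
            vk, vk1, qk = v2k, v2k1, qk * qk % p
        else:                                  # k -> 2k + 1
            v2k2 = (vk1 * vk1 - 2 * qk * a) % p    # V_{2k+2}
            vk, vk1, qk = v2k1, v2k2, qk * qk * a % p
    return vk


def lehmer_sqrt(a, p):
    """Square root of a non-zero quadratic residue a modulo an odd prime p,
    computed as V_{(p+1)/2} / 2."""
    a %= p
    # Search for z such that z^2 - 4a is a quadratic non-residue.
    z = 1
    while pow((z * z - 4 * a) % p, (p - 1) // 2, p) != p - 1:
        z += 1
    half = (p + 1) // 2                # inverse of 2 modulo p
    return lucas_v((p + 1) // 2, z, a, p) * half % p


if __name__ == "__main__":
    p, a = 12289, 2564   # parameters of Example 5.1
    r = lehmer_sqrt(a, p)
    print(r, (r * r) % p == a)
```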


5.4.3. Test Results

We have implemented Improved Generalized Atkin (Imp-Gen-Atk) and the fastest known algorithms, namely Tonelli-Shanks-Bernstein (Ton-Sha-Ber) and Improved Cipolla-Lehmer (Imp-Cip-Leh). For all pairs $(\log_2 p, s)$, with $\log_2 p \in \{128, 256, 512, 1024\}$ and $s \in \{4, 8, 16, 32, \frac{\log_2 p}{2}\}$, we have generated 32 pairs $(p, a)$, where $a$ is a quadratic residue modulo $p$, and we have counted the average number of modular squarings and regular modular multiplications. We have considered two cases, depending on whether $p$ is known a priori or not. We have not included the computation required for finding a quadratic non-residue modulo $p$. For exponentiation we have considered the simplest method, namely the square-and-multiply exponentiation. In case of an exponent $x$, this method requires $\log_2 x$ squarings and $Hw(x)$ regular multiplications.
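The operation counts of square-and-multiply can be observed directly by instrumenting the exponentiation. The following sketch uses the left-to-right variant that starts from the base itself, so it performs $\lfloor \log_2 x \rfloor$ squarings and $Hw(x) - 1$ regular multiplications, which agrees with the counts above up to one operation. Here $Hw(x)$ is taken to be the Hamming weight of $x$, and the function name is illustrative.

```python
def square_and_multiply(base, x, p):
    """Return (base^x mod p, squarings, multiplications) for x >= 1,
    using left-to-right binary exponentiation."""
    if x < 1:
        raise ValueError("exponent must be positive")
    result = base % p
    squarings = multiplications = 0
    # bin(x) looks like '0b1...'; skip the prefix and the leading 1 bit,
    # which is accounted for by initialising result with the base.
    for bit in bin(x)[3:]:
        result = result * result % p
        squarings += 1
        if bit == "1":
            result = result * base % p
            multiplications += 1
    return result, squarings, multiplications


if __name__ == "__main__":
    p = 12289
    x = (p + 1) // 2            # 6145 = 2^12 + 2^11 + 1
    print(square_and_multiply(19, x, p))
```

For the exponent $6145$, whose binary representation has 13 bits and Hamming weight 3, the sketch performs 12 squarings and 2 regular multiplications.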

For Improved Generalized Atkin we choose the optimal $k = \lceil \sqrt{s} \rceil$, requiring $s + 2\lceil \sqrt{s} \rceil$ stored values. For Tonelli-Shanks-Bernstein, given that the number of needed precomputed values is $2^w \frac{s}{w}$, in order to reach a number of elements comparable with ours, we choose the parameter $w = 2$ (that leads to $2s$ elements). We have to remark that the performance of Improved Cipolla-Lehmer does not depend on $s$.

We present the results for the case that $p$ is not known a priori in Tables 1-5. In each cell the first value indicates the average number of squarings and the second one denotes the average number of regular multiplications.

Method / log2 p    128          256          512           1024
Imp-Gen-Atk        256 / 138    512 / 262    1024 / 515    2048 / 1036
Ton-Sha-Ber        255 / 146    511 / 300    1023 / 562    2047 / 1076
Imp-Cip-Leh        126 / 124    254 / 252    510 / 508     1022 / 1020

Table 1. Comparison between methods for s = 4, where p is unknown

Method / log2 p    128          256          512           1024
Imp-Gen-Atk        260 / 136    515 / 267    1027 / 521    2052 / 1031
Ton-Sha-Ber        255 / 179    511 / 300    1023 / 562    2047 / 1076
Imp-Cip-Leh        126 / 124    254 / 252    510 / 508     1022 / 1020

Table 2. Comparison between methods for s = 8, where p is unknown

Method / log2 p    128          256          512           1024
Imp-Gen-Atk        270 / 138    527 / 272    1039 / 527    2062 / 1041
Ton-Sha-Ber        255 / 284    511 / 412    1023 / 668    2047 / 1178
Imp-Cip-Leh        126 / 124    254 / 252    510 / 508     1022 / 1020

Table 3. Comparison between methods for s = 16, where p is unknown


Method / log2 p    128          256          512           1024
Imp-Gen-Atk        303 / 171    559 / 297    1070 / 551    2096 / 1059
Ton-Sha-Ber        253 / 301    509 / 426    1021 / 686    2045 / 1195
Imp-Cip-Leh        126 / 124    254 / 252    510 / 508     1022 / 1020

Table 4. Comparison between methods for s = 32, where p is unknown

Method / log2 p    128          256           512            1024
Imp-Gen-Atk        392 / 217    904 / 526     2084 / 1334    5096 / 3721
Ton-Sha-Ber        253 / 723    509 / 2468    1021 / 9021    2045 / 34431
Imp-Cip-Leh        126 / 124    254 / 252     510 / 508      1022 / 1020

Table 5. Comparison between methods for $s = \frac{\log_2 p}{2}$, where p is unknown

When $p$ is not known a priori, Improved Cipolla-Lehmer is clearly the best, while our algorithm is comparable with Tonelli-Shanks-Bernstein.

We are interested in determining the values of $s$ for which our algorithm is more efficient than Improved Cipolla-Lehmer and/or Tonelli-Shanks-Bernstein, considering the case that $p$ is known a priori. We express 1E as $(\log_2 p)$S + $\frac{\log_2 p - s}{2}$M. To simplify the comparisons we no longer distinguish between squarings and regular multiplications.

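More precisely, let us first determine $s$ such that our algorithm is more efficient than Improved Cipolla-Lehmer in terms of total computation:

\[ \log_2 p + \frac{\log_2 p - s}{2} + \frac{3s}{4} + \frac{s}{2} + \frac{1}{4}(s\lceil \sqrt{s} \rceil) + \frac{1}{4}(s\lceil \sqrt{s} \rceil) + \lceil \sqrt{s} \rceil < 2\log_2 p \]

We obtain the following sequence of equivalent inequalities:

\[ \log_2 p + \frac{\log_2 p - s}{2} + \frac{s\lceil \sqrt{s} \rceil}{2} + \frac{5s}{4} + \lceil \sqrt{s} \rceil < 2\log_2 p \]

\[ \frac{s\lceil \sqrt{s} \rceil}{2} + \frac{3s}{4} + \lceil \sqrt{s} \rceil < \frac{\log_2 p}{2} \]

\[ s\lceil \sqrt{s} \rceil + \frac{3s}{2} + 2\lceil \sqrt{s} \rceil < \log_2 p \]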

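We now turn our attention to Tonelli-Shanks-Bernstein with the parameter $w = 2$. A more thorough analysis of this algorithm gives us $\log_2 p + \frac{\log_2 p - s}{2} + \frac{s^2}{8} + \frac{3s}{2}$ multiplications. Comparing this with the cost of our algorithm and cancelling the common terms $\log_2 p + \frac{\log_2 p - s}{2}$, we obtain the following inequality:

\[ \frac{s\lceil \sqrt{s} \rceil}{2} + \frac{5s}{4} + \lceil \sqrt{s} \rceil < \frac{s^2}{8} + \frac{3s}{2} \]

which leads to $s > 20$.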
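Both thresholds can be explored numerically. The sketch below evaluates the final inequality of the Cipolla-Lehmer comparison, $s\lceil \sqrt{s} \rceil + \frac{3s}{2} + 2\lceil \sqrt{s} \rceil < \log_2 p$, and the Tonelli-Shanks-Bernstein inequality for $w = 2$, each scaled by a constant so that only integer arithmetic is needed. The function names are illustrative, and the predicates reflect the simplified operation counts of this section rather than measured running times.

```python
from math import isqrt


def ceil_sqrt(s):
    """Smallest integer r with r * r >= s."""
    r = isqrt(s)
    return r if r * r == s else r + 1


def beats_cipolla_lehmer(s, log2_p):
    """s*ceil(sqrt(s)) + 3s/2 + 2*ceil(sqrt(s)) < log2(p), doubled."""
    c = ceil_sqrt(s)
    return 2 * s * c + 3 * s + 4 * c < 2 * log2_p


def beats_tonelli_shanks_bernstein(s):
    """s*ceil(sqrt(s))/2 + 5s/4 + ceil(sqrt(s)) < s^2/8 + 3s/2, times 8."""
    c = ceil_sqrt(s)
    return 4 * s * c + 10 * s + 8 * c < s * s + 12 * s


if __name__ == "__main__":
    for log2_p in (128, 256, 512, 1024):
        largest = max(s for s in range(2, log2_p)
                      if beats_cipolla_lehmer(s, log2_p))
        print(log2_p, "largest s beating Cipolla-Lehmer:", largest)
    smallest = min(s for s in range(2, 200)
                   if beats_tonelli_shanks_bernstein(s))
    print("smallest s beating Tonelli-Shanks-Bernstein:", smallest)
```

Under these counts, for $\log_2 p = 128$ the first inequality holds up to $s = 18$, while the second one fails at $s = 20$ (where both sides are equal) and holds from $s = 21$ onwards, in agreement with $s > 20$.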


We present the results for the case that $p$ is known a priori in Tables 6-10. We remind the reader that in each cell the first value indicates the average number of squarings and the second one denotes the average number of regular multiplications.

Method / log2 p    128          256          512          1024
Imp-Gen-Atk        128 / 72     256 / 133    512 / 269    1024 / 520
Ton-Sha-Ber        126 / 71     254 / 136    510 / 265    1022 / 522
Imp-Cip-Leh        126 / 124    254 / 252    510 / 508    1022 / 1020

Table 6. Comparison between methods for s = 4, where p is known a priori

Method / log2 p    128          256          512          1024
Imp-Gen-Atk        131 / 80     260 / 136    515 / 276    1028 / 522
Ton-Sha-Ber        127 / 112    255 / 176    511 / 305    1023 / 559
Imp-Cip-Leh        126 / 124    254 / 252    510 / 508    1022 / 1020

Table 7. Comparison between methods for s = 8, where p is known a priori

Method / log2 p    128          256          512          1024
Imp-Gen-Atk        141 / 79     270 / 155    526 / 262    1038 / 524
Ton-Sha-Ber        126 / 125    254 / 187    510 / 316    1022 / 574
Imp-Cip-Leh        126 / 124    254 / 252    510 / 508    1022 / 1020

Table 8. Comparison between methods for s = 16, where p is known a priori

Method / log2 p    128          256          512          1024
Imp-Gen-Atk        176 / 116    308 / 182    560 / 317    1072 / 560
Ton-Sha-Ber        126 / 246    254 / 310    510 / 441    1020 / 697
Imp-Cip-Leh        126 / 124    254 / 252    510 / 508    1022 / 1020

Table 9. Comparison between methods for s = 32, where p is known a priori


Method / log2 p    128          256           512            1024
Imp-Gen-Atk        268 / 186    649 / 453     1597 / 1257    4043 / 3425
Ton-Sha-Ber        126 / 686    254 / 2399    510 / 8895     1022 / 34172
Imp-Cip-Leh        126 / 124    254 / 252     510 / 508      1022 / 1020

Table 10. Comparison between methods for $s = \frac{\log_2 p}{2}$, where p is known a priori

6. Conclusions and Future Work

In this paper we have extended Atkin's algorithm to the general case $p \equiv 2^s + 1 \bmod 2^{s+1}$, for any $s \ge 2$, thus providing a complete solution for the case $p \equiv 1 \bmod 4$. Complexity analysis and comparisons with other methods have also been provided.

An interesting problem is extending our algorithm to arbitrary finite fields. In the case of the finite fields $GF(p^k)$, for $k$ odd, the efficient techniques described in [11], [9] can be adapted to our case in a straightforward manner, but, to the best of our knowledge, there are no similar techniques for the case $GF(p^k)$, for $k$ even. We will focus on this topic in our future work.

Acknowledgements

We would like to thank the two anonymous reviewers for their helpful suggestions.

References

[1] Ankeny, N. C.: The Least Quadratic Non Residue, Annals of Mathematics, 55(1), 1952, 65–72.

[2] Atkin, A.: Probabilistic primality testing (summary by F. Morain), Technical Report 1779, INRIA, 1992,

URL:http://algo.inria.fr/seminars/sem91-92/atkin.pdf.

[3] Atkin, A., Morain, F.: Elliptic Curves and Primality Proving, Mathematics of Computation, 61(203), 1993,

29–68.

[4] Bach, E., Shallit, J.: Algorithmic Number Theory, Volume I: Efficient Algorithms, MIT Press, 1996.

[5] Bernstein, D. J.: Faster square roots in annoying finite fields (preprint), 2001, URL:http://cr.yp.to/papers/sqroot.pdf.

[6] Cipolla, M.: Un metodo per la risoluzione della congruenza di secondo grado, Rendiconto dell’Accademia

delle Scienze Fisiche e Matematiche, Napoli, 9, 1903, 154–163.

[7] Crandall, R., Pomerance, C.: Prime Numbers. A Computational Perspective, Springer-Verlag, 2001.

[8] Eikenberry, S., Sorenson, J.: Efficient Algorithms for Computing the Jacobi Symbol, Journal of Symbolic

Computation, 26(4), 1998, 509–523.

[9] Han, D.-G., Choi, D., Kim, H.: Improved Computation of Square Roots in Specific Finite Fields, IEEE

Transactions on Computers, 58(2), 2009, 188–196.


[10] IEEE Std 1363-2000. Standard Specifications For Public-Key Cryptography, 2000.

[11] Kong, F., Cai, Z., Yu, J., Li, D.: Improved generalized Atkin algorithm for computing square roots in finite

fields, Information Processing Letters, 98(1), 2006, 1–5.

[12] Lehmer, D.: Computer technology applied to the theory of numbers, Studies in number theory (W. Leveque,

Ed.), 6, Prentice-Hall, 1969.

[13] Lemmermeyer, F.: Reciprocity Laws. From Euler to Eisenstein, Springer-Verlag, 2000.

[14] Lindhurst, S.: An analysis of Shanks’s algorithm for computing square roots in finite fields, in: Number

theory (R.Gupta, K. Williams, Eds.), American Mathematical Society, 1999, 231–242.

[15] Müller, S.: On the Computation of Square Roots in Finite Fields, Designs, Codes and Cryptography, 31(3),

2004, 301–312.

[16] Pohlig, S., Hellman, M.: An improved algorithm for computing logarithms over GF(p) and its cryptographic

significance, IEEE Transactions on Information Theory, 24, 1978, 106–110.

[17] Pomerance, C.: The Quadratic Sieve Factoring Algorithm, Advances in Cryptology: Proceedings of EUROCRYPT 84 (T. Beth, N. Cot, I. Ingemarsson, Eds.), 209, Springer-Verlag, 1985.

[18] Postl, H.: Fast evaluation of Dickson Polynomials, in: Contributions to General Algebra (D. Dorninger,

G. Eigenthaler, H. Kaiser, W. Müller, Eds.), vol. 6, B.G. Teubner, 1988, 223–225.

[19] Schoof, R.: Elliptic Curves Over Finite Fields and the Computation of Square Roots mod p, Mathematics of

Computation, 44(170), 1985, 483–494.

[20] Shanks, D.: Five number-theoretic algorithms, Proceedings of the second Manitoba conference on numerical

mathematics (R. Thomas, H. Williams, Eds.), 7, Utilitas Mathematica, 1973.

[21] Sze, T.-W.: On taking square roots without quadratic nonresidues over finite fields, Mathematics of Computation, 80(275), 2011, 1797–1811, (a preliminary version of this paper has appeared as arXiv e-print, available

at http://arxiv.org/abs/0812.2591v3).

[22] Tonelli, A.: Bemerkung über die Auflösung quadratischer Congruenzen, Göttinger Nachrichten, 1891, 344–

346.