AERA Report, Pilot Study 2013


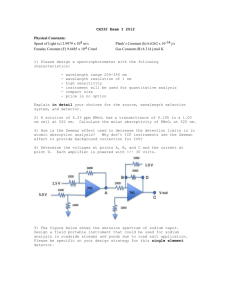

Inclusive Classroom Profile (ICP): Findings from the first US Demonstration Study

Paper presented at the AERA Annual Meeting, San Francisco, CA, April 27 – May 1, 2013

Elena P. Soukakou, Tracey A. West, Pam J. Winton, John H. Sideris

Frank Porter Graham Child Development Institute, University of North Carolina at Chapel Hill

Lia M. Rucker

North Carolina Rated License Assessment Project

Objectives

The Inclusive Classroom Profile (ICP) (Soukakou, 2012) is a classroom observation measure designed specifically to measure the quality of inclusive classroom practices. It is based on research evidence on the effectiveness of specialized instructional strategies for meeting the individual needs of preschool children with disabilities in inclusive settings (Odom et al., 2004; National Professional Development Center on Inclusion, 2011; Buysse & Hollingsworth, 2009). This session will share findings from the first demonstration study in the US conducted by FPG Child Development Institute, University of North Carolina. The study investigated the acceptability, reliability and validity of the ICP measure in collaboration with the NC Rated License Assessment Project, the Division of Child Development and Early Education, and the NC Department of Public Instruction, Exceptional Children.

This study involved field testing the ICP in 51 settings in North Carolina with the following objectives:

(1) Examine the acceptability of the ICP measure for program evaluation within an accountability structure such as quality rating systems.
(2) Assess the effectiveness of training procedures for ensuring accurate administration.
(3) Assess the psychometric properties of the ICP, including the measure’s inter-rater reliability, factor structure and construct validity.
(4) Explore the relationship between inclusive quality and various child and classroom characteristics (e.g.,
relationship between quality and teachers’ special education qualifications, number of children with disabilities and severity of disability).

Background/Theoretical Framework

Never has so much attention been paid to improving the quality of early childhood programs serving high-need children. Through the Race to the Top – Early Learning Challenge Program and other reform efforts, states are being challenged to develop systems for rating, monitoring and improving early learning and development programs. States need reliable, valid tools for measuring classroom quality that are sensitive to and inclusive of each and every child (Odom, Buysse, & Soukakou, 2011).

The ICP was developed in response to a lack of validated instruments designed specifically to measure the quality of inclusive practices (Odom & Bailey, 2001). It was designed to complement existing program quality measures with new quality indicators that assess specific classroom practices that support the developmental progress of children with disabilities in early childhood settings. In the form of a structured observation 7-point rating scale, the ICP includes 12 items that are assessed predominately through observation. A few quality indicators are assessed through teacher interview. Examples of the ICP items include the following: adaptation of space and materials, adult involvement in peer interactions, adaptation of group activities, support for communication, and family-professional partnerships.

The development of the ICP was founded on a recognized premise; that is, what constitutes quality in programs where diverse learners such as children with disabilities need additional supports is possibly more multifaceted than what current quality assessment systems and tools measure (Gallagher & Lambert, 2006; Odom & Bailey, 2001; Spiker et al., 2011).
An important assumption guiding the conceptualization of the ICP is that specialized instructional strategies that have research support are more likely to meet the needs of young children with disabilities in early childhood settings. For example, naturalistic instruction, which includes a set of practices such as incidental teaching methods, embedded strategies, scaffolding strategies, and peer-mediated interventions, has empirical support and is generally encouraged in inclusive classrooms (Odom et al., 2004; National Professional Development Center on Inclusion, 2011). The ICP has been used in a pilot study in the United Kingdom with promising results for the measure’s reliability and validity (Soukakou, 2012). This is the first US demonstration study leading to validation.

Methods

The ICP was field tested in 51 inclusive preschool classrooms. Criteria for program participation in the study were that the program was center-based, served preschool-age children (three to five years of age), and served at least one child and no more than 50% of children with an Individualized Education Plan (IEP). To field-test the ICP measure, each inclusive classroom had an ICP assessment conducted by a trained assessor over a four-month period. In addition, Early Childhood Environment Rating Scale assessments (ECERS-R; Harms, Clifford, & Cryer, 2005) were gathered in all classrooms during the same data collection period for comparison to the ICP. Each classroom observation lasted approximately three hours.

Classroom characteristics

Of the 51 settings, 39% were child care programs, 26% were developmental day programs, 26% were Head Start programs and 9% were public preschools. In total, classrooms included 150 children with an identified disability (that is, children with an IEP). The number of children with a disability per classroom ranged from one to eight, with each classroom serving on average 2.94 children with an IEP.
Teacher education level ranged from a high school diploma through a Master’s degree, with 51% of the teachers reporting having received a Bachelor’s degree.

Area and severity of disability

For each child with a disability (N = 150), teachers were asked to identify the child’s area and severity of disability.¹ Children’s disabilities were identified in the following areas: behavior/social (67%), gross motor (27%), fine motor coordination (45%), sensory integration (27%), and intentional communication (90%). With regard to severity of disability, 59% of the classrooms had at least one child with a disability identified at the ‘severe’ level (rated on a four-point scale: possible, mild, moderate, severe), while 88% of classrooms had at least one child with a moderate or severe level of disability.

Data sources

Four assessors from the North Carolina Rated License Assessment Project (NCRLAP) collected data using the following measures: the Inclusive Classroom Profile (ICP) (Soukakou, 2012), the ICP child and classroom demographic form, and the Early Childhood Environment Rating Scale-Revised (ECERS-R) (Harms, Clifford, & Cryer, 2005). The ECERS-R was selected for use in this study because it was one of the main measures that served as a foundation for developing the ICP. Given that the ECERS-R also measures provisions for children with disabilities, correlations between the two measures could provide important information about the construct validity of the ICP and the contribution of the ICP items above and beyond those included in the ECERS-R. The NCRLAP has the contract in NC to collect ECERS-R data for NC’s Quality Rating and Improvement System (QRIS). For this study, the assessors were formally trained in using the ICP measure and the classroom demographic form developed by the study researchers. The demographic information was gathered through teacher interview. Information on the acceptability and usability of the ICP was gathered through a social validity survey completed by the assessors.
Assessors also participated in a full-day focus group to provide feedback on the measure and its application.

Results and Conclusions

Objective 1: Examine the acceptability of the ICP measure for program evaluation.
Results from the social validity survey (1-5 point scale) indicated that assessors rated the importance of the constructs measured by the ICP very highly (m = 5) and that they would highly recommend the ICP measure to others (m = 5). With regard to the usability of the ICP, the assessors found the measure easy to use (m = 4). Finally, assessors rated the ‘Overview’ part of the training (information on administration and scoring, including video clips for practice purposes) as useful (m = 3.75), and all four assessors reported that they felt well prepared after the reliability training observations (m = 4). Findings from the focus-group feedback meeting confirmed the high level of acceptability of the ICP measure by the assessors who used the ICP in the study, and provided specific recommendations for improving the administration, scoring and training procedures.

Footnote 1: Teachers could identify one or more areas of need for each child with an IEP.

Objective 2: Examine the effectiveness of training procedures for accurate and reliable administration.
Assessors received a three-hour training session on ICP administration and scoring followed by four reliability observations in inclusive classrooms to assess accuracy of administration and reliability proficiency against the ICP author standard. Each assessor (n = 4) met a reliability proficiency criterion of 85% agreement within one scale point, maintained for three consecutive reliability observations against the ICP author’s rating. Mean inter-rater agreement across assessors was 98% with a range of 91-100%.

Objective 3: Assess the psychometric properties of the ICP, including the measure’s inter-rater reliability, factor structure and construct validity.
Inter-rater reliability was assessed in 9 reliability observations (18% of the sample), distributed over the four-month data collection period. The mean inter-rater agreement across reliability observations was 87% (within one scale point). Reliability at the item level was assessed via an intra-class correlation coefficient (ICC), which ranges from zero to one, where one indicates perfect agreement. For most items, agreement between raters was in an acceptable range (.51-.99) with a mean of .71. Only one item (Item 3) presented with low agreement (.11), indicating the need for further clarification of the item in the scoring protocol.

Accuracy of ICP administration by assessors against author standards was assessed in 9 classroom observations. The mean agreement between assessors’ scores and ICP author standards (consensus score) was 94% (within one scale point) and 75% (exact agreement), indicating that assessors maintained accurate administration of the ICP throughout the study.

Internal consistency was assessed through Cronbach’s alpha analysis for the scale’s 12 items. This analysis suggested that the scale’s items were highly consistent (α = .85).

Structural validity was assessed through exploratory factor analysis of the ICP items. A scree plot examination (see Figure 1) of the variance explained by the number of factors clearly showed a one-factor structure with good factor loadings (.34 - .84).

Construct validity was examined through correlation analysis between the ratings on the ICP and ratings on the Early Childhood Environment Rating Scale (ECERS-R; Harms, Clifford, & Cryer, 2005). A moderately high correlation was found between the total score of the ICP and the ECERS-R (rho = .48), suggesting that the two instruments are correlated but not measuring identical constructs.
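
The agreement and internal-consistency statistics reported for Objective 3 follow standard definitions. The sketch below is a minimal illustration in Python, not the study's analysis code: the function names and all ratings are hypothetical, made up only to show how percent agreement (exact and within one scale point) and Cronbach's alpha can be computed.

```python
from statistics import variance


def percent_agreement(rater_a, rater_b, tolerance=0):
    """Percent of items on which two raters differ by at most `tolerance` points."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    hits = sum(abs(a - b) <= tolerance for a, b in zip(rater_a, rater_b))
    return 100.0 * hits / len(rater_a)


def cronbach_alpha(observations):
    """Cronbach's alpha; `observations` holds one list of item scores per classroom."""
    k = len(observations[0])
    item_variances = [variance([row[i] for row in observations]) for i in range(k)]
    total_variance = variance([sum(row) for row in observations])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings on 12 items (7-point scale); not data from the study.
assessor = [5, 4, 6, 3, 7, 5, 4, 6, 2, 5, 6, 4]
author = [5, 5, 6, 5, 7, 4, 4, 6, 2, 5, 7, 4]

print(f"exact agreement: {percent_agreement(assessor, author):.1f}%")
print(f"within one point: {percent_agreement(assessor, author, tolerance=1):.1f}%")

# Hypothetical scores for four classrooms on three items.
scores = [[3, 4, 3], [5, 5, 6], [2, 3, 2], [6, 7, 6]]
print(f"Cronbach's alpha: {cronbach_alpha(scores):.2f}")
```

With these made-up ratings, eight of the twelve items match exactly and eleven of the twelve fall within one scale point, which shows why the within-one criterion yields higher agreement figures than exact agreement.
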
A pattern of weaker correlations between the ICP and certain ECERS-R subscales, such as ‘health and personal care routines’, which involves practices not measured by the ICP (rho = .21), and higher correlations with ECERS-R subscales that measure constructs more closely related to the ICP (‘interactions’; rho = .38), provides preliminary evidence for the construct validity of the ICP. Table 1 presents all correlations between the ICP and ECERS-R total and item/subscale scores.

Objective 4: Examine the relationship between the quality of observed inclusive practices and child and classroom characteristics.
Multiple regression analyses were conducted to explore the association between observed quality of inclusive practices (as measured by the ICP) and the following classroom characteristics that were predicted to be associated with higher quality on the ICP: teacher education level, teacher special education training (course hours in special education), number of children in the classroom with an IEP, ratio between children and adults, and program type. Teacher education level was correlated with ICP scores at r = .41, p = .003; number of course hours in special education at r = .36, p = .014; number of children with an IEP at r = .37, p = .007; and ratio between children and adults in the classroom at r = -.57, p < .001.

Simple mean differences between program types were tested with one-way ANOVA. It was expected that developmental day programs and perhaps Head Start programs would have higher ICP scores because of their histories in serving young children with disabilities. The model test was significant, F(3, 47) = 13.77, p < .05, and accounted for a large amount of the variance, R² = .47, indicating that differences in program type account for nearly half of the variance in ICP scores. The model partially confirmed the expected mean differences.
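
As a consistency check on the one-way ANOVA reported above, the proportion of variance explained can be recovered from the F statistic and its degrees of freedom. The check uses the standard identity for a one-way design and only the values given in the text:

$$
R^2 = \frac{df_1 \, F}{df_1 \, F + df_2} = \frac{3 \times 13.77}{3 \times 13.77 + 47} = \frac{41.31}{88.31} \approx .47
$$

This agrees with the reported value of .47.
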
Developmental day programs did indeed score highest and were significantly higher than child care programs but not significantly higher than the other two program types.

Given the high correlations of the ICP with the predicted variables described above and the differences between program types, an exploratory analysis was conducted to further test the relationship between the ICP and those predictors. The following predictors of ICP scores were tested in a hierarchical series of five models with a predictor variable added at each step: teacher education level, number of course hours in special education, number of children in the classroom with an IEP, ratio between children and adults, and program type. ECERS-R total score was also entered as a predictor. The initial model tested for differences in ICP scores based on program type. Adding the predictor variables in sequence enabled us to test not only the unique contribution of each of the predictor variables, but also whether the presence of one affects the apparent impact of the others. Of particular interest were the expected program type differences and whether those differences were collinear with the other predictors. The significant difference that was found for the child care programs compared to all other program types was maintained upon controlling for teacher education, ECERS-R total score, course hours in special education, number of children with an IEP, and adult-to-child ratio. No other predictors were significant.

Significance

This demonstration study provided further evidence for the reliability, validity, and usability of the ICP. Findings from the present study have implications for use of the ICP in research, quality rating systems and professional development.
For instance, as a research instrument, the ICP could be used to assess quality across inclusive programs as well as to evaluate the relationship between the quality of inclusive practices and outcomes for children with disabilities and their families. As one component of a quality rating system, the ICP could be used to evaluate the quality of inclusive practices. Finally, as a quality improvement tool, the information gathered with the ICP could inform professional development models and enhance program quality improvement activities.

References

Buysse, V., & Hollingsworth, H. L. (2009). Research synthesis points on early childhood inclusion: What every practitioner and all families should know. Young Exceptional Children, 11, 18–30.

Gallagher, P. A., & Lambert, R. G. (2006). Classroom quality, concentration of children with special needs and child outcomes in Head Start. Exceptional Children, 73(1), 31–53.

Harms, T., Clifford, R. M., & Cryer, D. (1998/2005). Early Childhood Environment Rating Scale-Revised Edition. New York, NY: Teachers College Press.

National Professional Development Center on Inclusion. (2011). Research synthesis points on quality inclusive practices. Chapel Hill: University of North Carolina, FPG Child Development Institute, Author. Retrieved from http://community.fpg.unc.edu/npdci

Odom, S. L., & Bailey, D. B. (2001). Inclusive preschool programs: Classroom ecology and child outcomes. In M. Guralnick (Ed.), Focus on change (pp. 253–276). Baltimore, MD: Brookes.

Odom, S. L., Buysse, V., & Soukakou, E. (2011). Inclusion for young children with disabilities: A quarter century of research perspectives. Journal of Early Intervention, 33, 344–356.

Odom, S. L., Vitztum, J., Wolery, R., Lieber, J., Sandall, S., Hanson, M. J., & Horn, E. (2004). Preschool inclusion in the United States: A review of research from an ecological systems perspective. Journal of Research in Special Educational Needs, 4, 17–49.

Soukakou, E. P. (2012).
Measuring quality in inclusive preschool classrooms: Development and validation of the Inclusive Classroom Profile (ICP). Early Childhood Research Quarterly, 27, 478–488.

Spiker, D., Hebbeler, K. M., & Barton, L. R. (2011). Measuring quality of ECE programs for children with disabilities. In M. Zaslow, I. Martinez-Beck, K. Tout, & T. Halle (Eds.), Quality measurement in early childhood settings (pp. 229–256). Baltimore, MD: Brookes.

Table 1. Rank-Order Correlations Between ICP and ECERS-R

| ECERS-R Scale | ICP Total |
|---|---|
| Space and Furnishings | 0.48*** |
| Personal Care | 0.21** |
| Language and Reasoning | 0.47*** |
| Program Structure | 0.29* |
| Activities | 0.30* |
| Interactions | 0.38** |
| Parent and Staff | 0.38** |
| ECERS Total Score | 0.48*** |

Note: *p<.05, **p<.01, ***p<.001

Figure 1. Scree plot displaying the amount of variance explained by the number of factors entered in ICP exploratory factor analysis.