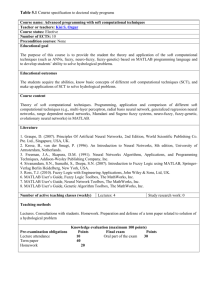

483-226


A Novel Neuro-Fuzzy Classifier Based on Weightless Neural Network

Raida Al-Alawi
Department of Computer Engineering
College of Information Technology
University of Bahrain
P. O. Box 32038
Bahrain

Abstract: - A single layer weightless neural network is used as the feature extraction stage of a new neuro-fuzzy classifier. The extracted feature vector measures the similarity of an input pattern to the different classification groups. A fuzzy inference system uses the feature vectors of the training set to generate the set of rules required to classify unknown patterns. The resulting architecture is called the Fuzzy Single Layer Weightless Neural Network (F-SLWNN). The performance of the F-SLWNN is contrasted with that of the original Winner-Takes-All Single Layer Weightless Neural Network (WTA-SLWNN). Comparative experimental results highlight the superior properties of the F-SLWNN classifier.

Key-Words: - Weightless neural networks, Fuzzy inference system, Pattern recognition, n-tuple networks.

1. Introduction

Weightless neural networks (WNNs) have received extensive research attention and are regarded as powerful learning machines, in particular as excellent pattern classifiers [1, 2]. WNNs possess many prominent features, such as their simple one-shot learning scheme [3], fast execution time and readiness for hardware implementation [4, 5, 6]. The simplest form of WNN is the n-tuple classifier, or Single Layer Weightless Neural Network (SLWNN), proposed by Bledsoe and Browning in 1959 [7]. The neurons in an SLWNN are RAM-like cells whose acquired knowledge is stored in look-up tables (LUTs). These models are called weightless networks because adaptation to new training patterns is performed by changing the contents of the LUTs rather than by adjusting weights, as in conventional neural network models. Although many weightless neural network models have been proposed in the literature, few combine these networks with fuzzy logic.

The work presented in this paper is a new approach to combining the excellent features of the SLWNN with a fuzzy rule-based system to generate a neuro-fuzzy classifier. Section 2 gives an overview of the F-SLWNN classifier. Section 3 describes the training and testing methodology of the proposed F-SLWNN. Section 4 presents the experimental results obtained when testing the performance of the F-SLWNN and contrasts them with those of the WTA-SLWNN. Finally, conclusions and future work are addressed in the last section.

2. The F-SLWNN Classifier

The F-SLWNN is a two-stage system. The first stage is a single layer weightless neural network (SLWNN) used to extract the similarity feature vectors from the training data, while the second stage is a fuzzy inference system (FIS) that builds the fuzzy classification rules from the knowledge learnt by the trained SLWNN. Figure 1 shows a block diagram of the F-SLWNN.

The SLWNN is a multi-discriminator classifier, as shown in Figure 1. Each discriminator consists of M RAM-like neurons (weightless neurons or n-tuples), each with n address lines, $2^n$ storage locations (sites) and a 1-bit word length. Each RAM randomly samples n pixels of the input image, as shown in Figure 2. Each pixel must be sampled by at least one RAM; therefore, the number of RAMs within a discriminator (M) depends on the n-tuple size (n) and the size of the input image (I), and is given by:

$$M = \frac{I}{n} \tag{1}$$

The RAM's input pattern forms an address to a specific site (memory location) within the RAM. The outputs of all RAMs in each discriminator are summed together to give its response. The response of the jth discriminator, $z_j$, is given by:

$$z_j = \sum_{k=1}^{M} o_{kj} \tag{2}$$

where $o_{kj}$ is the output of the kth RAM of the jth discriminator.

[Figure 1. Architecture of F-SLWNN: a normalized 16x16 pixel input pattern (P) feeds Discriminators 1 to N of the SLWNN; the feature vector x = [x_1, ..., x_N] feeds F-Net 1 to F-Net N of the FIS, whose outputs d_1(x), ..., d_N(x) go to a MAX node giving the identified class.]

[Figure 2. The architecture of the jth discriminator: n-tuple address lines select a site (00...00 to 11...11) in each weightless neuron, and an adder sums the outputs o_1j, ..., o_Mj into the discriminator response z_j.]

Before training, the contents of all RAM sites are cleared (they store "0"). The training set consists of an equal number of patterns from each class. Training is a one-shot process in which each discriminator is trained individually on the set of patterns that belongs to its class. When a training pattern is presented to the network, all the addressed sites of the discriminator to which the training pattern belongs are set to "1". Once all training patterns have been presented, the data stored inside the discriminators' RAMs give the WNN its generalization ability.

The SLWNN is tested by presenting an input pattern to the discriminators' inputs and summing the RAM outputs of each discriminator to obtain its response. The normalized response of the class j discriminator, $x_j$, is defined by:

$$x_j = \frac{z_j}{M} \tag{3}$$

The value of $x_j$ can be used as a measure of the similarity of the input pattern to the training patterns of the jth class. The normalized response vector generated by all discriminators for an input pattern is given by:

$$\mathbf{x} = [x_1, x_2, \ldots, x_N], \qquad 0 \le x_j \le 1 \tag{4}$$

This response vector can be regarded as a feature vector that measures the similarity of an input pattern to all classes. In the classical SLWNN (n-tuple network), a Winner-Takes-All (WTA) decision scheme is used to classify an unseen pattern; in other words, the discriminator with the highest response specifies the class to which the test pattern belongs. In this paper, the set of feature vectors generated by the SLWNN for a given training set is used as input data to the fuzzy rule-based system. Fuzzy rules are extracted from the feature vectors using the methodology described in [8].
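The first stage can be illustrated with a short Python sketch. It is a minimal illustration under our own assumptions, not the author's implementation: patterns are flat lists of 0/1 pixels, each RAM is modelled as a set holding the addresses of its sites that store "1", and the random mapping samples every pixel exactly once, so that M = I/n as in Eq. (1). The class name `SLWNN` and its methods are hypothetical.

```python
import random


class SLWNN:
    """Hypothetical n-tuple (single layer weightless) classifier sketch."""

    def __init__(self, num_pixels, n, num_classes, seed=0):
        if num_pixels % n:
            raise ValueError("this sketch assumes I is a multiple of n")
        self.n = n
        self.M = num_pixels // n                  # Eq. (1): M = I / n
        order = list(range(num_pixels))
        random.Random(seed).shuffle(order)        # random pixel-to-RAM mapping
        # Each RAM samples n distinct pixels; every pixel is sampled once.
        self.tuples = [order[k * n:(k + 1) * n] for k in range(self.M)]
        # One discriminator per class; all RAM sites start cleared ("0").
        self.rams = [[set() for _ in range(self.M)] for _ in range(num_classes)]

    def _addresses(self, pattern):
        # Each RAM reads its n sampled pixels as an n-bit site address.
        for pixels in self.tuples:
            addr = 0
            for p in pixels:
                addr = (addr << 1) | pattern[p]
            yield addr

    def train(self, pattern, label):
        # One-shot learning: set the addressed site of every RAM in the
        # discriminator of the pattern's own class to "1".
        for ram, addr in zip(self.rams[label], self._addresses(pattern)):
            ram.add(addr)

    def features(self, pattern):
        # Eqs. (2)-(4): z_j sums the RAM outputs, x_j = z_j / M.
        addrs = list(self._addresses(pattern))
        return [sum(addr in ram for ram, addr in zip(disc, addrs)) / self.M
                for disc in self.rams]

    def classify_wta(self, pattern):
        # Classical Winner-Takes-All decision on the feature vector.
        x = self.features(pattern)
        return max(range(len(x)), key=x.__getitem__)
```

For a 16x16 input image (I = 256) with n = 8 and ten classes, `SLWNN(256, 8, 10).M` is 32. In this sketch, `features` returns the vector of Eq. (4) that the F-SLWNN would pass to its fuzzy stage, while `classify_wta` reproduces the classical WTA decision.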

The rule-extraction method of [8] is an extension of the fuzzy min-max classifier neural network described in [9]. Figure 1 shows the general architecture of the second stage of the F-SLWNN system. Every class has a dedicated network (F-Net) that calculates the degree of membership of the input pattern to its class. Each fuzzy network (F-Net_i) consists of a number of neural-like subnetworks, as illustrated in Figure 3. The number of subnetworks in F-Net_i depends on the number of classes that overlap with class i. Each node in the first layer calculates the degree of membership of the input vector to its class, denoted by $d_{f_{ij}}^{(l)}(\mathbf{x})$. A detailed analysis of how the degree of membership of an input pattern x to a specific rule is computed can be found in [8]. The second-layer neurons find the maximum among the membership values generated by the first-layer nodes:

$$d_{f_{ij}}(\mathbf{x}) = \max_{l = 1, \ldots} \left( d_{f_{ij}}^{(l)}(\mathbf{x}) \right) \tag{5}$$

The output node of network i finds the degree of membership of x to class i, denoted by $d_i(\mathbf{x})$, as the minimum over its subnetworks (one for each class j that overlaps with class i):

$$d_i(\mathbf{x}) = \min_{j} \left( d_{f_{ij}}(\mathbf{x}) \right) \tag{6}$$

Finally, an unknown vector x is assigned to the class that generates the maximum degree of membership, i.e.:

$$\mathbf{x} \in \text{class } i \quad \text{if} \quad d_i(\mathbf{x}) = \max_{j = 1, 2, \ldots, N} \left( d_j(\mathbf{x}) \right) \tag{7}$$

[Figure 3. Detailed architecture of the ith fuzzy network (F-Net_i): one sub-net per overlap between class i and another class (j, k, ...); each sub-net takes the feature vector x = [x_1, ..., x_N], combines its first-layer outputs in a MAX node, and a MIN node over the sub-net outputs gives d_i(x).]

3. Hybrid Training Algorithm

Training the F-SLWNN is performed in two phases. The first phase generates initial clusters from a subset of the training set: a subset of training patterns, T1, is used to train the SLWNN, with each discriminator trained to recognize one class. After this phase is complete, the information stored in the discriminators' RAMs gives the SLWNN its generalization capability. The second phase of the learning algorithm is the generalization phase, in which the complete training set T (including T1) is applied to the trained SLWNN. Each pattern produces a feature vector that describes its similarity to all classes. The generated set of feature vectors is used to extract the fuzzy rules for each fuzzy network (F-Net), based on the regions where its own cluster overlaps with the clusters of the other classes.

Once the generalization phase is completed, the SLWNN stage has encoded information about the training subset T1 in its memory sites, and the fuzzy inference stage has extracted the set of rules from the whole training set T. When an unknown pattern is applied to the system, the SLWNN generates a feature vector (x) that describes the belongingness of the input vector to each class. The fuzzy rules are then applied to this feature vector to find the class that generates the maximum degree of membership, and the unknown pattern is assigned to that class.

4. Discussion and Experimental Results

The classification ability of the F-SLWNN has been tested on the classification of handwritten numerals using the NIST standard database SD19 [10]. A 10-discriminator SLWNN is used in the first stage of the system. Each discriminator consists of 32 neurons, each with an n-tuple size of 8, sampling binary images of 16x16 pixels (so that M = 256/8 = 32, in agreement with Eq. (1)). The parameters of the SLWNN (n-tuple size, mapping method, number of training patterns) are fixed in all the experiments conducted; the effect of changing these parameters on the performance of the network is beyond the scope of this work. The complete training set consists of 150 handwritten numerals from each class (a total of 1500 patterns). The subset T1, which is used to train the SLWNN, contains 100 patterns from each class. The binary images from the database were normalized and resized to a 16x16 pixel grid.
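The fuzzy decision of Eqs. (5) to (7), together with the notion of class overlap that determines how many subnetworks each F-Net has, can be sketched in Python. The sketch is hedged in two ways. First, the first-layer values $d_{f_{ij}}^{(l)}(\mathbf{x})$ are taken as given inputs, because their computation follows [8] and is not reproduced here. Second, the overlap helper assumes, for illustration only, that each class cluster of feature vectors is represented by the axis-aligned box spanned by their per-dimension minima and maxima. All function names are hypothetical.

```python
def class_boxes(features, labels):
    """Hypothetical helper: the min-max box spanned by each class's vectors."""
    boxes = {}
    for x, c in zip(features, labels):
        lo, hi = boxes.get(c, (list(x), list(x)))
        boxes[c] = ([min(a, b) for a, b in zip(lo, x)],
                    [max(a, b) for a, b in zip(hi, x)])
    return boxes


def overlapping_classes(boxes, i):
    """Classes whose boxes intersect the box of class i in every dimension.

    In this sketch each such class would get one subnetwork in F-Net_i.
    """
    lo_i, hi_i = boxes[i]
    return [j for j, (lo_j, hi_j) in boxes.items()
            if j != i and all(a_lo <= b_hi and b_lo <= a_hi
                              for a_lo, a_hi, b_lo, b_hi
                              in zip(lo_i, hi_i, lo_j, hi_j))]


def class_membership(subnets):
    """d_i(x) for one F-Net.

    `subnets` maps each overlapping class j to the list of first-layer
    outputs d_fij^(l)(x), l = 1, 2, ... (assumed computed as in [8]).
    """
    if not subnets:
        raise ValueError("this sketch assumes at least one subnetwork")
    # Eq. (5): each subnetwork takes the maximum over its first-layer nodes.
    subnet_outputs = [max(values) for values in subnets.values()]
    # Eq. (6): the output node takes the minimum over the subnetworks.
    return min(subnet_outputs)


def fuzzy_classify(fnets):
    """Eq. (7): choose the class whose F-Net gives the largest d_i(x)."""
    d = {i: class_membership(subnets) for i, subnets in fnets.items()}
    return max(d, key=d.get), d
```

With made-up first-layer values such as `{0: {1: [0.2, 0.9], 2: [0.7]}, 1: {0: [0.4, 0.3]}}`, F-Net 0 yields min(max(0.2, 0.9), 0.7) = 0.7 and F-Net 1 yields 0.4, so `fuzzy_classify` picks class 0. These numbers only illustrate the max-min-max composition and are not taken from the experiments.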

The performance of the F-SLWNN is compared with that of the standard WTA-SLWNN, which is trained on the same set of patterns, T1. Table 1 summarizes the results obtained when testing the two classifiers on different sets. The first experiments verify the behavior of the two models on the training sets T1 and T. Both systems achieve 100% correct recognition on the training set T1. However, on training set T, the WTA-SLWNN gives an average of 91.7% correct recognition, compared with 100% for the F-SLWNN. In this case, the WTA-SLWNN uses its encoded data to generalize to the patterns of T that it has not seen (those outside T1), while the fuzzy inference stage has used the SLWNN-generated response vectors of T to extract the rules required to classify them correctly.

The second experiment tests the generalization ability of the two classifiers. A new set of 500 unseen patterns, selected equally from the 10 numeral classes, is presented to the two networks. As indicated in Table 1, the F-SLWNN achieves a higher recognition rate than the WTA-SLWNN (97.6% versus 92.2%).

Table 1. A comparison of the percentage of correct recognition of the F-SLWNN and the WTA-SLWNN.

| Experiment | Applied patterns | WTA-SLWNN (% correct) | F-SLWNN (% correct) |
|---|---|---|---|
| Verification | Set T1 | 100 | 100 |
| Verification | Set T | 91.7 | 100 |
| Generalization ability | Test set | 92.2 | 97.6 |

The final experiments test the noise tolerance of the two models. Salt-and-pepper noise, generated with a function supported by MATLAB, is added to the training set T at different noise densities. Figure 4 shows the percentage of correct recognition as a function of noise density for both classifiers. The results show that the performance of the two models is comparable when the noise density is low. However, the performance of the WTA-SLWNN degrades more rapidly than that of the F-SLWNN as the noise density increases.

[Figure 4. Percentage of correct recognition as a function of added noise density.]
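The noise-tolerance experiment can be outlined with the following Python sketch. It is an illustration under stated assumptions rather than the procedure used in the paper: the noise model follows our understanding of the 'salt & pepper' option of MATLAB's `imnoise` (each pixel independently becomes noise with probability equal to the density, and a noisy pixel is set to 0 or 1 with equal probability), patterns are flat lists of 0/1 pixels, and `classify` is a hypothetical stand-in for any function that maps a pattern to a class label.

```python
import random


def salt_and_pepper(pattern, density, rng):
    """Return a noisy copy of a binary pattern (flat list of 0/1 pixels)."""
    noisy = []
    for pixel in pattern:
        u = rng.random()
        if u < density / 2:
            noisy.append(0)      # "pepper": pixel forced to 0
        elif u < density:
            noisy.append(1)      # "salt": pixel forced to 1
        else:
            noisy.append(pixel)  # pixel left unchanged
    return noisy


def accuracy_vs_noise(classify, patterns, labels, densities, seed=0):
    """Percentage of correct recognition for each noise density."""
    rng = random.Random(seed)
    curve = []
    for density in densities:
        correct = sum(classify(salt_and_pepper(p, density, rng)) == y
                      for p, y in zip(patterns, labels))
        curve.append((density, 100.0 * correct / len(patterns)))
    return curve
```

Sweeping `densities` over a range of values and plotting the returned (density, percentage) pairs for each classifier gives a curve of the kind shown in Figure 4. A fixed seed keeps the comparison between classifiers reproducible only if both are evaluated with the same seed.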

5. Conclusions

A new two-stage neuro-fuzzy classifier has been introduced. The first stage of the classifier uses a Single Layer Weightless Neural Network (SLWNN) as a feature extractor. The second stage is a fuzzy inference system whose rules are extracted from the feature vectors generated by the trained SLWNN. This approach is an alternative to the traditional crisp WTA n-tuple classifiers. The effectiveness of the proposed system has been validated on the NIST SD19 database of handwritten numerals. Experimental results show that the F-SLWNN classifier outperforms the WTA-SLWNN. Future work will investigate the effect of varying the n-tuple size and the sizes of the training sets T1 and T on the performance of the system.

References

[1] T. M. Jørgensen, "Classification of Handwritten Digits Using a RAM Neural Net Architecture," International Journal of Neural Systems, Vol. 8, No. 1, pp. 17-25, 1997.
[2] T. G. Clarkson et al., "Speaker Identification for Security Systems Using Reinforcement-Trained pRAM Neural Network Architectures," IEEE Transactions on Systems, Man, and Cybernetics, Part C: Applications and Reviews, Vol. 31, No. 1, pp. 65-76, Feb. 2001.
[3] J. Austin, RAM-Based Neural Networks, Singapore: World Scientific, 1998.
[4] I. Aleksander, W. Thomas, P. Bowden, "WISARD, a Radical New Step Forward in Image Recognition," Sensor Review, Vol. 4, pp. 29-40, 1984.
[5] E. V. Simões, L. F. Uebel and D. A. C. Barone, "Hardware Implementation of RAM Neural Networks," Pattern Recognition Letters, Vol. 17, No. 4, pp. 421-429, 1996.
[6] R. Al-Alawi, "FPGA Implementation of a Pyramidal Weightless Neural Networks Learning System," International Journal of Neural Systems, Vol. 13, No. 4, pp. 225-237, 2003.
[7] W. W. Bledsoe and I. Browning, "Pattern Recognition and Reading by Machine," in Proc. Eastern Joint Computer Conference, Boston, MA, pp. 232-255, 1959.
[8] S. Abe and M. S. Lan, "A Method for Fuzzy Rule Extraction Directly from Numerical Data and Its Application to Pattern Classification," IEEE Transactions on Fuzzy Systems, Vol. 3, No. 1, pp. 18-28, Feb. 1995.
[9] P. K. Simpson, "Fuzzy Min-Max Neural Networks - Part 1: Classification," IEEE Transactions on Neural Networks, Vol. 3, No. 5, pp. 776-786, Sept. 1992.
[10] National Institute of Standards and Technology, NIST Special Database 19, NIST Handprinted Forms and Characters Database, 2002.