Introduction to Evolutionary Computation


Evolutionary Computation

Lecture 1: Overview

Ata Kaban

A.Kaban@cs.bham.ac.uk

http://www.cs.bham.ac.uk/~axk

School of Computer Science

University of Birmingham

2003

• What is evolutionary computation

• Why is it interesting

• Strong methods vs weak methods

• The basic procedure & a toy example

• Application areas

• Further examples

• Conclusions & the underlying assumption

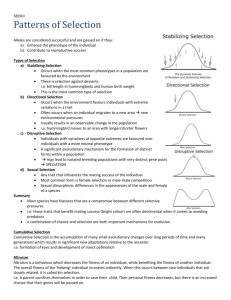

Some terms from genetics

• DNA (Deoxyribonucleic Acid) = the physical carrier of genetic information

• Chromosome = a single, very long molecule of DNA

• Gene = basic functional block of inheritance (encoding and directing the synthesis of a protein)

• Allele = one of a number of alternative values of a gene

• Locus = location of a gene

• Genome = the complete collection of genetic material (all chromosomes together)

• Genotype = the particular set of genes contained in a genome

• Phenotype = the manifested characteristics of the individual; determined by the genotype

• Haploid set: a single complement of chromosomes (n)

• Diploid set: a double complement of chromosomes (2n)

– Human diploid cells have 2x22+2 = 46 chromosomes (22 pairs of autosomes plus 2 sex chromosomes)

• Meiosis: a special type of cell division, such that gametes contain only one copy of each chromosome

• The modern study of genetics started in the mid-19th century, with Gregor Mendel’s experiments investigating the inheritance of particular traits in peas

– Pure breeding plants

– Cross fertilised plants (e.g. round seeds & wrinkled seeds)

• Dominant (R) and recessive (r) genes

Simplifying analogy

• Chromosome -- Bit string

• Gene -- Bit

• Allele -- 0/1

What is Evolutionary Computation?

• Study of computational systems that use ideas inspired by natural evolution

• Survival of the fittest

• Search procedures that probabilistically apply search operators to a set of points in the search space

• A general method for solving ‘search for solutions’ problems

Motivations

The mechanisms of evolution seem well suited to computational problems in many fields

• Improving optimisation

– search through a very large state space

• Robust adaptation

– changing environment

• Machine intelligence

– hope that complex behaviour (such as intelligence) emerges from parallel application & interaction of simple rules

• Understanding of biology

Some terminology (for historical reasons)

• Genetic algorithms

• Genetic programming

• Evolution strategies

-----------------------------------

• Evolutionary computation

– Reproduction

– Random variation

– Competition

– Selection

Search

• Search for stored data

– Tree structured storage

• Search for solutions

– Too many possible nodes to store; candidates are created from an initial set of candidates in a way that depends on the particular algorithm

– The goal is to find a high-quality solution by only examining a small portion of the tree

• AI graph-search

– Applicable if partial solutions can be evaluated (AI tree-search)

– ‘find the path to a goal’ type of problems (e.g. 8-puzzle)

– Does not always apply, e.g. predicting the 3D structure of a protein from its amino acid sequence without knowing the physical moves by which a protein folds up into a 3D structure

• General search methods

– Hill climbing

– Simulated annealing

– Tabu search

– Evolutionary search

‘Weak methods’ vs ‘strong methods’

• Weak methods = general methods (methods that work on a large variety of problems)

– E.g. evolutionary methods

• Strong methods = methods specially designed and tailored to work efficiently on particular problems

– E.g. traditional optimisation methods

A simple EA

One run:

• 1. Initialisation: generate an initial population (generation) P(0) at random; set i ← 0

• 2. Iteration

– A) evaluate the fitness of individuals in P(i)

– B) select parents from P(i) based on their fitness

– C) generate offspring from the parents using crossover & mutation to form P(i+1)

– D) i ← i+1

• 3. Repeat step 2 until some stopping criterion is satisfied

Report statistics: e.g. the best fitness & i, averaged over several runs on the same problem.
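The procedure above can be sketched in Python as follows. This is only an illustrative sketch under assumptions that the slide does not fix: individuals are lists of bits, selection is roulette-wheel, crossover is single-point, fitness values are non-negative, and the population size is even. The function name `simple_ea` and all parameter defaults are hypothetical.

```python
import random

def simple_ea(fitness, n_bits, pop_size=20, pc=0.7, pm=0.01,
              max_generations=100, target=None, seed=0):
    """Sketch of the simple EA; assumes non-negative fitness, even pop_size, n_bits >= 2."""
    rng = random.Random(seed)
    # 1. Initialisation: random population P(0); i <- 0
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best, best_fit = None, float("-inf")
    for i in range(max_generations + 1):
        # 2A. Evaluate the fitness of individuals in P(i)
        fits = [fitness(ind) for ind in pop]
        for ind, f in zip(pop, fits):
            if f > best_fit:
                best, best_fit = ind[:], f
        # 3. Stopping criterion: target reached or generation budget used up
        if (target is not None and best_fit >= target) or i == max_generations:
            return best, best_fit, i
        # 2B. Select parents in proportion to fitness (uniform if all are zero)
        weights = fits if sum(fits) > 0 else None
        parents = rng.choices(pop, weights=weights, k=pop_size)
        # 2C. Single-point crossover on consecutive pairs, then bitwise mutation
        offspring = []
        for a, b in zip(parents[0::2], parents[1::2]):
            a, b = a[:], b[:]
            if rng.random() < pc:
                cut = rng.randint(1, n_bits - 1)
                a[cut:], b[cut:] = b[cut:], a[cut:]
            offspring += [a, b]
        pop = [[bit ^ 1 if rng.random() < pm else bit for bit in ind]
               for ind in offspring]
        # 2D. i <- i+1 happens through the for loop

# Usage: maximise the square of the integer encoded by a 5-bit string
def square_of_value(ind):
    return int("".join(map(str, ind)), 2) ** 2

best, best_fit, generation = simple_ea(square_of_value, n_bits=5, target=31 ** 2)
print(best, best_fit, generation)
```

Returning the generation index alongside the best fitness makes it easy to average both over several runs, as the slide suggests.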

Toy example

Maximise the function f(x) = x² with respect to x in the set {0,…,31}.

• Encoding (representation): chromosomes are 5-bit strings:

0=00000, 1=00001, 2=00010, 3=00011,…

(left of each ‘=’: phenotype; right: genotype)

• Generate initial population at random:

01101, 00001, 11000, 10011,…

• Evaluate the fitness according to f(x)

e.g. 01101 → 13 → 13² = 169

(01101 is the genotype; 13 is the phenotype)

• Select 2 individuals for crossover based on their fitness, e.g. using ‘roulette-wheel sampling’ to implement fitness-proportionate selection: $p_i = f_i / \sum_j f_j$

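First crossover (cut after bit 4):

parents: 0110|1 (=13) and 1100|0 (=24)

offspring: 0110|0 and 1100|1

mutated: 01101 (first offspring, last bit flipped)

Second crossover (cut after bit 2):

parents: 10|011 (=19) and 11|000 (=24)

offspring: 10|000 and 11|011 (=27)

mutated: 00000 (first offspring, first bit flipped)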
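The arithmetic of the toy example can be checked with a short Python sketch. It assumes that only the four chromosomes listed on the slide make up the population (the slide's list is open-ended), and the helper names `decode`, `fitness`, `crossover` and `flip` are hypothetical.

```python
def decode(bits: str) -> int:
    """Genotype (bit string) -> phenotype (integer)."""
    return int(bits, 2)

def fitness(bits: str) -> int:
    """f(x) = x^2 evaluated on the decoded chromosome."""
    return decode(bits) ** 2

def crossover(p1: str, p2: str, cut: int):
    """Single-point crossover: swap the tails after position `cut`."""
    return p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]

def flip(bits: str, locus: int) -> str:
    """Mutate one gene by flipping the bit at `locus` (0-based)."""
    flipped = "1" if bits[locus] == "0" else "0"
    return bits[:locus] + flipped + bits[locus + 1:]

# Assumption: the population consists of exactly these four individuals
population = ["01101", "00001", "11000", "10011"]
fits = [fitness(c) for c in population]      # [169, 1, 576, 361]
total = sum(fits)                            # 1107
probs = [f / total for f in fits]            # roulette-wheel probabilities p_i

for chrom, f, p in zip(population, fits, probs):
    print(f"{chrom}  x={decode(chrom):2d}  f={f:3d}  p={p:.3f}")

print(crossover("01101", "11000", 4))   # ('01100', '11001')
print(crossover("10011", "11000", 2))   # ('10000', '11011')
print(flip("01100", 4))                 # 01101
print(flip("10000", 0))                 # 00000
```

With these four individuals, 11000 gets roughly half of the roulette wheel (576/1107), which is why it is plausible for it to be picked as a parent in both crossovers.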

One nice application: Evolutionary Art

http://www.genarts.com/galapagos/galapagos-images.html

Variables to play with

• Designing a sensible representation

• Size of the population

• Crossover rate: the probability of crossing over the pairs at a randomly (uniformly) chosen locus (location)

• Mutation rate: the probability of mutating a gene (applied to all genes)

• Other representation schemes, with different types of crossover & mutation operators

• Selection strategies (fitness-proportionate selection schemes other than ‘roulette-wheel sampling’)

• Etc.

The success of the algorithm greatly depends on these factors, and a good choice depends on the concrete problem at hand.

Further application areas

• Optimisation

– Combinatorial optimisation: circuit layout, job-shop scheduling

• Automatic programming

– Evolving computer programs for specific tasks

– Designing other computational structures (NNs, cellular automata)

• Machine Learning

– classification: evolving rules for learning classifier systems

– prediction: predicting protein structure, predicting weather

• Economics

– Modelling of bidding strategies

– Modelling of the emergence of economic markets

• Immune systems

– Discovery of multi-gene families during evolutionary time

• Ecology

– Modelling host-parasite coevolution, symbiosis and resource flow

• Population genetics

– Under what conditions will a gene for recombination be evolutionarily viable

• Evolution and learning

– Studying how individual learning & species evolution affect each other

• Social systems

– Evolution of social behavior in insect colonies, evolution of cooperation & communication in multi-agent systems

• Etc.

Inventors

• John Holland, “Adaptation in Natural and Artificial Systems”, University of Michigan Press, 1975 - Genetic algorithms

• Lawrence Fogel, A. Owens, M. Walsh, “Artificial Intelligence through Simulated Evolution”, Wiley, 1966 - Evolutionary programming

• Ingo Rechenberg, 1965 - Evolution strategies

Traditional computational systems vs. Evolutionary computation

Traditional systems:

• Fixed & accurate at exact computation

• Inflexible

• Not appropriate for processing:

– noisy, inaccurate data

– non-smooth functions

– objectives that change over time

Exact solution to simplified problem

Evolutionary systems:

• Yield good quality approximate solutions to large real world problems

• Time consuming

• Flexible

• Robust

• Appropriate when traditional methods break down

Approximate solution to real problem

Machine Learning and Evolutionary Algorithms

• Most Machine Learning is concerned with constructing a hypothesis from examples that generalises well

– fast but very biased

• EC is a discovery-search over hypotheses

– slow and unbiased

Genetic Programming

• Extension of GA for evolving computer programs (Koza 1992)

– represent programs as LISP expressions

– e.g.

(IF (GT (x) (0)) (x) (-x))

see Fig.1.
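To make the tree representation concrete, the expression above can be mirrored in Python as nested tuples and evaluated recursively. This is a sketch rather than Koza's LISP system: the tuple encoding, the operator name `NEG` (standing in for the unary minus in `-x`) and the function `evaluate` are assumptions introduced for illustration.

```python
def evaluate(expr, env):
    """Recursively evaluate a program tree given variable bindings in `env`."""
    if isinstance(expr, str):            # a variable such as "x"
        return env[expr]
    if isinstance(expr, (int, float)):   # a numeric constant
        return expr
    op, *args = expr                     # an internal node: (operator, children...)
    if op == "IF":
        cond, then_branch, else_branch = args
        chosen = then_branch if evaluate(cond, env) else else_branch
        return evaluate(chosen, env)
    if op == "GT":
        return evaluate(args[0], env) > evaluate(args[1], env)
    if op == "NEG":
        return -evaluate(args[0], env)
    raise ValueError(f"unknown operator: {op}")

# (IF (GT (x) (0)) (x) (-x)) written as a nested tuple
abs_program = ("IF", ("GT", "x", 0), "x", ("NEG", "x"))

print(evaluate(abs_program, {"x": -3}))   # 3
print(evaluate(abs_program, {"x": 5}))    # 5
```

Because programs are plain trees, GP crossover can be pictured as swapping subtrees between two such tuples, in the same way that bit-string crossover swaps tails.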

Example: Evolving programs on a mobile robot

• Goal: obstacle avoidance

• Inputs from eight sensors on the robot, s1 to s8, each with values in the range 0 to 1023 (higher values mean a closer obstacle)

• Output to two motors (speeds) m1, m2, each with values in the range 0 to 15

Fitness function:

F = sum(si) + |8-m1| + |8-m2| + |m1-m2|, where the first term is a penalty for going close to an obstacle, the second and third terms are rewards for going fast and the last term is a reward for going straight.
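As a small illustration, the fitness formula can be evaluated in Python exactly as it is written above. The function name `robot_fitness` and the input checks are assumptions. The sketch only computes F and takes no position on whether F is to be minimised or maximised, which this slide does not state.

```python
def robot_fitness(sensors, m1, m2):
    """Evaluate F = sum(si) + |8-m1| + |8-m2| + |m1-m2| as written on the slide."""
    if len(sensors) != 8:
        raise ValueError("expected eight sensor readings s1..s8")
    if not all(0 <= s <= 1023 for s in sensors):
        raise ValueError("sensor values must lie in 0..1023")
    if not (0 <= m1 <= 15 and 0 <= m2 <= 15):
        raise ValueError("motor values must lie in 0..15")
    return sum(sensors) + abs(8 - m1) + abs(8 - m2) + abs(m1 - m2)

# No obstacle in range, both motors at the mid value 8
print(robot_fitness([0] * 8, 8, 8))        # 0
# All sensors saturated, motors at opposite extremes
print(robot_fitness([1023] * 8, 0, 15))    # 8214
```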

Example: Electronic Filter Circuit Design

• Individuals are programs that transform a beginning circuit into a final circuit by adding/subtracting components and connections

• Fitness: computed by simulating the circuit

• A population of 640,000 has been run on a parallel processor

• After 137 generations, the discovered circuits exhibited performance competitive with the best human designs

Summary

• EAs are a family of general methods for solving ‘search for solutions’ problems through randomized parallel search

• Nice metaphor with the Darwinian theory of biological evolution

• Search operators enable us to generate new offspring from parents

• Implicit assumption that high-quality ‘parent’ candidate solutions from different regions in the search space can be combined via crossover to, on occasion, produce high-quality ‘offspring’ candidate solutions!

Programming exercises

(from M Mitchell, Chapter 1)

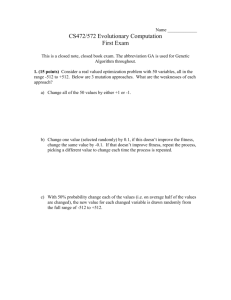

• 1. Implement a simple GA with fitness-proportionate selection, roulette-wheel sampling, population size 100, single-point crossover rate pc=0.7 and bitwise mutation rate pm=0.001. Try it on the following fitness function: f(x)=number of ones in x, where x is a chromosome of length 100. Perform 20 runs and measure the average generation at which the string of all ones is discovered. Perform the same experiment with crossover turned off (i.e. pc=0). Do similar experiments, varying the mutation and crossover rates, to see how the variations affect the average time required for the GA to find the optimal string. If it turns out that mutation with crossover is better than mutation alone, why is that the case?

• 2. Implement a simple GA with fitness-proportionate selection, roulette-wheel sampling, population size 100, single-point crossover rate pc=0.7 and bitwise mutation rate pm=0.001. Try it on the fitness function f(x)=the integer represented by the binary number x, where x is a chromosome of length 100. Run the GA for 100 generations and plot the fitness of the best individual found at each generation as well as the average fitness of the population at each generation. How do these plots change as you vary the population size, the crossover rate and the mutation rate? What if you use only mutation (i.e. pc=0)?

• 3. Compare the GA’s performance on the fitness functions of exercises 1 and 2 with that of steepest-ascent hill climbing and random-mutation hill climbing.

• Steepest-ascent hill climbing:

– 1. Choose a candidate solution (e.g. bit string) at random. Call this string current-string

– 2. Systematically mutate each bit in the string from left to right, recording the fitness of the resulting strings

– 3. If any of the resulting strings give a fitness increase, then set current-string to the resulting string giving the highest fitness increase (the steepest ascent)

– 4. If there is no fitness increase, then save current-string (a hilltop) and go to step 1. Otherwise go to step 2 with the new current-string

– 5. When a set number of fitness-function evaluations has been performed, return the highest hilltop that was found
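The five steps above could be sketched in Python as follows. This is one possible reading, not a reference solution: bit strings are lists of 0/1, the function name `steepest_ascent` and its parameters are hypothetical, and when the evaluation budget runs out in the middle of a climb the sketch keeps the string reached so far even if it is not a confirmed hilltop.

```python
import random

def steepest_ascent(fitness, n_bits, max_evals, seed=0):
    """Steepest-ascent hill climbing with random restarts under an evaluation budget."""
    rng = random.Random(seed)
    evals = 0
    best, best_fit = None, float("-inf")
    while evals < max_evals:
        # Step 1: choose a random current-string
        current = [rng.randint(0, 1) for _ in range(n_bits)]
        current_fit = fitness(current)
        evals += 1
        while evals < max_evals:
            # Step 2: flip each bit in turn, left to right, recording fitness
            neighbours = []
            for k in range(n_bits):
                if evals >= max_evals:
                    break
                candidate = current[:]
                candidate[k] ^= 1
                neighbours.append((fitness(candidate), candidate))
                evals += 1
            top_fit, top = max(neighbours, key=lambda pair: pair[0])
            if top_fit > current_fit:
                # Step 3: move to the neighbour with the largest increase
                current, current_fit = top, top_fit
            else:
                # Step 4: no increase, so current-string is a hilltop; restart
                break
        if current_fit > best_fit:
            best, best_fit = current, current_fit
    # Step 5: budget exhausted, return the best string found
    return best, best_fit

# Usage on the fitness function of exercise 1 (number of ones), shortened to 10 bits
best, best_fit = steepest_ascent(sum, n_bits=10, max_evals=1000)
print(best, best_fit)
```

Counting every call to `fitness` against the budget keeps the comparison with the GA fair, since both are then measured in fitness-function evaluations.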

• Random-mutation hill climbing:

– 1. Start with a single randomly generated string. Calculate its fitness

– 2. Randomly mutate one locus of the current string

– 3. If the fitness of the mutated string is equal to or higher than the fitness of the original string, keep the mutated string. Otherwise keep the original string

– 4. Go to step 2.
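A minimal Python sketch of these four steps, under the assumption that strings are lists of 0/1 and that the loop stops after a fixed number of fitness evaluations. The name `random_mutation_hill_climbing` and the returned `history` list (current fitness after each evaluation, convenient for plotting) are hypothetical additions.

```python
import random

def random_mutation_hill_climbing(fitness, n_bits, max_evals, seed=0):
    """Random-mutation hill climbing; accepts mutations that do not lower fitness."""
    rng = random.Random(seed)
    # Step 1: a single random string and its fitness
    current = [rng.randint(0, 1) for _ in range(n_bits)]
    current_fit = fitness(current)
    history = [current_fit]
    for _ in range(max_evals - 1):
        # Step 2: mutate one randomly chosen locus
        locus = rng.randrange(n_bits)
        candidate = current[:]
        candidate[locus] ^= 1
        candidate_fit = fitness(candidate)
        # Step 3: keep the mutant if it is at least as fit as the original
        if candidate_fit >= current_fit:
            current, current_fit = candidate, candidate_fit
        history.append(current_fit)
        # Step 4: loop back to step 2
    return current, current_fit, history

# Usage on the 'number of ones' fitness with a 20-bit string
best, best_fit, history = random_mutation_hill_climbing(sum, n_bits=20, max_evals=2000)
print(best_fit, history[::100])
```

Because only non-worsening moves are accepted, `history` never decreases, so sampling it every 100 evaluations gives the 'best so far' curve directly.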

• Iterate this algorithm for 10,000 steps (fitness-function evaluations). This is equal to the number of fitness-function evaluations performed by the GA in computer exercise 2 (with population size 100 run for 100 generations). Plot the best fitness found so far at every 100 evaluation steps (equivalent to one GA generation), averaged over 10 runs. Compare this with a plot of the GA’s best fitness found so far as a function of generation. Which algorithm finds better performing strategies? Which algorithm finds them faster? Comparisons like these are important if claims are to be made that GA is a more effective search algorithm than other stochastic methods on a given problem.

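Fig. 1. Parse tree of the expression (IF (GT (x) (0)) (x) (-x)). The root node IF has three children: GT (itself with children x and 0), x, and -x.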