MPI LECTURE (3)


1. Recap

1.1

What is MPI?

MPI = Message Passing Interface

It is a specification for a standard library that defines the syntax and semantics of an extended message-passing model. It was designed by the MPI Forum (SC1992) (http://www.mpi-forum.org).

It is not:

– a language or a compiler specification;

– a specific implementation;

– a description of implementation details: some hints are given, but implementers have a large degree of freedom, and two different implementations may do the same thing in very different ways.

MPI was designed for implementing parallel computation on distributed-memory systems; to this end, it provides routines for exchanging messages between one or more senders and one or more receivers.

Standardized functionality:

- point-to-point (P2P) communication
- collective communication
- synchronization routines
- derived data types for accessing non-contiguous data structures
- the ability to create subsets of processors
- the ability to create processor topologies

Although MPI is based on the distributed-memory model, it can be used on many kinds of systems:

- distributed-memory machines
- shared-memory machines
- clusters of SMPs (Symmetric MultiProcessing)
- networks of workstations (NOW)
- heterogeneous networks of computers

1.2

A simple example (computing PI)

An approximation of PI is computed by integrating the function

$$f(x) = \frac{4}{1+x^2}$$

over the interval [0, 1], since $\int_0^1 \frac{4}{1+x^2}\,dx = 4\arctan(1) = \pi$.

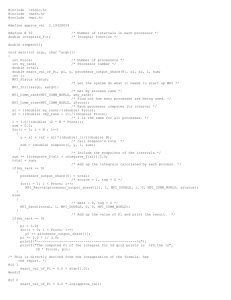
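The loop in the program below is the composite midpoint rule with n subintervals of width h = 1/n. Process r (= myid) out of p (= numprocs) handles intervals r+1, r+1+p, r+1+2p, ..., and MPI_Reduce adds the partial results. Written out as a sketch of what the code computes:

$$
\pi \approx h \sum_{i=1}^{n} f\!\left(h\left(i-\tfrac{1}{2}\right)\right)
= \sum_{r=0}^{p-1} \underbrace{h \sum_{\substack{1 \le i \le n \\ i \equiv r+1 \ (\mathrm{mod}\ p)}} f\!\left(h\left(i-\tfrac{1}{2}\right)\right)}_{\texttt{mypi}\ \text{on rank}\ r},
\qquad h = \frac{1}{n}
$$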

```c
#include "mpi.h"
#include <stdio.h>
#include <math.h>

int main(int argc, char *argv[])
{
    int n, myid, numprocs, i;
    double PI25DT = 3.141592653589793238462643;
    double mypi, pi, h, sum, x;

    MPI_Init(&argc, &argv);
    MPI_Comm_size(MPI_COMM_WORLD, &numprocs);
    MPI_Comm_rank(MPI_COMM_WORLD, &myid);

    while (1) {
        if (myid == 0) {
            printf("Number of intervals: (0 quits) ");
            fflush(stdout);
            if (scanf("%d", &n) != 1)
                n = 0;                      /* bad input or EOF: quit */
        }
        /* rank 0 sends n to every process */
        MPI_Bcast(&n, 1, MPI_INT, 0, MPI_COMM_WORLD);
        if (n == 0)
            break;

        /* midpoint rule: each rank sums every numprocs-th interval */
        h = 1.0 / (double) n;
        sum = 0.0;
        for (i = myid + 1; i <= n; i += numprocs) {
            x = h * ((double) i - 0.5);
            sum += 4.0 / (1.0 + x * x);
        }
        mypi = h * sum;

        /* add up the partial sums on rank 0 */
        MPI_Reduce(&mypi, &pi, 1, MPI_DOUBLE, MPI_SUM, 0, MPI_COMM_WORLD);
        if (myid == 0)
            printf("pi is approximately %.16f, Error is %.16f\n",
                   pi, fabs(pi - PI25DT));
    }
    MPI_Finalize();
    return 0;
}
```
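To check the work split without an MPI installation, this sketch replays the program serially: `partial_pi()` computes what rank `myid` would store in `mypi`, and `reduced_pi()` adds the parts in a plain loop, standing in for `MPI_Reduce(..., MPI_SUM, ...)`. All function names here are hypothetical helpers for illustration, not part of MPI.

```c
#include <math.h>

/* f(x) = 4 / (1 + x^2); its integral over [0, 1] is pi */
static double f(double x) { return 4.0 / (1.0 + x * x); }

/* What rank `myid` of `numprocs` computes: intervals myid+1,
   myid+1+numprocs, ... evaluated at their midpoints. */
double partial_pi(int n, int myid, int numprocs)
{
    double h = 1.0 / (double) n, sum = 0.0;
    int i;
    for (i = myid + 1; i <= n; i += numprocs)
        sum += f(h * ((double) i - 0.5));
    return h * sum;
}

/* Serial stand-in for MPI_Reduce with MPI_SUM: add every rank's part. */
double reduced_pi(int n, int numprocs)
{
    double pi = 0.0;
    int r;
    for (r = 0; r < numprocs; r++)
        pi += partial_pi(n, r, numprocs);
    return pi;
}

/* Returns 1 if every interval 1..n is visited by exactly one rank. */
int intervals_covered_once(int n, int numprocs)
{
    int i, r, hits;
    for (i = 1; i <= n; i++) {
        hits = 0;
        for (r = 0; r < numprocs; r++)
            if (i - 1 - r >= 0 && (i - 1 - r) % numprocs == 0)
                hits++;
        if (hits != 1)
            return 0;
    }
    return 1;
}
```

Because the intervals are dealt out round-robin, every rank gets either floor(n/numprocs) or ceil(n/numprocs) of them, so the load is balanced to within one interval.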

2. Topologies and communicators

2.1

Communicators

Why? Example:

BMR (Broadcast Multiply Roll) matrix-matrix multiplication algorithm (s x s mesh of processes, with the matrices distributed in a 2D block layout):

```
for k = 0:s-1
    1) In each row i, the process holding block A(i, (i+k) mod s)
       broadcasts it to all other processes in row i.
    2) The processes in row i receive A(i, (i+k) mod s) in a local array T.
    3) for i = 0:s-1 and j = 0:s-1 in parallel
           C(i,j) = C(i,j) + T*B(i,j)
       end
    4) Upward circular shift of each column of B by 1:
           B(i,j) <-- B((i+1) mod s, j)
end
```
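One way to convince yourself that these four steps really compute C = A*B is to simulate them serially with 1 x 1 blocks, so that each "process" (i, j) holds a single number. The sketch assumes row-major storage and a hypothetical compile-time bound `BMR_MAX` on s; `bmr_serial` is an illustrative name, not part of any library.

```c
#include <string.h>

#define BMR_MAX 8   /* hypothetical upper bound on s for this sketch */

/* Serial simulation of BMR on an s x s grid of scalar blocks.
   A, B, C are row-major s*s arrays; B is copied, not modified.
   Returns 0 on success, -1 if s is out of range. */
int bmr_serial(int s, const double *A, const double *B, double *C)
{
    double Bw[BMR_MAX * BMR_MAX], Bnext[BMR_MAX * BMR_MAX], T[BMR_MAX];
    int i, j, k;

    if (s < 1 || s > BMR_MAX)
        return -1;
    memcpy(Bw, B, sizeof(double) * s * s);
    for (i = 0; i < s * s; i++)
        C[i] = 0.0;

    for (k = 0; k < s; k++) {
        /* steps 1-2: row i "broadcasts" A(i, (i+k) mod s) into T[i] */
        for (i = 0; i < s; i++)
            T[i] = A[i * s + (i + k) % s];
        /* step 3: local multiply-add on every process (i, j) */
        for (i = 0; i < s; i++)
            for (j = 0; j < s; j++)
                C[i * s + j] += T[i] * Bw[i * s + j];
        /* step 4: upward circular shift of every column of B */
        for (i = 0; i < s; i++)
            for (j = 0; j < s; j++)
                Bnext[i * s + j] = Bw[((i + 1) % s) * s + j];
        memcpy(Bw, Bnext, sizeof(double) * s * s);
    }
    return 0;
}
```

At the start of step k, B(i,j) holds the original B((i+k) mod s, j), so step 3 adds A(i,l)*B(l,j) with l = (i+k) mod s; as k runs over 0..s-1, l takes every value exactly once.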

In BMR, note that in each row we have to broadcast a block of A across the row. We cannot simply use the MPI_Bcast() call on MPI_COMM_WORLD covered earlier, because it sends the message to all processes, not just to those in the same row. This is where communicators come in handy: we can define multiple communicators, one for each row, and by broadcasting in a row's communicator we reach only the processes of that row.

MPI has intracommunicators and intercommunicators. Intracommunicators group processes and allow them to communicate with each other (point-to-point and collectively), while intercommunicators are for sending messages between two disjoint groups of processes. What we need here are intracommunicators, each of which consists of a group and a context. A group is an ordered set of processes, each assigned a unique rank 0, 1, ..., s-1 when the set has s processes. The context is an opaque, MPI-assigned identifier that uniquely identifies the communicator. You can have two communicators consisting of the identical set of s processes, but they will have different contexts (otherwise, you would have only one communicator!).

Here is an example code fragment for creating a group from

the second row of an s by s array of processes, as would be

needed for BMR:

```c
/* ----------------------------------------------- */
/* s = sqrt(p) here (needs <math.h>, <stdlib.h>)   */
/* ----------------------------------------------- */
MPI_Group group_world;
MPI_Group row2_group;
MPI_Comm  row2_comm;
int       *row2_ranks;
int       p, my_rank, s, k;

MPI_Init(&argc, &argv);
MPI_Comm_size(MPI_COMM_WORLD, &p);
MPI_Comm_rank(MPI_COMM_WORLD, &my_rank);
s = (int) sqrt((double) p);
row2_ranks = malloc(s * sizeof(int));

/* ----------------------------------------------- */
/* Create vector of MPI_COMM_WORLD ranks for row2  */
/* processes: s, s+1, ..., 2s-1                    */
/* ----------------------------------------------- */
for (k = s; k < 2*s; k++)
    row2_ranks[k-s] = k;

/* ----------------------------------------------- */
/* Find the group corresponding to MPI_COMM_WORLD  */
/* Note that MPI does the memory allocation needed */
/* ----------------------------------------------- */
MPI_Comm_group(MPI_COMM_WORLD, &group_world);

/* --------------------- */
/* Create the row2 group */
/* --------------------- */
MPI_Group_incl(group_world, s, row2_ranks, &row2_group);

/* ---------------------------------------------------- */
/* Create the new communicator; MPI assigns the context */
/* (processes not in row2_group get MPI_COMM_NULL)      */
/* ---------------------------------------------------- */
MPI_Comm_create(MPI_COMM_WORLD, row2_group, &row2_comm);
```

Once you have created the row2 communicator, you can broadcast in it via operations like:

```c
if (my_rank >= s && my_rank < 2*s) {       /* only row2 members have a valid row2_comm */
    MPI_Comm_rank(row2_comm, &row2_rank);
    if (row2_rank == 0) {
        /* ... set up buffer msg (m*m doubles) here */
    }
    MPI_Bcast(msg, m*m, MPI_DOUBLE, 0, row2_comm);   /* called by every row2 member */
}
```

Warning: MPI_Comm_create() is a collective operation, so all the processes in the old communicator must call it, even those that are not going to be part of the new row2 communicator.

2.2

Topologies

Creating a set of s communicators for the rows and another set of s communicators for the columns (to do the circular block shift of the matrix B) is clearly cumbersome. MPI lets you define an implicit virtual topology on the set of processes, corresponding to either a grid or a general graph. This does not magically provide new hardware interconnects for the machine, but it does allow you to take an algorithm naturally stated for a rectangular mesh of processors and implement it more directly. For the BMR algorithm, this needs to be a square s x s mesh of processes.

```c
MPI_Comm grid_comm;
int dim_sizes[2], torus[2], coords[2];
int reorder, grid_rank;

dim_sizes[0] = s;
dim_sizes[1] = s;
torus[0] = 1;   /* 1 (true) or 0 (false), we don't care for BMR */
torus[1] = 1;
reorder  = 1;

/* ----------------------------------------------- */
/* Create the Cartesian grid topology communicator */
/* ----------------------------------------------- */
MPI_Cart_create(MPI_COMM_WORLD, /* parent comm                       */
                2,              /* number of dimensions              */
                dim_sizes,      /* size of each dimension            */
                torus,          /* wrap around in each dimension?    */
                reorder,        /* allow MPI to optimally assign     */
                                /* processes to processors           */
                &grid_comm);    /* newly created communicator        */

/* ----------------------------------------- */
/* Find my rank in the new grid communicator */
/* ----------------------------------------- */
MPI_Comm_rank(grid_comm, &grid_rank);

/* ------------------------------------------- */
/* Find my coords in the new grid communicator */
/* ------------------------------------------- */
MPI_Cart_coords(grid_comm, grid_rank, 2, coords);
```

Note that the call to MPI_Comm_rank() is needed to

find the new rank in the grid communicator, since MPI was

given permission to reorder the processes (reorder = TRUE).

Then MPI_Cart_coords() returns the coordinates of the

calling process. The inverse, finding the rank given the

coordinates, uses MPI_Cart_rank().
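For a Cartesian topology, MPI numbers the processes in row-major order, so on an s x s grid the relation between grid rank and coordinates is simple arithmetic. The hypothetical helpers below mirror what `MPI_Cart_coords()` and `MPI_Cart_rank()` compute for such a grid, including the wrap-around that `MPI_Cart_rank()` applies to out-of-range coordinates in periodic (torus) dimensions. They are an illustration only; a real program should call the MPI functions on `grid_comm`.

```c
/* Row-major numbering on an s x s grid: grid rank = row*s + col. */

/* Same result as MPI_Cart_coords(grid_comm, grid_rank, 2, coords). */
void grid_coords(int grid_rank, int s, int coords[2])
{
    coords[0] = grid_rank / s;   /* row    */
    coords[1] = grid_rank % s;   /* column */
}

/* Same result as MPI_Cart_rank on a grid whose dimensions are both
   periodic: out-of-range coordinates are reduced modulo s first. */
int grid_rank_of(int row, int col, int s)
{
    row = ((row % s) + s) % s;
    col = ((col % s) + s) % s;
    return row * s + col;
}
```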

Implementing BMR will be eased with one more function:

MPI_Cart_sub() splits a grid into subgrids of lower

dimension, given a vector that specifies which dimensions

are to be free and which are to be fixed. Specifying the

row communicators takes the form:

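```c
/* grid_comm: the Cartesian communicator created by MPI_Cart_create above */
MPI_Comm row_comm, col_comm;
int free_coords[2];

free_coords[0] = 0;   /* row coordinate fixed    */
free_coords[1] = 1;   /* column coordinate free  */
MPI_Cart_sub(grid_comm, free_coords, &row_comm);
```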

and the column communicators:

```c
free_coords[0] = 1;   /* row coordinate free     */
free_coords[1] = 0;   /* column coordinate fixed */
MPI_Cart_sub(grid_comm, free_coords, &col_comm);
```

Using row_comm and col_comm, the BMR algorithm takes the following form (in C-like pseudo-code, with my_row = coords[0] and my_col = coords[1]):

```c
/* my_A_matrix, T, my_B_matrix: m*m doubles each; status: MPI_Status */
for (k = 0; k < s; k++) {
    sender = (my_row + k) % s;   /* column that holds A(my_row, (my_row+k) mod s) */
    if (sender == my_col) {
        MPI_Bcast(my_A_matrix, m*m, MPI_DOUBLE, sender, row_comm);
        /* .. then do local multiply with my_A_matrix */
    } else {
        MPI_Bcast(T, m*m, MPI_DOUBLE, sender, row_comm);
        /* .. then do local multiply with temp buffer T */
    }
    /* roll B up by one process within the column */
    MPI_Sendrecv_replace(my_B_matrix, m*m, MPI_DOUBLE, dest, 0,
                         source, 0, col_comm, &status);
}
```

Of course, you have to figure out who is dest and who is

source in the last call.
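For the upward roll B(i,j) <-- B((i+1) mod s, j), the process in row my_row receives from the row below it and sends to the row above it, with wrap-around. Since a process's rank in `col_comm` equals its row coordinate, the partners can be computed as in this sketch (`bmr_shift_partners` is a hypothetical name). With a periodic column dimension (torus[0] = 1), `MPI_Cart_shift(col_comm, 0, -1, &source, &dest)` should yield the same pair.

```c
/* Partners (ranks in col_comm) for B(i,j) <-- B((i+1) mod s, j). */
void bmr_shift_partners(int my_row, int s, int *dest, int *source)
{
    *source = (my_row + 1) % s;       /* row below: my new B block comes from here */
    *dest   = (my_row - 1 + s) % s;   /* row above: it needs my current B block    */
}
```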

There are other topologies possible, and using the graph

topology you can define arbitrary ones: hypercubes, rings,

etc. The grid is the most common one, however, which is why

it has been emphasized above.
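As an example of a graph topology, `MPI_Graph_create(comm_old, nnodes, index, edges, reorder, &graph_comm)` describes the graph with two arrays: `index[v]` is the cumulative number of neighbours of nodes 0..v, and `edges` lists the neighbours node by node. This sketch fills them for a d-dimensional hypercube, where two nodes are neighbours when their numbers differ in exactly one bit; `hypercube_graph` is a hypothetical helper name.

```c
/* Fills the arrays MPI_Graph_create expects for a d-dimensional
   hypercube with n = 2^d nodes. `index` needs n entries and `edges`
   needs n*d entries. Returns n. */
int hypercube_graph(int d, int *index, int *edges)
{
    int n = 1 << d, v, b, e = 0;
    for (v = 0; v < n; v++) {
        for (b = 0; b < d; b++)
            edges[e++] = v ^ (1 << b);   /* neighbour: flip bit b */
        index[v] = e;                    /* running total of neighbours */
    }
    return n;
}
/* Usage inside an MPI program with at least n processes:
   MPI_Graph_create(MPI_COMM_WORLD, n, index, edges, 1, &cube_comm); */
```

Processes whose rank in `comm_old` is at least `nnodes` receive `MPI_COMM_NULL` from `MPI_Graph_create()`.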

3. MPI-2

3.1

Why was it needed? Differences

MPI-1 left out many things that were hard to agree on for a standard.

What MPI-2 added:

- language issues (inter-language operation)
- dynamic process control/management
- establishing communication
- one-sided communication
- intercommunicator collective operations
- I/O, including parallel I/O (PIO)

3.2

Dynamic process management

All characteristics of message passing are contained within communicators. Communicators contain:

- process lists or groups
- communication structures (topologies)
- system-derived message tags (contexts), envelopes that separate messages from each other

All processes in an MPI-1 application belong to a global communicator called MPI_COMM_WORLD, and all other communicators are derived from this global communicator. Communication can only occur within a communicator, and this is what makes it safe: messages sent in one communicator cannot be confused with messages sent in another.

All process groups are derived from the membership of the MPI_COMM_WORLD communicator, which means there are no external processes: MPI-1 process membership is static, not dynamic. Advantages of the static model:

- simplified consistency reasoning
- fast communication (fixed addressing), even across complex topologies
- it interfaces well with the simple run-time systems found on many MPPs

Disadvantages of the static process model:

- if a process fails, all communicators it belongs to become invalid, i.e. there is no fault tolerance
- dynamic resources either cause applications to fail (due to loss of nodes) or make them inefficient, since they cannot take advantage of new nodes by starting/spawning additional processes
- with a dedicated MPP MPI implementation, you usually cannot use off-machine or even off-partition nodes

MPI-2 provides a spawn (or remote start) call. Its availability depends on:

- the implementation you have (LAM 6.X+ supports it, MPICH 1.3.1 does not)
- the vendor version (NEC/SUN)

It comes in two flavors:

- MPI_Comm_spawn() starts new processes from a single binary and returns an intercommunicator to them
- MPI_Comm_spawn_multiple() starts new processes from more than one binary (also returning an intercommunicator)

3.3

Parallel I/O operations

3.4

One-sided communication

Normal message-passing operations need at least two parties:

- a sender, who performs a send call
- a receiver, who performs a receive call

Why is this? And what does it have to do with memory management/protection?

- Earlier Cray MPP systems allowed processes to access other processes' memory remotely via the SHMEM library calls shmem_get and shmem_put.
- This is known as Remote Memory Access (RMA).


Remote Memory Access (RMA):

- is fast
- can allow for simple program design
- an operation specifies all the send and receive arguments together


Data (memory) in a fixed range (a window) is made available to other processes with an MPI_Win_create() call.


- Data in the window can then be accessed via MPI_Put(), MPI_Get() and MPI_Accumulate().
- Synchronize with the MPI_Win_* synchronization calls.
- The window is freed with MPI_Win_free().
- The communication calls (put/get/accumulate) are non-blocking.
- The operation takes effect sometime after the call, but no later than the next synchronization point.

MPI-2: One-sided communications

RMA communication falls into two classes.

- Active target:
  - memory is moved from one process to another
  - one process calls the move
  - both must call the synchronization (including the owner of the target memory)
  - like message passing

- Passive target:
  - memory is moved between an origin process and a target process
  - only the origin process calls the move
  - only the origin synchronizes (completes) its move; the target does not need to synchronize
  - like shared memory

3.5

Language issues

Problems arising because MPI specifies both ANSI C and F77 bindings:

- there is no agreed standard for data type conversions between languages, even on the same architecture
- communicators cannot be passed between modules written in different languages, because of their representation:
  - in F77 a communicator is an INTEGER
  - in C it is usually a pointer to a structure
- non-standard methods exist in different implementations

Partially solved by C conversion wrappers (F2C) and by overloaded C++ operators called on the C++ side (C2C++).