Computational Physics

Lecture 10

Dr. Guy Tel-Zur

Agenda

• Quantum Monte Carlo

• StarHPC

• Parallel Matlab

• GPGPU Computing

• Hybrid Parallel Computing (MPI + OpenMP)

• OpenFoam

Administration

• The last lecture has been moved. Instead of Thursday, 6/1/11, it will take place on Sunday, 2/1/11, 17:00-20:00, in Building 90, Room 141.

• Final projects: some students have not yet chosen a topic or have not emailed me their topic.

*** Urgent: settle your projects ***

News…

• AMD: 16 cores in 2011

– How can a scientist continue to program in the old-fashioned serial way? Do you want to use only 1/16 of the power of your computer?

Quantum Monte Carlo

• MHJ Chapter 11

Star-HPC

• http://web.mit.edu/star/hpc/index.html

• StarHPC provides an on-demand computing cluster configured for parallel programming in both OpenMP and OpenMPI technologies. StarHPC uses Amazon's EC2 web service to completely virtualize the entire parallel programming experience, allowing anyone to quickly get started learning MPI and OpenMP programming.

Username: mpiuser

Password: starhpc08

Parallel Matlab

Unfortunately, Star-P is dead.

MatlabMPI

http://www.ll.mit.edu/mission/isr/matlabmpi/matlabmpi.html#introduction

MatlabMPI Demo

Installed on the vdwarf machines

Add to Matlab path:

vdwarf2.ee.bgu.ac.il> cat startup.m
addpath /usr/local/PP/MatlabMPI/src
addpath /usr/local/PP/MatlabMPI/examples
addpath ./MatMPI

xbasic

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%
% Basic Matlab MPI script that
% prints out a rank.
%
% To run, start Matlab and type:
%
%   eval( MPI_Run('xbasic',2,{}) );
%
% Or, to run a different machine type:
%
%   eval( MPI_Run('xbasic',2,{'machine1' 'machine2'}) );
%
% Output will be piped into two files:
%
%   MatMPI/xbasic.0.out
%   MatMPI/xbasic.1.out
%
%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%
% MatlabMPI
% Dr. Jeremy Kepner
% MIT Lincoln Laboratory
% kepner@ll.mit.edu
%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Initialize MPI.
MPI_Init;

% Create communicator.
comm = MPI_COMM_WORLD;

% Modify common directory from default for better performance.
% comm = MatMPI_Comm_dir(comm,'/tmp');

% Get size and rank.
comm_size = MPI_Comm_size(comm);
my_rank = MPI_Comm_rank(comm);

% Print rank.
disp(['my_rank: ',num2str(my_rank)]);

% Wait momentarily.
pause(2.0);

% Finalize Matlab MPI.
MPI_Finalize;
disp('SUCCESS');

% Every process except the host rank exits Matlab.
if (my_rank ~= MatMPI_Host_rank(comm))
    exit;
end

Demo folder: ~/matlab/. Watch top on the other machine while the demo runs.

GPGPU

An interesting new article

URL: http://www.computer.org/portal/c/document_library/get_file?uuid=2790298b-dbe4-4dc7-b550b030ae2ac7e1&groupId=808735

GPGPU and Matlab

http://www.accelereyes.com

GP-you.org

>> GPUstart
Copyright gp-you.org. GPUmat is distribuited as Freeware.
By using GPUmat, you accept all the terms and conditions
specified in the license.txt file.
Please send any suggestion or bug report to gp-you@gp-you.org.
Starting GPU
- GPUmat version: 0.270
- Required CUDA version: 3.2
There is 1 device supporting CUDA
CUDA Driver Version:                       3.20
CUDA Runtime Version:                      3.20
Device 0: "GeForce 310M"
  CUDA Capability Major revision number:   1
  CUDA Capability Minor revision number:   2
  Total amount of global memory:           455475200 bytes
  - CUDA compute capability 1.2
...done
- Loading module EXAMPLES_CODEOPT
- Loading module EXAMPLES_NUMERICS
  -> numerics12.cubin
- Loading module NUMERICS
  -> numerics12.cubin
- Loading module RAND

Let’s try this

Executed on GPU:

A = rand(100, GPUsingle); % A is on GPU memory
B = rand(100, GPUsingle); % B is on GPU memory
C = A+B;                  % executed on GPU
D = fft(C);               % executed on GPU

Executed on CPU:

A = single(rand(100));    % A is on CPU memory
B = double(rand(100));    % B is on CPU memory
C = A+B;                  % executed on CPU
D = fft(C);               % executed on CPU

GPGPU Demos

OpenCL demos are here:

C:\Users\telzur\AppData\Local\NVIDIA Corporation\NVIDIA GPU Computing SDK\OpenCL\bin\Win64\Release

and here:

C:\Users\telzur\AppData\Local\NVIDIA Corporation\NVIDIA GPU Computing SDK\SDK Browser

My laptop has an NVIDIA GeForce 310M with 16 CUDA cores.

Demo: oclParticles.exe

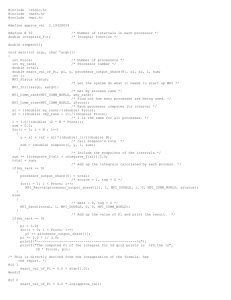

Hybrid MPI + OpenMP Demo

Machine file:

hobbit1
hobbit2
hobbit3
hobbit4

Each hobbit has 8 cores: MPI communicates between the machines, and OpenMP threads share the cores within each machine.

MPI is not installed on the hobbits yet. In the meantime, use:

vdwarf5
vdwarf6
vdwarf7
vdwarf8

Compile:

mpicc -o mpi_out mpi_test.c -fopenmp

Demo: cd ~/mpi, program name: hybridpi.c

Monitor the threads while it runs:

top -u tel-zur -H -d 0.05

-H: show threads, -d: refresh delay, -u: user

An idea for a final project!
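The hybrid scheme of this demo (MPI between machines, OpenMP threads inside each machine) might look roughly like the sketch below. It is not the lecture's hybridpi.c; the file name hybridpi_sketch.c, the function partial_pi, and the USE_MPI macro are all hypothetical names chosen for illustration. Each MPI rank takes every size-th interval of a midpoint-rule estimate of pi, OpenMP splits that rank's loop among its threads, and MPI_Reduce adds up the partial results on rank 0.

```c
/* hybridpi_sketch.c: an illustrative sketch only (hypothetical names). */
#include <assert.h>
#include <math.h>
#include <stdio.h>
#ifdef USE_MPI
#include <mpi.h>
#endif

/* This rank's share of the midpoint-rule sum for
 * pi = integral from 0 to 1 of 4/(1+x^2) dx, with n intervals in total.
 * Intervals are dealt out cyclically: rank r takes i = r, r+size, ... */
double partial_pi(int rank, int size, long n)
{
    assert(n > 0 && size > 0 && rank >= 0 && rank < size);
    const double h = 1.0 / (double)n;
    double sum = 0.0;

    /* OpenMP level: the threads of this process share the loop.
     * Without -fopenmp the pragma is ignored and the loop runs serially. */
    #pragma omp parallel for reduction(+:sum)
    for (long i = rank; i < n; i += size) {
        const double x = h * ((double)i + 0.5);
        sum += 4.0 / (1.0 + x * x);
    }
    return h * sum;
}

#ifdef USE_MPI
/* MPI level: one process per machine; built only with -DUSE_MPI. */
int main(int argc, char **argv)
{
    int rank, size, provided;
    const long n = 100000000L;
    double local, pi = 0.0;

    /* FUNNELED: only the main thread makes MPI calls. */
    MPI_Init_thread(&argc, &argv, MPI_THREAD_FUNNELED, &provided);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);
    MPI_Comm_size(MPI_COMM_WORLD, &size);

    local = partial_pi(rank, size, n);
    MPI_Reduce(&local, &pi, 1, MPI_DOUBLE, MPI_SUM, 0, MPI_COMM_WORLD);

    if (rank == 0)
        printf("pi ~= %.12f (error %.2e)\n",
               pi, fabs(pi - 3.141592653589793));

    MPI_Finalize();
    return 0;
}
#endif
```

Assuming a working MPI installation, something like `mpicc -DUSE_MPI -fopenmp -o hybridpi_sketch hybridpi_sketch.c -lm` should build it. Launching one process per machine with OMP_NUM_THREADS=8 would match the 8 cores per node; the exact launcher options for the machine file depend on the MPI implementation.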

Hybrid MPI+OpenMP continued

OpenFoam