MUSC Information Security Guidelines: Risk Management

advertisement

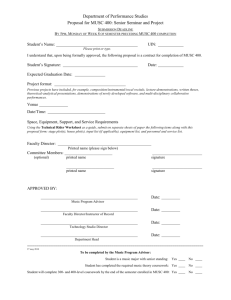

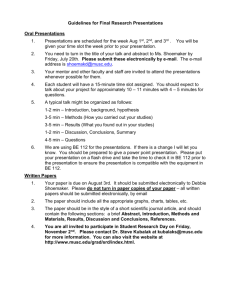

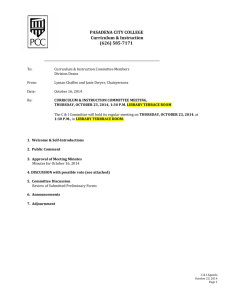

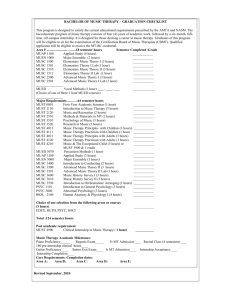

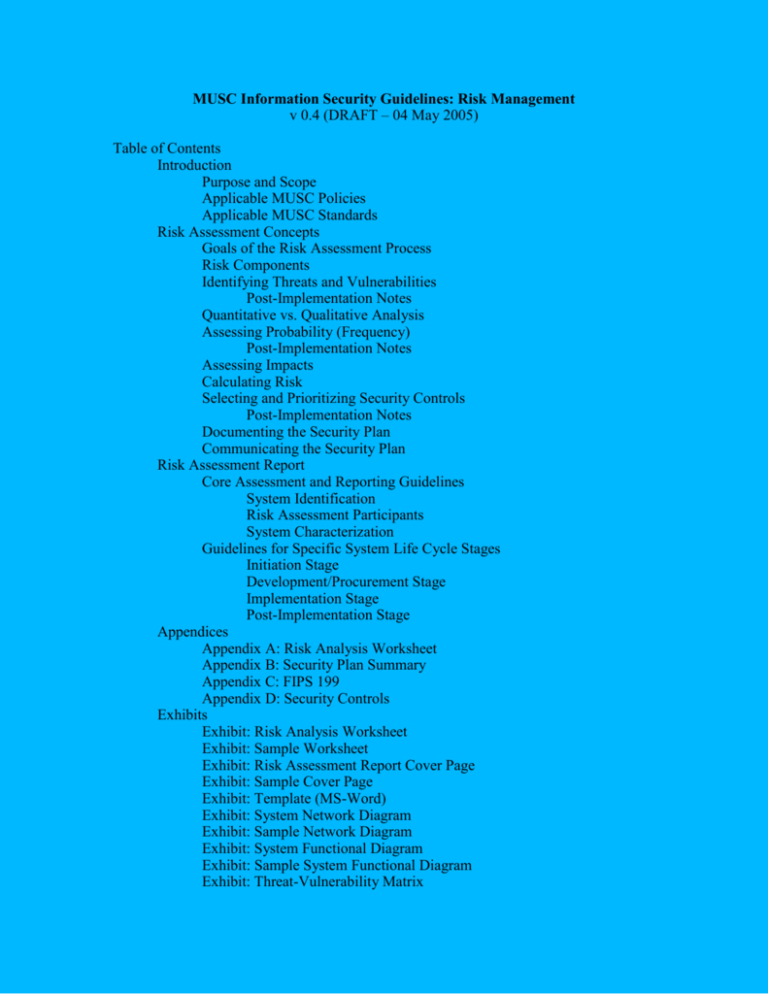

MUSC Information Security Guidelines: Risk Management v 0.4 (DRAFT – 04 May 2005)

Table of Contents

Introduction
    Purpose and Scope
    Applicable MUSC Policies
    Applicable MUSC Standards
Risk Assessment Concepts
    Goals of the Risk Assessment Process
    Risk Components
    Identifying Threats and Vulnerabilities
        Post-Implementation Notes
    Quantitative vs. Qualitative Analysis
    Assessing Probability (Frequency)
        Post-Implementation Notes
    Assessing Impacts
    Calculating Risk
    Selecting and Prioritizing Security Controls
        Post-Implementation Notes
    Documenting the Security Plan
    Communicating the Security Plan
Risk Assessment Report
    Core Assessment and Reporting Guidelines
        System Identification
        Risk Assessment Participants
        System Characterization
    Guidelines for Specific System Life Cycle Stages
        Initiation Stage
        Development/Procurement Stage
        Implementation Stage
        Post-Implementation Stage
Appendices
    Appendix A: Risk Analysis Worksheet
    Appendix B: Security Plan Summary
    Appendix C: FIPS 199
    Appendix D: Security Controls
Exhibits
    Exhibit: Risk Analysis Worksheet
    Exhibit: Sample Worksheet
    Exhibit: Risk Assessment Report Cover Page
    Exhibit: Sample Cover Page
    Exhibit: Template (MS-Word)
    Exhibit: System Network Diagram
    Exhibit: Sample Network Diagram
    Exhibit: System Functional Diagram
    Exhibit: Sample System Functional Diagram
    Exhibit: Threat-Vulnerability Matrix

1. Introduction

1.1. Purpose and Scope

These guidelines are intended to help MUSC System Owners to meet the risk assessment and risk management responsibilities that are assigned to them by MUSC's information security policies. These guidelines apply to all MUSC faculty, students and staff who serve in system ownership roles, in all of the entities that comprise the MUSC enterprise.

1.2. Applicable MUSC Policies

Information Security
Information Security – Risk Management
Information Security – Evaluation
Information Security – Documentation

1.3. Applicable MUSC Standards

MUSC Information Security Standards: Risk Management

2. Risk Assessment Concepts

2.1 Goals of the Risk Assessment Process

All information security risk assessments at MUSC serve the same basic purpose: to select a rational set of security controls or safeguards that will minimize the total cost of information security to the MUSC enterprise, while also meeting all regulatory requirements and accreditation standards. The total cost of security includes both the cost of security controls, and the cost of security breaches.

The fundamental goal of information security is to protect against threats to the confidentiality, integrity, and availability of information. This goal can be expressed in terms of meeting three basic types of security objectives:

Confidentiality: Preserving authorized restrictions on information access and disclosure, including means for protecting personal privacy and proprietary information.
Integrity: Guarding against improper information modification or destruction, and includes ensuring information non-repudiation and authenticity.
Availability: Ensuring timely and reliable access to and use of information.

When dealing with information protection, it is important to recognize that there is no such thing as perfect security. And while too little security leads to unacceptable risks, trying to impose too much security (or the wrong kinds of security) on the users, operators or administrators of a system is a waste of money, and isn't likely to be effective anyway. The goal of risk assessment is to determine the right amounts and the right kinds of security, needed to achieve a reasonable, appropriate, and responsible degree of protection, at the lowest possible total cost.
Risk assessment has two other important goals:

● Regulatory compliance
● Maintaining public confidence in MUSC

State and federal regulators, and the public at large, expect MUSC's senior management to exercise due diligence in assuring the protection of all the sensitive and critical information that is entrusted to MUSC's stewardship. Senior management in turn expects us to deliver appropriate information protection, and to deliver it efficiently. We cannot meet these expectations if we fail to understand our risks, if we fail to develop plans to manage our risks efficiently and effectively, or if we fail to execute those plans. This is what risk assessment is all about, and why it is such a critical piece of MUSC's information security program.

At a very high level, two components contribute to the total cost of security for a system. The first component is the summed cost of all the system's security components themselves, for example, the costs of administering user accounts and passwords, and the costs of setting up and operating routine data backup and recovery procedures. The other major cost component arises from the expected cost (damages) created by security breaches, for example, the costs of lawsuits, fines and reputation damage that would be incurred if a system were compromised and sensitive and/or critical information about patients or other customers were destroyed, or exposed to the wrong people.

As a rule, the more we invest in security controls for a system (as long as we invest our money rationally), the less we expect to need to spend on damages from security breaches, and vice versa. This general principle is illustrated in the following graph:

Figure 2.1-1: Optimal Level of Security

An effective risk assessment process for a given system is one that enables the owner of the system to locate and pursue the optimal level of security.
The assessment process should lead not only to the right amount of security for a system, but also to the right types of security for the system. The latter objective can be achieved only by first identifying the most significant risks that affect the system, and then by selecting and implementing the most cost-effective controls for managing those risks. The most significant risks are those that contribute the most to the expected cost of security breaches.

The assessment process requires us to identify the most significant risks to a system, to understand the various techniques that can be used to control these risks, to understand the organization's ability and capacity to implement these controls, and last but not least, to be aware of any minimum security control standards that must be met as a result of security policies or regulations that apply to the system.

Given the depth and breadth of knowledge and skills required, it should not be surprising that an effective risk assessment often requires a multidisciplinary team effort. The more risks that potentially affect a system, and the more people within the MUSC enterprise that the system touches, the more important it is for you, as the system owner, to assemble a knowledgeable and skilled risk assessment team.

2.2. Risk Components

An information security risk can arise from anything that threatens the availability, integrity or confidentiality of information. Risks are a function of the specific threats and vulnerabilities that potentially affect your system, the probability (likelihood) of their affecting your system, and the potential impacts on your system, on the MUSC enterprise, and on individuals if they do occur.

2.3 Identifying Threats and Vulnerabilities

A threat is defined as the potential for a "threat-source" to intentionally exploit or accidentally trigger a specific vulnerability.
In a somewhat circular fashion, a vulnerability is defined as a weakness in a system's security procedures, design, implementation, or internal controls, that could be accidentally triggered or intentionally exploited, and result in a violation of the system's security policies.

For a violation (breach) to occur, both a threat and a vulnerability have to exist. A specific type of security breach will result from a specific threat acting upon a specific vulnerability. In other words, a particular threat-vulnerability pair will, in a sense, define a particular type of security breach. For all practical purposes, the terms "security breach" and "threat-vulnerability pair" are equivalent.

The first step in assessing the risks to your system is to start developing a list of all the threat-vulnerability pairs that could affect your system. We strongly recommend that you use a Risk Analysis Worksheet to record the list of threat-vulnerability pairs that your assessment team generates. See Appendix A for more information on how to use our recommended Risk Analysis Worksheet tool.

But before they can identify potential threats and vulnerabilities, the members of your assessment team will need to have a solid understanding of how your system is put together (or how you expect it to be put together, if it is still in the conceptual or development stage). Network diagrams, information flow diagrams, and tables of hardware and software components are essential tools for understanding a system. The boundaries of your system, and its interfaces with external systems, network infrastructure components, and end-user devices, must also be clearly understood by the members of your assessment team. If you haven't already done so, you will need to develop the appropriate diagrams and tables, and familiarize your assessment team members with the architecture of your system, before going any further.
Developing a list of potential threat-vulnerability pairs is best done as an exercise involving the entire assessment team. Try to keep the team members focused on threats and vulnerabilities that they can reasonably anticipate, that have a non-negligible probability of occurring, and that would have a non-negligible impact if they did occur. Your list doesn't need to be 100% complete before you can move on to the next step in your assessment; your team can add to the list later if they realize that they have overlooked a significant threat or vulnerability.

2.3.1 Identifying Threats and Vulnerabilities: Post-Implementation

If you are performing a Post-Implementation risk assessment, then your assessment team should be thoroughly familiar not only with the system's current architecture, but also with its current state. The assessment team needs to be aware of the results of any evaluations of the security controls that have already been implemented with the system. If no recent evaluation has taken place, then an overall evaluation of the system's current security controls should be completed before attempting a Post-Implementation risk assessment.

If the system's existing security controls are known to be working effectively, then the risk assessment team can focus all of its attention on assessing the "new" risk issues that arise from the specific environmental, operational, regulatory, or policy change(s) that motivated the Post-Implementation risk assessment in the first place. In other words, if all of the "old" risk issues are still under control, then the Post-Implementation assessment team does not really need to worry about them.
On the other hand, if a recent evaluation has shown that any of the existing controls have not proved to be effective, then the assessment team needs to broaden the scope of its review to include all of the "old" underlying risk issues (threat-vulnerability pairs and/or policy/regulatory issues) that originally motivated the implementation of the "old" controls that subsequently turned out to be ineffective. In other words, any Post-Implementation risk assessment team does need to worry about any "old" risk issues that are not under control.

2.4 Quantitative vs. Qualitative Analysis

The type of risk posed by a particular type of potential security breach is defined by the threat and the vulnerability that together create the risk of the potential breach. The level of risk depends on two additional factors:

● the probability that the threat will act upon the vulnerability
● the potential impact of the breach that would result

For the purposes of risk assessment, we usually express the probability of an event in terms of its expected frequency of occurrence.

There are two basic approaches to risk assessment: quantitative and qualitative. In a quantitative assessment, your objective is to express the level of risk in cold, hard, monetary terms. To do this, you must first document a numerical value for the probability of occurrence of each type of potential security breach; you'll have to use whatever valid historical frequency data you can find that would be applicable to your system. To quantify potential impacts, you need to compute an actual monetary value for the total expected losses from each type of security breach; you'll have to do a detailed and thorough financial impact analysis to compute this quantity.
In theory, a quantitative risk assessment is ideal for risk-based decision-making, because it allows the risk assessment team to precisely compute the level of risk from each type of potential breach, and express the level of risk in cold, hard, monetary terms. This is usually done using the formula for Annualized Loss Expectancy (ALE), in which the expected annual loss from each type of potential breach is simply its frequency (expected number of breaches of that type per year) multiplied by its impact (total expected losses from each breach of that type, in dollars).

$$\mathrm{ALE}_x = \mathrm{Frequency}_x \times \mathrm{Impact}_x$$

For a given type of security breach x, the annualized loss expectancy due to x is simply the product of two factors: the expected frequency of x (the expected number of occurrences of x per year), and the potential impact of each occurrence of x (the total expected damages and losses from each occurrence, in dollars).

By computing an ALE for each type of potential breach, the risk assessment team can assign a dollar value to each type of risk. Then, by sorting the risks by their ALEs, the team can assign each risk the proper priority for management.

Alas, a significant problem with quantitative risk analysis is that most threat probabilities and impacts are extremely difficult to quantify. Security breaches occur relatively rarely, and organizations tend not to publicize them; as a result, most sources of incidence information are essentially anecdotal, and cannot be used to support the development of reliable probability or frequency estimates. Likewise, the total expected cost of the potential losses from a given type of breach is hard to estimate, and it may depend on factors such as length of downtime, amount of adverse publicity, and other such factors that are highly variable and inherently difficult to predict without a great deal of uncertainty.

Because of the uncertainty problem, we recommend a qualitative approach to risk assessment.
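As a rough illustration of the ALE formula described above, the following Python sketch computes an ALE for a few breach types and sorts them by expected annual loss. The class and function names, the breach descriptions, and all of the dollar and frequency figures are hypothetical; they are not taken from any MUSC assessment.

```python
from dataclasses import dataclass


@dataclass
class BreachType:
    """One type of potential breach (a threat-vulnerability pair)."""
    name: str
    frequency_per_year: float  # expected number of occurrences per year
    impact_dollars: float      # expected total loss per occurrence, in dollars


def annualized_loss_expectancy(breach: BreachType) -> float:
    """ALE_x = Frequency_x * Impact_x."""
    return breach.frequency_per_year * breach.impact_dollars


def rank_by_ale(breaches):
    """Return the breach types sorted from highest to lowest ALE."""
    return sorted(breaches, key=annualized_loss_expectancy, reverse=True)


# Hypothetical example data, for illustration only.
breaches = [
    BreachType("virus outbreak on workstations", 4.0, 10_000.0),
    BreachType("stolen laptop with unencrypted data", 0.5, 200_000.0),
    BreachType("backup media failure", 0.1, 50_000.0),
]

for breach in rank_by_ale(breaches):
    ale = annualized_loss_expectancy(breach)
    print(f"{breach.name}: ${ale:,.0f} per year")
```

The arithmetic is trivial; as the text notes, the hard part is obtaining frequency and impact inputs that are reliable enough to justify the apparent precision of the result.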
In a qualitative assessment, you determine relative risk rather than absolute risk. At the cost of some (debatable) loss of precision, you simplify your analysis significantly. In a qualitative analysis, you don't attempt to quantify risk precisely. Instead, you try to produce good, but rough, estimates of risk levels. For most assessments, a simple scale with just three levels of risk (Low, Moderate, and High) is good enough to allow your risk assessment team to identify the most significant risks, and to assign mitigation priorities with a reasonable degree of confidence that all significant risks will be addressed.

2.5. Assessing Probability (Frequency)

Recall the two factors that contribute to the level of risk created by a given threat-vulnerability combination:

● the probability that the threat will act upon the vulnerability
● the potential impact of the breach that would result

In risk analysis, we usually express the probability of an event in terms of its expected frequency of occurrence. At MUSC, in keeping with our qualitative approach, we recommend using a simple three-point scale, as in the following example, for rating the estimated probability of a given type of breach:

Rating      Frequency of Occurrence (Expected)
High        > 12 times per year
Moderate    1 - 11 times per year
Low         < 1 time per year

Table 2.5-1: Probability (Frequency) Ratings

Remember, the whole point of a qualitative risk assessment is to assess relative risks, and to determine which risks are the most significant and therefore should be assigned the highest priority for management. If using the frequency ratings suggested above results in all, or nearly all, of your potential breaches being assigned the same probability, then you should consider adjusting your frequency thresholds, in order to get some useful separation; otherwise, you'll ultimately find it harder to identify the threat-vulnerability pairs that pose the highest risks.
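The three-point frequency scale above can be sketched as a small Python function with adjustable thresholds. This is only an illustration: the function and parameter names are hypothetical, and because Table 2.5-1 as printed does not assign a rating to exactly 12 occurrences per year (or to fractional values between 11 and 12), the sketch assumes that 12 or more per year is High and that anything from 1 up to (but not including) 12 is Moderate.

```python
def rate_frequency(expected_per_year: float,
                   moderate_from: float = 1.0,
                   high_from: float = 12.0) -> str:
    """Map an expected yearly frequency to Low, Moderate, or High.

    Assumption: boundary values are resolved as
    Low < moderate_from <= Moderate < high_from <= High.
    """
    if expected_per_year < 0:
        raise ValueError("expected frequency cannot be negative")
    if expected_per_year >= high_from:
        return "High"
    if expected_per_year >= moderate_from:
        return "Moderate"
    return "Low"


# Default thresholds, following Table 2.5-1 (with the boundary assumption above).
print(rate_frequency(0.2))   # roughly once every five years
print(rate_frequency(4))     # quarterly
print(rate_frequency(52))    # weekly

# If nearly every breach type lands in the same bucket, the thresholds can be
# adjusted to get more useful separation, as the text suggests.
print(rate_frequency(0.2, moderate_from=0.1, high_from=1.0))
```

Keeping the thresholds as parameters rather than constants reflects the advice above: the scale exists to separate risks from one another, so the cut-offs should be tuned to the system being assessed.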
We strongly recommend that you use your Risk Analysis Worksheet to record the assessed Probability (Frequency) rating for each threat-vulnerability pair that your assessment team generated. See Appendix A for more information on how to use our recommended Risk Analysis Worksheet tool.

2.5.1. Assessing Probability (Frequency): Post-Implementation

If you are performing a Post-Implementation risk assessment, then your assessment team should generally rate the probabilities of threat-vulnerability occurrences with respect to their understanding of the system's current state, as derived from the most recent available evaluation of the effectiveness of the system's existing security controls. For example, if the system already has an effective security control in place to reduce the probability of a potential issue from "High" to "Low", then that should be reflected in your rated Probability (Frequency) for that potential issue.

2.6. Assessing Impacts

The second factor that determines the level of risk created by a given threat-vulnerability pair is the potential impact of the breach that would result if that specific threat did exercise that specific vulnerability.

The impact of a breach depends on the effects that the breach would have on MUSC operations, on MUSC assets, and on individuals (typically students, patients, or other customers). Depending on the particular threat-vulnerability pair and the particulars of your system, the breach could result in one or more undesirable types of outcomes, including the disclosure or unauthorized viewing of confidential information, the unauthorized modification of sensitive information, the loss or destruction of important information, or an interruption in availability or service.
Undesirable outcomes like these can potentially affect MUSC and its customers in many different ways, including the following:

● Life, health and well-being of MUSC's students
● Life, health and well-being of MUSC's patients
● Life, health and well-being of other MUSC customers
● Life, health and well-being of MUSC's faculty or employees
● Damage to MUSC's reputation and loss of customer confidence
● Interference with MUSC's ability to meet its mission obligations
● Fines, damages, settlements, and other legal costs

To characterize the overall impact of a particular type of breach affecting a particular system, you should consider potential impacts in all of these areas, and in any other areas that may be relevant to your system. The overall rating that you give to the impact of a security breach should be the "high water mark" of its impact across all areas. For example, if the impact in all areas is low, then rate the overall impact as Low. Likewise, if the highest impact among all areas is moderate, then rate the overall impact as Moderate. And finally, if the impact in any area is high, then rate the overall impact as High.

The NIST Standards for Security Categorization of Federal Information and Information Systems were published as Federal Information Processing Standards Publication 199 (FIPS 199) in February 2004. This document established a simple impact rating standard that can be used equally well inside and outside the federal government.

FIPS 199 defines a simple, three-level rating system for potential impacts. Three standard impact ratings (Low, Moderate, and High) are defined as follows:

● The potential impact is Low if: The loss of confidentiality, integrity, or availability could be expected to have a limited adverse effect on organizational operations, organizational assets, or individuals.
● The potential impact is Moderate if: The loss of confidentiality, integrity, or availability could be expected to have a serious adverse effect on organizational operations, organizational assets, or individuals.
● The potential impact is High if: The loss of confidentiality, integrity, or availability could be expected to have a severe or catastrophic adverse effect on organizational operations, organizational assets, or individuals.

Obviously, the terms "limited," "serious," and "severe or catastrophic" are subject to interpretation, but the FIPS 199 standard does include a careful "amplification" on the meaning of these terms. For more information on how to rate potential impact using the FIPS 199 standard, please refer to Appendix C.

We strongly recommend that you use your Risk Analysis Worksheet to record the assessed Impact rating for each threat-vulnerability pair that your assessment team generated. See Appendix A for more information on how to use our recommended Risk Analysis Worksheet tool.

2.7. Calculating Risk

Recall that for a given type of security breach or threat-vulnerability combination, the level of risk that it poses is the product of two factors: its probability, and its potential impact.

In a qualitative risk assessment, we do not attempt to literally calculate levels of risk, in the same sense that we would calculate an Annualized Loss Expectancy or ALE in a quantitative assessment. But we do still need to be able to "calculate" different levels of risks so that we can compare them, even though the two basic risk factors (probability and impact) are expressed in non-numeric, qualitative terms.

If you use a Low-Moderate-High scale for assessing your two risk factors, as recommended in these guidelines, then you can use the following multiplication table to determine a qualitative Risk level for each threat-vulnerability pair.
In Table 2.7-1, the row headings denote your rated potential Impact of a particular breach, and the column headings denote your rated Probability (Frequency). The entries within the table then give you a qualitative rating of the overall Risk level, which is "computed" as the "product" of Impact and Probability:

                      Probability (Frequency)
Impact        Low         Moderate    High
Low           Low         Low         Moderate
Moderate      Low         Moderate    High
High          Moderate    High        High

Table 2.7-1: Qualitative Risk Multiplication Table

For example, if the rated Impact is Low, then you should rate the Risk level as Low if the rated Probability is Low or Moderate, and you should rate the Risk level as Moderate if the Probability is rated High, and so on.

There is an important caveat to keep in mind when rating risk levels, no matter what methodology or formula you use. Not all "equally rated" risks should necessarily be viewed or treated equally.

For example, a high-impact, low-probability breach, and a low-impact, high-probability breach will both end up having the same "Moderate" risk-level rating when you use the formula in Table 2.7-1. But the impact of the first breach would be considered severe or catastrophic, even if it is unlikely to actually occur, while the impact of the second breach would be considered relatively minor, even if it is expected to occur often.

Which type of breach poses the most significant risk? The devil, as they say, is in the details. Your team might well conclude that the first type of breach is much more important to protect against, because it could literally put MUSC out of business if it ever does occur, and therefore assign it a higher priority when selecting and prioritizing security controls.

There are limitations in any formal risk assessment methodology. You can and should use your assessments of relative risk levels, whether derived quantitatively or qualitatively, to help you select and prioritize the security controls for your system, as we'll discuss in the next section.
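The "high water mark" rule for impact and the lookup in Table 2.7-1 can be sketched in Python as follows. The function names and the sample threat-vulnerability pairs are hypothetical. The tie-break in `prioritize` (ranking equally rated risks by impact) is just one possible way to reflect the caveat above, not a rule stated in these guidelines, and it is no substitute for the team's judgment.

```python
LEVELS = ("Low", "Moderate", "High")  # ordered from least to most severe

# Table 2.7-1, keyed as (impact, probability) -> risk level.
RISK_TABLE = {
    ("Low", "Low"): "Low",
    ("Low", "Moderate"): "Low",
    ("Low", "High"): "Moderate",
    ("Moderate", "Low"): "Low",
    ("Moderate", "Moderate"): "Moderate",
    ("Moderate", "High"): "High",
    ("High", "Low"): "Moderate",
    ("High", "Moderate"): "High",
    ("High", "High"): "High",
}


def overall_impact(area_ratings):
    """High water mark: the highest impact rating across all impact areas."""
    if not area_ratings:
        raise ValueError("at least one impact area rating is required")
    return max(area_ratings, key=LEVELS.index)


def risk_level(impact, probability):
    """Look up the qualitative risk level in Table 2.7-1."""
    return RISK_TABLE[(impact, probability)]


def prioritize(pairs):
    """Sort (name, impact, probability) tuples from highest to lowest risk.

    Assumption: equally rated risks are ordered by impact, highest first.
    """
    def sort_key(pair):
        _, impact, probability = pair
        return (LEVELS.index(risk_level(impact, probability)),
                LEVELS.index(impact))
    return sorted(pairs, key=sort_key, reverse=True)


# Hypothetical worksheet rows, for illustration only.
pairs = [
    ("nuisance spam reaching mailboxes", "Low", "High"),
    ("data center fire without off-site backups", "High", "Low"),
    ("mistyped entry in a non-critical field", "Low", "Low"),
]
for name, impact, probability in prioritize(pairs):
    print(f"{risk_level(impact, probability):8s} {name}")
```

Encoding the table as an explicit dictionary, rather than deriving it from a numeric formula, keeps the sketch faithful to the printed table and makes it easy to adjust individual cells if a team adopts a different matrix.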
You also need to exercise good judgment, however, and be aware of the limitations of whatever formal risk assessment methodology you use.

We strongly recommend that you use your Risk Analysis Worksheet to record the assessed Risk level rating for each threat-vulnerability pair that your assessment team generated. See Appendix A for more information on how to use our recommended Risk Analysis Worksheet tool.

2.8 Selecting and Prioritizing Security Controls

At this point, your team has identified threat-vulnerability pairs, rated their probabilities and potential impacts, and calculated at least a qualitative level of risk for each threat-vulnerability pair. Now your team needs to decide (or in some cases, help your senior management to decide) how to appropriately deal with each of the risks that have been identified.

The goal is to specify a rational set of security controls to appropriately manage the documented risks. In addition, the set of security controls that is specified must meet any externally-imposed information security requirements, including all applicable MUSC information security policies, and any additional legal, regulatory or accreditation requirements that your system is subject to.

Selecting a rational (optimal) set of controls requires a broad range of knowledge and skills. If the members of your risk assessment team do not have a sound, collective understanding of all the different techniques that can be used to control information security risks, and of the overall ability and capacity of the people within the MUSC enterprise to implement the various types of technical, operational, and administrative controls that should be considered, then your team will not be able to select and recommend an optimal set of controls.

Your assessment team should start by reviewing all of the threat-vulnerability pairs identified for your system, and their assessed risk levels.
Your team should also review all MUSC information security policies that are applicable to your system, and any additional legal, regulatory or accreditation requirements that your system may be subject to. For each threat-vulnerability pair, and for each external policy/regulatory requirement, your assessment team will need to discuss and evaluate control options. There may be many different control options for addressing each issue, and some control options may address multiple issues. The goal is to select an optimal set of controls -- one that meets all policy/regulatory requirements, and addresses all known risks in an acceptable manner, at the lowest overall cost to MUSC, and with the least overall impact on the MUSC enterprise. A cost-benefit analysis is generally needed to compare the various control alternatives, or in many cases, various alternative combinations of controls. Selecting an optimal set of controls requires your team to consider many different alternatives, and to carefully evaluate the alternatives in terms of their expected effectiveness, feasibility, and overall cost and impact. Refer to Appendix D for more information on the selection and evaluation of security controls. The end result of your assessment team's evaluation should be a prioritized list of recommended security controls. The priority that your assessment team assigns to each recommended control should be based on the importance of the issue(s) that the control would address. Issues with the highest rated risks, and issues that arise from a policy/regulatory requirement, should all be reflected in the recommended control priorities. Your control recommendations should document which issue(s) would be addressed by each control. If it is warranted by your security plan's overall cost or its potential organizational impact, then you should review the proposed plan with the appropriate senior management. 
If senior management questions your plan, then you may be asked to provide more details. You may be asked to show the details of your risk analysis, or the details of the cost-benefit analysis that led to your selection of controls.

We strongly recommend that you use your Risk Analysis Worksheet to record and prioritize the selected controls for each threat-vulnerability pair that your assessment team generated. See Appendix A for more information on how to use our recommended Risk Analysis Worksheet tool.

2.8.1 Selecting and Prioritizing Security Controls: Post-Implementation

If you are performing a Post-Implementation risk assessment, then your assessment team's evaluation and selection of new or modified security controls should be informed by their understanding of the system's current state, as gleaned from the most recent available evaluation of the effectiveness of the system's existing security controls.

For example, the assessment team should generally not select a new or modified control that has already been tried and has proven ineffective, unless the reasons that it failed are well-understood and can be avoided the next time around. Likewise, if they are evaluating an entirely new and different type of control, against a small modification to an existing control that has proved highly effective, the assessment team's choice should be obvious, assuming the costs and benefits of the two approaches are comparable.

2.9 Documenting the Security Plan

Now that a set of prioritized control recommendations has been documented, and if necessary approved by senior management, you need to create a security plan for your system. Be aware that anyone who is expected to help execute your plan should generally be afforded the opportunity to participate in its development.

The form and substance of a good security plan, and how the security plan should be documented, depend on which system life cycle stage the plan applies to.
If your (proposed) system is still in the Initiation stage, then your security plan simply needs to be reflected in the business plan for the system. If you don't have a written business plan, then obviously you don't need a written security plan. If your (proposed) system is in the Development/Procurement stage, then your security plan should be reflected in any written specifications for the system's development and/or procurement, including any RFP(s) associated with the system. In other words, if any of your expected security controls need to be designed into the system, or are expected to be procured as part of the system, then requirements and/or specifications for those controls should be included in the written requirements and/or specifications for the system. If your system is in the Implementation stage, then your security plan should be incorporated into your overall system implementation plan. For each of your prioritized, recommended controls, your system implementation plan should identify who is responsible for implementing, testing, and verifying the control, and it should document the specific resources needed to implement, test and verify each control. The implementation plan should document the planned time frame for the implementation of each control (including testing and verification). If security controls will require on-going operation and maintenance, then those resource requirements should also be documented in the implementation plan. The implementation plan should also schedule time for a final review of all of the system's security controls by appropriate compliance official(s); the plan should also allow for the possibility of needing time and resources for remedial action prior to go-live. If your system is in the Post-Implementation stage, then your security plan should consist of an implementation plan for any new or modified security controls that are being recommended now, as a result of the current risk assessment. 
For each new or modified control, the implementation plan should identify who is responsible for implementing, testing, and verifying the control, and it should document the specific resources needed to implement, test and verify each control. The implementation plan should document the planned time frame for the implementation of each new or modified control (including testing and verification). If the new or modified security controls will require on-going operation and maintenance, then those resource requirements should also be documented in the implementation plan. (Note: If there has been no prior documented risk assessment, then the current risk assessment report should document all security controls that are already implemented with the system, and who is responsible for their on-going operation and maintenance.)

In both the Implementation and Post-Implementation stages, we strongly recommend that you use a Security Plan Summary document to record who is responsible for implementing, testing and verifying each security control, to document the expected time frame for completion, and to note any on-going operational and/or maintenance requirements. See Appendix B for more information on how to use our recommended format for documenting your Security Plan Summary.

2.10 Communicating the Security Plan

Your security plan must be communicated to everyone who is expected to participate in its implementation. If you have allowed for appropriate participation in the plan's development by those who will be affected, then there should be no surprises. In addition, if warranted by your security plan's overall cost or its potential organizational impact, you should review the plan with the appropriate senior management.

3. Risk Assessment Report

MUSC's policies and standards require a documented (written) risk assessment report for certain systems.
The following sections provide guidelines for producing a risk assessment report that conforms to MUSC's policies and standards. Normally one or more members of the risk assessment team is assigned responsibility for reporting the team's findings.

3.1. Core Assessment and Reporting Guidelines

The first set of guidelines presented in this section apply to all risk assessment reports, regardless of the system life cycle stage to which the assessment applies.

3.1.1. System Identification

All risk assessment reports should adequately identify and describe the system that is the subject of the assessment, by reporting the following information:

● Name of the System - if not the exact name that the system is registered under in the MUSC Systems Registry, then it should be followed parenthetically by a cross-reference to that registered name
● System Owner - the name and email address of the System Owner, as registered in the MUSC Systems Registry; you should also document the System Owner's role if ownership is role-based, for example, the chair of the system's governance committee or council
● Effective Date of the Assessment - the date on which the findings documented in the assessment report were finalized
● Purpose of the Assessment - the system life cycle stage to which the report applies (one of the following: Initiation, Development/Procurement, Implementation, or Post-Implementation; if Post-Implementation, then also state the specific environmental, operational, regulatory, or policy change(s) that triggered the need for this post-implementation assessment)
● Project Manager - the name and email address of the project manager assigned to the overall system implementation project (applicable only if this is a risk assessment for the Implementation stage in the system's life cycle)
● Purpose and Major Function(s) of the System - a statement of the system's high-level purpose in relation to MUSC's missions, and a brief list of the system's major functions

All of the above information should be documented in the cover page for the assessment report. See Exhibit 1 for a template (MS-Word), and Exhibit 1A for an example (PDF).

3.1.2. Risk Assessment Participants

Recall that one of the goals of a risk assessment is demonstrating due diligence. The assessment report should therefore document who participated in the assessment, and who authored the report that documents the assessment team's findings. The following information should be reported on the cover page:

● Assessment Team Members - a list of the names, email addresses, and roles of everyone who participated in or contributed to the assessment
● Assessment Report Author(s) - a list of the names and email addresses of the principal author(s) of the assessment report itself

3.1.3. System Characterization

The assessment team's overall (summary) classifications of the system in terms of confidentiality, integrity, and availability should be reported on the cover page. These classifications should follow the FIPS 199 standard. See Appendix C for more information on FIPS 199.

In the course of the risk assessment, your team should have had access to diagrams and tables that document the system's overall architecture and boundaries (see Section 2.3). These high-level system architecture documents should be attached to your assessment report, and referenced on the cover page.

3.2. Guidelines for Specific System Life Cycle Stages

The remaining guidelines in this section apply to the different types of risk assessment reports that are required at specific system life cycle stages.

3.2.1. Initiation Stage

At the Initiation stage, a risk assessment report should contain one additional section, beyond the cover page and the attachments described in Section 3.1. This one additional section should simply list all of the major types of security controls that are expected to be required with the proposed system.

3.2.2. Development/Procurement Stage

At the Development/Procurement stage, a risk assessment report should contain one additional section, beyond the core elements described in Section 3.1. This one additional section should simply list all of the security controls that are required to be developed/procured with the proposed system.

3.2.3. Implementation Stage

At the Implementation stage, a risk assessment report should contain several additional sections, beyond the core elements described in Section 3.1. Note that your completed Risk Analysis Worksheet and your completed Security Plan Summary document are the recommended tools for meeting all three of the general reporting requirements listed below.

Risk Analysis: The assessment report should list all of the threat-vulnerability pairs that the assessment team identified as being non-negligible for your system, along with any other risk issues (including policy or regulatory requirements), and the team's rated level of risk for each issue.

Prioritized List of Selected Controls: The assessment report should document the prioritized list of security controls selected for implementation with the system, based on the results of the assessment team's risk analysis (which included any policy, regulatory or accreditation requirements that your system is subject to).

Security Plan Summary: For each security control selected for the system by the assessment team, the assessment report should identify who is responsible for implementing, testing, and verifying the control. The plan should document the planned time frame for the implementation of each control (including testing and verification). If security controls will require on-going operation and maintenance, then those resource requirements should also be documented in the security plan summary.
Additional detail on the security plan (for example, resource requirements) is expected to be found in the overall implementation plan for the system; the assessment report should document (on the cover page) the (lead) project manager for the overall system implementation project.

3.2.4. Post-Implementation Stage

At the Post-Implementation stage, a risk assessment report should contain several additional sections, beyond the core elements described in Section 3.1. The first additional section documents the assessment team's understanding of the system's current (baseline) state when the Post-Implementation assessment was conducted. Some of its content will depend on whether a previous risk assessment document exists.

Assessment Baseline: If a significant length of time has elapsed since the system's existing security controls have been implemented, then this section in the report should include a summary of the results of the most recent evaluation of the system's existing security controls. The remaining content in this section will depend on whether or not a previous risk assessment has been conducted and documented, and whether that documentation still exists:

● If a previous documented risk assessment exists: List any new or modified security controls that have been implemented since the previous documented assessment, and document who is responsible for the on-going operation and maintenance of these new or modified controls.
● If no prior documented risk assessment exists: List all existing security controls that are already implemented with the system, and who is responsible for their on-going operation and maintenance.

The remainder of the risk assessment report should be focused on the specific risk issues that were within your assessment team's scope (see Section 2.3.1), and on the security plan that your assessment team developed to address these specific risk issues.
Your assessment team's completed Risk Analysis Worksheet and completed Security Plan Summary document are the recommended tools for meeting all three of the general reporting requirements listed below.

Risk Analysis: The assessment report should list all of the threat-vulnerability pairs that the assessment team identified as being non-negligible for your system, along with any other risk issues (including policy or regulatory requirements), and the team's rated level of risk for each issue.

Prioritized List of Selected Controls: The assessment report should document the prioritized list of new or modified security controls selected for implementation with the system, based on the results of the assessment team's risk analysis (which included any policy, regulatory or accreditation requirements that your system is subject to).

Security Plan Summary: For each new or modified security control selected for the system by the assessment team, the assessment report should identify who is responsible for implementing, testing, and verifying the control. The plan should document the planned time frame for the implementation of each new or modified control (including testing and verification). If security controls will require on-going operation and maintenance, then those resource requirements should also be documented in the security plan summary.

Appendix A: Risk Analysis Worksheet

Our recommended Risk Analysis Worksheet is a simple spreadsheet that lists an identified (non-negligible) threat-vulnerability pair for your system on each row, along with its assessed probability (frequency), impact, and risk level, followed by the specific security controls that have been selected to address the threat-vulnerability pair (if any), and the overall priority assigned to the selected control(s).
The Risk Analysis Worksheet is also a convenient place to document any additional (external) security requirements that apply to your system, including policies, regulations, and accreditation standards. As necessary, document each such requirement as a pseudo-"Threat-Vulnerability" issue (whose "Risk Level" is automatically rated High) and then evaluate, select, and document controls to address the issue accordingly.

Our recommended Risk Analysis Worksheet has the following columns:

● Threat-Vulnerability
● Probability/Frequency
● Impact
● Risk Level
● Selected Control(s)
● Control Priority(ies)
● Comments

A Risk Analysis Worksheet template (MS-Excel) is provided as Exhibit x, along with completed examples for some completely imaginary but hopefully representative systems (Exhibits x, x).

Appendix B: Security Plan Summary

Our recommended Security Plan Summary is a simple spreadsheet that lists, in priority order, all of the security controls that your assessment team (with senior management's involvement if appropriate) has selected for implementation with the system. For each selected control, the spreadsheet names the individual or group responsible for implementing, testing and verifying the control, and documents the planned time frame for the implementation of each control (including testing and verification). If the security control will require on-going operation and maintenance, then a brief statement of those requirements should also be documented.

Our recommended Security Plan Summary has the following columns:

● Security Control
● Implementation Priority
● Responsible Person/Group
● Start Date
● End Date
● Operational/Maintenance Requirements

A Security Plan Summary template (MS-Excel) is provided as Exhibit x, along with completed examples for a few completely imaginary but hopefully representative systems (Exhibits x, x).
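For teams that prefer to keep these two spreadsheets in a script or database, the column layouts in Appendices A and B can be sketched as simple record types. This is only an illustrative sketch: the class and function names, the use of integers for priorities (with 1 assumed to be the highest), and all example data are hypothetical and are not part of the MUSC templates.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class WorksheetRow:
    """One row of the Risk Analysis Worksheet (columns from Appendix A)."""
    threat_vulnerability: str
    probability: Optional[str]   # free-text rating chosen by the team
    impact: Optional[str]
    risk_level: str
    selected_controls: List[str] = field(default_factory=list)
    control_priority: Optional[int] = None  # assumption: 1 = highest priority
    comments: str = ""


def external_requirement(description: str, controls: List[str],
                         priority: int, comments: str = "") -> WorksheetRow:
    """Record a policy, regulation, or accreditation standard as a
    pseudo threat-vulnerability row whose risk level is always High."""
    return WorksheetRow(description, None, None, "High",
                        list(controls), priority, comments)


@dataclass
class PlanEntry:
    """One row of the Security Plan Summary (columns from Appendix B)."""
    security_control: str
    implementation_priority: int  # assumption: 1 = highest priority
    responsible: str
    start_date: date
    end_date: date
    operational_requirements: str = ""


def plan_summary(entries: List[PlanEntry]) -> List[PlanEntry]:
    """Return the entries in priority order, as Appendix B lists them."""
    return sorted(entries, key=lambda e: e.implementation_priority)


# Hypothetical example data, not taken from any real system.
rows = [
    WorksheetRow("Stolen laptop / unencrypted disk", "Moderate", "High",
                 "High", ["Full-disk encryption"], 1),
    external_requirement("Regulatory audit-log requirement",
                         ["Centralized audit logging"], 2),
]
plan = plan_summary([
    PlanEntry("Centralized audit logging", 2, "Server team",
              date(2005, 7, 1), date(2005, 8, 15), "Weekly log review"),
    PlanEntry("Full-disk encryption", 1, "Desktop support",
              date(2005, 6, 1), date(2005, 6, 30)),
])
for entry in plan:
    print(entry.implementation_priority, entry.security_control, entry.responsible)
```

Keeping the external requirements in the same structure as the threat-vulnerability rows mirrors the worksheet's approach: every issue, whatever its origin, ends up with a risk level, selected controls, and a priority that can feed the Security Plan Summary.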
Appendix C: FIPS 199

In February 2004, NIST published a document with the title "Standards for Security Categorization of Federal Information and Information Systems" as Federal Information Processing Standards Publication 199 (FIPS 199). This document established a simple impact rating standard that can be used equally well inside and outside the federal government. FIPS 199 defines a simple, three-level rating system for potential impacts. The three ratings (Low, Moderate, and High) are defined as follows:

The potential impact is Low if: The loss of confidentiality, integrity, or availability could be expected to have a limited adverse effect on organizational operations, organizational assets, or individuals.

Amplification: A limited adverse effect means that, for example, the loss of confidentiality, integrity, or availability might: (i) cause a degradation in mission capability to an extent and duration that the organization is able to perform its primary functions, but the effectiveness of the functions is noticeably reduced; (ii) result in minor damage to organizational assets; (iii) result in minor financial loss; or (iv) result in minor harm to individuals.

The potential impact is Moderate if: The loss of confidentiality, integrity, or availability could be expected to have a serious adverse effect on organizational operations, organizational assets, or individuals.

Amplification: A serious adverse effect means that, for example, the loss of confidentiality, integrity, or availability might: (i) cause a significant degradation in mission capability to an extent and duration that the organization is able to perform its primary functions, but the effectiveness of the functions is significantly reduced; (ii) result in significant damage to organizational assets; (iii) result in significant financial loss; or (iv) result in significant harm to individuals that does not involve loss of life or serious life threatening injuries.
The potential impact is High if: The loss of confidentiality, integrity, or availability could be expected to have a severe or catastrophic adverse effect on organizational operations, organizational assets, or individuals.

Amplification: A severe or catastrophic adverse effect means that, for example, the loss of confidentiality, integrity, or availability might: (i) cause a severe degradation in or loss of mission capability to an extent and duration that the organization is not able to perform one or more of its primary functions; (ii) result in major damage to organizational assets; (iii) result in major financial loss; or (iv) result in severe or catastrophic harm to individuals involving loss of life or serious life threatening injuries.

Appendix D: Security Controls

[objectives to consider (prevention, detection, recovery); types to consider (technical, operational, administrative); cost-benefit analysis; complementarity; sufficiency; defense in depth.]
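Returning to the FIPS 199 ratings in Appendix C: when a system holds several types of information, FIPS 199 itself (not these guidelines) directs that the system's rating for each security objective be the highest rating among its information types, often called the "high water mark". The sketch below illustrates that rule under stated assumptions: the function name, the dictionary layout, and the example information types are hypothetical, and the "Not Applicable" confidentiality value that FIPS 199 allows for individual information types is left out for brevity.

```python
# Ordering of the three FIPS 199 impact ratings.
LEVELS = {"Low": 1, "Moderate": 2, "High": 3}
OBJECTIVES = ("confidentiality", "integrity", "availability")


def system_category(information_types):
    """Return the per-objective high-water-mark rating for a system.

    information_types maps an information type name to a dict with one
    rating ("Low", "Moderate", or "High") per security objective.
    """
    if not information_types:
        raise ValueError("at least one information type is required")
    return {
        objective: max(
            (ratings[objective] for ratings in information_types.values()),
            key=LEVELS.__getitem__,
        )
        for objective in OBJECTIVES
    }


# Hypothetical information types for an imaginary system.
example = {
    "patient records": {
        "confidentiality": "High", "integrity": "Moderate", "availability": "Moderate",
    },
    "public clinic schedule": {
        "confidentiality": "Low", "integrity": "Moderate", "availability": "Low",
    },
}
summary = system_category(example)
print(summary)
```

The resulting three ratings are the kind of overall (summary) classification that Section 3.1.3 asks the assessment team to report on the cover page.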