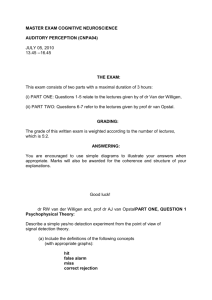

Auditory Objects[1] - University of Toronto

![Auditory Objects[1] - University of Toronto](http://s3.studylib.net/store/data/007865137_2-080f9ab34dcb387399b5423313b9565d-768x994.png)