advertisement

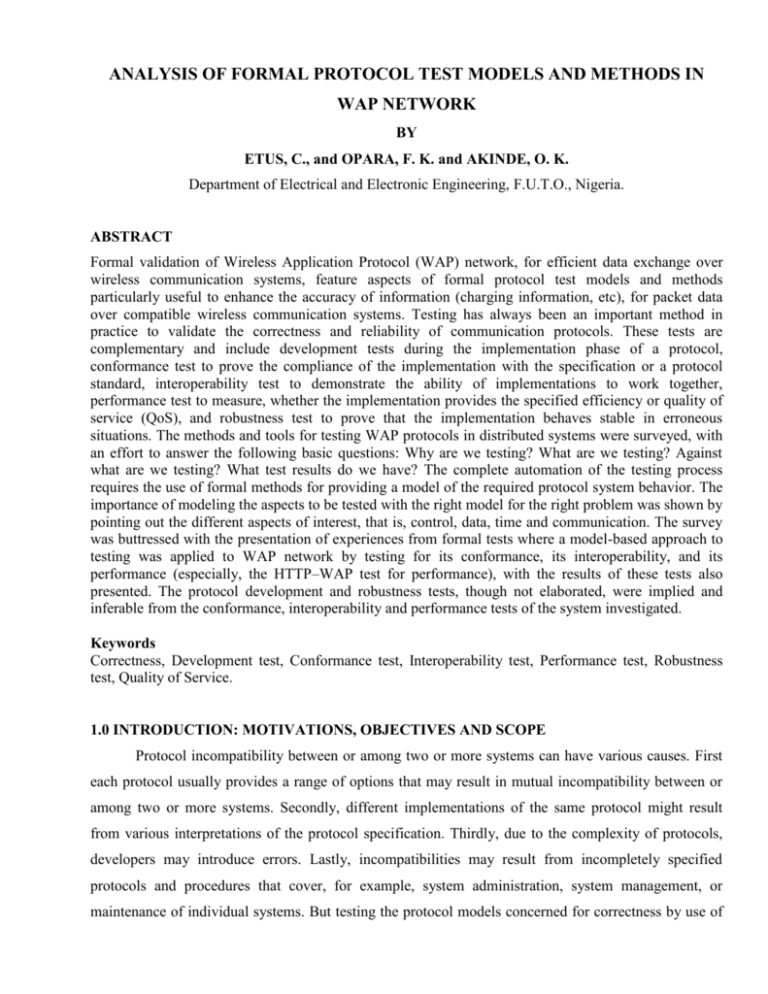

ANALYSIS OF FORMAL PROTOCOL TEST MODELS AND METHODS IN WAP NETWORK

BY ETUS, C., OPARA, F. K., and AKINDE, O. K.
Department of Electrical and Electronic Engineering, F.U.T.O., Nigeria.

ABSTRACT

Formal validation of the Wireless Application Protocol (WAP) network, aimed at efficient data exchange over wireless communication systems, draws on formal protocol test models and methods that are particularly useful for improving the accuracy of information (e.g. charging information) for packet data over compatible wireless communication systems. Testing has always been an important practical method for validating the correctness and reliability of communication protocols. The main kinds of test are complementary: development tests during the implementation phase of a protocol; conformance tests, to prove that the implementation complies with the specification or protocol standard; interoperability tests, to demonstrate that implementations can work together; performance tests, to measure whether the implementation provides the specified efficiency or quality of service (QoS); and robustness tests, to prove that the implementation behaves stably in erroneous situations. The methods and tools for testing WAP protocols in distributed systems were surveyed in an effort to answer four basic questions: Why are we testing? What are we testing? Against what are we testing? What test results do we have? Complete automation of the testing process requires formal methods that provide a model of the required protocol system behavior. The importance of modeling the aspects to be tested with the right model for the right problem is shown by pointing out the different aspects of interest, namely control, data, time and communication.
The survey is supported by experiences from formal tests in which a model-based approach to testing was applied to a WAP network to test its conformance, its interoperability and its performance (especially the HTTP–WAP performance test); the results of these tests are also presented. Development and robustness tests, though not elaborated, are implied by and can be inferred from the conformance, interoperability and performance tests of the system investigated.

Keywords: Correctness, Development test, Conformance test, Interoperability test, Performance test, Robustness test, Quality of Service.

1.0 INTRODUCTION: MOTIVATIONS, OBJECTIVES AND SCOPE

Protocol incompatibility between two or more systems can have various causes. First, each protocol usually provides a range of options that may result in mutual incompatibility between systems. Second, different implementations of the same protocol might result from different interpretations of the protocol specification. Third, because of the complexity of protocols, developers may introduce errors. Lastly, incompatibilities may result from incompletely specified protocols and procedures covering, for example, system administration, system management, or maintenance of individual systems. Testing the protocol models concerned for correctness by means of formal methods, however, can enhance compliance with standards and ensure protocol compatibility. The analysis of WAP protocol test models and methodologies in this paper goes a long way toward buttressing and confirming these points.

2.0 BACKGROUND

Traditionally, the most commonly used methods for ensuring the correctness of a system have been simulation and testing. In many cases, "correct" is taken to mean equivalent, and the goal would certainly be met by a general technique for determining equivalence [Laycock, 1993].
While both have their strong points (simulation for evaluating functionality early in the design, and testing for ascertaining the behavior of the actual finished product), they clearly have significant limitations. For one, neither simulation nor testing can be exhaustive for any reasonably complex system, leaving open the possibility of unexpected behavior in situations that have not been explored. However, testing provides validation of both hardware and software, not just verification, which is limited. Moreover, testing takes up a large part of development costs, and errors discovered late in the development process can be prohibitively expensive. Testing is especially critical for concurrent systems, which often present intricate interactions between components that are difficult to follow and evaluate without formal and automated support. Some errors occur only for specific execution sequences that are difficult, if not impossible, to reproduce or debug, which makes exhaustive analysis necessary. Moreover, for certain critical systems, the application of formal methods has become a requirement, both for structuring the development process and for verifying the resulting product. For a survey of the state of the art in the field, including numerous industrial examples, see [Clarke and Wing, 1996]. Testing is therefore the dominant technique used in industry to validate that developed protocols conform to their specifications; it seeks to achieve correctness by detecting all the faults present in an implementation so that they can be removed. Testing is a trade-off between increased confidence in the correctness of the implementation under test (IUT) and constraints on the time and effort that can be spent on testing. The coverage, or adequacy, of the test suite therefore becomes a very important issue.
The quality, adequacy or compatibility of tests for a given specification is commonly evaluated by checking the extent to which the tests satisfy a particular test coverage criterion. A coverage criterion guides a testing strategy and sets requirements for a test suite based on coverage of certain characteristics of the given specification. Characteristics considered when defining test coverage criteria include conformance requirements, specification structure, and input domains [Graham and Ould, 1990]. The basic principle of testing for correctness is the selection of test cases or models that satisfy some particular criterion. The criteria used for testing computer systems have changed considerably in nature and scope as computer systems, and our knowledge of their behaviour, have developed. The essential difference between general software testing and protocol testing is that protocols are reactive systems: instead of a single set of input parameters, the input to a reactive system is an infinite sequence of input events, each carrying a certain number of input parameters.

3.0 FORMAL PROTOCOL TEST MODELS AND METHODS

Most communicating systems are tested for conformance of their protocol implementation against its specification (critical requirements).
In addition to conformance testing, other types of protocol testing have been proposed [Dssouli et al, 1999]. These include interoperability testing, to determine whether two or more implementations will actually inter-operate and, if not, why (note that interoperability testing requires N × N test campaigns between N different implementations, whereas conformance testing against the protocol specification requires only N campaigns); performance testing, to measure the performance characteristics of an implementation, such as its throughput and responsiveness under various conditions; and robustness testing, to determine how well an implementation recovers from various error conditions and abnormal situations. Hence, protocol testing is oriented either at the conformance of an IUT with respect to the specification of the network component, at the interoperability between the IUT and other network components, at the quality of service (performance) of the IUT, or at its robustness. These test orientations are now illustrated in the light of protocol models and methods.

3.1 Protocol Model Test for Conformance

Conformance testing is one of the well-established methods in testing. It is used to check that an implementation meets its functional requirements, i.e. that the implementation under test (IUT) is functionally correct. Hence, it targets the correctness of the temporal ordering of the exchanged protocol data units (PDUs) or abstract service primitives (ASPs). A good way of achieving successful cooperation among communication systems is to define reference standards or models. These standards must then be checked for correctness, and so must their implementations. Verification is the activity concerned with analyzing specification properties in order to detect possible inconsistencies.
Conformance testing is the activity concerned with experimenting with an implementation in order to check its correctness with respect to a given specification. According to the WAP Forum [WAP forum, 1998], protocol implementations (e.g. of the WAP specifications) must be tested against the standards in two tracks, or phases: first for their conformance and second for their compliance (see the next section for compliance issues). The conformance track deals with conformance, or certification, testing, which focuses on the protocol application level. The application environment under test may operate on either the client or the server side and is checked against the standards. We present experiences from a case study in which a model-based approach to black-box testing is applied to verify that a Wireless Application Protocol (WAP) gateway conforms to its specification (see [Hessel and Pettersson, 2006]). Recently, the WAP Forum has announced the availability of certification test suites. The gateway in the case study is the WAP gateway developed by Ericsson and used in mobile telephone networks to connect mobile phones with the Internet. Testing focused on the software implementing the Wireless Session Protocol (WSP) and Wireless Transaction Protocol (WTP) layers of the WAP protocol [WAP Forum, 2001]. These layers, and their surrounding environment, are described as a network of timed automata [Alur and Dill, 1994].

Figure 1: Overview of the WAP formal model

The WAP gateway model focuses on the WTP and WSP software layers, which have been modeled in as much detail and as close to the WAP specification as possible. Other parts of the gateway are modeled more abstractly, but without loss of the externally observable behavior that affects the WTP and WSP layers. We have chosen the connection-oriented version of the WAP protocol, in which several outstanding transactions can be grouped into a session.
The model was made with the intention of generating real system tests that can be executed over a physical connection. Obviously, making this kind of system model and system test is much more complex than testing each layer separately. In Figure 1, an overview of the modeled automata and their conceptual connections is shown as a flow graph. The nodes represent timed automata [Alur and Dill, 1994] and the edges represent synchronization channels or shared data, divided into two groups (with small arrows indicating the direction of communication). The model is divided into two parts: the gateway model and the test environment model. The test environment consists of the two automata Terminal and HTTP Server. The gateway model is further divided into a WTP part, a WSP part, and globally shared data and timers. The WTP part consists of the Transaction Service Access Point (TSAP) and two instances, WTP0 and WTP1, of the WTP protocol; the WSP part consists of a WSP Session Manager, two instances, Method 0 and Method 1, of the WSP methods, and a Session Service Access Point (SSAP). The idea of the model is to let the Terminal automaton model a mobile device that non-deterministically stimulates the gateway with input and receives its output. In a typical scenario, the Terminal requests a WML page from a web server. The request goes through an instance of the WTP and WSP layers and on to the web server. If the page exists, it is sent back through the gateway and is finally received by the Terminal. A complete test bed is presented, which includes generation and execution of test cases. It takes as input a model and a coverage criterion expressed as an observer, and returns a verdict for each test case [Hessel and Pettersson, 2006]. The validity of the tests has been demonstrated in a case study in which test cases were executed in a real test environment at Ericsson.
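The test bed ends by assigning a verdict to each test case. As a minimal sketch of that last step only, assume that an abstract test case is reduced to a list of (stimulus PDU, expected response PDU) pairs and that the IUT is wrapped as a Python callable; both are illustrative assumptions, since the real test cases are timed traces executed through Ericsson's tools. A verdict can then be assigned by replaying the stimuli and comparing each observed response with the one the model predicts. The toy IUT and the PDU names are hypothetical.

```python
from enum import Enum
from typing import Callable, List, Optional, Tuple


class Verdict(Enum):
    PASS = "pass"
    FAIL = "fail"


# One step of a hypothetical abstract test case: the PDU sent to the IUT and
# the PDU the model predicts in reply (None when no reply is expected).
Step = Tuple[str, Optional[str]]


def run_test_case(steps: List[Step], iut: Callable[[str], Optional[str]]) -> Verdict:
    """Replay an abstract test case against a black-box IUT and compare outputs."""
    for stimulus, expected in steps:
        if iut(stimulus) != expected:
            return Verdict.FAIL  # first deviation from the model decides the verdict
    return Verdict.PASS


def make_toy_iut(reack_retransmissions: bool) -> Callable[[str], Optional[str]]:
    """Hypothetical stand-in for a gateway: acknowledges each Invoke it receives.

    If reack_retransmissions is False, a retransmitted Invoke is silently dropped.
    """
    seen = set()

    def iut(pdu: str) -> Optional[str]:
        if pdu in seen and not reack_retransmissions:
            return None
        seen.add(pdu)
        return "Ack"

    return iut


test_case = [("Invoke#1", "Ack"), ("Invoke#1", "Ack")]  # invoke, then its retransmission
print(run_test_case(test_case, make_toy_iut(True)))   # Verdict.PASS
print(run_test_case(test_case, make_toy_iut(False)))  # Verdict.FAIL
```

Stopping at the first deviation mirrors how a black-box test usually reports a failed verdict; a real test bed would also log the observed trace for diagnosis.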
The test generation techniques and coverage criteria used are of industrial strength: complete test suites have been generated for an industrial application, and discrepancies have been found between the model and the real system. The test bed includes existing Ericsson tools for test-case execution.

Figure 2: Overview of the setup used for testing the WAP gateway

To generate test suites, the COXER tool is used. COXER is a new test-case generation tool based on the real-time model checker UPPAAL [Larsen et al, 1997], which it extends with capabilities for generating test suites. It takes as input the timed automata model of the WAP gateway described above and a coverage criterion specified as a parameterized observer (the .xml and .obs files, respectively, in Figure 2). The output of COXER is a set of abstract test cases (or test specifications) represented as timed traces, i.e., alternating sequences of states and delays or discrete transitions, in the output format of the UPPAAL tool. To model the many sequence numbers (drawn from a large domain) used in the protocol, an abstraction technique was used. It preserves the relations needed when comparing sequence numbers in the WAP protocol while significantly reducing the size of the analyzed state space. We believe the implemented abstraction technique will prove useful for modeling and analyzing other similar protocols with sequence numbers, in particular in the context of model-based testing. These similar protocols include the User Datagram Protocol (UDP), the Transmission Control Protocol (TCP) and the Internet Protocol (IP). Table 1 shows the result of the test suite generation. Each row of the table gives the numbers for a given coverage criterion and the automata it covers (used as input). For example, WTP denotes WTP0 and WTP1, for which the tool found 63 coverage items, i.e., edges in the WTP template.
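Coverage items are what a criterion asks the test suite to exercise. As a minimal sketch, assume an automaton is given as a list of (source, action, target) edges: under edge coverage each edge is one item, while under switch coverage each pair of consecutive edges (the first ending where the second starts) is one item, which is why switch coverage generally needs more test cases. The automaton below is hypothetical and only loosely named after WTP responder states; it is not the model used in [Hessel and Pettersson, 2006].

```python
from typing import List, Set, Tuple

Edge = Tuple[str, str, str]  # (source location, action, target location)


def edge_items(edges: List[Edge]) -> Set[Edge]:
    """Edge coverage: every edge of the automaton is one coverage item."""
    return set(edges)


def switch_items(edges: List[Edge]) -> Set[Tuple[Edge, Edge]]:
    """Switch coverage: every pair of consecutive edges is one coverage item."""
    return {(e1, e2) for e1 in edges for e2 in edges if e1[2] == e2[0]}


# Hypothetical, simplified responder automaton (not taken from the WTP specification).
toy_wtp = [
    ("LISTEN", "RcvInvoke", "INVOKE_RESP_WAIT"),
    ("INVOKE_RESP_WAIT", "TR-Invoke.res", "RESULT_WAIT"),
    ("RESULT_WAIT", "RcvInvoke(retransmitted)", "RESULT_WAIT"),
    ("RESULT_WAIT", "TR-Result.req", "RESULT_RESP_WAIT"),
    ("RESULT_RESP_WAIT", "RcvAck", "LISTEN"),
]
print(len(edge_items(toy_wtp)), len(switch_items(toy_wtp)))  # 5 7
```

Even in this five-edge example, the self-loop on RESULT_WAIT and the branching out of that location make the number of switches exceed the number of edges.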
To cover the WTP edges, a test suite of 16 test cases is produced. The test suite has 1562 transitions in total and interacts with the system 92 times, i.e., 92 PDUs are communicated. The table shows the results for the other test criteria as well. As expected, the switch coverage criterion requires many more test cases to be executed than edge coverage. It is also more efficient to execute the test suites covering all templates at once (WTP, Session Manager and Method) than to execute all the individual test suites. For example, the test suite with edge coverage in all templates sends 142 PDUs, whereas the individual suites send 225 PDUs in total; for switch coverage, the figures are 467 and 555 PDUs, respectively.

Table 1: Test generation and execution results

The test cases presented in Table 1 have been executed on an in-house version of the WAP gateway at Ericsson. As shown in the rightmost column of Table 1, most of the test cases passed. A few tests failed because of two discrepancies, one in the WTP automaton and one in the Session Manager automaton. The first discrepancy is in the WSP layer: the Session Manager is modeled so that it does not accept any new Connect messages. A careful reading of the WAP specification after this discrepancy was found showed that the Session Manager is allowed to accept a new Connect message and replace the current session if the layer above agrees. This error in the model explains the discrepancy found with the test suite covering the edges of the Session Manager, and the seven discrepancies found when executing the test suite with switch coverage of the Session Manager. The second discrepancy is a behavior present in the WTP model but not in the tested WAP gateway: no acknowledgement is sent from the WTP state RESULT WAIT when a (WTP) Invoke is retransmitted and an acknowledgement has already been sent.
Retransmitting the acknowledgement is required by the WTP specification [WAP Forum, 2001] but is not performed by the implementation. This discrepancy was found when running the test suites covering both the edge and the switch criteria of the WTP template. Both discrepancies were already found by the edge-covering test suites, one in the suite for WTP and the other in the suite for the Session Manager. The test suite with switch coverage finds the same discrepancies, but many times (as many times as the erroneous edges appear in some switch). The suite with projection coverage did not find any discrepancies.

Table 2: Analysis of dynamic behaviour computed

Table 2 and Table 3 summarize the features covered by the computed WSP test suite. In Table 2, the column "Function" represents service functionalities. At the WSP level, methods can be invoked by three basic PDUs, namely the Get PDU, the Post PDU and the Reply PDU. The current method reference is encapsulated in these PDUs. For each function, the PDUs involved in its operation, or the related capabilities, are presented. Some of the functionalities specified in the standard are mandatory, while others are optional. Most of the mandatory features (80%) have been computed. Capabilities represent a set of service facilities and parameter settings related to the operation of the service provider. The standard defines different kinds of facilities, but the service provider may recognize additional ones. Examples of facilities are Aliases, Extended methods and Protocol options [Koné, 2003]. The basic methods of WAP correspond to those defined in the HTTP/1.1 standard, and the extended methods correspond to those beyond HTTP/1.1; proprietary methods fall into this category. During the connection, the peer entities can perform a capabilities negotiation in order to agree on a common communication profile.
The Connect PDU can be used to specify the capabilities requested by the initiator, and the ConnectReply PDU contains the capabilities acknowledged by the responder. 83% of the dynamic optional functions have been treated. 100% of the standard-defined PDUs and 100% of the standard-defined ASPs are involved in the test suite. Some parts of test cases are repeated in the overall test suite; for instance, all the Method Invocation facilities have basically the same operation scheme. This guarantees that each elementary protocol operation can be exercised by the computed test suite.

Table 3: Percentage of computed features

3.2 Protocol Model Test Methodology for Interoperability

The problem of interoperability, or compliance, arises from the possible heterogeneity of computer systems. One would like computer products to be open systems, so that they can be interchanged with few constraints and can interwork with systems from other manufacturers. In the context of communication systems, the interoperability problem occurs when at least two entities specified or implemented separately are required to co-operate. Substantial efforts have been undertaken in protocol engineering to ensure communication systems of good quality. But computer systems are increasingly complex, and in practice it is difficult to test them exhaustively. Moreover, these systems may have different manufacturer profiles, which may be incompatible. Consequently, one cannot guarantee that such systems, however conformant they may be, can successfully interwork. The occurrence of an unexpected interaction reveals an error. Testing different implementations together in order to check their ability to interwork is therefore referred to as interoperability testing.

Figure 3: Example of Interoperating Wireless Network

According to the WAP Forum [WAP forum, 1998], protocol implementations (e.g.
wireless network standards such as the WAP specifications) must be tested against the standards in two tracks, or phases, to check their conformance and their compliance respectively. Track one deals with certification, or conformance, testing, as discussed in Section 3.1 above. Track two deals with compliance, or interoperability, testing at the protocol level: implementations are tested through the lower-level Service Access Points in order to check protocol operations for compliance with the standard. Until recently, the main developments have concerned certification or conformance issues, with little or no development regarding compliance issues (protocol inter-operation), which are the primary concern of this section. Organizations such as the Standards Promotion and Application Group (SPAG) and the ATM Forum [ATM Forum, 2003] have contributed to clarifying interoperability testing issues. The generic design methodology adopted for interoperability testing of the WAP wireless network standard, called the Top approach [Balliet and Eloy, 2000; Koné and Castanet, 2000; Koné and Castanet, 2001], handles interoperability test automation from specifications to test execution via a CORBA platform, and follows the recommendations of these organizations, mainly for testing architecture aspects. The other aspects concern test cases computed automatically from the formal model of the protocol specifications. Methods from the literature generally compute interoperability test cases on the basis of a behavior graph modeling the communicating entities [Cavalli and Lima, 1997; Fukada et al, 1997; Lee et al, 1993; Rafiq and Cacciari, 1999; Ulrich and Chanson, 1995]. These methods have been interesting contributions to automatic test generation. In the future, however, the need to cope with the increasing complexity due to the powerful features of new communication systems, together with concurrency, will grow.
The Top approach (implemented in the tool named Tescoms [Balliet and Eloy, 2000; Koné and Castanet, 2000]) tackles this problem with an on-the-fly computation approach. This on-the-fly paradigm has been applied successfully to complex real-time specifications [Koné, 2001], and its applicability to the interoperability of the WAP protocols [Koné, 2002; Koné and Thomesse, 2000] has recently been presented, with results showing the analysis and production of compliance / interoperability test suites for the Wireless Session Protocol (WSP) layer [Koné, 2003]. In the WSP specifications, implementation features are organized in groups of functionality (Session creation, Session suspend/resume, Push facilities, etc.). Two kinds of features are specified: mandatory and optional. Mandatory features must be implemented by both client and server. In the WSP standard, each optional functionality has been specified as a whole, independently of the others. This style of specification facilitates the Static Interoperability Review (SIR). To define the target interoperability tests, it is enough to compare the client and server Implementation Conformance Statements (ICS) and select the intersection of the implemented options. The guide to the WAP ICS [WAP forum, 1998] has been used as a basis for test selection. Optional services are mainly the Push services and the Method Invocation facilities. During the SIR one can observe that most of the optional services can be invoked only after connection establishment (the Suspend/Resume service is a special case that can be started before). Instead of creating one test case per option, one could imagine computing all these optional behaviors at once, after connection establishment. In practice, however, implementations do not have the same ICS, so it is reasonable to compute optional services separately.
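The Static Interoperability Review described above amounts to a set computation. As a minimal sketch, assume each Implementation Conformance Statement is reduced to a set of feature names (the names and ICS contents below are illustrative, not taken from the WAP ICS guide): every mandatory feature is a test target, and an optional feature is a target only if both the client and the server declare it, with one target per option since implementations rarely share the same ICS.

```python
from typing import List, Set


def select_test_targets(mandatory: Set[str], client_ics: Set[str], server_ics: Set[str]) -> List[str]:
    """Return one interoperability test target per mandatory feature, followed by
    one per optional feature implemented by both the client and the server."""
    shared_options = (client_ics & server_ics) - mandatory
    return sorted(mandatory) + sorted(shared_options)


# Hypothetical ICS contents.
mandatory = {"Session creation", "Session release"}
client = mandatory | {"Push", "Suspend/Resume"}
server = mandatory | {"Suspend/Resume", "Confirmed push"}
print(select_test_targets(mandatory, client, server))
# ['Session creation', 'Session release', 'Suspend/Resume']
```

Options declared by only one side (here Push and Confirmed push) produce no interoperability test target, because there is no peer against which to exercise them.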
Even if test patterns are produced automatically by a tool, the test purposes must first be defined formally, and that is done by hand. The Tescoms tool computes the client and server WAP specifications according to the test purpose. It produces as output a test pattern to be used in experimentation for the expected feature (in this example, connection establishment and connection release). Recall that the test purpose is an abstract definition of the expected behavior, which can be modeled with a finite automaton, presented here as a sequence of transitions (one line per transition). Two examples of test purposes illustrate the experiment with the WSP layer. The first example concerns a mandatory service (session creation and session release). The second example (the Push service) is optional. In addition, we describe the Suspend/Resume test case, which is much longer. Session creation starts with an S-Connect-req (connection request) and ends with an S-Connect-cnf (connection confirmation). Session release starts with an S-Disconnect-req initiated by either the client or the server, and ends with the notification of the disconnection. The syntax is as follows: each line/transition describes an interaction as well as the implementation that executes it. The specifications amount to 3000 lines of code and cannot be included in this paper.

Figure 4: Structure of WAP services

The structure of the WAP model (Figure 4) is similar to that of the OSI basic reference model. An (i)-WAP layer is composed of (i)-WAP entities using an (i-1)-WAP service. One particularity of the WAP structure is that some (i)-services can be used directly by upper layers or applications; for example, the Transaction layer or further applications can directly access the Transport layer. The normative references give more information about WAP; the reader may refer to the standard [Clarke and Richardson, 1985] for detailed information.
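The concrete test purposes are not reproduced here, so the following minimal sketch only illustrates the idea of a test purpose as a finite automaton over (entity, interaction) transitions. It assumes, hypothetically, that a test purpose is a linear sequence of such transitions checked against an observed trace, with unrelated interactions skipped. Tescoms instead uses the purpose to guide an on-the-fly computation over the client and server specifications, which this sketch does not attempt.

```python
from typing import List, Tuple

Interaction = Tuple[str, str]  # (executing entity, service primitive)


def satisfies_purpose(trace: List[Interaction], purpose: List[Interaction]) -> bool:
    """Run a linear test-purpose automaton over an observed trace.

    The automaton moves to its next state whenever the next expected
    interaction occurs; any other interaction leaves it where it is.
    The purpose is satisfied if the final state is reached.
    """
    state = 0
    for event in trace:
        if state < len(purpose) and event == purpose[state]:
            state += 1
    return state == len(purpose)


# Session creation: starts with S-Connect-req, ends with S-Connect-cnf.
session_creation = [("client", "S-Connect-req"), ("client", "S-Connect-cnf")]
observed = [  # hypothetical trace of a client/server session establishment
    ("client", "S-Connect-req"),
    ("server", "S-Connect-ind"),
    ("server", "S-Connect-res"),
    ("client", "S-Connect-cnf"),
]
print(satisfies_purpose(observed, session_creation))  # True
```

Skipping unrelated interactions is what keeps a test purpose abstract: it names only the events that characterize the feature, not every intermediate primitive.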
The Push service allows a server to send information to a client spontaneously. As this feature is optional, the client must first have subscribed to the related service. Information can then be received automatically without an explicit request. During communication between a client and a server, some unpredictable event may occur that makes either the client or the server temporarily busy (or otherwise disturbed). In such a case, instead of closing the connection and then requesting a new one, the Suspend/Resume service can be invoked. The entity that asks for the session to be suspended issues an S-Suspend-req, and the session can be resumed with an S-Resume-req. The test case in Figure 1 shows a scenario that can be used to exercise this service. The full execution of such test cases by WAP client and server entities shows whether or not they are able to interoperate.

3.3 Protocol Model Test for Performance

Quality of Service (QoS) testing checks the service quality of the Implementation Under Test (IUT) against the QoS requirements of the network component. A specific class of QoS is performance-oriented QoS, whose requirements concern delays (e.g. response times), throughputs (e.g. for bulk data transfer), and rates (e.g. of data loss). We concentrate exclusively on performance-oriented QoS; other classes of QoS are not considered here. Accordingly, the term performance is used instead of QoS, and the testing considered is performance testing. Performance testing is an extension of conformance testing that also checks QoS requirements. The main objective of protocol performance testing is to test the performance of communication network components under normal and overload situations. Different strategies can be used to study performance aspects in a communication network. One consists in analyzing the real traffic load in a network and correlating it with the test results.
The other consists in creating an artificial traffic load and correlating it directly with the behavior observed during the performance test. The first method enables one to study the performance of network components under real traffic conditions and to encounter unexpected behaviors. The second method allows more precise measurements, since the conditions of an experiment are fully known and controllable, and correlations with observed performance are less fuzzy than with real traffic. Both methods are useful and complementary. A testing cycle should involve both: new behaviors are explored with real traffic load, and their understanding is then refined with the second method by attempting to reproduce them artificially and test them. The presented approach to performance testing attempts to address both methods. Artificial traffic load for both normal and overload situations on the WAP network component is generated according to the traffic patterns of a well-defined traffic model. For performance testing, the conformance of the IUT is assumed. However, since overload may cause the functional behavior of the IUT to become faulty, care must be taken to recognize erroneous functional behavior during performance testing. Another goal of performance testing is to identify the performance levels of the IUT over ranges of parameter settings. Several performance tests are executed with different parameter settings, and the results are interpolated to determine the range of parameter values in which the IUT shows a certain performance level. Finally, if performance-oriented QoS requirements for an IUT are given, performance testing should result in an assessment of whether the measured performance of the network component meets those requirements.
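The interpolation step can be sketched as follows. The sketch assumes linear interpolation between neighbouring test runs and a metric that grows with the parameter, such as mean response time versus offered load; both choices are illustrative, as the paper does not fix an interpolation method. The run values are made up for the example and are not measurements from the paper.

```python
from typing import List, Optional, Tuple


def threshold_crossing(runs: List[Tuple[float, float]], level: float) -> Optional[float]:
    """Estimate the parameter value at which a measured metric first reaches `level`.

    `runs` holds (parameter setting, measured metric) pairs from separate
    performance tests; the metric is assumed to vary linearly between runs.
    Returns None if no run reaches the level.
    """
    pts = sorted(runs)
    if pts and pts[0][1] >= level:
        return pts[0][0]
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if y0 < level <= y1:
            return x0 + (level - y0) * (x1 - x0) / (y1 - y0)
    return None


# Hypothetical runs: (offered load in requests/s, mean response time in ms).
runs = [(10, 40.0), (20, 55.0), (40, 120.0), (60, 310.0)]
print(round(threshold_crossing(runs, 200.0), 1))  # 48.4
```

Read as a QoS statement, the example says that a 200 ms response-time level is reached at roughly 48 requests/s, so the acceptable load range lies below that value.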
PerfTTCN (Performance TTCN, where TTCN is the Tree and Tabular Combined Notation) [ISO/IEC, 1991; 1996; and Knightson, 1993], an extension of TTCN with notions of time, traffic loads, performance characteristics and measurements, is a formalism for describing performance tests in an understandable, unambiguous and re-usable way, with the benefit of making test results comparable. In addition, a number of TTCN tools are available. The proposal also introduces a new concept of time in TTCN. The current TTCN standard considers time exclusively in timers, where the execution of a test can branch to an alternative path if a given timer expires. Walter and Grabowski [Walter and Grabowski, 1997] proposed means to impose timing deadlines during test execution through local and global timing constraints. The main advantage of the presented method is that it describes performance tests unambiguously and makes test results comparable. This contrasts with informal methods, where measurement results are accompanied only by a vague description of the measurement configuration, making it difficult to reproduce and compare the results precisely. The PerfTTCN notation for performance tests has a well-defined syntax; its operational semantics is under development. Once available, the semantics will reduce the possibilities of misinterpretation in setting up a performance test, executing performance measurements, and evaluating performance characteristics. In contrast to a conformance test, a performance test gathers measurement samples of the occurrence times of selected test events and computes various performance characteristics on the basis of several samples. The computed performance characteristics are then used to check performance constraints, which are based on the QoS criteria for the network component. Although the approach is quite general, one of its primary goals was the study of the performance of WAP network components.
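A minimal sketch of this evaluation step, written in Python rather than PerfTTCN: it assumes that one-way delay samples (in seconds) and the duration of the measurement interval are already available, computes a few performance characteristics, and checks them against constraints of the form (comparison, bound). The characteristic names and the constraint format are assumptions for illustration, not PerfTTCN syntax, and the sample values are made up.

```python
import operator
import statistics
from typing import Dict, List, Tuple

COMPARATORS = {"<=": operator.le, ">=": operator.ge}


def characteristics(delays: List[float], duration: float) -> Dict[str, float]:
    """Summarize delay samples collected over `duration` seconds."""
    return {
        "mean_delay": statistics.mean(delays),
        "max_delay": max(delays),
        "throughput": len(delays) / duration,  # delivered packets per second
    }


def check_constraints(chars: Dict[str, float],
                      constraints: Dict[str, Tuple[str, float]]) -> Dict[str, bool]:
    """Evaluate each performance constraint, e.g. {"mean_delay": ("<=", 0.1)}."""
    return {name: COMPARATORS[op](chars[name], bound)
            for name, (op, bound) in constraints.items()}


chars = characteristics([0.05, 0.07, 0.06, 0.08], duration=0.4)
result = check_constraints(chars, {"mean_delay": ("<=", 0.1), "throughput": (">=", 12.0)})
print(result)  # {'mean_delay': True, 'throughput': False}
print(all(result.values()))  # False: the QoS requirement is not met
```

Keeping the per-constraint results, rather than a single boolean, shows which QoS criterion was violated.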
A WAP protocol performance test consists of several distributed foreground and background test components, coordinated by a main tester that serves as the control component. A foreground test component realizes the communication with the IUT: it influences the IUT directly by sending PDUs or ASPs to the IUT and receiving them from it. This discrete interaction of the foreground tester with the IUT is conceptually the same as the interaction between tester and IUT in conformance testing. The discrete interaction brings the IUT into specific states from which the performance measurements are executed. Once the IUT is in a state under consideration for performance testing, the foreground tester uses a form of continuous interaction with the IUT: it sends a continuous stream of data packets to the IUT to emulate the foreground load, also called foreground traffic. A background test component generates continuous streams of data to load the network or the network component under test. A background tester does not communicate directly with the IUT; it influences the IUT only implicitly, by bringing it into normal or overload situations. The background traffic is described by means of traffic models, and foreground and background testers may use load generators to produce the corresponding traffic patterns. Traffic models describe traffic patterns for continuous streams of data packets with varying inter-arrival times and varying packet lengths. A frequently used model for describing traffic patterns is the Markov Modulated Poisson Process (MMPP), which was selected for traffic description because of its generality and efficiency. For example, audio and video streams of a number of telecommunication applications, as well as pure data streams of file transfer or mailing systems, have been described as MMPPs (Onvural, 1994).
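A two-state MMPP can be generated with nothing more than a random number generator and a small state machine. The sketch below assumes two states, a Poisson arrival rate `lam[i]` and a rate `r[i]` of leaving state `i`; it draws the time to the next event from the combined exponential clock and then decides whether that event is a packet arrival or a state switch. Packet lengths, which a full traffic model would also vary, are omitted, and the function name and parameters are illustrative rather than taken from VEGA.

```python
import random
from typing import List, Sequence


def mmpp2_arrivals(lam: Sequence[float], r: Sequence[float],
                   horizon: float, seed: int = 0) -> List[float]:
    """Return packet arrival times in [0, horizon) for a two-state MMPP.

    lam[i]: Poisson arrival rate while the modulating chain is in state i.
    r[i]:   rate at which the chain leaves state i (lam[i] + r[i] must be > 0).
    """
    rng = random.Random(seed)
    t, state = 0.0, 0
    arrivals: List[float] = []
    while True:
        total = lam[state] + r[state]
        # time to the next event (arrival or switch): competing exponential clocks
        t += rng.expovariate(total)
        if t >= horizon:
            return arrivals
        if rng.random() < lam[state] / total:
            arrivals.append(t)      # a packet arrives in the current state
        else:
            state = 1 - state       # the modulating Markov chain switches state
```

With `lam = [100.0, 1.0]` and `r = [0.5, 0.5]`, the source alternates between bursts of about 100 packets/s and quiet periods of about 1 packet/s, each lasting 2 s on average; the inter-arrival times are the differences between consecutive arrival times.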
Generating MMPP traffic patterns requires only an efficient random number generator and efficient finite state machine logic. Nonetheless, the performance testing approach is open to other kinds of traffic models. By analogy with conformance testing, different types of performance test configurations can be identified, depending on the characteristics of the network component under test. We distinguish between performance testing the implementation (in hardware, software, or both) of (i) an end-user telecommunication application, (ii) an end-to-end telecommunication service, or (iii) a communication protocol. Additional test configurations for other network components can, of course, be defined. The test configuration for the protocol performance test is given in Figure 5. The term System Under Test (SUT) comprises all IUTs and network components. For simplicity, the main tester has been omitted from the figure.
Figure 5: Performance test configuration for WAP protocol
Every protocol performance test configuration prominently includes background testers, which generate artificial load on the network, and monitors, which measure the actual real load in the network. Performance testing of a communication protocol (Figure 5) also includes foreground testers at the upper and lower service access points of the protocol under test. This test configuration corresponds to the distributed test method in conformance testing (see [ISO/IEC, 1991] for other test methods). The service access points are reflected by points of control and observation (PCOs), which are the access points for the foreground and background test components to the interface of the IUT. They offer means to exchange PDUs or ASPs with the IUT and to monitor the occurrence of test events (i.e. to collect the time stamps of test events).
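A PCO as described here combines two duties: exchanging PDUs or ASPs with the IUT and time-stamping test events. The following hypothetical Python sketch uses in-memory queues as stand-ins for the service access point; the class and method names are assumptions, and a real tester would bind the queues to an actual protocol interface.

```python
import queue
import time
from dataclasses import dataclass, field
from typing import Any, List, Tuple


@dataclass
class PCO:
    """Hypothetical point of control and observation (PCO).

    Two in-memory queues stand in for the service access point of the IUT;
    every test event seen at the PCO is logged with a time stamp.
    """
    name: str
    monitor_only: bool = False          # monitoring PCOs only observe
    to_iut: queue.Queue = field(default_factory=queue.Queue)
    from_iut: queue.Queue = field(default_factory=queue.Queue)
    events: List[Tuple[float, str, Any]] = field(default_factory=list)

    def _stamp(self, kind: str, pdu: Any) -> None:
        self.events.append((time.monotonic(), kind, pdu))

    def send(self, pdu: Any) -> None:
        """Control: pass a PDU/ASP to the IUT and time-stamp the event."""
        if self.monitor_only:
            raise RuntimeError(f"{self.name} is a monitoring PCO and cannot send")
        self._stamp("send", pdu)
        self.to_iut.put(pdu)

    def receive(self, timeout: float = 1.0) -> Any:
        """Observation: wait for a PDU/ASP from the IUT and time-stamp it."""
        pdu = self.from_iut.get(timeout=timeout)
        self._stamp("receive", pdu)
        return pdu

    def observe(self, pdu: Any) -> None:
        """Monitoring: record an event without interacting with the IUT."""
        self._stamp("observe", pdu)
```

A monotonic clock is used for the time stamps so that delays computed from them cannot be distorted by wall-clock adjustments during a test run.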
A specific application of PCOs is their use for monitoring purposes only. Monitoring is needed, for example, to observe the artificial load of the background test components, the load of real network components that are not controlled by the performance test, or the test events of the foreground test component. Coordination points (CPs) are used to exchange information between the test components and to coordinate their behavior; in general, the main tester has access via a CP to each foreground and background test component. To sum up, a WAP protocol performance test uses an ensemble of foreground and background testers with well-defined traffic models. The test components are controlled by the main tester via coordination points, and the performance test accesses the IUT via points of control and observation. A performance test suite defines the conditions under which a performance test is executed, while performance characteristics and measurements define what has to be measured and how. Only a complete performance test suite defines a performance test unambiguously, makes performance test experiments reusable and makes performance test results comparable. Performance characteristics can be evaluated either off-line or on-line. Off-line analysis is executed after the performance test has finished and all samples have been collected. On-line analysis is executed during the performance test and is needed to make use of performance constraints. Performance constraints define requirements on the observed performance characteristics. They can control the execution of a performance test and may even lead to the assignment of final test verdicts and to a premature end of the test. For example, if the measured response delay of a server exceeds a critical upper bound, a fail verdict can be assigned immediately and the performance test can finish. An HTTP-WAP server performance test has been carried out to show the feasibility of PerfTTCN.
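On-line evaluation of a performance constraint with a premature fail verdict can be sketched as a loop that inspects each sample as soon as it arrives. The function name, the millisecond unit and the choice of `inconc` (TTCN's inconclusive verdict) when no sample was collected are assumptions made for illustration.

```python
from typing import Iterable, Tuple


def online_delay_check(samples: Iterable[float],
                       upper_bound_ms: float) -> Tuple[str, int]:
    """Judge response delays on-line against a critical upper bound.

    Returns the verdict and the number of samples consumed. Samples after
    the first violation are not read, i.e. the test ends prematurely.
    """
    consumed = 0
    for delay in samples:
        consumed += 1
        if delay > upper_bound_ms:
            return "fail", consumed   # constraint violated: stop the test now
    if consumed == 0:
        return "inconc", 0            # nothing measured, no judgement possible
    return "pass", consumed
```

Because the samples are taken from an iterator, the loop can sit directly on a live measurement stream; an off-line analysis would instead receive the complete list after the test has finished.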
The experiment was implemented using the Generic Code Interface (GCI) of the TTCN compiler of ITEX 3.1 (Telelogic, 1996) and the distributed traffic generator VEGA (Kanzow, 1994). VEGA is traffic generator software that uses MMPPs as traffic models and generates traffic between a potentially large number of computer pairs using TCP/UDP over IP. It can also use ATM adaptation layers for data transmission, such as those provided by FORE Systems on the SBA200 ATM adapter cards. The traffic generated by VEGA follows the traffic patterns of the MMPP models.
Figure 6: Technical approach of the experiment
The C code for the executable performance tests was first derived automatically from TTCN by the ITEX GCI and then extended manually: (i) to instantiate sender/receiver pairs for background traffic, (ii) to evaluate inter-arrival times for foreground data packets, and (iii) to measure delays locally. Figure 6 illustrates the technical approach of executing WAP protocol performance tests, including the derivation of the executable test suite and the performance test configuration; it also shows a foreground tester and several send/receive components of VEGA. The performance test for the HTTP-WAP server uses only the concepts of performance test configuration of the network, of the end system, and of the background traffic. Other concepts, such as measurement of real network load, performance constraints and verdicts, are to be implemented in the next version. The example performance test consists of connecting to a Web server using the HTTP-WAP protocol and sending a request to obtain the index.html URL (Schieferdecker et al, 1997). If the query is correct, a result PDU containing the text of this URL should be received. If the URL is not found, either because the queried site has no URL of that name or because the name was incorrect, an error PDU reply can be received. Otherwise, unexpected replies can be received.
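Items (i) to (iii) above can be illustrated, outside ITEX and VEGA, by a small Python sender/receiver pair on the local host. This is a hypothetical sketch, not VEGA's interface: it uses UDP datagrams, exponentially distributed gaps (a single-state MMPP, i.e. a plain Poisson source), an 8-byte send time stamp at the start of each datagram, and measures one-way delays at the receiver, which is only meaningful here because sender and receiver share one clock.

```python
import random
import socket
import struct
import threading
import time
from typing import List


def run_background_pair(n_packets: int = 50, mean_gap_s: float = 0.002,
                        payload_len: int = 512, seed: int = 0) -> List[float]:
    """Send n_packets UDP datagrams over localhost with exponential gaps and
    return the one-way delays (in seconds) measured by the receiver."""
    if payload_len < 8:
        raise ValueError("payload must hold the 8-byte time stamp")
    rx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    rx.bind(("127.0.0.1", 0))               # let the OS choose a free port
    rx.settimeout(1.0)
    delays: List[float] = []

    def receiver() -> None:
        try:
            while len(delays) < n_packets:
                data, _ = rx.recvfrom(65535)
                (sent,) = struct.unpack("!d", data[:8])
                delays.append(time.perf_counter() - sent)  # local delay measurement
        except socket.timeout:
            pass                              # datagrams lost: stop waiting

    worker = threading.Thread(target=receiver)
    worker.start()
    rng = random.Random(seed)
    tx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    for _ in range(n_packets):
        time.sleep(rng.expovariate(1.0 / mean_gap_s))   # Poisson inter-arrival gap
        stamp = struct.pack("!d", time.perf_counter())
        tx.sendto(stamp + bytes(payload_len - 8), rx.getsockname())
    worker.join()
    tx.close()
    rx.close()
    return delays
```

Loss is tolerated: if datagrams are dropped, the receiver stops after a one-second timeout and returns fewer delays than packets sent. Across separate machines, one-way delays would additionally require synchronized clocks.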
In that case, a fail verdict is assigned. The SendGet PDU defines an HTTP request; the constraint SGETC defines the request GET /index.html HTTP/1.0. A ReceiveResult PDU carries the reply to the request. The constraint RRESULTC of the ReceiveResult PDU expects the status line HTTP/1.0 200 OK and matches the returned body of the URL with the wildcard “?”. The original, purely functional TTCN test case has been extended to measure the response time of a Web server to an HTTP GET operation (see also Table 4).
Table 4: Performance test case for the HTTP-WAP example
The measurement “MeasGet” has been declared to measure the delay between the two events SendGet and ReceiveResult, as shown in Table 5.
Table 5: HTTP-WAP measurement declaration
The repeated sampling of the measurement has been implemented with a classical TTCN loop construct to make this operation more visible in this example; the sample size has been set to 10. The locations of the operations for the “MeasGet” measurement are given in the comments column of the dynamic behavior in Table 4: a start measurement is associated with the SendGet event and an end measurement with the ReceiveResult event, as declared in Table 5. The delay between these two measurements gives the response time to the request, which includes both network transmission delays and server processing delays. This experiment was performed on an ATM network using Sun workstations and TCP/IP over ATM layer protocols. An example of the statistics with and without network load is shown in Figure 7; for the sake of space, the main program of the HTTP-WAP performance test is not shown.
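The “MeasGet” measurement can be mirrored in Python with the standard `http.client` module. The sketch is an analogue of the TTCN test case under stated assumptions, not a translation of it: it connects first, starts the clock just before the GET is sent (SendGet), stops it once the full reply has been read (ReceiveResult), repeats this 10 times, and treats any status other than 200 as a fail verdict. The function name `meas_get` is hypothetical, and `http.client` sends HTTP/1.1 requests rather than the HTTP/1.0 request of constraint SGETC.

```python
import http.client
import time
from typing import List, Tuple


def meas_get(host: str, port: int, path: str = "/index.html",
             samples: int = 10) -> Tuple[str, List[float]]:
    """Repeat a GET request and time each request/reply pair.

    Returns a verdict ("pass" if every reply is 200 OK, otherwise "fail",
    stopping at the first bad reply) and the measured delays in seconds.
    """
    delays: List[float] = []
    for _ in range(samples):                       # classical sampling loop
        conn = http.client.HTTPConnection(host, port, timeout=5)
        try:
            conn.connect()                         # connect before measuring
            start = time.perf_counter()            # start measurement at SendGet
            conn.request("GET", path)
            resp = conn.getresponse()
            resp.read()                            # any body is accepted ("?")
            delays.append(time.perf_counter() - start)  # end at ReceiveResult
            if resp.status != 200:
                return "fail", delays              # unexpected reply
        finally:
            conn.close()
    return "pass", delays
```

Connecting before the clock starts keeps TCP connection set-up out of the measured interval, so the delay covers the request transmission, server processing and reply transmission, as in the measurement described above.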
Figure 7: Performance test result of the HTTP-WAP example
The graph on the left of Figure 7 shows delay measurements under no-load conditions, while the graph on the right shows the results obtained with six different kinds of CBR and three different kinds of Poisson traffic flows between two pairs of machines communicating over the same segment as the HTTP-WAP client machines.
4.0 ANALYSIS AND DISCUSSIONS
By way of analysis and discussion, some general inferences have been drawn from the survey, together with an estimate of a cost model for the formal testing process of the WAP protocol. The inferences are as follows:
(i) Formal techniques can discover and reveal faults, but can only be applied to over-simplified models. Complex models are difficult, if not impossible, to test; once simplified, they become much easier to test, as was the case with the models investigated.
(ii) Integrating testing into the protocol life cycle, so that each phase generates its own tests, is the best practice for formal protocol testing. This is because the effort needed to produce test cases during each phase is less than the effort needed to produce one huge set of test cases of equal effectiveness in a completely separate life cycle phase devoted only to testing.
(iii) The experimental results surveyed show that the results obtained with formal test methods are better than those achievable with traditional statistical methods, because statistical techniques (which usually rely on simulation) often fail to find some critical cases for protocols, especially transport protocols. For example, the formal test method surveyed, applied to the WAP protocol operating on the given network, found a configuration of the network traffic that sensitizes a critical problem in the WAP protocol, namely the weakness of its congestion reaction mechanism.
Furthermore, cost models for the formal testing process of protocols can be estimated, and the cost of testing the WAP protocol is therefore estimated here. The cost of the testing phase within the development cycle includes the following aspects:
(i) Cost of test development.
(ii) Cost of test execution.
(iii) Cost of test result analysis, diagnostics, etc.
(iv) Cost of modifying the implementation under test in order to eliminate the detected faults.
For the WAP protocol surveyed, the test suite provides explicit pass/fail/unresolved results, with leads as to which components might be sources of failure or faults. The percentage estimates of its test costs are presented in Table 6 and analyzed with the chart in Figure 8.
Table 6: Percentage estimates of formal test cost of WAP protocol surveyed

| Test cost type | Faults detected | Faults non-detected |
|---|---|---|
| Cost of test development | 50% | 50% |
| Cost of test execution | 30% | 60% |
| Cost of result analysis, diagnosis, etc. | 20% | 80% |
| Cost of modifying the IUT to eliminate faults | 0% | 100% |

Figure 8: Bar chart analysis of formal test cost of WAP protocol surveyed (faults detected vs. faults non-detected for each test cost type; vertical axis 0 to 100)
The above analysis shows that the total test cost is at a minimum for detected faults and at a maximum for non-detected faults. This is because the cost of test execution is often based on the number of test cases to be executed, or on the total number of input/output interactions included in all test cases. To determine at what point a system has been tested thoroughly (a difficult question to answer), it is necessary to consider not only the cost of detecting and eliminating the remaining undetected faults in the system under test, but also the costs incurred if these undetected faults remain in the system.
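The trade-off between testing cost and the cost of faults left in the system can be made concrete with a toy cost model. Everything in the sketch is an assumption, not data from Table 6: testing cost grows linearly with the number of test cases n, the expected number of undetected faults decays as F0 * exp(-k * n), and each remaining fault costs a fixed amount. The function names are hypothetical; the scan returns the n that minimizes the overall cost.

```python
import math
from typing import Tuple


def overall_cost(n: int, c_dev: float, c_case: float, faults0: float,
                 detect_rate: float, c_residual: float) -> float:
    """Testing cost plus expected cost of faults still present after n test cases."""
    testing = c_dev + c_case * n                         # development + execution
    remaining = faults0 * math.exp(-detect_rate * n)     # expected undetected faults
    return testing + c_residual * remaining


def optimal_test_count(max_n: int, **model: float) -> Tuple[int, float]:
    """Scan n = 0..max_n and return the n with the smallest overall cost."""
    best = min(range(max_n + 1), key=lambda n: overall_cost(n, **model))
    return best, overall_cost(best, **model)
```

With a development cost of 0, one unit per test case, 10 initial faults, k = 0.1 and 100 units per remaining fault, the minimum lies at n = 46, close to the analytic optimum ln(100)/0.1 ≈ 46.05. Raising the cost per remaining fault, as for systems with high reliability requirements, moves the optimum towards more testing.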
The cost of leaving undetected faults in the system is difficult to estimate; it is clearly higher for systems with high reliability requirements. Optimizing the testing process means organizing it so that the overall cost (testing cost plus cost of remaining faults) is minimized.
5.0 CONCLUSION
This paper presented a detailed survey of the application of formal methods to the testing and validation of communication protocols. In parallel, powerful methods have been proposed to test and validate the underlying protocols of communicating systems, in order to facilitate and guarantee the reliable development, conformance, interoperability, performance and robustness of new products. The main emphasis of this work is the identification, definition and implementation of basic protocol concepts for testing, the reusable formulation of tests, and the development of test run-time environments. Several methods, techniques and tools for modeling the many sequence numbers (drawn from a large domain) used in the protocols were investigated, and the results proved useful for modeling and analyzing protocols with sequence numbers, in particular in the context of model-based testing. The test beds presented include the generation and execution of test cases: they take as input a model and a coverage criterion expressed as an observer, and return a verdict for each test case. The protocol system tests surveyed cover both application-level testing and protocol-level testing and were found to be suitable for layered protocols; hence, experiments based on these approaches can be carried out with several other lower protocol layers with few limitations.
REFERENCES
1. Laycock, G. T. (1993); “The theory and practice of specification based software testing,” Ph.D. thesis, pp. 6-10, 32-41.
2. Clarke, E. M., and Wing, J. M. (1996); “Formal methods: State of the art and future directions,” ACM Computing Surveys, 28(4):626-643.
3. Graham, D., and Ould, M. (November, 1990); “A Standard for Software Component Testing,” BCS Specialist Interest Group in Software, Issue 1.2.
4. Dssouli, R., Saleh, K., Aboulhamid, E., En-Nouaary, A., and Bourhfir, C. (1999); “Test development for communication protocols: towards automation,” Computer Networks 31 (1999) 1835-1872, 1849.
5. Lima, L. P., and Cavalli, A. (1997); “A pragmatic approach to generating test sequences for embedded systems,” in: Proc. IWTCS’97, Cheju Island, Korea.
6. Kone, O. (1983); “An Interoperability Testing Approach,” in A. Aho, J. Hopcroft and J. Ullman, Data Structures and Algorithms, Addison-Wesley, pp. 1225-1240.
7. Balliet, X., and Eloy, N. (March, 2000); “Development of a distributed test system with CORBA,” Co-authored Master’s thesis, Institut National Polytechnique, Nancy, France.
8. Koné, O., and Castanet, R. (March, 2000); “Test generation for interworking systems,” Computer Communications, Elsevier Science Publishers, Vol. 23, No. 7, pp. 642-652.
9. Koné, O., and Castanet, R. (July, 2001); “The T broker platform for the interoperability of communications systems,” IEEE 5th CSCC World Conference, Crete.
10. Cavalli, A., Lee, B., and Macavei, T. (1997); “Test generation for the SSCOP-ATM network protocol,” in: Proc. SDL FORUM’97, Evry, France, Elsevier.
11. Koné, O. (2003); “An Interoperability Testing Approach to Wireless Application Protocols,” Journal of Universal Computer Science, vol. 9, no. 10, pp. 1220-1243 (Université Paul Sabatier, IRIT, 118 route de Narbonne, F-31000 Toulouse; kone@irit.fr).
12. Hessel, A., and Pettersson, P. (2006); “Model-Based Testing of a WAP Gateway: an Industrial Case-Study,” Technical report 2006-045, Department of Information Technology, Uppsala University, SE-75105 Uppsala, Sweden, August 2006, ISSN 1404-3203.
13. Alur, R., and Dill, D. L. (1994); “A theory of timed automata,” Theoretical Computer Science, 126(2):183-235.
14. Larsen, K. G., Pettersson, P., and Yi, W. (October, 1997); “UPPAAL in a Nutshell,” Int. Journal on Software Tools for Technology Transfer, 1(1-2):134-152.
15. The ATM Forum (2003); URL: http://www.atmforum.com.
16. Cavalli, A., and Lima, L. P. (1997); “A pragmatic approach to generating test sequences for embedded systems,” in: Testing of Communicating Systems, Vol. 10, Chapman & Hall.
17. Fukada, A., et al. (1997); “A conformance testing for communication protocols modeled as a set of DFSMs with common inputs,” in: Testing of Communicating Systems, Vol. 10, Chapman & Hall.
18. Rafiq, O., and Cacciari, L. (1999); “Controllability and Observability in distributed testing,” Information and Software Technology, Elsevier, Vol. 41 (1999) 767-780.
19. Ulrich, A., and Chanson, S. T. (June, 1995); “An approach to testing distributed software systems,” in: Proc. IFIP Symposium on Protocol Specification, Verification and Testing, Warsaw, Poland.
20. Koné, O. (2002); “Compliance of wireless application protocols,” IFIP TestCom International Conference - Testing Internet Technologies and Services, Kluwer Academic Publishers.
21. Lee, D., Sabnani, K., Kristol, D., and Paul, S. (1993); “Conformance testing of protocols specified as communicating FSMs,” in: Proc. IEEE INFOCOM’93, San Francisco.
22. Koné, O., and Thomesse, J. P. (September, 2000); “Design of interoperability checking sequences against WAP,” Proc. IFIP International Conference on Personal Wireless Communications, Kluwer Academic Publishers.
23. WAP Forum (April, 1998); “Architecture Specification,” URL: http://www.wapforum.com.
24. WAP Forum (April, 1998); “Wireless Application Protocol Conformance Statement, Compliance Profile and Release List,” URL: http://www.wapforum.com.
25. Alur, R., and Dill, D. L. (1994); “A theory of timed automata,” Theoretical Computer Science, 126(2):183-235.
26. WAP Forum (July, 2001); “Wireless transaction protocol,” version 10, online, http://www.wapforum.org/.
27. Clarke, L. A., and Richardson, D. J. (1985); “Applications of symbolic evaluation,” Journal of Systems and Software 5, pp. 15-35.
28. Schieferdecker, I., Stepien, B., and Rennoch, A. (October, 1997); “PerfTTCN, a TTCN language extension for performance testing,” ETSI Temp. Doc. 48, 25th ETSI TC MTS meeting, Sophia Antipolis, 21-22 October 1997; GMD FOKUS, Hardenbergplatz 2, D-10623 Berlin, Germany.
29. Combitech Group Telelogic AB (1996); ITEX 3.1 User Manual.
30. ISO/IEC (1991); ISO/IEC 9646-1, “Information Technology - Open Systems Interconnection - Conformance testing methodology and framework - Part 1: General Concepts.”
31. ISO/IEC (1996); ISO/IEC 9646-3, “Information Technology - Open Systems Interconnection - Conformance testing methodology and framework - Part 3: The Tree and Tabular Combined Notation.”
32. Kanzow, P. (1994); “Konzepte für Generatoren zur Erzeugung von Verkehrslasten bei ATM-Netzen,” MSc thesis, Technical University Berlin (in German only).
33. Knightson, K. G. (1993); “OSI Protocol Conformance Testing: IS 9646 Explained,” McGraw-Hill.
34. Onvural, R. O. (1994); “Asynchronous Transfer Mode Networks: Performance Issues,” Artech House Inc.
35. Walter, T., and Grabowski, J. (1997); “A Proposal for a Real-Time Extension of TTCN,” Proceedings of KIVS’97, Braunschweig, Germany.
36. Ulrich, A., and Chanson, S. T. (June, 1995); “An approach to testing distributed software systems,” in: Proc. IFIP Symposium on Protocol Specification, Verification and Testing, Warsaw, Poland.
Academic Background of Researchers
Chukwuemeka Etus is a master’s degree student of Electrical and Electronic Engineering at the Federal University of Technology Owerri (FUTO), majoring in Electronics and Computer Engineering. He is a researcher on electronics and computer-related subjects. E-mail: etuscw@yahoo.com
Felix K. Opara is a postdoctoral candidate of Electrical and Electronic Engineering at the Federal University of Technology Owerri (FUTO), majoring in Electronics and Computer Engineering. He is a researcher on electronics and computer-related subjects. E-mail: kefelop@yahoo.com
O. K. Akinde is a master’s degree student of Electrical and Electronic Engineering at the Federal University of Technology Owerri (FUTO), majoring in Electronics and Computer Engineering. He is a researcher on electronics and computer-related subjects. E-mail: solkinde@yahoo.com