CSC7130 (24 May, 2000)

advertisement

Assignment 1 for CMSC5707: Advanced topics in AI - Audio signal processing (v4c)

1

Programming exercises: Submission deadline 23:59, 12 Oct 2014, send to

cmsc5707.14@gmail.com.

Use MATLAB , OCTAVE[1] or any programming environments you prefer. Write your own

code; do not use any library functions such as FFT or autocorrelation for all parts except the

part “Build a speech recognition system.”, where you may use any Matlab /Octave functions

you like.

1) (5%) Recording of the templates: Use your own sound recording device (e.g. mobile

phone, windows-sound-recorder or http://www.goldwave.com/) to record the numbers

1,2,3,4 and name these files as s1A.wav, s2A.wav, s3A.wav and s4A.wav, respectively.

Each word should last about 0.60.8 seconds and use http://format-factory.en.softonic.com/

to convert your file to .wav if necessary. (You may choose English or Cantonese or

Mandarin to pronounce these words). These four files are called set A to be used as

templates of our speech recognition system. You may use any sampling rate (Fs) and bits

per second (bps) value. However, typical values are Fs=22050 Hz (or lower) and bps=16

bits per second.

2) (5%) Recording for the testing data: Repeat the above recording procedures of the same

four numbers: 1, 2, 3 and 4, and save the four files as : s1B.wav, s2B.wav, s3B.wav and

s4B.wav , respectively. They are to be used as testing data in our speech recognition

system.

3) (5%) Plotting:

a) Pick one wav file out of your sound files (e.g. x.wav), read the file and plot the time

domain signal. (Hint: you may use “wavread”, “plot” in MATLAB or OCTAVE.

Type “>help wavread” , “>help plot” in MATLAB to learn how to use them.)

b) Plot x.wav and save it in a picture file “x.jpg”.

4) (35%) Signal analysis:

a) From “x.wav”, write a program to find the start (T1) and stop (T2) locations in time

(ms) of your four recorded sounds automatically.

b) Extract one segment called Seg1 (20 ms of your choice of location) of the voiced

vowel part of x.wav between T1 and T2. Seg1 can be saved as an array in C++ or a

vector in MATLAB / OCTAVE . You may choose the segment by manual inspection

and hardcode the locations in your program.

c) Find and plot the Fourier transform (energy against frequency) of Seg1. The energy is

equal to |Square_root ([real]^2+[imaginary]^2)| . The horizontal axis is frequency and

the vertical axis is energy. Label the axes of the plot. Save the plot as “fourier_x.jpg”.

d) Find the pre-emphasis signal (pem_Seg1) of Seg1 if the pre-emphasis constant α is

0.945. Plot Seg1 and Pem_Seg1. Submit your program.

Assignment 1 for CMSC5707: Advanced topics in AI - Audio signal processing (v4c)

2

e) Find the 10 LPC parameters if the order of LPC for Pem_seg1 is 10. You should

write your autocorrelation code, but you may use the inverse function (inv) in

MATLAB/OCTAVE to solve the linear matrix equation.

5)

(50%) Build a speech recognition system: You may use any Matlab/Octvae functions

you like in this part. Use the tool at

http://www.mathworks.com/matlabcentral/fileexchange/32849-htk-mfcc-matlab to extract

the MFCC parameters (Mel-frequency cepstrum http://en.wikipedia.org/wiki/Melfrequency_cepstrum) from your sound files. Each sound file (.wav) will give one set of

MFCC parameters. See “A tutorial of using the htk-mfcc tool” in the appendix of how to

extract MFCC parameters. Build a dynamic programming DP based four-numeral speech

recognition system. Use set A as templates and set B as testing inputs. You may follow

the following steps to complete your assignment.

a) Convert sound files in set A and set B into MFCCs parameters, so each sound file

will give an MFCC matrix of size 13x70 (no_of_MFCCs_parameters x=13 and

no_of_frame_segments=70). Because if the time shift is 10ms, a 0.7 seconds sound

will have 70 frame segments, and there are 13 MFCC parameters for one frame. Here

we use M (j,t), to represent the MFCC parameters, where ‘j’ is the index for MFCC

parameters ranging from 1 to 13, ‘t’ is the index for time segment ranging from 1 to

70. Therefore a (13-parameter) sound segment at time index t is M(1:13,t).

b) Assume we have two short time segments (e.g. 25 ms each), one from the tth (t=28)

segment of sound X (represented by 13 MFCCS parameters Mx(1:13,t=28), and

another from the t’th (t’=32) time segment of sound Y (represented by MFCCS

parameters My(1:13,t’=32). The distortion (dist) between these two segments is

dist

j 13

Mx( j, t ) My ( j, t ' )

2

j 2

j 13

Mx( j,28) My ( j,32)

2

j 2

Note: The first row of the of the MFCCs (M(1,j)) matrix is the energy term and is not

recommended to be used in the comparison procedures because it does not contain

the relevant spectral information. Therefore summation starts from j=2.

Use dynamic programing to find the minimum accumulated distance (minimum

accumulated score) between sound x and sound y.

c) Build a speech recognition system: You should show a 4x4 comparison-matrix-table

as the result. An entry to this matrix-table is the minimum accumulated distance

between a sound in set A and a sound in set B. You may use the above steps to find

the minimum accumulated distance for each sound pair (there should be 4x4 pairs,

because there are four sound files in set A and four sound files in set B) and enter the

comparison-matrix-table manually or by a program.

Assignment 1 for CMSC5707: Advanced topics in AI - Audio signal processing (v4c)

3

d) Pick any one sound file from set A (e.g. the sound of ‘one’) and the

corresponding sound file from set B (e.g. the sound of ‘one’), compare these two

files using dynamic programing , plot the optimal path on the accumulated

matrix diagram .

e) Recognition

f)

recognition

Assignment 1 for CMSC5707: Advanced topics in AI - Audio signal processing (v4c)

4

What to submit :

a) All your programs with a readme file showing how to run them

b) All sound files of your recordings

c) The picture files inducing the optimal path diagram in question 5d.

d) The 4x4 comparison-matrix-table of the speech recognition system

e) Zip all (as student_number.zip) and submit it to cmsc5707.14@gmail.com

Reference

octave_guide : http://www.cse.cuhk.edu.hk/~khwong/www2/cmsc5707/octave_guide.doc

Appendix: A tutorial of using the htk-mfcc tool

Download the package from

http://www.mathworks.com/matlabcentral/fileexchange/32849-htk-mfccmatlab/content/mfcc/mfcc.m

Run ‘example.m’ you will see it can generate MFCCs from the sound file .

The default values are :

o

Tw= frame duration (ms)=25 ms,

o

Ts=frame shift=10ms etc.

o

C= number of cepstral coefficents is 12.

o

MFCCs is the output MFCC parameters

In case you want to use the MFCC parameters into a file and read it by another

language or package, you may do this. In matlab:

o

>clear %clear the workspace

o

> example %run example of 32849-htk-mfcc-matlab once

o

**you may need to change the sound file name in example.m to select

your own sound file.

o

whos % show the parameters generated, should see MFCCs

o

>> save('foo1.txt', 'MFCCs' ,'-ascii'); %save MFCCs in foo1.txt

o

You may use other programs to read this foo1.txt to get the parameters.

Make a function in matlab /octave to use example.m

o

Edit the file example.m

Comment clear all; close all; clc;, e.g. % clear all; close all; clc;

Add in the first line : function MFCCs=wav2mfcc1(wav_file)

o

Save this file as ‘wav2mfcc1.m’

o

So you may use wav2mfcc1.m as a function in matlab /octave .m file or

in the command window .

o

Example: put the following line in a test.m file

o

MFCCs_OUT= wav2mfcc1(‘sound_file.wav’);

o

%Result of running test.m: the resulting MFCC parameters will be saved

in the Matrix MFCCs_OUT after test.m is run

Assignment 1 for CMSC5707: Advanced topics in AI - Audio signal processing (v4c)

Appendix

FAQ on assignment 1 at

http://www.cse.cuhk.edu.hk/~khwong/www2/cmsc5707/cmsc5707.html

1. Question: For question 3 and 4, should we handle only one wav or we need to

handle all 8 of them?

Answer: For assignment 1, question 3 and 4, processing one sound file is

enough. However, for question 5, all 8 sound files will be involved.

2. Question: For the whole asg1, could you tell us which part of the question we

need to submit code for it?

Answer: For 4(a), 4(c), 4(d) and 4(e), you need to submit the programs.

3. Question: For 4b, what is the meaning of voice vowel?

Answer: In the example shown in the diagram, it is the sound sar (沙) in

Cantonese. The sampling frequency is 22050 Hz, so the duration is

2x104x(1/22050)=0.9070 seconds. The top diagram below shows the whole

duration of the sound, by visual inspection, the consonant ‘s’ is roughly from

0.2x104 samples to 0.6 x104 samples. And the vowel ‘ar’ is from 0.62 x104

samples to 1.2 2x104 samples. The lower diagram shows a 20ms (which is

(20/1000)/(1/22050)=441=samples) segment (vowel sound ‘ar’) taken from

the middle (from the location at the 1x104 th sample) of the sound.

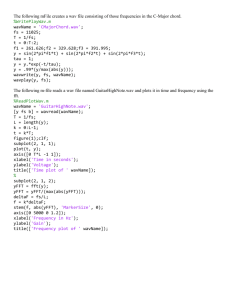

%the matlab program to produce the plots

%Sound source is from

%http://www.cse.cuhk.edu.hk/~khwong/www2/cmsc5707/sar1.wav

[x,fs]=wavread('sar1.wav'); %Matlab source to produce plots

fs % so period =1/fs, during of 20ms is 20/1000

%for 20ms you need to have n20ms=(20/1000)/(1/fs)

n20ms=(20/1000)/(1/fs) %20 ms samples

len=length(x)

figure(1),clf, subplot(2,1,1),plot(x)

subplot(2,1,2),T1=round(len/2); %starting point

plot(x(T1:T1+n20ms))

5

Assignment 1 for CMSC5707: Advanced topics in AI - Audio signal processing (v4c)

6

4. Question:

a. For Question 5a, what if my sound record last for 2s and my speech starts

from 600ms and ends at 1300ms, should I chop it to become a 0.7s speech?

Answer: Yes, you may use tools (such as http://www.goldwave.com/)

to cut the file to become a shorter duration, say 0.7 S. The idea is to

remove the silence regions, which have no use for your system.

b. Could I filter out that parts after I've read the data to the program?

Answer: Yes, you may filter out the parts after you read the data in

your program.

5. Question: Will matlab be too slow for question 5? I need to wait for more than 10

minutes for comparison between 2 sound tracks and we require to do 16

comparisons.

Answer: Coding matlab requires some skills, if you use a lot of “for loops”, it can

be slow. Use matrix operations will be faster. For example: a=[1 2 3 4], b=[ 4 5 6

Assignment 1 for CMSC5707: Advanced topics in AI - Audio signal processing (v4c)

7] You may use a program loop to find a*b, or just type a*b, will give you the

answer quick.

My implementation for the recognizer runs 10 comparisons in 2 seconds. If

you don’t want to optimize your code, you may choose to automate the

comparisons. Use a program to automatically set the comparisons of all

combinations, run it overnight will do the job too.

6. Question: Since my recordings of digits have some period of silence, the MFCC

matrix from the sound has many columns of NaNs. Would it affect the results?

How should I deal with that ? Thanks !

Answer: NaNs in matlab usually means infinity or some numbers that don't

make sense. Remove these numbers from the sequence.

7. Question: For question 5, besides cutting the sound file, do we need preprocess

the input sound by pre-emphasis, hamming window and so on?

Answer: No need to do it by the students, these preprocessing are done

automatically by the tool in

http://www.mathworks.com/matlabcentral/fileexchange/32849-htk-mfccmatlab

8. Question: There is a problem when i just change the name of the file in the

example.m(in the mfcc folder). I changed nothing except the name sp10.wav to

my own file. and once i changed it back the program is ok.

9. gf

Answer: I guess your soudn file is stereo and has two sound track, the

program does like it. Solutions:

1) you may use the tool in www.goldwave.com to make it a single track. You

may send me the sound file ,than I will have a better idea of the problem.

2) Remove one column form your data.

Do it in matlab, if you have a stereo wav file as the follow

>> x=wavread( 'd:\sounds\stereo1_maid.wav');

>> size(x) %check the size of the sound, if it has two columns it is stereo

ans =

541957

2

>> x1=x(:,1); % use this to make it mon.

>> size(x1)

ans =

541957

1

10. Question: For the question 4(a), I find the algorithm in ppt slides that said,

7

Assignment 1 for CMSC5707: Advanced topics in AI - Audio signal processing (v4c)

8

“If the energy level and zero-crossing rate of 3 successive frames is

high it is a starting point.

After the starting point if the energy and zero-crossing rate for 5

successive frames are low it is the end point.”

And I don't quite understand these two sentences. Is that means the energy

is high and the zero-crossing rate is also high for continuous 3 frames,

and that is the starting point? What is the value of HIGH and LOW mean?

Is that value depends on ourselves wave?

Answer: HIGH or LOW depends on your wave, plot these waves out you will

see the difference between segments of with sound and no sound.

Get a segment , say 20 ms long , of a silent region and save it as ‘x’ in matlab

and run the following.

figure(1)

clf

plot(x) %the original wave

figure(2)

clf

plot(x.^2) %plot the energy.

Repeat the above with a segment of voiced sound. Then you can see the difference

between them. Use it to determine the threshold of determining High or Low.