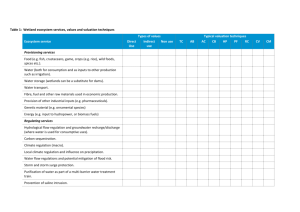

Characteristics and Criteria for Environmental Decision
