Lecture 5: Entropy: The whole world is messed up anyway

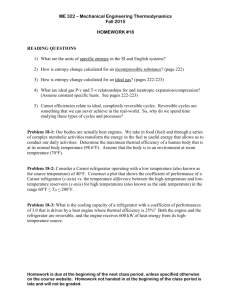

Review of lecture 4
o Application of the first law to biochemical reactions
o Oxidation of sucrose and glycine
o Concept of heat of formation
o Discussion of the second law and adiabatic systems

Today
o Carnot cycle and thermodynamic definition of entropy
o Efficiency of Carnot's engine
o Examples of calculation of entropy
o Boltzmann's definition of entropy
o Entropy variation with
   o Temperature
   o Pressure
   o Composition
   o Reaction

Adiabatic path

An adiabatic path is one along which the state of the system changes without any exchange of heat with the surroundings. According to the 1st law, $\Delta E = q + W$, and for an adiabatic process $q = 0$. For a small reversible change in volume, the system performs work on the surroundings, and the change in the temperature of the system (one mole of ideal gas) is given by:

$$C_V\,dT = -p_{ext}\,dV = -\frac{RT}{V}\,dV$$

$$C_V\,\frac{dT}{T} = -R\,\frac{dV}{V}$$

$$C_V \ln\frac{T_2}{T_1} = -R \ln\frac{V_2}{V_1} = R \ln\frac{V_1}{V_2}$$

Note that this result is valid only for a reversible change in state. For example, if the gas expands against $P_{ext} = 0$ (expansion into vacuum), the work done by the system is exactly zero. So from the first law, $\Delta E = 0$. Hence, for an ideal gas, there is no temperature change in the system. It is especially instructive to solve problem 8, chapter 2, which gives a good feel for the difference between adiabatic and isothermal paths.

Carnot Cycle

The Carnot cycle considers the following cyclic path for an engine, in which every step is reversible:

o State 1: ideal gas at $T_1, P_1, V_1$
o Isothermal expansion to state 2: gas at $P_2, V_2, T_1$
o Adiabatic expansion to state 3: gas at $P_3, V_3, T_2$
o Isothermal compression to state 4: gas at $P_4, V_4, T_2$
o Adiabatic compression back to state 1

[Figure: P-V diagram of the Carnot cycle through states 1, 2, 3 and 4, showing the isothermal and adiabatic paths.]

Calculation of q and W for the Carnot Cycle

o Path I: Isothermal reversible expansion of the gas at $T_1$. Since the volume of the gas increases, the system does work on the surroundings, so the work term $w_1$ (work done on the system) is negative. Recall that in an isothermal reversible expansion of an ideal gas the net change in the internal energy is zero.
$$\Delta E = 0 = q_1 + w_1 \quad\Rightarrow\quad q_1 = -w_1 = \int P\,dV = RT_1 \ln\frac{V_2}{V_1}$$

o Path II: Adiabatic reversible expansion ($q_2 = 0$).

$$\Delta E = w_2 = C_V (T_2 - T_1)$$

o Path III: Isothermal reversible compression at $T_2$.

$$\Delta E = 0 = q_3 + w_3 \quad\Rightarrow\quad q_3 = -w_3 = \int P\,dV = RT_2 \ln\frac{V_4}{V_3}$$

o Path IV: Adiabatic reversible compression ($q_4 = 0$).

$$\Delta E = w_4 = C_V (T_1 - T_2)$$

Thus the total heat absorbed and work done are:

$$q_{Total} = q_1 + q_2 + q_3 + q_4 = RT_1 \ln\frac{V_2}{V_1} + RT_2 \ln\frac{V_4}{V_3}$$

$$w_{Total} = w_1 + w_2 + w_3 + w_4 = -RT_1 \ln\frac{V_2}{V_1} - RT_2 \ln\frac{V_4}{V_3}$$

$$q_{Total} = -w_{Total} \quad\text{or}\quad \Delta E_{Total} = 0$$

Carnot's Insight

The key insight from Carnot's cycle is that although q depends on the path taken, the ratio $q_{rev}/T$ does not! This new quantity, later named entropy by Clausius, is a state variable. Let us calculate it as we travel along the Carnot cycle. Clearly it is zero for paths II and IV, since q = 0 on any adiabatic path. For the reversible isothermal paths I and III:

Path I:

$$\int \frac{dq}{T_1} = \frac{1}{T_1}\int dq = \frac{q_1}{T_1} = \frac{RT_1 \ln(V_2/V_1)}{T_1} = R \ln\frac{V_2}{V_1}$$

Path III:

$$\int \frac{dq}{T_2} = \frac{1}{T_2}\int dq = \frac{q_3}{T_2} = \frac{RT_2 \ln(V_4/V_3)}{T_2} = R \ln\frac{V_4}{V_3}$$

Adding the two, and using the adiabatic relation $C_V \ln(T_f/T_i) = R \ln(V_i/V_f)$ on paths II and IV:

$$\frac{q_1}{T_1} + \frac{q_3}{T_2} = R \ln\frac{V_2 V_4}{V_1 V_3} = R \ln\frac{V_2}{V_3} - R \ln\frac{V_1}{V_4} = -C_V \ln\frac{T_1}{T_2} + C_V \ln\frac{T_1}{T_2} = 0$$

Thus around a reversible cycle the total q/T is zero, that is, the total entropy change of the system is zero. This is the characteristic of a state variable.

Efficiency of the Carnot's Engine

In simplest terms, during paths I and II the engine performs work on the surroundings, and during paths III and IV the surroundings do work on the system. The total useful work corresponds to the area enclosed by these four paths in the P-V plot. On the other hand, the system expands isothermally during path I and absorbs heat from the surroundings. During path III, the system releases heat to the surroundings. Thus, according to the 1st law, the useful work done by the system must equal the difference between the heat absorbed (on path I) and the heat released (on path III). We can define the efficiency of the engine as the ratio of the useful work done to the heat absorbed from the surroundings (on path I) during the complete cycle.
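The heat and work terms for the four paths can be checked numerically. The following is a minimal Python sketch, not part of the original lecture: the function name `carnot_cycle`, the monatomic value $C_V = 3R/2$, and the temperatures and volumes in the usage lines are all illustrative assumptions. The volumes $V_3$ and $V_4$ are obtained from the adiabatic relation $C_V \ln(T_f/T_i) = R \ln(V_i/V_f)$.

```python
import math

R = 8.314  # gas constant, J/(K·mol)

def carnot_cycle(T1, T2, V1, V2, Cv=1.5 * R):
    """Heat and work for one mole of ideal gas around a Carnot cycle.

    T1 > T2 are the hot and cold temperatures; V1 -> V2 is the isothermal
    expansion at T1. Cv = 3R/2 (monatomic gas) is an assumption made only
    for this illustration. Work terms follow the convention dE = q + w
    (w is work done ON the system).
    """
    # Adiabatic paths II and IV fix V3 and V4.
    k = (T1 / T2) ** (Cv / R)
    V3 = V2 * k
    V4 = V1 * k

    q1 = R * T1 * math.log(V2 / V1)   # path I: isothermal expansion
    w1 = -q1
    w2 = Cv * (T2 - T1)               # path II: adiabatic expansion, q2 = 0
    q3 = R * T2 * math.log(V4 / V3)   # path III: isothermal compression
    w3 = -q3
    w4 = Cv * (T1 - T2)               # path IV: adiabatic compression, q4 = 0

    q_total = q1 + q3
    w_total = w1 + w2 + w3 + w4
    return {
        "q_total": q_total,
        "w_total": w_total,
        "entropy_sum": q1 / T1 + q3 / T2,  # total q_rev/T around the cycle
        "efficiency": -w_total / q1,       # useful work / heat absorbed
    }

# Illustrative numbers only (temperatures in K; volumes in any common unit).
result = carnot_cycle(T1=500.0, T2=300.0, V1=1.0, V2=2.0)
for name, value in result.items():
    print(f"{name}: {value:.6f}")
```

Only the volume ratio $V_2/V_1$ matters for the heat terms, so the volume units are arbitrary as long as they are consistent.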
Note that the system releases heat on path III, meaning that not all of the heat absorbed is converted to useful work. With $w_{Total}$ the work done on the system, we find:

$$\eta = \frac{-w_{Total}}{q_1} = \frac{q_1 + q_3}{q_1} = 1 + \frac{q_3}{q_1} = 1 - \frac{T_2}{T_1}, \qquad \text{since } \frac{q_1}{T_1} + \frac{q_3}{T_2} = 0$$

Conclusion: an efficiency of 100% is possible only if the colder temperature $T_2$ is absolute zero! This is the earliest statement of the second law of thermodynamics!

What about irreversible processes?

As we have seen, the change in the state variable $q_{rev}/T$, the entropy, is identically zero around a cyclic, reversible path. The important question, therefore, is what happens to its value during an irreversible process. Consider 1 mole of ideal gas ($P_1$ = 2 atm, $T_1$, $V_1$) that expands isothermally to $P_2$ = 1 atm and $V_2 = 2V_1$. Two potential paths we may follow are:

1. Reversible expansion.
2. Irreversible expansion into vacuum.

Since the initial and final states of the system are identical in both cases, the entropy change of the system ($\int dq_{rev}/T$) must be the same. So the differences, if any, must occur in the surroundings.

Case I:

$$\Delta E = 0 = q + w \quad\Rightarrow\quad q = -w$$

$$w = -RT \ln\frac{2V}{V} = -RT \ln 2$$

$$\Delta S_{system} = \frac{q}{T} = R \ln 2 \qquad \text{(entropy change for the system)}$$

$$\Delta S_{surrounding} = -\frac{q}{T} = -R \ln 2 \qquad \text{(entropy change for the surroundings)}$$

$$\Delta S_{Total} = 0$$

For case II, the change in the entropy of the system must be identical, since entropy is a state variable. No work is done and no heat is exchanged, so:

$$\Delta S_{system} = R \ln 2, \qquad \Delta S_{surrounding} = -\frac{q}{T} = -\frac{\Delta E - w}{T} = 0, \qquad \Delta S_{Total} = R \ln 2$$

For irreversible processes, the total entropy of the system and surroundings increases: another statement of the 2nd law.

Boltzmann's Interpretation

The strictly mathematical concept of entropy was greatly enriched by Boltzmann, who argued that entropy is a measure of disorder. Thus, the contemporary statement of the 2nd law of thermodynamics is that total entropy is not conserved and increases continually. However, this does not mean that a negative change in the entropy of the system is impossible, provided that it is accompanied by an equal or larger increase in the entropy of the surroundings.
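The entropy bookkeeping for the isothermal expansion discussed above, along both the reversible path and the free expansion into vacuum, can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `isothermal_entropy_budget` and the temperature of 298 K are assumptions, not values from the lecture. The last call shows a reversible compression, where the entropy of the system decreases while that of the surroundings increases by the same amount.

```python
import math

R = 8.314  # gas constant, J/(K·mol)

def isothermal_entropy_budget(n, T, v_ratio, reversible=True):
    """Entropy changes (J/K) for n moles of ideal gas going isothermally
    from volume V to v_ratio * V.

    reversible=True : heat q = nRT ln(v_ratio) is exchanged with the surroundings.
    reversible=False: free expansion into vacuum (q = 0, w = 0); this model
                      only makes sense for v_ratio > 1.
    Returns (dS_system, dS_surroundings, dS_total).
    """
    if not reversible and v_ratio <= 1:
        raise ValueError("free expansion into vacuum requires v_ratio > 1")
    # Entropy is a state variable: the system value is the same on both paths.
    dS_system = n * R * math.log(v_ratio)
    q = n * R * T * math.log(v_ratio) if reversible else 0.0
    dS_surroundings = -q / T
    return dS_system, dS_surroundings, dS_system + dS_surroundings

# T = 298 K is an assumed value; the entropy changes do not depend on it.
print("reversible expansion :", isothermal_entropy_budget(1.0, 298.0, 2.0))
print("free expansion       :", isothermal_entropy_budget(1.0, 298.0, 2.0, reversible=False))
print("reversible compression:", isothermal_entropy_budget(1.0, 298.0, 0.5))
```

In the reversible cases the total comes out as zero to within rounding, while the free expansion leaves a total of $R \ln 2$ for one mole.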
Boltzmann argued:

$$S = k \ln(N)$$

where N is the number of ways the system can be arranged that are indistinguishable as far as an outside observer is concerned (Feynman). More recently, information theory has been applied to entropy:

$$S = k \ln(N) = -k \sum_i P_i \ln P_i$$

where $P_i$ is the probability of finding the system in state i. The two expressions agree when all N states are equally probable, $P_i = 1/N$.

Consider water. In ice, its molecules are confined to a crystal lattice and are, therefore, highly ordered. In the liquid state, water molecules are released from this confinement and hence are more disordered on the molecular scale. The degree of disorder increases even further in the vapor state. This increasing disorder on going from solid to liquid to vapor is reflected in the corresponding measured entropies: 41 J/(K·mol) (ice), 63 J/(K·mol) (liquid), 188 J/(K·mol) (vapor).

Temperature dependence of Entropy

Using the usual conditions, such as isochoric or isobaric paths, and taking the heat capacity as constant over the temperature range, we can see that:

$$\Delta S_V = \int \frac{dq}{T} = \int \frac{C_V\,dT}{T} = C_V \int \frac{dT}{T} = C_V \ln\frac{T_2}{T_1} \qquad \text{(isochoric path)}$$

$$\Delta S_P = \int \frac{dq}{T} = \int \frac{C_P\,dT}{T} = C_P \int \frac{dT}{T} = C_P \ln\frac{T_2}{T_1} \qquad \text{(isobaric path)}$$

Just as in the case of ΔH, the above formulae apply as long as the system remains in a single phase. If, on the other hand, the system undergoes a phase transition at constant temperature and pressure, the heat absorbed $q_P$ (the latent heat, L) equals the enthalpy of transition:

$$q_P = L = \Delta H_{Transition}, \qquad \Delta S_{Tr} = \frac{\Delta H_{Tr}}{T_{Tr}}$$

Thus the overall temperature dependence of the entropy includes the changes involved at the phase transition. For a substance that melts at $T_m$:

$$S(T) = S(0\,\text{K}) + \int_0^{T_m} C_P(s)\,\frac{dT}{T} + \frac{\Delta H_m}{T_m} + \int_{T_m}^{T} C_P(l)\,\frac{dT}{T}$$

Such an approach is valuable for calculating the entropy at an arbitrary temperature for a single-component system.

Pressure Dependence of Entropy

For solids and liquids, the entropy change with respect to pressure is negligible on an isothermal path. This is because the work done by the surroundings on liquids and solids is minuscule owing to the very small change in volume.
For an ideal gas we can readily calculate the dependence of the entropy on pressure as follows. On an isothermal path $\Delta E = 0$, so $dq_{rev} = -dw$:

$$dS = \frac{dq_{rev}}{T} = -\frac{dw}{T} = \frac{P\,dV}{T}$$

$$d(PV) = 0 = P\,dV + V\,dP \quad\Rightarrow\quad dV = -\frac{V\,dP}{P}$$

$$dS = -\frac{V\,dP}{T} = -nR\,\frac{dP}{P}$$

$$\Delta S = -nR \ln\frac{P_2}{P_1}$$

Entropy of Mixing

Consider two non-reacting gases A and B, initially separated from each other and at identical pressure P. If we allow them to mix, they will do so spontaneously, and it is difficult to separate them again. The mixture has the same pressure P, but we can think of this pressure as the sum of the partial pressures of A and B, $X_A P$ and $X_B P$. We can calculate the net change in the entropy of this system:

$$\Delta S_A = -n_A R \ln\frac{X_A P}{P} = -n_A R \ln(X_A)$$

$$\Delta S_B = -n_B R \ln\frac{X_B P}{P} = -n_B R \ln(X_B)$$

$$\Delta S_T = -R\,(n_A + n_B)\left[X_A \ln(X_A) + X_B \ln(X_B)\right]$$

This is a very important relation, used frequently in problems involving solutions.
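As a quick numerical illustration of the mixing relation, here is a minimal Python sketch. The function name `mixing_entropy` and the mole numbers in the usage lines are illustrative assumptions, not part of the lecture. It evaluates each component's contribution $-n_i R \ln(X_i)$ and sums them.

```python
import math

R = 8.314  # gas constant, J/(K·mol)

def mixing_entropy(n_a, n_b):
    """Entropy of mixing (J/K) for n_a and n_b moles of two non-reacting
    ideal gases that start at the same temperature and pressure.
    Both amounts must be positive.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both mole numbers must be positive")
    n_total = n_a + n_b
    x_a = n_a / n_total  # mole fraction of A
    x_b = n_b / n_total  # mole fraction of B
    dS_a = -n_a * R * math.log(x_a)  # A expands from P to partial pressure x_a * P
    dS_b = -n_b * R * math.log(x_b)  # B expands from P to partial pressure x_b * P
    return dS_a + dS_b

# Illustrative amounts: an equimolar mixture and an unequal mixture.
print(mixing_entropy(1.0, 1.0))
print(mixing_entropy(0.2, 0.8))
```

Because every mole fraction is below 1, each logarithm is negative and the mixing entropy is always positive, consistent with mixing being spontaneous. For the equimolar case the result equals $2R \ln 2$.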