Security Testing

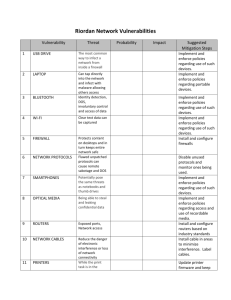

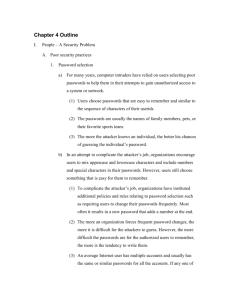

Testing contributes to software development in both a negative and a positive way: by detecting defects that need to be removed, and by providing confidence that the system performs correctly. Security testing, in contrast to other types of testing, is more about what the software ought not to do than about what it ought to do. Security issues arise from the fraction of software defects termed vulnerabilities, which could be exploited to adversely affect the confidentiality, integrity, or availability of a system or its data. Rather than being driven by customer- or user-supplied requirements, security testing is typically mapped against anticipated attacks on the system. This is why testers develop misuse (or abuse) cases, which describe the conditions under which attackers might threaten the system, in contrast to traditional use cases, which describe “normal” interaction patterns.

Just as traditional usability and reliability testing need a proper context for their design and interpretation, security testing needs its own context if it is to provide useful insights. Threat modeling (Reference 1) for security testing can be considered analogous to the development of operational profiles in reliability testing. Identifying potential threats to security is, however, inherently more complex and uncertain than working within a well-defined community of stakeholders, all of whom wish the system to work successfully. First, the value of the system (its appeal to attackers) must be characterized across a range of potential abusers. Further, different attackers will have different definitions of success, such as the extent to which they wish to remain undetected or anonymous. Any testing must always be considered as but one tool in support of risk management.
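To make the “ought not to do” framing concrete, the Python sketch below pairs one ordinary use case with a few misuse cases for a hypothetical file-upload helper. `resolve_upload_path`, `PathRejected`, and the attack strings are illustrative assumptions, not taken from the references; path traversal is used only as a familiar example of an attack the software must refuse.

```python
import os.path
import tempfile


class PathRejected(ValueError):
    """Raised when a requested path would escape the permitted directory."""


def resolve_upload_path(base_dir: str, user_path: str) -> str:
    """Hypothetical function under test: map a user-supplied file name to a
    location that must stay inside base_dir."""
    base = os.path.realpath(base_dir)
    candidate = os.path.realpath(os.path.join(base, user_path))
    # Reject anything whose resolved location lies outside base_dir.
    if os.path.commonpath([base, candidate]) != base:
        raise PathRejected(user_path)
    return candidate


def run_misuse_cases(base_dir: str) -> list[str]:
    """Return the attack inputs the function wrongly accepted (ideally none)."""
    # Use case: an ordinary file name resolves inside base_dir.
    ok = resolve_upload_path(base_dir, "report.txt")
    assert os.path.dirname(ok) == os.path.realpath(base_dir)

    # Misuse cases: each input describes something the software ought NOT to do.
    attacks = ["../../etc/passwd", "/etc/passwd", "sub/../../outside.txt"]
    accepted = []
    for attack in attacks:
        try:
            resolve_upload_path(base_dir, attack)
            accepted.append(attack)  # a security defect: the attack got through
        except PathRejected:
            pass  # expected: the attack was refused
    return accepted


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(run_misuse_cases(tmp))  # prints []
```

The positive check confirms what the software ought to do; the misuse-case loop reports every attack input that was wrongly accepted, so an empty list is the passing result.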
Recent guidance from the National Institute of Standards and Technology (Reference 2), for instance, places security testing within the larger context of security control assessment, defined as “the testing and/or evaluation of the management, operational, and technical security controls to determine the extent to which the controls are implemented correctly, operating as intended, and producing the desired outcome with respect to meeting the security requirements for an information system or organization.” Unfortunately, security requirements are not typically specified, and security expectations are not usually aligned explicitly with organizational needs and strategies in the way the figure below, from Reference 2, idealizes.

Information Security Requirements Integration (Reference 2)

Instead, the challenge all too often is simply to understand where the organization is most vulnerable and then to design and conduct security testing accordingly. As with any other testing, limits on schedule and budget force prioritization in order to maximize the value of the testing.

Another NIST publication (Reference 3) emphasizes various limitations of the testing approach and indicates that testing is best combined with a wider array of assessment techniques. For instance, “testing is less likely than examinations to identify weaknesses related to security policy and configuration.” Reference 3 characterizes penetration testing as a target vulnerability validation technique, alongside “password cracking” and “social engineering”. Indeed, the greatest weakness in any security configuration is likely to be the human element, and testing should assess exposures due to attackers acquiring insider knowledge or impersonating legitimate users. To the extent that security measures consist primarily of building defensive barriers, the prerequisite for any security assessment would be penetration testing.
This type of testing is meant to determine the capabilities required to breach those barriers. A helpful historical survey (Reference 4) asserts that “penetration testing has indeed advanced significantly but is still not as useful in the software development process as it ought to be” due to a number of limitations. Most penetration testing is done far too late in the software development life cycle and without sufficient sensitivity to the wider range of business risks. Traditional organizational responsibilities and reporting chains also mean that test result analyses “generally prescribe remediation at the firewall, network, and operating system configuration level … [which are not] truly useful for software development purposes.” Reference 4 provides helpful discussions of specific vulnerability scanning and testing tools (host- or network-based) and techniques. It advocates penetration testing that is less “black box” (ignorant of system internals) and more “clear box” (sometimes called “white box”), in which design and implementation details are visible to the tester, allowing exploration of more potential weak spots.

Note that “white box” testing is not to be confused with “white hat” testing. Security testing is often differentiated as being conducted either overtly (“white hat”) or covertly (“black hat”). A more complex representation (with less standard terminology) of the possible permutations of mutual knowledge between target and attacker is shown in this figure from Reference 5.

Target-Attacker Matrix (Reference 5)

See Reference 6 for further details on design-based security testing, which may involve analyzing data flow, control flow, information flow, coding practices, and exception and error handling within the system. It recommends test-related activities across the development life cycle:
o Initiation Phase should include preliminary risk analysis, incorporating the history of previous attacks on similar systems.
o Requirements Phase involves establishing test management processes and conducting more detailed risk analysis.
o Design Phase allows test resources to be focused on specific modules, such as those designed to provide risk mitigation.
o Coding Phase permits functional testing to begin at the unit level as individual modules are implemented.
o Testing Phase moves from unit testing through integration testing to complete system testing.
o Operational Phase may begin with beta testing and continues to require attention, as deployment may involve configuration errors or encounters with unexpected aspects of the operational environment.

See Reference 7 for an extensive discussion of software assurance tools and techniques, with links to numerous other resources and to a reference dataset of security flaws and associated identification test cases.

No measures of security test coverage seem particularly helpful. One could test against a “top twenty-five” list of known vulnerabilities (Reference 8), but no Pareto-like analysis has been published to indicate whether exploiting the “top twenty-five” accounts for 80% of security breaches, or 8%, or any other proportion. Similarly, one might wish to engage in security growth modeling, analogous to reliability growth modeling (Reference 9), but little empirical data and no significant supporting analyses have been published.

Threat modeling explores a range of possible attackers, all with different capabilities and incentives. These characteristics might be helpfully profiled in terms of knowledge, skills, resources, and motivation:
o What is the distribution of knowledge about existing vulnerabilities?
o How likely is an attacker to possess the skills required to exploit a given vulnerability?
o How extensive are the resources (access, computing power, etc.) that might be brought to bear?
o What motivations would keep a given attacker on task through successful completion of the attack?
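The four dimensions above, which the following paragraph also applies to calibrating test results, can be recorded in the same shape for attackers and for test cases. The Python sketch below is only an illustration: the 0 to 3 scale, the `Profile` record, and the "meets or exceeds on every dimension" rule in `could_reproduce` are assumptions, not part of any referenced method.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Profile:
    """Hypothetical 0-3 ordinal levels (0 = none, 3 = expert or extensive)."""
    knowledge: int   # knowledge of the vulnerability
    skills: int      # skill needed (test case) or available (attacker)
    resources: int   # access, computing power, and so on
    motivation: int  # persistence needed to see the attack through


def could_reproduce(attacker: Profile, test_case: Profile) -> bool:
    """Assumed rule: an attacker could reproduce a test result only if they
    meet or exceed what the test case required on every dimension."""
    return all(
        getattr(attacker, f.name) >= getattr(test_case, f.name)
        for f in fields(Profile)
    )


# A test breach that relied on insider knowledge but little else.
breach = Profile(knowledge=3, skills=1, resources=1, motivation=1)
insider = Profile(knowledge=3, skills=1, resources=2, motivation=2)
outsider = Profile(knowledge=1, skills=3, resources=3, motivation=3)

print(could_reproduce(insider, breach))   # True
print(could_reproduce(outsider, breach))  # False
```

Recording attackers and test cases in one shape makes the comparison direct: a highly skilled outsider still fails the check here because the breach depended on insider knowledge. A real assessment would likely need richer scales and weighting than a simple all-dimensions rule.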
Results of test cases need to be considered with more nuance than simply noting the success or failure of breaching security. They must be calibrated in terms of these same aspects:
o What knowledge about a given vulnerability was assumed in the test case?
o What specific skills and skill levels were employed within the test?
o How extensive were the resources required to execute the test?
o What motivations of an attacker would be sufficient to persist and produce a similar result?

Risk-based security testing (Reference 10) is concerned not simply with the probability of a breach but, more importantly, with the nature of any breach. What are the goals of different attackers, and what might be the consequences of their actions? A range of security tests might, for instance, indicate the probability of a successful denial-of-service attack as 20%, of a confidentiality violation as 10%, and of an undetected data integrity manipulation as 5%. In each case, risk exposure is the probability of occurrence multiplied by the potential loss. If Attacker A were interested in extorting protection payment to forgo a $1,000,000 opportunity cost due to website unavailability, then the business would face a risk exposure of 20% of that potential loss, or $200,000. Attacker B, intent on revealing confidential information that would cost $5,000,000 in regulatory fines and legal expenses, would represent a risk exposure of 10% of that value, or $500,000. Finally, if a competitive advantage of $20,000,000 might be gained by Attacker C successfully corrupting business-sensitive data, then that risk exposure would be the greatest, $1,000,000, even though 5% is the lowest probability of occurrence.

The next step would be to analyze the return on security investment and allocate resources accordingly. Reducing risk exposure might be accomplished by any combination of reducing the probability of occurrence (risk avoidance) and reducing the consequences should the event occur (risk mitigation). Consider defending against Attacker A.
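The exposure figures for Attackers A, B, and C, and the two $25,000 investment options against Attacker A discussed next, all rest on two small formulas: risk exposure is probability times potential loss, and the return ratio is the reduction in risk exposure divided by the amount invested. A minimal Python sketch reproducing those figures follows; the function names are hypothetical, and probabilities are kept as whole percentages so the arithmetic stays exact.

```python
def risk_exposure(probability_pct: int, loss: int) -> float:
    """Risk exposure = probability of occurrence x potential loss ($)."""
    # Whole-percent probabilities keep the division exact for these figures.
    return loss * probability_pct / 100


def return_ratio(exposure_before: float, exposure_after: float, investment: int) -> float:
    """Reduction in risk exposure per dollar invested."""
    return (exposure_before - exposure_after) / investment


# (probability %, potential loss $) for the three attackers in the text
scenarios = {
    "A: denial of service": (20, 1_000_000),
    "B: confidentiality violation": (10, 5_000_000),
    "C: data integrity manipulation": (5, 20_000_000),
}
for name, (pct, loss) in scenarios.items():
    print(f"{name}: ${risk_exposure(pct, loss):,.0f}")

budget = 25_000
baseline = risk_exposure(20, 1_000_000)
# Risk avoidance: probability of a successful attack drops from 20% to 15%.
avoidance = return_ratio(baseline, risk_exposure(15, 1_000_000), budget)
# Risk mitigation: loss per successful attack drops by $400,000.
mitigation = return_ratio(baseline, risk_exposure(20, 1_000_000 - 400_000), budget)
print(f"avoidance ratio {avoidance:.1f}, mitigation ratio {mitigation:.1f}")
# prints "avoidance ratio 2.0, mitigation ratio 3.2"
```

Applying the same two functions to any proposed mix of avoidance and mitigation gives a like-for-like comparison of return ratios.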
Perhaps $25,000 is budgeted for security improvement. If that amount were invested in risk avoidance, say by strengthening website defenses, it might reduce the probability of a successful denial-of-service attack from 20% to 15%. That represents a risk exposure reduction of 5% of the potential $1,000,000 loss, or $50,000, yielding a return on investment of $50,000/$25,000, a ratio of 2. An alternative investment in risk mitigation, say by decreasing incident recovery time, might lower the cost of a successful attack by $400,000. That return on investment would be calculated as a risk exposure reduction of $80,000 (20% of $400,000, given the unchanged 20% probability of occurrence) divided by the $25,000 allocated, a more attractive ratio of 3.2. Of course, an even better return might be found in some optimal combination of investments in both risk avoidance and risk mitigation.

Reference 10 concludes that “although it is strongly recommended that an organization not rely exclusively on security test activities to build security into a system, security testing, when coupled with other security activities performed throughout the SDLC, can be very effective in validating design assumptions, discovering vulnerabilities associated with the application environment, and identifying implementation issues that may lead to security vulnerabilities.”

References
1. Frank Swiderski and Window Snyder. Threat Modeling. Redmond, Washington: Microsoft Press, 2004.
2. NIST Special Publication 800-39, Managing Information Security Risk: Organization, Mission, and Information System View (March 2011).
3. NIST Special Publication 800-115, Technical Guide to Information Security Testing and Assessment (September 2008).
4. Kenneth R. van Wyk. Adapting Penetration Testing for Software Development Purposes. (https://buildsecurityin.us-cert.gov/bsi/articles/best-practices/penetration/655-BSI.html)
5. Institute for Security and Open Methodologies. OSSTMM 3 – The Open Source Security Testing Methodology Manual. (http://www.isecom.org/mirror/OSSTMM.3.pdf)
6. Girish Janardhanudu. White Box Testing. (https://buildsecurityin.us-cert.gov/bsi/articles/best-practices/white-box/259-BSI.html)
7. Software Assurance Metrics And Tool Evaluation (SAMATE). (http://samate.nist.gov/)
8. 2011 CWE/SANS Top 25 Most Dangerous Software Errors. (http://cwe.mitre.org/top25)
9. M. R. Lyu, Ed. Handbook of Software Reliability Engineering. McGraw-Hill and IEEE Computer Society Press, 1996.
10. C. C. Michael and Will Radosevich. Risk-Based and Functional Security Testing. (https://buildsecurityin.us-cert.gov/bsi/articles/best-practices/testing/255-BSI.html)
11. Julia H. Allen, Sean Barnum, Robert J. Ellison, Gary McGraw, and Nancy R. Mead. Software Security Engineering: A Guide for Project Managers. Upper Saddle River, N.J.: Addison-Wesley, 2008.
12. Chris Wysopal, Lucas Nelson, Dino Dai Zovi, and Elfriede Dustin. The Art of Software Security Testing: Identifying Software Security Flaws. Upper Saddle River, N.J.: Addison-Wesley, 2007.
13. Software Security Testing. Software Assurance Pocket Guide Series: Development, Volume III (Version 1.0, May 21, 2012). (https://buildsecurityin.us-cert.gov/sites/default/files/software-securitytesting_pocketguide_1%200_05182012_PostOnline.pdf) Note that the PDF link on the series page (https://buildsecurityin.us-cert.gov/swa/software-assurance-pocket-guide-series#development) is incorrect.

Software security testing validates the secure implementation of a product, thus reducing the likelihood of security flaws being released and discovered by customers or malicious users. The goal is not to "test in security," but to validate the robustness and security of the software products prior to making them available to customers, and to prevent security vulnerabilities from ever entering the software. This volume of the pocket guide describes the most effective security testing techniques, their strengths and weaknesses, and when to apply them during the Software Development Life Cycle.