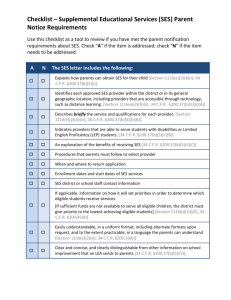

Supplemental educational services are a component of Title I of the

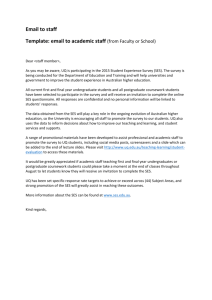
