Chapter 1 - Sacramento


NEURAL NETWORKS NON-LINEAR SCALING

Alok Bhaskar Nakate

B.E., Pune University, India, 2006

PROJECT

Submitted in partial satisfaction of

the requirements for the degree of

MASTER OF SCIENCE

in

COMPUTER SCIENCE

at

CALIFORNIA STATE UNIVERSITY, SACRAMENTO

SPRING

2011

NEURAL NETWORKS NON-LINEAR SCALING

A Project

by

Alok Bhaskar Nakate

Approved by:

__________________________________, Committee Chair

V. Scott Gordon, Ph.D.

__________________________________, Second Reader

Kwai Ting Lan, Ph.D.

____________________________

Date


Student: Alok Bhaskar Nakate

I certify that this student has met the requirements for format contained in the University format

manual, and that this project is suitable for shelving in the Library and credit is to be awarded for

the Project.

__________________________, Graduate Coordinator

Nikrouz Faroughi, Ph.D.

Department of Computer Science


________________

Date

Abstract

of

NEURAL NETWORKS NON-LINEAR SCALING

by

Alok Bhaskar Nakate

Training a neural network with the backpropagation algorithm is a systematic process for modeling a given set of data. This training process involves, among other things, scaling the input and output datasets provided to the neural network. Scaling is required because real-world datasets might not lie in the range [0, 1], whereas neural networks work with data only in the range [0, 1], i.e. the neurons fire or they do not. Linear scaling is typically used to achieve this, but for certain datasets it can make it difficult for the neural network to properly differentiate between values that are close together.

This project shows how non-linear median scaling can be applied to the training datasets and compares its performance against linear scaling on a variety of training datasets, both for speed of learning and for subsequent ability to generalize. The project demonstrates that introducing non-linear scaling into backpropagation and applying it to the various datasets improves performance to some extent.

_______________________, Committee Chair

V. Scott Gordon, Ph.D.

_______________________

Date


ACKNOWLEDGEMENTS

I would like to thank Professor Scott Gordon, who helped me choose the topic for this project and spent countless hours guiding me through it. I also thank him for his valuable advice and for reviewing the project report and providing suggestions. I would also like to thank Professor Kwai Ting Lan for reviewing this project report.

Finally, I would like to thank my numerous friends who endured this long process with

me, always offering support.


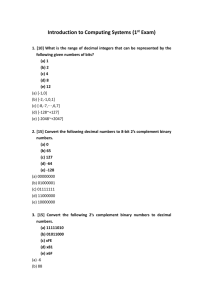

TABLE OF CONTENTS

Acknowledgements

List of Tables

List of Figures

Chapter

1. INTRODUCTION

2. BACKGROUND

2.1. Neural Networks

2.2. Artificial neuron

2.3. Backpropagation algorithm

2.3.1. Criteria

2.3.2. Learning rate

2.3.3. Generalization

2.4. Linear scaling

2.4.1. Drawback of the linear scaling

3. PROPOSED SOLUTION

3.1. Scaling in backpropagation

3.1.1. Initialization

3.1.2. Scaling

3.1.3. Training

3.1.4. Un-scaling process

3.2. Which Neural Network elements are scaled?

3.3. Scaling range

4. EXPERIMENTAL METHODOLOGY

4.1. Datasets

4.1.1. Minimum weight steel beam problem

4.1.2. Two-Dimensional projectile motion datasets

4.1.3. Wine recognition data

4.2. Measuring performance

5. RESULTS

5.1. Result for minimum weight problem datasets

5.2. Result for two-dimensional projectile motion dataset

5.3. Result for wine recognition data

6. CONCLUSION

7. FUTURE WORK

Appendix A Source Code

Appendix B Datasets

Appendix C Results

Bibliography

LIST OF TABLES

Table 1 Linear scaling example

Table 2 Linear scaling with large difference in datasets

Table 3 Training datasets for steel beam design problem

Table 4 Testing datasets for steel beam design problem

Table 5 Example training datasets for 2-D projectile data motion

Table 6 Example testing datasets for 2-D projectile data motion

Table 7 Example training datasets for wine recognition

Table 8 Example testing datasets for wine recognition

Table 9 Result for minimum weight problem

Table 10 Result for 2-D projectile motion problem

Table 11 Result for wine recognition data

LIST OF FIGURES

Figure 1 Artificial neural network

Figure 2 Artificial neuron with activation function F

Figure 3 Sigmoidal activation function

Figure 4 Backpropagation algorithm

Figure 5 Neural network with large difference in input datasets

Figure 6 Formula for non-linear scaling

Figure 7 Formula for linear scaling

Figure 8 Formula for non-linear scaling in detail

Figure 9 Formula for scaling criteria value

Figure 10 Formula for non-linear unscaling

Figure 11 Formula for linear unscaling

Chapter 1

INTRODUCTION

Neural networks are biologically inspired and are often capable of modeling complex real-world functions. Artificial neural networks are composed of elements that behave in a manner resembling biological neurons and are organized in a way inspired by the anatomy of the brain [1]. An interesting characteristic of neural networks is that they can learn their behavior by example: when a set of inputs is applied, they self-adjust to produce responses consistent with the desired outputs. When a set of training data is applied to a neural network, it learns that particular problem. When the trained network later encounters unknown inputs from similar circumstances, it can often respond appropriately; this characteristic is known as generalization [4]. To do so effectively, the network must first be trained with training datasets that include the inputs and the corresponding desired outputs. The neural network then tries to minimize the error between the desired output specified in the training data and the actual output it produces. This is often a slow process, so researchers are interested in finding fast training methods.

One of the well-known methods of training a neural network is Backpropagation.

Backpropagation is a systematic method for training multilayer neural networks. When a

set of inputs is applied to the neural network, the backpropagation algorithm adjusts the

weights based on the resulting error.


Backpropagation usually performs scaling of input (optional) and output values.

The reason for scaling the training datasets is that the artificial neurons only output in the

range [0, 1]. Real world problems often include data outside this range. Backpropagation

typically uses linear scaling before the algorithm starts its training.

However, this linear scaling approach often fails when the input or output values

are clustered and not adequately distributed. If all the datasets are scaled linearly, this can

result in training data that is clustered too closely together for the network to model.

Therefore, this project will introduce a new approach of nonlinear scaling, and test this

method on a variety of problems with different training datasets.


Chapter 2

BACKGROUND

2.1. Neural Networks

Artificial neural networks are inspired by the biological neurons that reside in the

brain’s Central Nervous System. The neural network has the capability for solving real

world problems by building a computational model and processing the information

provided to it. It can be called an adaptive system that changes its structure based on the

information that flows through the network during its learning. Learning implies that

neural networks are capable of changing their input/output behavior as a result of changes

in the environment.

Neural networks learn by example. Training sets are provided to the neural

network and then by the use of training algorithms like backpropagation, they learn to

replicate it. The neural networks operate in three scenarios, which are as follows:

1. During the training process of the neural network, the datasets including both the

input and the desired output are provided. The network then adjusts the weights with

the help of the algorithm (backpropagation), so that it gets to the desired output. This

process may require iterations to reach to the desired output. This is also known as the

correct/known behavior. Therefore, the neural networks learn from this process when

provided with an appropriate training dataset producing the correct output.

2. After training, test datasets are applied to the neural network to determine whether the designed network learned successfully. The test datasets also include the input and the desired output. The only difference between the first step and this step is that instead of iterating to adjust the weights, the network uses the trained weights to try to reach the desired output for similar, untrained cases.

3. In the third scenario, only input datasets are provided to the designed neural network

expecting that the neural network will produce the desired output since it has learnt

from the above steps. This scenario can be a real-life example, for instance

implementing the trained neural network in a field where the desired outputs are

unknown and the only data available is input data.

The main advantage of neural networks lies in their ability to represent both linear

and non-linear relationships and in their ability to learn these relationships directly from

the data being modeled.

The basic artificial neural network is shown in Figure 1 below. It consists of four

main components:

1. The input layer: All the artificial neurons accept the inputs given to the neural

networks.

2. The Output layer: All the results are produced at this layer.

3. One or more hidden layers: The computations occur here, described in section 2.2.

4. The weights: The values at each link between the nodes. Weights get adjusted in the

network according to the error calculations.


Figure 1: Artificial neural network

In the traditional computational (non-neural network) approach, complex

problems can be solved by “divide and conquer”. The complex problems can be

decomposed into simpler or smaller elements so that it is easier to understand them.

These simple elements which are partially solved can then be gathered together to

produce the complex systems. However, in neural networks, the solutions are distributed

amongst the neurons. Each neuron computes and produces output and passes on to

another neuron or to the output layer. Often, the neural networks can modify their

behavior in response to changes in the environment, which is similar to the working of

the brain.


Some advantages of neural networks are:

1. A trained neural network can become an “expert” in the category of information it

has learned.

2. Adaptive learning: They have an ability to learn how to do tasks based on the data

given for training or initial experience.

3. Self-Organization: They can create their own representation of information that

they receive during the learning process.

4. Flexibility to a large number of domains

2.2. Artificial neuron

The artificial neuron is an abstraction of the first-order characteristics of the biological neuron and represents a mathematical unit in the neural network model. Neurons thus form a computational model inspired by the natural biological neuron. Figure 2 below shows the neuron used as the fundamental building block for the backpropagation algorithm. It accepts inputs from the datasets at the input layer or from other neurons, multiplies each input by its corresponding weight, and adds the products to produce a weighted sum. This weighted sum, called NET, must be calculated for each neuron in the network. After NET is calculated, it is passed to the activation function to determine to what degree the neuron fires, thereby producing the signal OUT [1].

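[Figure: inputs X1, X2, X3 with weights W1, W2, W3 feed a summation block computing NET = XW, followed by the activation function F (threshold), which produces OUT]

Figure 2: Artificial neuron with activation function F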

As shown in figure 2, X1, X2 and X3 are the inputs given to the neuron. W1, W2 and W3 are the weights applied to the inputs; they are set initially and adjusted later during training. The summation block accepts these inputs and weights, calculates the weighted sum NET, and passes it on to the activation function.

Each input is multiplied by its corresponding weight, and all the weighted inputs are then summed to determine the activation level of the neuron. In figure 2, the “F” block is the activation function. This activation function F processes the NET signal to produce the neuron’s output signal, OUT.

OUT = F(NET)

In some neural network models, F may be a threshold function:

OUT = 1 if NET > T

OUT = 0 otherwise

Here, T is a constant threshold value. Alternatively, F may be a function that more accurately simulates the non-linear characteristic of the biological neuron and permits more general network functions [1].

However, it is more common to use a continuous function instead of a hard threshold. The F processing block compresses the range of NET so that OUT never exceeds some low limits regardless of the value of NET; for this reason it is sometimes known as the squashing function. The squashing function is often chosen to be the logistic function, or sigmoid (S-shaped), which is represented mathematically as:

$$\mathrm{OUT} = F(\mathrm{NET}) = \frac{1}{1 + e^{-\mathrm{NET}}}$$

As shown in the figure 3, the squashing function or the sigmoid compresses the

range of NET so that the OUT lies between zero and one. This is why the calculated

output of a neural network can lie only between zero and one. The advantage of using the

logistic function rather than a hard threshold is that the logistic function is differentiable

which led to the derivation of the backpropagation algorithm [1].

Figure 3: Sigmoidal activation function
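The neuron computation described in this section can be sketched in a few lines of Python. This is a minimal illustration only, not the project's source code; the function names and the input and weight values are assumptions chosen for the example.

```python
import math

def net_input(inputs, weights):
    """Weighted sum NET: each input times its weight, then summed."""
    return sum(x * w for x, w in zip(inputs, weights))

def threshold(net, t=0.0):
    """Hard threshold activation: fires (1) only when NET exceeds T."""
    return 1 if net > t else 0

def sigmoid(net):
    """Logistic squashing function: maps NET into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-net))

x = [1.0, 0.5, -2.0]   # X1, X2, X3 (made-up values)
w = [0.4, -0.6, 0.1]   # W1, W2, W3 (made-up values)
net = net_input(x, w)  # 0.4 - 0.3 - 0.2
print(net, threshold(net), sigmoid(net))
```

The threshold version can only answer 0 or 1, while the sigmoid returns a graded value between the two, which is what makes it differentiable.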

In biological terms, the inputs of an artificial neuron resemble the synapses of a biological neuron. Each input is multiplied by a weight, which resembles the strength of the respective signal in a natural neuron, and the result is then processed by the mathematical function that determines the activation.

Now, the weights of the artificial neurons can be adjusted to obtain the desired

output with the specific inputs provided. So depending on the adjustment of the weights,

the summation in the computational block will be different.

Normally, the artificial neural network is not composed of a single neuron. Typical

neural networks can have as few as half a dozen or as many as hundreds or thousands of

neurons involved in complicated applications. There are various algorithms which adjust

the weights according to the desired output and this process is called training an artificial

neural network. Backpropagation algorithm is one of the popular training methods.

2.3. Backpropagation algorithm

Backpropagation is a systematic method for training multilayer artificial neural networks. It is a supervised learning method: it adjusts the weights after comparing the calculated result against the result expected in the training datasets. The difference between the produced output and the desired output is known as the error. The algorithm uses this error to modify the weights and train the network to reach the expected result as quickly as possible. So, when datasets are provided to a neural network, it systematically trains itself so as to respond intelligently to the inputs.

Backpropagation algorithm is mainly applied to feed forward neural networks. As

the name suggests, the artificial neural networks send their calculations forward and the

errors are propagated backwards.

The backpropagation algorithm follows an iterative process, i.e. in each iteration

the weights of nodes are modified using data from the training data sets. The main

aim/objective of backpropagation algorithm is to reduce the error by adjusting the

weights throughout the entire network. The error is the difference between the actual

result calculated by the neural networks and the desired output. Often, the data that are

provided to the neural network are scattered. Hence, before the backpropagation executes

the learning process, these scattered data are scaled to some desired range.

The objective of training the neural network using backpropagation is to adjust the weights so that the application of a set of inputs produces the desired set of outputs. The input-output sets are often referred to as vectors. The training process assumes that each input vector is paired with a target vector, the desired output; together these are called a training pair [1]. The neural network is trained over a number of training pairs.

The backpropagation process is shown in figure 4.

    Initialize the weights in the network to a small random number
    Do
        For each training pair (di, do) in the training sets
            // di is the desired input and do is the desired output.
            // this is the feed forward pass
            O = Neural_network_outputs (network, di)
            Calculate error (do - O) at the output layer for each output in training pair
            Compute delta for all weights from the hidden layer to output layer
            Compute delta for all the weights from input layer to hidden layer
            // above two steps are the backward pass
            Update the weights in the Network
    Until all datasets classified correctly and the desired criteria is satisfied
    Return the set of trained network weights

Figure 4: Backpropagation algorithm
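The loop in figure 4 can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the project's implementation: it uses a single hidden layer, no bias terms, sigmoid units throughout, and per-pair weight updates. The names `forward` and `train`, the default parameter values, and the example training pairs are all hypothetical.

```python
import math
import random

def sigmoid(net):
    return 1.0 / (1.0 + math.exp(-net))

def forward(w_ih, w_ho, inputs):
    """Feed-forward pass; returns hidden activations and outputs."""
    hidden = [sigmoid(sum(x * w for x, w in zip(inputs, row))) for row in w_ih]
    outputs = [sigmoid(sum(h * w for h, w in zip(hidden, row))) for row in w_ho]
    return hidden, outputs

def train(pairs, n_hidden=3, rate=0.5, criteria=0.05, max_epochs=20000, seed=1):
    rng = random.Random(seed)
    n_in, n_out = len(pairs[0][0]), len(pairs[0][1])
    # Initialize the weights to small random numbers.
    w_ih = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    w_ho = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
    for _ in range(max_epochs):
        worst = 0.0
        for d_in, d_out in pairs:
            hidden, out = forward(w_ih, w_ho, d_in)
            errors = [t - o for t, o in zip(d_out, out)]
            worst = max(worst, max(abs(e) for e in errors))
            # Backward pass: deltas at the output layer, then the hidden layer.
            delta_o = [e * o * (1 - o) for e, o in zip(errors, out)]
            delta_h = [h * (1 - h) * sum(delta_o[k] * w_ho[k][j] for k in range(n_out))
                       for j, h in enumerate(hidden)]
            # Update the weights in the network.
            for k in range(n_out):
                for j in range(n_hidden):
                    w_ho[k][j] += rate * delta_o[k] * hidden[j]
            for j in range(n_hidden):
                for i in range(n_in):
                    w_ih[j][i] += rate * delta_h[j] * d_in[i]
        if worst <= criteria:  # every output error seen this epoch is within the criteria
            break
    return w_ih, w_ho

# Hypothetical training pairs, already scaled into the sigmoid's output range.
pairs = [([0.0, 1.0], [0.9]), ([1.0, 0.0], [0.1])]
w_ih, w_ho = train(pairs)
for d_in, d_out in pairs:
    print(d_in, d_out, forward(w_ih, w_ho, d_in)[1])
```

The `max_epochs` cap is an added safeguard so the sketch always terminates, even when the criteria cannot be met.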

2.3.1. Criteria

This parameter indicates when the learning process should stop. Training terminates once the error of every output is within the criteria value.

2.3.2. Learning rate

This is a constant that affects the speed of learning. The mathematics of backpropagation assumes that small changes are made to the weights at each step of the error calculations. If the changes made to the weights are too large, the algorithm may bounce around the minimum of the error instead of settling into it, in which case it is necessary to reduce the learning rate. On the other hand, the smaller the learning rate, the more steps it takes to satisfy the criteria.
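The effect of the learning rate can be seen on a toy problem. The sketch below does not use a neural network; it applies plain gradient descent to the assumed error function E(w) = w², whose minimum is at w = 0, purely to illustrate the "bouncing" behavior. The function name and the two rates are illustrative choices.

```python
def descend(rate, steps=20, w=1.0):
    """Gradient descent on the toy error E(w) = w**2 (gradient 2*w)."""
    history = [w]
    for _ in range(steps):
        w -= rate * 2 * w
        history.append(w)
    return history

small = descend(rate=0.1)  # shrinks steadily toward the minimum at w = 0
large = descend(rate=1.1)  # overshoots: the sign flips and |w| grows each step
print(abs(small[-1]), abs(large[-1]))
```

With the small rate each step multiplies w by 0.8, so it converges; with the large rate each step multiplies w by -1.2, so it jumps across the minimum and moves further away.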

2.3.3. Generalization

If the training set is good, with examples that cover most or all of the possible inputs, and the neural network learns them all, then it is likely to generalize and successfully model other similar instances. This means that it will give the correct output for other inputs of the same application.

2.4. Linear scaling

The backpropagation algorithm is a supervised learning method in which a set of input datasets is applied to the network to train it, and test datasets are then applied to see how well it learned. If the network learns them all and some unknown input data are then applied to it, the network hopefully generalizes.

Often, the input and output datasets are not uniform, i.e. they do not have a fixed range, and they are rarely limited to the range [0, 1]. These datasets are usually distributed across a large range of values. Scaling is needed because the input and output values provided to the neural network can be arbitrary numbers, whereas the neurons can output data only in the range [0, 1], i.e. they fire or they do not fire.

Hence, linear scaling is applied to the output values of the training datasets, and optionally to the input values, to bring all the data into the desired range [0, 1] before backpropagation starts the learning process.

The formula for applying the linear scaling to each input and output training dataset provided to the neural network is:

$$X_i = \frac{X - X_{\min}}{X_{\max} - X_{\min}}$$

Where,

X = the value which is being scaled.

Xi = the scaled value for each dataset value (X).

Xmin = the minimum value of that particular input which is being scaled.

Xmax = the maximum value of that particular input which is being scaled.

Table 1 shows an example dataset and the scaled result. The first column is the original training dataset and the second column is the scaled value for each value on the left.

The scaled values lie in the range [0.05, 0.95] instead of the theoretical range [0, 1]. As shown in figure 3, the sigmoid function approaches 0 and 1 but never actually reaches them; hence the linear scaling formula is modified to scale all the datasets from 0.05 to 0.95. As shown in table 1, the smallest value in the first column is 11.02, so its scaled value is 0.05, and the largest value is 14.83, so its scaled value is 0.95.

Table 1: Linear scaling example

| Datasets provided to Neural Network | Linear Scaled values |
|---|---|
| 14.23 | 0.807 |
| 13.19 | 0.563 |
| 14.36 | 0.841 |
| 13.24 | 0.573 |
| 14.19 | 0.800 |
| 14.39 | 0.845 |
| 14.06 | 0.765 |
| 14.83 | 0.95 |
| 11.02 | 0.050 |
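A small Python sketch of linear scaling into the range [0.05, 0.95] is given below. The exact form of the modified formula is not shown in this section, so the expression used here (the basic formula stretched by 0.9 and shifted by 0.05) is an assumption, as is the handling of a constant column. Its results reproduce the endpoints of table 1 but need not match the other entries digit for digit.

```python
def linear_scale(x, x_min, x_max, lo=0.05, hi=0.95):
    """Map x from [x_min, x_max] linearly onto [lo, hi]."""
    if x_max == x_min:  # guard against division by zero (assumed handling)
        return (lo + hi) / 2
    return lo + (hi - lo) * (x - x_min) / (x_max - x_min)

column = [14.23, 13.19, 14.36, 13.24, 14.19, 14.39, 14.06, 14.83, 11.02]
x_min, x_max = min(column), max(column)
scaled = [linear_scale(x, x_min, x_max) for x in column]
print([round(s, 3) for s in scaled])
```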

2.4.1. Drawback of the linear scaling

For some training datasets that contain large differences, linear scaling brings the differences between values that are close together near to zero. The motivation for using backpropagation to train neural networks is to reduce the error between the output calculated by the network and the desired output in the datasets. However, if the differences between some of the input/output pairs provided to the neural network are so small that the pairs are hard to distinguish, the weight manipulations in backpropagation become tougher and require many more iterations to achieve the desired output.

To demonstrate this, let us consider the datasets in table 2. After applying the linear scaling formula to each of the values, we get the following scaled values.

Table 2: Linear Scaling with large difference in datasets

| Datasets provided to Neural Network | Linear Scaling values |
|---|---|
| 0.25 | 0.00016 |
| 0.15 | 0.050 |
| 0.75 | 0.0011 |
| 0.99 | 0.0014 |
| 600 | 0.95 |
| 430 | 0.7165 |
| 598 | 0.9966 |

According to the linear scaling formula, with Xmin = 0.15 and Xmax = 600:

For X = 0.15, Xi = 0.05

For X = 0.75, Xi = 0.0011

For X = 600, Xi = 0.95

Note that the table shows the minimum and maximum at the range endpoints 0.05 and 0.95, while the intermediate values appear to come from the unmodified formula, which maps onto [0, 1].

Figure 5: Neural networks with large difference in input datasets

In the figure 5, the width of the arrows represents the amount of weights applied

to each input datasets. The nodes with 0.15 values are thicker and the nodes with value

600 are thinner. From the above example, the difference between the datasets 0.15 and

600 is large, so the smaller values like 0.15, 0.75 are all scaled close to zero and this

adversely affects the ability of backpropagation to find weights that differentiate between

them.
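The collapse of the small values can be checked numerically. The sketch below applies the unmodified linear scaling formula (onto [0, 1]) to the values of table 2; the function and variable names are illustrative.

```python
def linear_scale(x, x_min, x_max):
    """Unmodified linear scaling onto [0, 1]."""
    return (x - x_min) / (x_max - x_min)

values = [0.25, 0.15, 0.75, 0.99, 600, 430, 598]
x_min, x_max = min(values), max(values)
scaled = {x: linear_scale(x, x_min, x_max) for x in values}

# Gap between two small values before and after scaling.
print(0.75 - 0.25, scaled[0.75] - scaled[0.25])
```

A gap of 0.5 in the original data shrinks to less than one thousandth after scaling, which is the difference the network would then have to resolve.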

This project explores whether this drawback can be reduced by applying non-linear scaling to the input/output datasets, i.e. by scaling the larger datasets to a smaller range and the smaller datasets to a larger range.

Chapter 3

PROPOSED SOLUTION

One way of making the scaling process non-linear is to scale the datasets according to the median of each input and output column. This approach might help resolve the scaling issue for datasets with large differences, because densely clustered values are compressed less and sparsely distributed values are compressed more. The formula for median scaling is shown in figure 6.

First, we find the median, minimum and maximum of each dataset, i.e. of each column in the training datasets provided to the neural network. Then the formula is applied to each value, linearly scaling the left and right halves of the data separately. For values on the right-hand side of the median, 0.5 is added to the computed value to shift it into the right half of the range, as shown in figure 6. Seen closely, each side (the values to the left of the median and the values to the right of it) is actually scaled linearly. However, the overall scaling is non-linear, because the two sides are scaled by different amounts depending on the median.

Figure 6: Formula for non-linear scaling
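The formula image is not reproduced in this text, so the following Python sketch is reconstructed from the description above and should be read as an assumption rather than the exact formula of figure 6: values up to the median are mapped linearly onto [0, 0.5], and values above it onto [0.5, 1]. The function name, the handling of a zero-width left half, and the use of the table 2 values as test data are illustrative choices.

```python
import statistics

def median_scale(x, x_min, x_med, x_max):
    """Piecewise-linear scaling: [x_min, x_med] -> [0, 0.5], [x_med, x_max] -> [0.5, 1]."""
    if x <= x_med:
        span = x_med - x_min
        return 0.5 if span == 0 else 0.5 * (x - x_min) / span
    span = x_max - x_med  # positive whenever x_med < x <= x_max
    return 0.5 + 0.5 * (x - x_med) / span

values = [0.25, 0.15, 0.75, 0.99, 600, 430, 598]
x_min, x_med, x_max = min(values), statistics.median(values), max(values)
for x in values:
    print(x, round(median_scale(x, x_min, x_med, x_max), 4))
```

Under this sketch the four small values are spread across the lower half of the range instead of being pushed together near zero, while the three large values share the upper half.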

3.1. Scaling in backpropagation

The backpropagation procedure with scaling is divided into four major steps. They are as follows:

1. Initialization: In this procedure, the neural network is defined and all the necessary parameters are set. The weights are initially set to small, random values.

2. Scaling: This process applies the scaling formula shown in figure 6 to the datasets provided to the neural network, to bring all the datasets into the desired range [0, 1].

3. Training: This process includes the forward pass and the error correction procedure for reaching the desired output. It iterates until all the datasets are classified correctly and the desired criterion is reached. (Described in figure 4.)

4. Un-scaling: This process reverses the scaling of step 2 for the output data calculated by the backpropagation algorithm and for the inputs that were scaled.

3.1.1. Initialization

1. Set training datasets and test datasets, i.e. initialize the number of rows and number of

columns in the datasets.

2. Design the neural network by defining the network topology, i.e. the number of nodes

with input, output and hidden layers.

3. Initialize the backpropagation parameters like criteria, learning rate.

4. Initialize the weights to some small random values.

3.1.2. Scaling

1. Initialize the extreme[] array. The extreme[] array holds three values for each input and output column: the minimum, the maximum, and the median.

2. Open the training case file and read all the data into an array Train[][].

3. For each column in array Train[][]

a. Find the minimum and maximum values and store them in extreme[0] and extreme[1].

4. For each input column

a. Scale only the input columns of Train[][] (not the output columns) with the linear scaling formula shown in figure 7, i.e. apply the formula to each value in the column. This scales the values into the range [0, 1].

Figure 7: Formula for linear scaling

5. Store each output column in a temporary array MedianArray[].

6. Sort MedianArray[] to find the median.

7. For each output column

a. Calculate the median value and store it in extreme[2].

8. For each output column

a. Scale the output values using the three values in the extreme[] array with the non-linear median scaling formula shown in figure 8, and store each scaled value in the Train[][] array.

Figure 8: Formula for non-linear scaling in detail
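The scaling steps above can be sketched as a single Python function. This is an illustrative sketch, not the project's source code: it assumes the training data is a list of rows with the input columns first, it computes the median for every column for simplicity (the steps above need it only for output columns), it scales onto [0, 1] rather than [0.05, 0.95], and the handling of constant columns is an assumption. The name `scale_training_set` and the example data are hypothetical.

```python
import statistics

def scale_training_set(train, n_inputs):
    """Scale input columns linearly and output columns by median scaling.

    Returns the scaled rows and, per column, extreme = [min, max, median].
    """
    columns = list(zip(*train))
    extremes = [[min(c), max(c), statistics.median(c)] for c in columns]
    scaled_rows = []
    for row in train:
        scaled = []
        for j, x in enumerate(row):
            lo, hi, med = extremes[j]
            if hi == lo:            # constant column (assumed handling)
                scaled.append(0.5)
            elif j < n_inputs:      # input column: linear scaling
                scaled.append((x - lo) / (hi - lo))
            elif x <= med:          # output column, left half
                scaled.append(0.5 * (x - lo) / (med - lo) if med > lo else 0.5)
            else:                   # output column, right half
                scaled.append(0.5 + 0.5 * (x - med) / (hi - med))
        scaled_rows.append(scaled)
    return scaled_rows, extremes

# Hypothetical training set: two input columns followed by one output column.
train = [[1.0, 10.0, 0.2], [2.0, 20.0, 0.5], [3.0, 40.0, 300.0]]
rows, extremes = scale_training_set(train, n_inputs=2)
print(rows)
print(extremes)
```

Returning the extreme values alongside the scaled rows keeps everything needed to reverse the scaling after training.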

3.1.3. Backpropagation algorithm

1. For each data training pair (di , do) in the training sets

a. Process the feed forward pass

b. For each output in training pair, calculate the error at the output layer

c. Scale the criteria value for each corresponding calculated output value. The criteria value is scaled to the range [0, 0.9], not [0.05, 0.95], using the formula in figure 9.

Figure 9: Formula for scaling the criteria value

d. Process the backward pass

i. Compute delta for all weights from the hidden layer to output layer.

ii. Compute delta for all the weights from input layer to hidden layer.

e. Update the weights in the network in a way that minimizes the error.

2. Repeat Step 1 for all datasets until they are classified correctly and within the desired

criteria.

3. Return the set of trained network weights.

3.1.4. Un-scaling process

1. After the weight adjustment process finishes, either because the outputs lie within the desired criteria or because the network reaches the defined number of iterations, the dataset values need to be scaled back to their original range. The formulas for reversing the scaling process are shown in figures 10 and 11.

i. For non-linear scaling back to original range:

Figure 10: Formula for non-linear unscaling


ii. For Linear scaling back to original range:

Figure 11: Formula for linear unscaling

2. Print the calculated Output

3. Exit the program
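The un-scaling step can be sketched as the inverse of the two scaling formulas. The following is a minimal sketch that follows the ScaleOutput and ScaleOutputGeneralise functions listed in Appendix A; the Extrema type and the names unscaleLinear and unscaleMedian are hypothetical names introduced here for illustration only.

```cpp
#include <cassert>
#include <cmath>

struct Extrema { double lo, hi, median; };  // hypothetical helper type

// Inverse of linear scaling into [0.05, 0.95].
double unscaleLinear(double y, const Extrema& e) {
    return e.lo + ((y - 0.05) / 0.9) * (e.hi - e.lo);
}

// Inverse of median scaling: outputs below 0.5 are mapped with the
// [minimum, median] half-range, outputs at or above 0.5 with the
// [median, maximum] half-range.
double unscaleMedian(double y, const Extrema& e) {
    if (y < 0.5) {
        return e.lo + ((y * 2.0 - 0.05) / 0.9) * (e.median - e.lo);
    }
    return e.median + (((y - 0.5) * 2.0 - 0.05) / 0.9) * (e.hi - e.median);
}
```

With the illustrative values minimum 0, maximum 100, and median 10, a network output of 0.25 is scaled back to 5 and an output of 0.975 is scaled back to 100.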

3.2. Which Neural Network elements are scaled?

In the above algorithm, non-linear median scaling is applied to the output datasets only, and the input datasets are scaled with the linear formula. The results are then compared against fully linear scaling applied to the same datasets, and the performance of the neural network is observed in both cases. Non-linear scaling is applied only to the outputs here, but scaling the inputs non-linearly would also be a useful experiment.


A criterion is a parameter used by the backpropagation algorithm to determine the termination point. Every calculated output error is compared with the criteria to see if training has succeeded. Because all the input and output datasets have been scaled, the criteria value must be scaled too, so that the values being compared lie within the same range. Hence, the criteria value for each calculated output is also scaled non-linearly.

3.3. Scaling range

All the formulas described above in the algorithm are theoretical formulas, so for implementation purposes the algorithm needs to be refined to ensure that the error correction calculation is correct. For instance, if the difference between Xmin and Xmax in the scaling formula is zero, the algorithm will encounter a divide-by-zero error in the actual implementation. Therefore, all the formulas need to be adjusted. As shown in the figures above, all the datasets are scaled (linearly or non-linearly) into the range [0.05, 0.95]. The reason is that the logistic function used in backpropagation cannot actually output a zero or a one; it only approaches these values (please refer to section 2.2 for an explanation of the logistic function).

In the median scaling formula, a value in the range [Median, Maximum] would otherwise produce a scaled value that falls in the same interval as a value in the range [Minimum, Median]. The formula is therefore refined so that scaled values for the range [Median, Maximum] are shifted right by 0.5 (please refer to Appendix A for the formula implementations).
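The divide-by-zero refinement can be sketched as follows. This is a minimal sketch that mirrors the guard in the Appendix A listing, which replaces the maximum with minimum + 1 when both extrema are equal; the function names safeRange and scaleLinearSafe are hypothetical names introduced here for illustration only.

```cpp
#include <cassert>
#include <cmath>

// Returns a usable denominator for the scaling formulas. When both ends of
// the range coincide, a width of 1 is substituted, which has the same effect
// as setting the maximum to minimum + 1.
double safeRange(double lo, double hi) {
    assert(hi >= lo);
    return (hi == lo) ? 1.0 : (hi - lo);
}

// Linear scaling into [0.05, 0.95] that cannot divide by zero.
double scaleLinearSafe(double x, double lo, double hi) {
    return 0.9 * (x - lo) / safeRange(lo, hi) + 0.05;
}
```

A column in which every value is identical is then scaled to 0.05 instead of producing an undefined result. It is an assumption of this sketch that the same guard could also be applied to the two half-ranges of the median formula, which become zero when the median equals the minimum or the maximum.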


Chapter 4

EXPERIMENTAL METHODOLOGY

The effectiveness of non-linear scaling is assessed by applying different problem sets to a particular neural network under the normal (linear) scaling technique, then applying the same problem sets with the non-linear scaling technique, and measuring how well the neural network learns the data and generalizes.

In this section, a description of each dataset is provided.

4.1. Datasets

Each problem has one training dataset and one testing dataset, which are applied to the designed neural network. The tables show only some of the training pairs, as in some cases the full training dataset is too large to be included in this document.

The backpropagation algorithm discussed above is applied to three problem datasets.

4.1.1. Minimum weight steel beam problem

In this dataset, the artificial neural network is used for learning in the domain of structural engineering. Each instance is an acceptable design that satisfies the requirements of a design code, the AISC LRFD specification of steel structures for designing concrete structures. Specifically, each instance is a minimum weight steel beam chosen from the wide-flange (W) shape database for a given loading condition [3]. The designed artificial neural network is used to learn to select the lightest W shape among all the available shapes. Each instance of the training dataset consists of four input patterns:

The member length (L)

The unbraced length (Lb)

The maximum bending moment in the member (Mmax)

The maximum shear force (Vmax)

Each instance of the training dataset also includes the following corresponding output pattern:

The plastic modulus of the corresponding least weight member (Zx)

Backpropagation uses the following parameter values:

Learning rate = 0.3

Criteria = 0.001

Table 3: Training datasets for steel beam design problem

           Inputs                          Output
Instance   L      Lb     M max   V max    Zx
1          0.40   0.40   0.190   0.190    0.6313
2          0.20   0.20   0.120   0.240    0.3630
3          0.35   0.35   0.035   0.035    0.1000
4          0.15   0.15   0.045   0.120    0.1400
5          0.15   0.15   0.035   0.095    0.1000


Table 4: Testing datasets for steel beam design problem

           Inputs                          Output
Instance   L      Lb     M max   V max    Zx
1          0.20   0.20   0.030   0.060    0.1000
2          0.30   0.30   0.095   0.127    0.3900
3          0.15   0.15   0.010   0.027    0.0415
4          0.40   0.40   0.120   0.120    0.4830

4.1.2. Two – Dimensional projectile motion datasets

This dataset describes the two-dimensional projectile motion of a ball fired from a gun with particular specifications. The data were gathered by keeping the gun on a stationary platform and firing at a stationary target. The gun can swivel anywhere from 0 to 90 degrees on the Z axis; the angle formed is the elevation angle theta. The gun can also swivel 360 degrees about the origin of the X-Y plane; the angle formed between the X-axis and the direction in which the gun is pointing is called the azimuthal angle phi. Wind is also taken into consideration: it blows in the X-Y plane, which gives the wind its own azimuthal angle alpha. Finally, the wind, if it is blowing, imparts some acceleration to the projectile, and we refer to this acceleration as "a". Hence, each instance of the training dataset consists of five input patterns:

The initial velocity of the projectile (Vo)

The elevation angle of the gun (Θ)

The azimuthal angle of the gun (Φ)

The horizontal acceleration of the projectile due to wind (a)

The azimuthal angle of the wind acceleration vector (α)

Each instance of the training dataset consists of two output patterns:

The target co-ordinates (Xt, Yt, 0)

The projectile impact co-ordinates (Xi, Yi, 0)

Table 5: Example training datasets for 2-D projectile data motion

           Inputs                                          Outputs
Instance   Vo         Θ          Φ      a     α        Target    Impact
1          -185.680   62.3995    33.0   7.0   3.203    57.8742   136.039
2          46.4323    -21.428    40.0   0.0   0.8099   9.1267    335.227
3          -197.269   80.5293    49.0   0.0   3.791    30.211    157.793
4          -45.512    -66.324    31.0   0.0   1.0952   62.4432   235.541
5          130.623    -164.342   49.0   3.0   0.0      68.9361   270.0
6          61.9628    42.6237    31.0   2.0   0.6027   22.502    34.523
7          32.177     -3.891     35.0   0.0   5.7311   7.5142    353.103


Table 6: Example testing datasets for 2-D projectile data motion

           Inputs                                          Outputs
Instance   Vo         Θ          Φ      a     α        Target    Impact
1          -136.583   10.491     39.0   7.0   1.928    26.795    194.710
2          34.339     210.004    49.0   1.0   4.509    34.542    80.5470
3          82.987     -124.332   32.0   9.0   4.930    33.781    316.559
4          -48.627    40.255     35.0   9.0   0.5604   37.4732   182.333
5          153.010    174.322    46.0   4.0   1.3616   73.017    7.921
6          -165.138   119.676    37.0   4.0   2.754    53.303    136.59

Backpropagation uses the following parameter values:

Learning rate = 0.3

Criteria = 1

4.1.3. Wine recognition data

The wine recognition datasets are the result of a chemical analysis of wines grown in the same region in Italy, but derived from three cultivars. This chemical analysis determined the quantities of 13 constituents found in each of the three types of wines. Each wine is classified into one of three categories according to the class it belongs to. Each instance of the training dataset consists of 13 input patterns:

Alcohol

Malic Acid

Ash

Alkalinity of ash

Magnesium

Total phenols

Flavonoids

Non-Flavonoid phenols

Proanthocyanins

Color Intensity

Hue

OD280/OD315 of diluted wines

Proline

Each instance of the training dataset consists of one output pattern:

Class distribution (1, 2, or 3)


Table 7: Example training datasets for wine recognition

N   Input (13 constituents, in the order listed above)                                        O
1   14.2   1.71   2.43   15.6   127   2.8   3.06   .28   2.2   5.6   1.04   3.9   1065    1
2   13.2   1.78   2.14   11.2   100   2.6   2.76   .26   1.2   4.3   1.05   3.4   1050    1
3   12.3   .94    1.36   10.6   88    1.9   .57    .28   .42   1.9   1.05   1.8   520     2
4   12.3   1.1    2.28   16     101   2.0   1.09   .63   .41   3.2   1.25   1.6   680     2
5   12.8   1.35   2.32   18     122   1.5   1.25   .21   .94   4.1   .76    1.2   630     3
6   12.8   2.31   2.4    24     98    1.1   1.09   .27   .83   5.7   .66    1.3   560     3

Table 8: Example testing datasets for wine recognition

N   Input (13 constituents, in the order listed above)                                        O
1   14     1.95   2.5    16.8   113   3.8   3.49   .24   2.1   7.8   .86    3.45   1480   1
2   12     1.81   2.2    18.8   86    2.2   2.53   .26   1.7   3.9   1.1    3.14   714    2
3   13     3.9    2.3    21.5   113   1.4   1.39   .34   1.1   9.4   .57    1.33   550    3

Backpropagation uses the following parameter values:

Learning rate = 0.3

Criteria = 0.1


4.2. Measuring performance

The main objective of this study is to improve the scaling process of the backpropagation algorithm, so that error correction proceeds as quickly as possible and the desired output is reached in the fewest iterations. To compare the linear and non-linear scaling processes, we study the main factors of the algorithm: the speed of learning and how well the designed neural network generalizes.

We set the learning rate and the other parameters before applying the scaling, keep these values fixed, and then observe the error calculations under the linear scaling process and under the non-linear scaling process for each dataset.

Another factor that needs to be observed is the criteria value for each dataset being tested. Setting the criteria value too low can cause training to run for an excessive or unbounded number of iterations, so that the comparison between linear and non-linear scaling would not conclude or would not be meaningful. On the other hand, a very large criteria value would allow training to conclude before learning is complete. Therefore, the best criteria value for a particular dataset has to be found by trial and error. For example, in the projectile datasets the training values are dispersed over a large range (one value is -150 and another is 220), so with a criteria value that is too low the neural network would have to undergo too many iterations. For datasets whose input values are not widely distributed, however, the criteria value can be as low as 0.001. Changing the criteria value for each test and each dataset makes a significant difference in the learning process.


Another important part of the comparison is to inspect, by eye, the difference between the calculated outputs and the corresponding desired outputs. After the specified maximum number of iterations, the backpropagation algorithm terminates even if the results are not within the specified criteria. For such runs, the fine details of the error in the generated outputs must be examined.

After the learning process finishes calculating the weights, they are applied to the test datasets. During this test, we observe how well the network learned under each scaling method. During test execution the algorithm does not iterate or adjust its weights; it simply uses the calculated weights to determine the output.
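The percentage of cases that meet the criteria, which is reported for each dataset in Chapter 5, can be computed along the following lines. This is a minimal sketch that assumes the desired and calculated outputs are already in the same units as the criteria; the function name percentWithinCriteria is a hypothetical name introduced here for illustration only.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Percentage of cases whose absolute error is within the criteria.
double percentWithinCriteria(const std::vector<double>& desired,
                             const std::vector<double>& actual,
                             double criteria) {
    assert(desired.size() == actual.size());
    if (desired.empty()) return 0.0;
    std::size_t good = 0;
    for (std::size_t i = 0; i < desired.size(); ++i) {
        if (std::fabs(desired[i] - actual[i]) <= criteria) ++good;
    }
    return 100.0 * static_cast<double>(good) /
           static_cast<double>(desired.size());
}
```

For instance, if three of four made-up test outputs lie within the criteria, the function returns 75.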


Chapter 5

RESULTS

After the parameters required by the backpropagation algorithm for non-linear scaling have been set properly, the datasets are applied to the designed artificial neural network. Both datasets, i.e. the training dataset and the testing dataset, are given as input to the algorithm. Complete outputs for training runs are given in Appendix C.

5.1. Result for minimum weight problem datasets

With linear scaling, the backpropagation network did not converge within the declared maximum of 900000 iterations. With non-linear scaling, on the other hand, the network converged before reaching that maximum. As discussed earlier, the number of iterations depends on the criteria value; both executions used the same criteria value. With non-linear scaling the neural network learned the dataset within 359299 iterations, whereas with linear scaling the network did not converge. The methodology was then tested using the test datasets, for which the network applies the weights that were adjusted during the learning phase. The percentage of test cases that meet the criteria for non-linear scaling is 100, so all the outputs generated by the neural network were within the desired criteria.


Looking at the results of backpropagation for minimum weight problem dataset,

we can conclude that, for the non-linear scaling, the network learned faster than when the

datasets were scaled linearly.

Table 9: Result for minimum weight problem

Operation                                      Linear Scaling             Non-Linear Scaling
Total number of iterations                     900000                     900000
Converged                                      Network did not converge   Network converged in 359299 iterations
Percentage Generalization for training cases   80%                        100%
Percentage Generalization for test cases       83.33%                     100%

5.2. Result for two-dimensional projectile motion dataset

For this problem, in both cases, i.e. linear scaling and non-linear scaling, the network does not converge within 90000000 iterations. Therefore, it is hard to tell which one did better. However, the outputs generated with the non-linear scaling method show a small improvement in the difference between the desired output and the calculated output. For example, in the output (refer to Appendix C for the result), the desired value is 59.3108; linear scaling produces 64.2965, whereas non-linear scaling produces 62.1667. The non-linear result is therefore closer to the desired value (an absolute error of about 2.86, versus about 4.99 for linear scaling).


Table 10: Result for 2-D projectile motion problem

Operation                                      Linear Scaling             Non-Linear Scaling
Total number of iterations                     90000000                   90000000
Converged                                      Network did not converge   Network did not converge
Percentage Generalization for training cases   4.84%                      12.168%
Percentage Generalization for test cases       22.22%                     15.556%

5.3. Result for wine recognition data

We apply the wine recognition problem to the backpropagation algorithm with both the linear and the non-linear scaling methodology. This particular problem suggests that non-linear scaling is better than linear scaling. For the linear scaling method, the percentage of training cases that meet the criteria is 99.4382; for the non-linear scaling method, it is 100.00. The number of iterations for the linear scaling methodology is lower than for non-linear scaling. This implies that the network learned faster with linear scaling, but did not learn the training data as completely. Therefore, the output generated by the algorithm with the non-linear method was more accurate than the output generated with the linear method.


Table 11: Result for wine recognition data

Operation                                      Linear Scaling                           Non-Linear Scaling
Total number of iterations                     9000000                                  9000000
Converged                                      Network converged in 275049 iterations   Network converged in 306377 iterations
Percentage Generalization for training cases   99.4382%                                 100%
Percentage Generalization for test cases       100%                                     100%


Chapter 6

CONCLUSION

Artificial Neural Networks learn by example, and with good training datasets they learn faster. The linear scaling process currently used in backpropagation performs poorly when the training datasets contain large differences between values and clusters of values. In real-world problems, the data that the neural network uses is often scattered over a large range. If such data is scaled linearly, the learning effort of the neural network may increase.

A new method of non-linear scaling, called median scaling, tries to lessen the clustering issues in the datasets. The algorithm takes all the input and output datasets at once and determines the number of inputs and the number of training cases. Then, for each column that is scaled non-linearly, the algorithm finds three values (minimum, maximum, and median) and scales the column using them.

After carrying out the experiment with non-linear (median) scaling on various datasets, we can say that non-linear scaling improves the error calculation to some extent. Therefore, non-linear scaling is one way to decrease the training effort. Linear scaling can still work for data whose values are not widely dispersed but lie close together.


Chapter 7

FUTURE WORK

There is a lot of scope for improving the backpropagation algorithm in terms of scaling the datasets. For instance, this experiment applies non-linear scaling only to the output datasets; we could also apply non-linear scaling to the input datasets and study the error calculations.

Another approach to non-linear scaling would be to perform clustering analysis on the training datasets. The values in each dataset can be clustered and classified into groups. The range can then be split into multiple sections at points chosen from the clusters, and scaling applied to each section. So, instead of finding the median and then scaling the datasets, we would find multiple points according to the clustering analysis and then scale each section.
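One possible form of such multi-point scaling is sketched below. This is only an illustration of the idea, not a method that was implemented or tested in this project: it assumes the breakpoints have already been chosen (for example by a clustering step, which is not shown), gives every section an equal share of the output range, and omits the 0.05 margins for brevity. The function name scalePiecewise is a hypothetical name introduced here.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Piecewise-linear scaling into [0, 1] with equal-width output sections.
// `breaks` holds strictly increasing breakpoints from the minimum to the
// maximum; with three entries {min, median, max} there are two sections.
double scalePiecewise(double x, const std::vector<double>& breaks) {
    assert(breaks.size() >= 2);
    const std::size_t sections = breaks.size() - 1;
    if (x <= breaks.front()) return 0.0;
    if (x >= breaks.back()) return 1.0;
    for (std::size_t s = 0; s < sections; ++s) {
        if (x < breaks[s + 1]) {
            assert(breaks[s + 1] > breaks[s]);
            const double frac =
                (x - breaks[s]) / (breaks[s + 1] - breaks[s]);
            return (static_cast<double>(s) + frac) /
                   static_cast<double>(sections);
        }
    }
    return 1.0;  // not reached for valid input
}
```

With the made-up breakpoints 0, 10, 100, and 1000, the value 55 lies halfway through the middle section and is scaled to 0.5, even though it is close to the low end of the overall range.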


APPENDIX A

Source Code

/**************************************************
Neural Network with Backpropagation using Non-Linear Scaling methodology
--------------------------------------------------
Modified by Alok Nakate
California State University Sacramento
Date: October 2010
Change: Non-Linear Scaling
--------------------------------------------------
Adapted from D. Whitley, Colorado State University
Modifications by S. Gordon
--------------------------------------------------
Version 3.0 - October 2009 - includes momentum
--------------------------------------------------
compile with g++ nn.c
****************************************************/

#include <iostream>

#include <fstream>

#include <cmath>

#include <cstdlib> /* srand, rand */

using namespace std;

#define NumOfCols 3 /* number of layers +1 i.e, include input layer */
#define NumOfRows 14 /* max number of rows net +1, last is bias node */
#define NumINs 13 /* number of inputs, not including bias node */
#define NumOUTs 1 /* number of outputs, not including bias node */
#define LearningRate 0.3 /* most books suggest 0.3 */
#define Criteria 0.01 /* all outputs must be within this to terminate */
#define TestCriteria 0.02 /* all outputs must be within this to terminate */
#define MaxIterate 1000000 /* maximum number of iterations */
#define ReportIntv 1001 /* print report every time this many cases done*/
#define Momentum 0.8 /* momentum constant */
#define TrainCases 178 /* number of training cases */
#define TestCases 15 /* number of test cases */

// network topology by column ------------------------------------
#define NumNodes1 14 /* col 1 - must equal NumINs+1 */
#define NumNodes2 14 /* col 2 - hidden layer 1, etc. */
#define NumNodes3 1 /* output layer must equal NumOUTs */
#define NumNodes4 0 /* */
#define NumNodes5 0 /* note: layers include bias node */
#define NumNodes6 0

#define TrainFile "winetrain.dat" /* file containing training data */

#define TestFile "winetest.dat" /* file containing testing data */

int NumRowsPer[NumOfRows]; /* number of rows used in each column incl. bias */

/* note - bias is not included on output layer */

/* note - leftmost value must equal NumINs+1 */

/* note - rightmost value must equal NumOUTs */

double TrainArray[TrainCases][NumINs + NumOUTs];

// an array for finding the median for a particular column

// (from the given inputs and outputs)

double MedianArray[TrainCases];

double TestArray[TestCases][NumINs + NumOUTs];

int CritrIt = 2 * TrainCases;

ifstream train_stream;

/* source of training data */

ifstream test_stream;

/* source of test data

*/

ofstream result_stream("result.txt");

void CalculateInputsAndOutputs ();

void TestInputsAndOutputs();

void TestForward();

double ScaleOutput(double X, int which);

// Alok

double ScaleOutputGeneralise(double X, int which);

double ScaleDown(double X, int which);

// Alok

double ScaleDownGeneralise(double X, int which);


// Alok

double ScaleCriteria(double ActualOutput, int which);

double ScaleTestCriteria(double ActualOutput, int which);

void GenReport(int Iteration);

void TrainForward();

void FinReport(int Iteration);

void DumpWeights();

// Alok

double * sort(double arr[], int numR);

void quicksort(int arr[], int low, int high);

double FindMedian(double arr[], int numR);

double getMedian(double arr[], int numR);

//

struct CellRecord

{

double Output;

double Error;

double Weights[NumOfRows];

double PrevDelta[NumOfRows];

};

struct CellRecord CellArray[NumOfRows][NumOfCols];

double Inputs[NumINs];

double DesiredOutputs[NumOUTs];

double extrema[NumINs+NumOUTs][3]; // [0] is low, [1] is hi, [2] is median

long Iteration;

/************************************************************

Get data from Training and Testing Files, put into arrays

The scaling process also occurs in this step.

*************************************************************/

void GetData()

{

for (int i=0; i < (NumINs+NumOUTs); i++)

{ extrema[i][0]=99999.0; extrema[i][1]=-99999.0; }


// read in training data

train_stream.open(TrainFile);

for (int i=0; i < TrainCases; i++)

{ for (int j=0; j < (NumINs+NumOUTs); j++)

{ train_stream >> TrainArray[i][j];

if (TrainArray[i][j] < extrema[j][0]) extrema[j][0] = TrainArray[i][j];

if (TrainArray[i][j] > extrema[j][1]) extrema[j][1] = TrainArray[i][j];

}}

train_stream.close();

// read in test data

test_stream.open(TestFile);

for (int i=0; i < TestCases; i++)

{ for (int j=0; j < (NumINs+NumOUTs); j++)

{ test_stream >> TestArray[i][j];

if (TestArray[i][j] < extrema[j][0]) extrema[j][0] = TestArray[i][j];

if (TestArray[i][j] > extrema[j][1]) extrema[j][1] = TestArray[i][j];

}}

// guard against both extrema being equal

for (int i=0; i < (NumINs+NumOUTs); i++)

if (extrema[i][0] == extrema[i][1]) extrema[i][1]=extrema[i][0]+1;

test_stream.close();

// scale training and test data to range 0..1

/**********************************************************************

Apply Scaling to Training cases

**********************************************************************/

for (int i=0; i < TrainCases; i++)

{

for (int j=0; j < NumINs; j++)

TrainArray[i][j] = ScaleDown(TrainArray[i][j],j);

}

// Alok

// Find the Median of a particular column

// Currently it finds the medians of all the output columns.


for (int k=NumINs; k < NumINs+NumOUTs; k++)

{

for (int i=0; i < TrainCases; i++)

{

MedianArray[i] = TrainArray[i][k];

}

extrema [k][2] = FindMedian(MedianArray, TrainCases);

}

// Apply the NON-LINEAR Scaling using the median

for (int i=0; i < TrainCases; i++)

{

for (int k=NumINs; k < NumINs+NumOUTs; k++)

TrainArray[i][k] = ScaleDownGeneralise(TrainArray[i][k],k);

}

/**********************************************************************

Apply Scaling to Test cases

**********************************************************************/

for (int i=0; i < TestCases; i++)

{

for (int j=0; j < NumINs; j++)

TestArray[i][j] = ScaleDown(TestArray[i][j],j);

for (int k=NumINs; k < NumINs+NumOUTs; k++)

TestArray[i][k] = ScaleDownGeneralise(TestArray[i][k],k);

}

}

double FindMedian(double arr[], int numR)

{

double valMedian;

arr = sort (arr, numR);

valMedian = getMedian(arr, numR);

return valMedian;

}

/***************************************************************

Function to find the median of a particular sorted column

***************************************************************/

double getMedian(double arr[], int numR)

{

int middle = numR/2;

double average;

if (numR%2==0)

average = (arr[middle-1]+arr[middle])/2;

else

average = (arr[middle]);

return average;

}

/***************************************************************

Function to sort a particular column

***************************************************************/

double * sort(double arr[], int numR)

{

double temp;

// bubble sort over the array that was passed in (arr, numR)
for (int i = (numR - 1); i >= 0; i--)

{

for (int j = 1; j <= i; j++)

{

if (arr[j-1] > arr[j])

{

temp = arr[j-1];

arr[j-1] = arr[j];

arr[j] = temp;

}

}

}

return arr;

}


/**************************************************************

Assign the next training pair

***************************************************************/

void CalculateInputsAndOutputs()

{

static int S=0;

for (int i=0; i < NumINs; i++) Inputs[i]=TrainArray[S][i];

for (int i=0; i < NumOUTs; i++) DesiredOutputs[i]=TrainArray[S][i+NumINs];

S++;

if (S==TrainCases) S=0;

}

/**************************************************************

Assign the next testing pair

***************************************************************/

void TestInputsAndOutputs()

{

static int S=0;

for (int i=0; i < NumINs; i++) Inputs[i]=TestArray[S][i];

for (int i=0; i < NumOUTs; i++) DesiredOutputs[i]=TestArray[S][i+NumINs];

S++;

if (S==TestCases) S=0;

}

/************************* MAIN *************************************/

int main()

{

int I, J, K, existsError, ConvergedIterations=0;

long seedval;

double Sum, newDelta, scaledCriteria;

Iteration=0;

NumRowsPer[0] = NumNodes1; NumRowsPer[3] = NumNodes4;

NumRowsPer[1] = NumNodes2; NumRowsPer[4] = NumNodes5;

NumRowsPer[2] = NumNodes3; NumRowsPer[5] = NumNodes6;


/* initialize the weights to small random values. */

/* initialize previous changes to 0 (momentum). */

seedval = 555;

srand(seedval);

for (I=1; I < NumOfCols; I++)

for (J=0; J < NumRowsPer[I]; J++)

for (K=0; K < NumRowsPer[I-1]; K++)

{ CellArray[J][I].Weights[K] = 2.0 * ((double)((int)rand() % 100000 / 100000.0)) - 1.0;

CellArray[J][I].PrevDelta[K] = 0;

}

GetData(); // read training and test data into arrays

cout << endl << "Iteration     Inputs     ";

result_stream << "Iteration     Inputs     ";

cout << "Desired Outputs     Actual Outputs" << endl;

result_stream << "Desired Outputs     Actual Outputs" << endl;

// -------------------------------

// beginning of main training loop

do

{ /* retrieve a training pair */

CalculateInputsAndOutputs();

for (J=0; J < NumRowsPer[0]-1; J++) CellArray[J][0].Output = Inputs[J];

/* set up bias nodes */

for (I=0; I < NumOfCols-1; I++)

{ CellArray[NumRowsPer[I]-1][I].Output = 1.0;

CellArray[NumRowsPer[I]-1][I].Error = 0.0;

}

/**************************

* FORWARD PASS

*

**************************/

/* hidden layers */

for (I=1; I < NumOfCols-1; I++)

for (J=0; J < NumRowsPer[I]-1; J++)


{ Sum = 0.0;

for (K=0; K < NumRowsPer[I-1]; K++)

Sum += CellArray[J][I].Weights[K] * CellArray[K][I-1].Output;

CellArray[J][I].Output = 1.0 / (1.0+exp(-Sum));

CellArray[J][I].Error = 0.0;

}

/* output layer */

for (J=0; J < NumOUTs; J++)

{ Sum = 0.0;

for (K=0; K < NumRowsPer[NumOfCols-2]; K++)

Sum += CellArray[J][NumOfCols-1].Weights[K]

* CellArray[K][NumOfCols-2].Output;

CellArray[J][NumOfCols-1].Output = 1.0 / (1.0+exp(-Sum));

CellArray[J][NumOfCols-1].Error = 0.0;

}

/**************************

* BACKWARD PASS

*

**************************/

/* calculate error at each output node */

for (J=0; J < NumOUTs; J++)

CellArray[J][NumOfCols-1].Error =

DesiredOutputs[J]-CellArray[J][NumOfCols-1].Output;

/* check to see how many consecutive oks seen so far */

existsError = 0;

for (J=0; J < NumOUTs; J++)

{

// Alok

// Apply non linear scaling to the criteria too as we applied it to

// the outputs initially

scaledCriteria = ScaleCriteria(CellArray[J][NumOfCols-1].Output, NumINs+J);

if (fabs(CellArray[J][NumOfCols-1].Error) > scaledCriteria)

{

existsError = 1;

}

}


if (existsError == 0) ConvergedIterations++;

else ConvergedIterations = 0;

/* apply derivative of squashing function to output errors */

for (J=0; J < NumOUTs; J++)

CellArray[J][NumOfCols-1].Error =

CellArray[J][NumOfCols-1].Error

* CellArray[J][NumOfCols-1].Output

* (1.0 - CellArray[J][NumOfCols-1].Output);

/* backpropagate error */

/* output layer */

for (J=0; J < NumRowsPer[NumOfCols-2]; J++)

for (K=0; K < NumRowsPer[NumOfCols-1]; K++)

CellArray[J][NumOfCols-2].Error = CellArray[J][NumOfCols-2].Error

+ CellArray[K][NumOfCols-1].Weights[J]

* CellArray[K][NumOfCols-1].Error

* (CellArray[J][NumOfCols-2].Output)

* (1.0-CellArray[J][NumOfCols-2].Output);

/* hidden layers */

for (I=NumOfCols-3; I>=0; I--)

for (J=0; J < NumRowsPer[I]; J++)

for (K=0; K < NumRowsPer[I+1]-1; K++)

CellArray[J][I].Error =

CellArray[J][I].Error

+ CellArray[K][I+1].Weights[J] * CellArray[K][I+1].Error

* (CellArray[J][I].Output) * (1.0-CellArray[J][I].Output);

/* adjust weights */

for (I=1; I < NumOfCols; I++)

for (J=0; J < NumRowsPer[I]; J++)

for (K=0; K < NumRowsPer[I-1]; K++)

{ newDelta = (Momentum * CellArray[J][I].PrevDelta[K])

+ (LearningRate * CellArray[K][I-1].Output * CellArray[J][I].Error);

CellArray[J][I].Weights[K] = CellArray[J][I].Weights[K] + newDelta;

CellArray[J][I].PrevDelta[K] = newDelta;

}


GenReport(Iteration);

Iteration++;

} while (!((ConvergedIterations >= CritrIt) || (Iteration >= MaxIterate)));

// end of main training loop

// -------------------------------

FinReport(ConvergedIterations);

TrainForward();

TestForward();

}

double ScaleCriteria(double ActualOutput, int which)

{

double range, allPos;

if (ActualOutput < 0.5)

{

range = (extrema[which][2]-extrema[which][0]);

allPos = ((.9*(Criteria/range)))/2;

}

else

if (ActualOutput >= 0.5)

{

range = (extrema[which][1]-extrema[which][2]);

allPos = (((.9*(Criteria/range)))/2)+0.5;

}

return (allPos);

}

double ScaleTestCriteria(double ActualOutput, int which)

{

double range, allPos;

if (ActualOutput < 0.5)

{


range = (extrema[which][2]-extrema[which][0]);

allPos = ((.9*(TestCriteria/range)))/2;

}

else

if (ActualOutput >= 0.5)

{

range = (extrema[which][1]-extrema[which][2]);

allPos = (((.9*(TestCriteria/range)))/2)+0.5;

}

return (allPos);

}

/*******************************************

Scale a value linearly to 0.05..0.95

*******************************************/

double ScaleDown(double X, int which)

{

double allPos;

allPos = .9*(X-extrema[which][0])/(extrema[which][1]-extrema[which][0])+.05;

return (allPos);

}

/************************************************

This function scales a value non-linearly about the median.

*************************************************/

double ScaleDownGeneralise(double X, int which)

{

double range, allPos;

if (X < extrema[which][2])

{

range = (extrema[which][2]-extrema[which][0]);

allPos = ((.9*((X-extrema[which][0])/range))+.05)/2;

}

else

if (X >= extrema[which][2])


{

range = (extrema[which][1]-extrema[which][2]);

allPos = (((.9*((X-extrema[which][2])/range))+.05)/2)+0.5;

}

return (allPos);

}

/*******************************************

Scale actual output to original range

*******************************************/

double ScaleOutput(double X, int which)

{

double range = extrema[which][1] - extrema[which][0];

double scaleUp = ((X-.05)/.9) * range;

return (extrema[which][0] + scaleUp);

}

/*************************************************
 Scale back to the original value
 (inverse of ScaleDownGeneralise)
**************************************************/
double ScaleOutputGeneralise(double X, int which)
{
    double range, scaleUp, res;

    if (X < 0.5)
    {
        // lower half: undo the halving, then the 0.05 offset and 0.9 factor
        range = extrema[which][2] - extrema[which][0];
        scaleUp = ((((X*2)-.05)/.9) * range);
        res = (extrema[which][0] + scaleUp);
    }
    else
    {
        // upper half: remove the 0.5 offset first, then invert as above
        range = extrema[which][1] - extrema[which][2];
        scaleUp = (((((X-0.5)*2)-.05)/.9) * range);
        res = (extrema[which][2] + scaleUp);
    }
    return (res);
}
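
The two piecewise scaling routines above are intended to be inverses of each other. The sketch below restates the same arithmetic in a self-contained form so the round trip can be checked in isolation. The `Extrema` struct, the function names `scaleDown`, `scaleUp` and `roundTripError`, and the sample values are assumptions made for illustration; they are not part of the listing, and the pivot is only presumed to be the median.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for one row of the listing's extrema table:
// lo = minimum, hi = maximum, mid = pivot (presumed to be the median).
struct Extrema { double lo, hi, mid; };

// Forward mapping, same arithmetic as ScaleDownGeneralise.
double scaleDown(double x, const Extrema& e) {
    if (x < e.mid)
        return (0.9 * (x - e.lo) / (e.mid - e.lo) + 0.05) / 2.0;
    return (0.9 * (x - e.mid) / (e.hi - e.mid) + 0.05) / 2.0 + 0.5;
}

// Inverse mapping, same arithmetic as ScaleOutputGeneralise.
double scaleUp(double y, const Extrema& e) {
    if (y < 0.5)
        return e.lo + ((y * 2.0 - 0.05) / 0.9) * (e.mid - e.lo);
    return e.mid + (((y - 0.5) * 2.0 - 0.05) / 0.9) * (e.hi - e.mid);
}

// Absolute error after scaling a value down and back up again.
double roundTripError(double x, const Extrema& e) {
    assert(e.lo < e.mid && e.mid < e.hi);  // both half-ranges must be non-empty
    return std::fabs(scaleUp(scaleDown(x, e), e) - x);
}
```

With the illustrative row `Extrema{0.0, 100.0, 10.0}`, the value 5 (halfway between minimum and pivot) scales to 0.25 and the value 55 (halfway between pivot and maximum) scales to 0.75, so each side of the pivot receives half of the scaled range regardless of how wide it is in original units.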

/*******************************************
 Run Test Data forward pass only
*******************************************/
void TestForward()
{
    int GoodCount=0;
    double Sum, TotalError=0, scaledTestCriteria;

    cout << "Running Test Cases" << endl;
    result_stream << "Running Test Cases" << endl;
    for (int H=0; H < TestCases; H++)
    {
        TestInputsAndOutputs();
        for (int J=0; J < NumRowsPer[0]-1; J++) CellArray[J][0].Output = Inputs[J];

        /* hidden layers */
        for (int I=1; I < NumOfCols-1; I++)
            for (int J=0; J < NumRowsPer[I]-1; J++)
            {
                Sum = 0.0;
                for (int K=0; K < NumRowsPer[I-1]; K++)
                    Sum += CellArray[J][I].Weights[K] * CellArray[K][I-1].Output;
                CellArray[J][I].Output = 1.0 / (1.0+exp(-Sum));
                CellArray[J][I].Error = 0.0;
            }

        /* output layer */
        for (int J=0; J < NumOUTs; J++)
        {
            Sum = 0.0;
            for (int K=0; K < NumRowsPer[NumOfCols-2]; K++)
                Sum += CellArray[J][NumOfCols-1].Weights[K]
                       * CellArray[K][NumOfCols-2].Output;
            CellArray[J][NumOfCols-1].Output = 1.0 / (1.0+exp(-Sum));
            CellArray[J][NumOfCols-1].Error =
                DesiredOutputs[J]-CellArray[J][NumOfCols-1].Output;
            scaledTestCriteria = ScaleTestCriteria(CellArray[J][NumOfCols-1].Output, NumINs+J);
            if (fabs(CellArray[J][NumOfCols-1].Error) <= scaledTestCriteria )
                GoodCount++;
            TotalError += CellArray[J][NumOfCols-1].Error *
                          CellArray[J][NumOfCols-1].Error;
        }
        GenReport(-1);
    }
    cout << endl;
    result_stream << endl;
    cout << "Sum Squared Error for Testing cases = " << TotalError << endl;
    result_stream << "Sum Squared Error for Testing cases = " << TotalError << endl;
    cout << "% of Testing Cases that meet criteria = " << ((double)GoodCount/(double)TestCases)*100;
    result_stream << "% of Testing Cases that meet criteria = " << ((double)GoodCount/(double)TestCases)*100;
    cout << endl;
    result_stream << endl;
    cout << endl;
    result_stream << endl;
}

/*****************************************************
 Run Training Data forward pass only, after training
******************************************************/
void TrainForward()
{
    int GoodCount=0;
    double Sum, TotalError=0, scaledCriteria;

    cout << endl << "Confirm Training Cases" << endl;
    result_stream << endl << "Confirm Training Cases" << endl;
    for (int H=0; H < TrainCases; H++)
    {
        CalculateInputsAndOutputs ();
        for (int J=0; J < NumRowsPer[0]-1; J++) CellArray[J][0].Output = Inputs[J];

        /* hidden layers */
        for (int I=1; I < NumOfCols-1; I++)
            for (int J=0; J < NumRowsPer[I]-1; J++)
            {
                Sum = 0.0;
                for (int K=0; K < NumRowsPer[I-1]; K++)
                    Sum += CellArray[J][I].Weights[K] * CellArray[K][I-1].Output;
                CellArray[J][I].Output = 1.0 / (1.0+exp(-Sum));
                CellArray[J][I].Error = 0.0;
            }

        /* output layer */
        for (int J=0; J < NumOUTs; J++)
        {
            Sum = 0.0;
            for (int K=0; K < NumRowsPer[NumOfCols-2]; K++)
                Sum += CellArray[J][NumOfCols-1].Weights[K]
                       * CellArray[K][NumOfCols-2].Output;
            CellArray[J][NumOfCols-1].Output = 1.0 / (1.0+exp(-Sum));
            CellArray[J][NumOfCols-1].Error =
                DesiredOutputs[J]-CellArray[J][NumOfCols-1].Output;
            scaledCriteria = ScaleCriteria(CellArray[J][NumOfCols-1].Output, NumINs+J);
            if (fabs(CellArray[J][NumOfCols-1].Error) <= scaledCriteria)
                GoodCount++;
            TotalError += CellArray[J][NumOfCols-1].Error *
                          CellArray[J][NumOfCols-1].Error;
        }
        GenReport(-1);
    }
    cout << endl;
    result_stream << endl;
    cout << "Sum Squared Error for Training cases = " << TotalError << endl;
    result_stream << "Sum Squared Error for Training cases = " << TotalError << endl;
    cout << "% of Training Cases that meet criteria = " <<
        ((double)GoodCount/(double)TrainCases)*100 << endl;
    result_stream << "% of Training Cases that meet criteria = " <<
        ((double)GoodCount/(double)TrainCases)*100 << endl;
    cout << endl;
    result_stream << endl;
}
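
Both forward-pass routines above repeat the same per-layer step: a weighted sum of the previous layer's outputs passed through the logistic function, followed by a sum of squared errors over the output cells. A minimal sketch of that step, detached from the global `CellArray`, might look as follows. The names `sigmoid`, `forwardLayer` and `sumSquaredError` and the use of `std::vector` are assumptions for illustration. The sketch also assumes that any bias term is supplied by the caller as an extra entry of `prev`, which is how the `NumRowsPer[I]-1` loop bounds in the listing appear to treat the last cell of each column.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Logistic activation, the same expression the listing applies to each cell.
double sigmoid(double sum) { return 1.0 / (1.0 + std::exp(-sum)); }

// One fully connected layer: weights[j][k] links previous cell k to cell j.
// Any bias is assumed to be supplied by the caller as an extra entry of prev.
std::vector<double> forwardLayer(const std::vector<std::vector<double>>& weights,
                                 const std::vector<double>& prev) {
    std::vector<double> out;
    out.reserve(weights.size());
    for (const std::vector<double>& row : weights) {
        assert(row.size() == prev.size());  // one weight per previous cell
        double sum = 0.0;
        for (std::size_t k = 0; k < row.size(); ++k)
            sum += row[k] * prev[k];
        out.push_back(sigmoid(sum));
    }
    return out;
}

// Sum of squared differences between desired and actual outputs.
double sumSquaredError(const std::vector<double>& desired,
                       const std::vector<double>& actual) {
    assert(desired.size() == actual.size());
    double total = 0.0;
    for (std::size_t j = 0; j < desired.size(); ++j) {
        const double err = desired[j] - actual[j];
        total += err * err;
    }
    return total;
}
```

Calling `forwardLayer` once per column, feeding each result into the next call, reproduces the structure of the hidden-layer and output-layer loops; with all weights zero every cell outputs 0.5, which is a quick sanity check on the activation.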

/*******************************************
 Final Report
*******************************************/
void FinReport(int CIterations)
{
    cout.setf(ios::fixed); cout.setf(ios::showpoint); cout.precision(4);
    result_stream.setf(ios::fixed); result_stream.setf(ios::showpoint); result_stream.precision(4);
    if (CIterations<CritrIt)
    {
        cout << "Network did not converge" << endl;
        result_stream << "Network did not converge" << endl;
    }
    else
    {
        cout << "Converged to within criteria" << endl;
        result_stream << "Converged to within criteria" << endl;
    }
    cout << "Total number of iterations = " << Iteration << endl;
    result_stream << "Total number of iterations = " << Iteration << endl;
}

/*******************************************
 Generation Report
 pass in a -1 if running test cases
*******************************************/
void GenReport(int Iteration)
{
    int J;

    cout.setf(ios::fixed); cout.setf(ios::showpoint); cout.precision(4);
    result_stream.setf(ios::fixed); result_stream.setf(ios::showpoint); result_stream.precision(4);
    if (Iteration == -1)
    {
        for (J=0; J < NumRowsPer[0]-1; J++)
        {
            cout << " " << ScaleOutput(Inputs[J],J);
            result_stream << " " << ScaleOutput(Inputs[J],J);
        }
        cout << " ";
        result_stream << " ";
        for (J=0; J < NumOUTs; J++)
        {
            cout << " " << ScaleOutputGeneralise(DesiredOutputs[J],NumINs+J);
            result_stream << " " << ScaleOutputGeneralise(DesiredOutputs[J],NumINs+J);
        }
        cout << " ";
        result_stream << " ";
        for (J=0; J < NumOUTs; J++)
        {
            cout << " " << ScaleOutputGeneralise(CellArray[J][NumOfCols-1].Output,NumINs+J);
            result_stream << " " << ScaleOutputGeneralise(CellArray[J][NumOfCols-1].Output,NumINs+J);
        }
        cout << endl;
        result_stream << endl;
    }
    else if ((Iteration % ReportIntv) == 0)
    {
        cout << " " << Iteration << " ";
        result_stream << " " << Iteration << " ";
        for (J=0; J < NumRowsPer[0]-1; J++)
        {
            cout << " " << ScaleOutput(Inputs[J],J);
            result_stream << " " << ScaleOutput(Inputs[J],J);
        }
        cout << " ";
        result_stream << " ";
        for (J=0; J < NumOUTs; J++)
        {