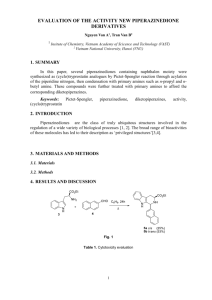

ABSTRACT

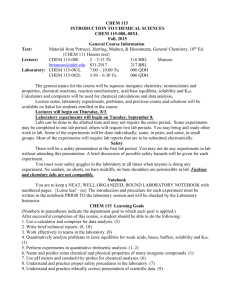
