Implementation of Bandwidth Broker for CBR Services

Implementation of Bandwidth Broker for Premium Services

Vanja Divkovic, Alen Bazant

Faculty of Electrical Engineering and Computing,

Unska 3, HR-10000 Zagreb, Croatia

vanja.divkovic@fer.hr, alen.bazant@fer.hr

Abstract

Policy-based network management (PBNM) has become an essential concept for networks relying on the Diffserv mechanism. PBNM leads to a standardized and consistent network configuration, independent of the architecture or the implemented QoS model. In this paper we present a bandwidth broker implementation for premium services in an IP network with differentiated services.

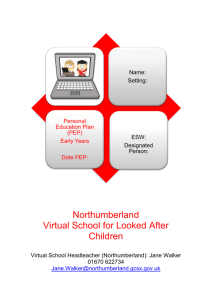


Discussion 1 Introduction

In the last twenty years, tremendous efforts have been made to develop QoS support for Internet communications. At the beginning of the 1990s, the IETF proposed the integrated services architecture, Intserv for short. Intserv guarantees service quality to each individual traffic flow. Furthermore, RSVP (Resource Reservation Protocol) was developed on the basis of the Intserv concept. It is intended for reserving network resources in all the routers along an information transfer path (end-to-end).

The fundamental reason why Intserv was not accepted in the Internet was its lack of scalability. That is why the IETF (Internet Engineering Task Force) also proposed the differentiated services architecture, Diffserv for short.

Diffserv is not oriented toward end-to-end QoS provisioning. Instead, it focuses on a particular administrative domain, called a Diffserv domain (DS domain).

Diffserv achieves scalability by dividing traffic into a small number of traffic classes, and each packet is treated according to the traffic class it belongs to. The complex functions of packet classification and conditioning are performed only in border routers, positioned on the edge of a domain. Diffserv lacks a mechanism for end-to-end resource reservation and, accordingly, has no built-in capability for end-to-end QoS support. Service level agreements (SLAs) are made between domains, and border routers, owned by service providers, are configured on the basis of an SLA. The Diffserv architecture gives a framework for conforming to an SLA, but it does not answer the following questions:

How to make an SLA with a neighboring domain?

How to determine if a particular request for network resources should be accepted or rejected?

How to configure border routers?

The first option is to perform all these operations manually. This is an extremely demanding job, acceptable only if performed rarely and in a simple network. The other possibility is to introduce a new network component, called a bandwidth broker (BB). For the first time it

Discussion 2 Bandwidth Broker

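A bandwidth broker is a network device whose original purpose is to establish SLAs with neighboring domains and to configure routers inside its own domain in conformance with the traffic conditioning agreement (TCA), which is part of an agreed SLA. The BB architecture is not standardized, which means that different bandwidth broker implementations are possible. A generic BB implementation contains the modules shown in Figure 1.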

Figure 1. Generic bandwidth broker architecture. (Figure labels: user/host, application server, network operator, user/application interface, NMS interface, PM interface, inter-domain and intra-domain interfaces, adjacent BBs, edge router(s), routing info, data storage, "simple" policy services.)

Of course, not every BB implementation needs to contain all these modules, because some of them can be implemented in other network elements.

Discussion 3 Policy Based Network Management

The introduction of new services, like virtual private networks (VPNs), load balancing, traffic engineering and security, as well as the installation of new network devices, are potential sources of complications in network configuration.

Furthermore, having network equipment from different vendors causes additional problems. On the other hand, many companies base their business on intranets and the Internet, and it is of key importance for them to align the network configuration with their business policy. To achieve those goals, network administrators must configure one or more network devices, taking extreme care that the new configuration does not conflict with the old one. Manual configuration of network devices is a demanding task, vulnerable to human error.

As a solution to the problem, the IETF has proposed a series of standards related to different aspects of network management by means of traffic policy. The entire concept is called Policy Based (Network) Management (PBNM or PBM). PBNM leads to a standardized and consistent network configuration, independent of the architecture or the implemented QoS model.

Policy is the key notion in the PBNM concept. It regulates access to network resources and services on the basis of administrative criteria, defining which users and applications will have priority in network resource usage. It can be specified in many different ways.

The IETF Policy Working Group has chosen an approach based on policy rules: a policy is a series of rules. Each rule consists of conditions and of the actions to be undertaken if those conditions are fulfilled.
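The rule structure can be illustrated with a minimal Java sketch. It assumes that a condition is a predicate over a request and that the first matching rule wins. The `NetworkRequest` fields, the rule names and this evaluation order are hypothetical and are not taken from the IETF policy model.

```java
import java.util.List;
import java.util.Optional;
import java.util.function.Predicate;

// Hypothetical request attributes that a policy condition may inspect.
record NetworkRequest(String user, String application, int bandwidthKbps) {}

// A policy rule: a named condition plus the action taken when it is fulfilled.
record PolicyRule(String name, Predicate<NetworkRequest> condition, String action) {}

// A policy is an ordered series of rules. This sketch applies the first rule
// whose condition matches (an assumed evaluation strategy).
record Policy(List<PolicyRule> rules) {
    Optional<String> decide(NetworkRequest request) {
        for (PolicyRule rule : rules) {
            if (rule.condition().test(request)) {
                return Optional.of(rule.action());
            }
        }
        return Optional.empty(); // no rule applies
    }
}
```

With a first rule that grants `PREMIUM` to video requests up to 2000 kbit/s and a catch-all `BEST_EFFORT` rule, a 1500 kbit/s video request receives `PREMIUM` and everything else falls through to best effort.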

According to the PBNM system model, a network is divided into policy domains (PDs). The components of a PD are:

- the policy manager (PM), also called the policy server (PS);
- a set of network components managed by the PM;
- a directory server that stores the policy rules.

The PM is the central entity in a PBNM configuration, and it communicates with the other entities.

Network devices send the PM their type, capabilities and current configuration. On the basis of this information and the policy stored in the directory server, the PM delivers a configuration policy to the devices. Each managed device then translates it into specific configuration commands. A PBNM system user can also enter a new policy through an appropriate interface.

Two key components must be standardized to make PBNM a living concept: a protocol for the communication between the PS and the managed network devices, and a description of the management information. For communication between the PS and the directory server, the well-known LDAP (Lightweight Directory Access Protocol) is most often used.

Discussion 3.1 COPS Protocol

The PS can communicate with managed network devices using many different protocols, like SNMP (Simple Network Management Protocol), Diameter, RADIUS or CORBA/IIOP. The IETF recommendation called "Common Open Policy Service" [8] defines the COPS protocol. It is supported by numerous companies whose experts also participated in the protocol's creation. The entities that communicate via this protocol are called the policy decision point (PDP) and the policy enforcement point (PEP). A PEP is a network device, like a switch or router, which is configured via PBNM to treat packets arriving at its ports in a defined manner. The PDP is the entity that decides on the PEP's configuration and delivers that decision to the PEP.
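The Java sketch below shows what every COPS message starts with. It encodes and decodes only the 8-byte common header defined in RFC 2748 [8]. The objects that follow the header (for example, the PEPID carried in an OPN) are not handled. The record and method names are illustrative, and the client-type value is left to the caller.

```java
import java.nio.ByteBuffer;

// The 8-byte COPS common header from RFC 2748:
// version (4 bits) | flags (4 bits) | op code (8 bits) | client-type (16 bits) | message length (32 bits).
record CopsHeader(int flags, int opCode, int clientType, int length) {
    static final int VERSION = 1;
    static final int SOLICITED = 0x1; // solicited-message flag
    // Op codes defined in RFC 2748.
    static final int REQ = 1, DEC = 2, RPT = 3, DRQ = 4, SSQ = 5,
                     OPN = 6, CAT = 7, CC = 8, KA = 9, SSC = 10;

    byte[] encode() {
        ByteBuffer buf = ByteBuffer.allocate(8); // big-endian (network order) by default
        buf.put((byte) ((VERSION << 4) | (flags & 0x0F)));
        buf.put((byte) opCode);
        buf.putShort((short) clientType);
        buf.putInt(length); // whole message in octets, header included
        return buf.array();
    }

    static CopsHeader decode(byte[] bytes) {
        ByteBuffer buf = ByteBuffer.wrap(bytes, 0, 8);
        int first = buf.get() & 0xFF;
        if ((first >>> 4) != VERSION) {
            throw new IllegalArgumentException("unsupported COPS version " + (first >>> 4));
        }
        // Java evaluates arguments left to right, so the fields are read in wire order.
        return new CopsHeader(first & 0x0F, buf.get() & 0xFF,
                buf.getShort() & 0xFFFF, buf.getInt());
    }
}
```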

COPS can operate in two different modes: outsourcing and provisioning. In the case of Diffserv QoS, the provisioning mode is used. It is standardized by the IETF as the COPS-PR protocol, an extension of COPS for transferring policy defined in a policy information base (PIB).

COPS is based on a client-server communication model, and communication is initiated by the client.

Freeware COPS implementations can be found on the Web, e.g., a Java version or Intel's COPS SDK (Software Development Kit) written in C. These make it easy to implement the COPS and COPS-PR protocols in networking equipment.

Discussion 3.2 Policy Information Base

During the realization of a particular service, it is very often necessary to configure more than one network device. The entire set of policies related to the service is called a policy information base (PIB). It defines the structure of the information transferred from a PDP to a PEP.

The language and grammar used for PIB descriptions are defined in RFC 3159 and are called the structure of policy provisioning information (SPPI). Each service has its own PIB. The Diffserv QoS PIB is of special importance for a Diffserv implementation, and it is still going through the standardization process.

Discussion 3.3 Bandwidth Broker in PBNM

A large network will be divided into administrative domains (PDs), each under the authority of its policy manager. Cooperation between PMs is necessary to establish a service at the network level. That is why each PM will contain a BB module. The BB is not a separate device: it is part of the PBNM system and uses that system's functionality. The BB merely takes care of making SLAs with neighboring domains and of translating an SLA into a policy of its own domain. Other processes are already implemented in a PBNM system and therefore do not have to be implemented in the BB. These include acceptance or refusal of requests, policy storage and transfer to network devices, user and application interfaces, tariffing and network monitoring.
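This division of labor can be sketched in Java as a BB whose only job is to translate an agreed SLA into a domain rule and hand it to the PM's existing storage. The following are all assumptions made for illustration:

- the `Sla`, `RouterRule` and `PolicyStore` types;
- the choice to mark the traffic with the EF code point at the ingress router.

```java
// Hypothetical SLA fields agreed with a neighboring domain.
record Sla(String id, String sender, String receiver, String ingressRouter, int bandwidthKbps) {}

// Hypothetical domain-level rule: on a given router, traffic matching
// sender/receiver is policed to the agreed rate and marked with a DSCP.
record RouterRule(String router, String sender, String receiver, int rateKbps, int dscp) {}

// Stand-in for the PM's existing policy storage and distribution machinery.
interface PolicyStore {
    void store(RouterRule rule);
}

// The BB only translates an SLA into domain policy; storing the rule and
// pushing it to devices is left to the PBNM system behind PolicyStore.
final class BandwidthBroker {
    static final int EF_DSCP = 0x2e; // Expedited Forwarding code point

    private final PolicyStore store;

    BandwidthBroker(PolicyStore store) {
        this.store = store;
    }

    RouterRule translate(Sla sla) {
        RouterRule rule = new RouterRule(sla.ingressRouter(), sla.sender(),
                sla.receiver(), sla.bandwidthKbps(), EF_DSCP);
        store.store(rule);
        return rule;
    }
}
```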

Discussion 4 Bandwidth Broker for Premium Services

To support this theoretical overview, we developed a simple model of a PDP and a PEP, which, of course, lack the functionality found in today's commercial products (see [1]). Both are written in Java, but the PEP uses Linux traffic control capabilities, which means that it is not portable and works only on the Linux platform.

Discussion 4.1 Linux Diffserv

The Linux network code was completely rewritten starting with kernel version 2.2 and now natively supports many advanced features. Traffic control is only one of them, and the most interesting one for us. Its main building blocks are queuing disciplines, classes, filters and policing. The main advantage of traffic control on Linux is its flexibility: there are often different ways to accomplish a particular task. The main problem is that the tool for traffic control configuration is not user friendly and is not well documented ([4] and [11] are trying to change that). When the Diffserv standard was published, Linux traffic control was upgraded with some new features to support it. Reference [3] explains what was added and why.

There are also some other Linux Diffserv implementation proposals, but they are not widely accepted ([6] and KIDS from Karlsruhe University are known to the authors), and we will not consider them. The Linux tool for configuring traffic control is called tc, and it has been used in our programs.

Discussion 4.2 Policy Decision Point

PDP is a multithreaded server that waits for PEP initiated connections. Communication between a PDP and PEP is based on a very simple protocol that uses COPS logic and message names, but have just a few options. It is character oriented and can be easily implemented in Java programming language. Upon the establishment of a TCP connection initiated by PEP, and upon receiving an OPN (Open) message,

PDP checks whether it supports that PEP and responses with

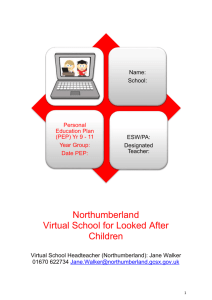

CAT (client accepted) or CC (Client Close) message. If PEP is supported, it adds it into a list of connected PEPs. After successfully establishing a few connections PDP looks like it is shown in Figure 2.
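A minimal Java sketch of this handshake follows. The line-oriented wire format (`OPN <pepId>` answered by `CAT` or `CC`) is a hypothetical stand-in, since the message syntax is not specified above. One thread is started per PEP-initiated connection. The handshake is kept separate from the socket code so that it can be exercised without a network.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.PrintWriter;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.List;
import java.util.Set;
import java.util.concurrent.CopyOnWriteArrayList;

// Sketch of PDP connection handling with a hypothetical one-message-per-line protocol.
final class SimplePdp {
    private final Set<String> supportedPeps;
    // Shared by all connection threads, hence a thread-safe list.
    final List<String> connectedPeps = new CopyOnWriteArrayList<>();

    SimplePdp(Set<String> supportedPeps) {
        this.supportedPeps = supportedPeps;
    }

    // Reads the OPN message and answers CAT or CC; returns true if the PEP was accepted.
    boolean handshake(BufferedReader in, PrintWriter out) {
        String line;
        try {
            line = in.readLine();
        } catch (IOException e) {
            return false;
        }
        if (line == null || !line.startsWith("OPN ")
                || !supportedPeps.contains(line.substring(4).trim())) {
            out.println("CC");
            return false;
        }
        connectedPeps.add(line.substring(4).trim());
        out.println("CAT");
        return true;
    }

    // Multithreaded server loop: one thread per PEP-initiated TCP connection.
    void serve(int port) throws IOException {
        try (ServerSocket server = new ServerSocket(port)) {
            while (true) {
                Socket socket = server.accept();
                new Thread(() -> {
                    try (socket;
                         BufferedReader in = new BufferedReader(new InputStreamReader(socket.getInputStream()));
                         PrintWriter out = new PrintWriter(socket.getOutputStream(), true)) {
                        if (handshake(in, out)) {
                            // The REQ/DEC exchange with this PEP would continue here.
                        }
                    } catch (IOException e) {
                        // Connection failed; nothing else to clean up in this sketch.
                    }
                }).start();
            }
        }
    }
}
```

Removing a PEP from the list when its connection closes is omitted for brevity.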

The PDP builds its domain structure on the basis of data received from the PEPs. It must know which routers are border routers and which interfaces are the domain's ingress and egress interfaces. It also needs to know the routers' available resources for specific services and their static routing tables. All this data is conveyed in a REQ (Request) message. This architecture cannot work with dynamic routing protocols, because route changes could jeopardize existing QoS guarantees. A new SLA is added manually by the network administrator. It contains basic data such as the kind of service, bandwidth, sender, receiver, ingress router, and starting and ending time. After a new SLA is added, the PDP must check whether there are enough available resources to fulfill the request.

Discussion 4.3 Policy Enforcement Point

Now we are going to make some insight into PEP’s behavior. As was already mentioned before, PEP runs on a

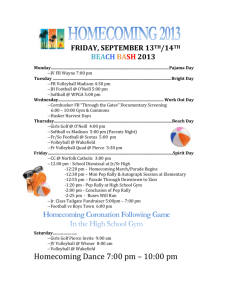

Linux operating system. In our case it is a RedHat distribution version 8.0 with installed JDK 1.4.2. After PEP is started, it needs information about its basic configuration. Configuration data are stored in an XML file that contains information about interfaces, their bandwidth sharing among different services, their roles and PEP static routing table. PEP parameters after initialization are shown in Figure 3.

Figure 2. Policy Decision Point

The algorithm the PDP uses for that purpose is simple and straightforward. Its steps are as follows:

1. Take from the SLA the address of our ingress router and of the neighboring domain's egress router. The ingress router's interface toward that neighbor egress router is the ingress interface for our domain;

2. Repeat steps 3 to 5 until you reach an egress router. By repeating these steps, we build the path that client packets will take in transit through our network;

3. Take the ingress and the egress interface of the current router. If these interfaces have sufficient resources for the time specified in the SLA, go to step 4; if not, go to step 7;

4. Add the current router to the path;

5. The next hop of the current router becomes the new current router;

6. The SLA can be accepted; stop;

7. The SLA cannot be accepted; stop.
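The steps above, together with the per-interface calendar described in the next paragraph, can be sketched in Java as follows. The sketch makes these assumptions:

- each router's static route toward the receiver names both the outgoing interface and the next hop's incoming interface;
- a router whose next hop lies outside the domain is the egress router;
- `Route`, `Router`, `InterfaceCalendar` and `Admission` are hypothetical names.

Committing the reservations after acceptance is not shown.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// One reservation in an interface calendar, valid for [start, end).
record Reservation(long start, long end, int kbps) {}

// Per-interface calendar of previously reserved premium bandwidth.
final class InterfaceCalendar {
    final int capacityKbps; // bandwidth assigned to the premium service
    final List<Reservation> reservations = new ArrayList<>();

    InterfaceCalendar(int capacityKbps) { this.capacityKbps = capacityKbps; }

    // Reserved bandwidth only rises at a reservation's start, so the peak over
    // [start, end) occurs at start or at a reservation start inside the interval.
    boolean canReserve(long start, long end, int kbps) {
        List<Long> points = new ArrayList<>();
        points.add(start);
        for (Reservation r : reservations) {
            if (r.start() > start && r.start() < end) points.add(r.start());
        }
        for (long t : points) {
            int used = 0;
            for (Reservation r : reservations) {
                if (r.start() <= t && t < r.end()) used += r.kbps();
            }
            if (used + kbps > capacityKbps) return false;
        }
        return true;
    }
}

// Hypothetical static route: leave via outIf toward nextRouter, entering it on nextInIf.
record Route(String outIf, String nextRouter, String nextInIf) {}

final class Router {
    final String name;
    final Map<String, InterfaceCalendar> interfaces = new HashMap<>();
    final Map<String, Route> staticRoutes = new HashMap<>(); // destination -> route
    Router(String name) { this.name = name; }
}

final class Admission {
    // Returns the path (router names) if the SLA can be accepted, otherwise null.
    static List<String> findPath(Map<String, Router> domain, String ingressRouter, String ingressIf,
                                 String receiver, long start, long end, int kbps) {
        List<String> path = new ArrayList<>();
        Router current = domain.get(ingressRouter);              // step 1
        String inIf = ingressIf;
        while (current != null && !path.contains(current.name)) { // step 2 (also stops routing loops)
            Route route = current.staticRoutes.get(receiver);
            if (route == null                                     // step 3
                    || !current.interfaces.get(inIf).canReserve(start, end, kbps)
                    || !current.interfaces.get(route.outIf()).canReserve(start, end, kbps)) {
                return null;                                      // step 7
            }
            path.add(current.name);                               // step 4
            if (!domain.containsKey(route.nextRouter())) {
                return path;                                      // egress router reached: step 6
            }
            inIf = route.nextInIf();                              // step 5
            current = domain.get(route.nextRouter());
        }
        return null; // unknown router or routing loop
    }
}
```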

For simplicity, we have not mentioned that each interface in the PDP's network structure has a calendar of previously reserved resources. This calendar must be updated after an SLA is added or deleted. Note also that this algorithm works only inside one domain.

Communication with other PDPs will be added later, and the only change in our algorithm will be in step 6. After our PDP discovers that the SLA can be accepted in its domain, it looks up two things in its table of neighbors: which neighbor our egress router for this SLA is connected to, and the address of the PDP supporting that domain (if the neighboring domain has a PDP). It then sends a request to the neighboring PDP and waits for a response. After the SLA is accepted, it is stored in an SLA repository and a timer is activated. When the moment for SLA execution comes, the timer triggers the event of sending the decision to the border routers. The decision is written in the form of XML text.
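A minimal Java sketch of the timer follows. It uses a `ScheduledExecutorService` that fires at the SLA's starting time. At that point it passes the decision, as XML text, to a callback standing in for the connection to the border router. The `AcceptedSla` fields and the `<dec>` element and attribute names are illustrative assumptions rather than a documented format.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

// Hypothetical accepted-SLA fields that the border router needs.
record AcceptedSla(String id, String ingressIf, String sender, String receiver, int kbps, Instant start) {}

final class SlaScheduler {
    private final ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
    private final Consumer<String> sendToBorderRouter; // e.g. writes a DEC to the PEP connection

    SlaScheduler(Consumer<String> sendToBorderRouter) {
        this.sendToBorderRouter = sendToBorderRouter;
    }

    // Arms a timer that fires at the SLA's start time and sends the decision.
    ScheduledFuture<?> schedule(AcceptedSla sla, Instant now) {
        long delayMs = Math.max(0, Duration.between(now, sla.start()).toMillis());
        return timer.schedule(() -> sendToBorderRouter.accept(toXml(sla)), delayMs, TimeUnit.MILLISECONDS);
    }

    // Decision as XML text; values are assumed to need no XML escaping.
    static String toXml(AcceptedSla sla) {
        return "<dec sla=\"" + sla.id() + "\" action=\"install\">"
                + "<interface>" + sla.ingressIf() + "</interface>"
                + "<sender>" + sla.sender() + "</sender>"
                + "<receiver>" + sla.receiver() + "</receiver>"
                + "<rate kbps=\"" + sla.kbps() + "\"/>"
                + "</dec>";
    }

    void shutdown() {
        timer.shutdown();
    }
}
```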

Figure 3. Policy Enforcement Point

During the initialization phase, the PEP installs static routing tables and configures interfaces. It does this by calling, from our Java program, the same commands we would use in the Linux shell; the same approach is used in [12]. At the beginning, the PEP deletes all existing traffic control configuration and applies its own from the configuration file. The best effort service is implemented by default. Besides it, for simplicity, we decided to implement only the premium service (equivalent to a constant bit rate service in ATM networks). Other services can be added later in the same manner.
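Calling shell commands from Java can be sketched with `ProcessBuilder`. The commands below are standard `tc` and `ip` invocations: they delete the root and ingress qdiscs of an interface and add a static route. The interface names, prefixes and the use of `ip route` rather than another routing tool are assumptions. Running the commands requires root privileges on Linux.

```java
import java.io.IOException;
import java.util.List;

// Sketch of the PEP's start-up: clear existing tc state and install static routes
// by running the same commands one would type in a shell. Names are hypothetical;
// in the PEP they would come from the XML configuration file.
final class PepInit {
    // Removes any root and ingress qdiscs left on an interface.
    static List<List<String>> resetCommands(String dev) {
        return List.of(
                List.of("tc", "qdisc", "del", "dev", dev, "root"),
                List.of("tc", "qdisc", "del", "dev", dev, "ingress"));
    }

    // Equivalent to: ip route add <prefix> via <gateway>
    static List<String> staticRoute(String prefix, String gateway) {
        return List.of("ip", "route", "add", prefix, "via", gateway);
    }

    // Runs one command and returns its exit status. A delete may fail harmlessly
    // when there is nothing to delete, so callers should inspect the status.
    static int run(List<String> command) throws IOException, InterruptedException {
        Process p = new ProcessBuilder(command)
                .redirectErrorStream(true)
                .redirectOutput(ProcessBuilder.Redirect.DISCARD)
                .start();
        return p.waitFor();
    }
}
```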

The entire initial configuration remains unchanged during PEP operation. Only filters at ingress interfaces are added and deleted after receiving the PDP's decisions. Traffic that does not match an ingress filter is treated as best effort (BE) traffic, while premium service traffic that does not conform to the SLA is discarded. The logical representation of the ingress router's queuing disciplines, classes and filters is shown in Figure 4.
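The per-decision part of this configuration can be sketched as building `tc` command lines for an ingress interface. An `ingress` qdisc is attached once. Then, for each SLA, a `u32` filter matches sender and receiver and polices the traffic to the agreed rate, dropping the excess. The 10 kbyte burst, the /32 host masks and the method names are hypothetical, and the egress `dsmark` setup of Figure 4 is not shown. The exact syntax should be checked against the tc(8) manual for the installed iproute2 version.

```java
import java.util.ArrayList;
import java.util.List;

// Builds tc commands for premium (EF) traffic at a border router's ingress interface.
final class IngressConfig {
    static final int EF_DSCP = 0x2e;

    // One-time setup: attach the ingress qdisc (handle ffff:) to the interface.
    static List<String> ingressQdisc(String dev) {
        return List.of("tc", "qdisc", "add", "dev", dev, "handle", "ffff:", "ingress");
    }

    // Per-decision filter: match the SLA's sender and receiver, police to the agreed
    // rate and drop out-of-profile packets. Unmatched traffic stays best effort.
    static List<String> premiumFilter(String dev, String src, String dst, int rateKbit, int prio) {
        List<String> cmd = new ArrayList<>(List.of("tc", "filter", "add", "dev", dev,
                "parent", "ffff:", "protocol", "ip", "prio", Integer.toString(prio), "u32"));
        cmd.addAll(List.of("match", "ip", "src", src + "/32"));
        cmd.addAll(List.of("match", "ip", "dst", dst + "/32"));
        cmd.addAll(List.of("police", "rate", rateKbit + "kbit", "burst", "10k", "drop", "flowid", ":1"));
        return cmd;
    }

    // The DSCP occupies the upper six bits of the former TOS byte, so a marker that
    // writes the whole byte needs 0xb8 for EF (0x2e << 2).
    static int tosByte(int dscp) {
        return dscp << 2;
    }
}
```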

Figure 4. Ingress router queuing disciplines. (Ingress interface: INGRESS qdisc with EF filters 1 to N; FORWARDING; egress interface: DSMARK qdisc whose filters F ef and F be set DSCP 0x2e for EF and 0x00 for BE.)

The situation is similar at core routers. These routers do not perform filtering at ingress interfaces, because the packets they receive have already been filtered at border routers. In ingress queues, packets are served in FIFO order. Egress interfaces of core routers are configured with the CBQ (Class Based Queuing) queuing discipline designed by Floyd and Jacobson [9]. Packets are placed in one of two queues according to their DSCP. Expedited forwarding (EF) traffic has higher priority and is classified into a FIFO queue, while BE traffic goes into a queue with the RED (Random Early Detection) queuing discipline. The logical representation of the interface configuration in a core router is given in Figure 5.
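The behavior of a core router's egress interface can be approximated by a toy Java model. EF packets go to a FIFO queue that is always served first, and BE packets go to a queue guarded by basic RED. This is a simplified illustration of strict priority plus RED, not CBQ's link-sharing scheduler. The RED thresholds, maximum drop probability and averaging weight are hypothetical values.

```java
import java.util.ArrayDeque;
import java.util.Random;

// Toy model of a core router's egress interface: EF FIFO served first, BE queue with RED.
final class CoreEgress {
    static final int EF_DSCP = 0x2e;

    record Packet(int dscp, int id) {}

    private final ArrayDeque<Packet> ef = new ArrayDeque<>();
    private final ArrayDeque<Packet> be = new ArrayDeque<>();
    private final Random random;

    // RED parameters: thresholds in packets, maximum drop probability, EWMA weight.
    private final double minTh = 5, maxTh = 15, maxP = 0.1, weight = 0.002;
    private double avg = 0; // exponentially weighted average BE queue length

    CoreEgress(Random random) {
        this.random = random;
    }

    // Basic RED drop probability as a function of the average queue length.
    double dropProbability(double avgQueue) {
        if (avgQueue < minTh) return 0;
        if (avgQueue >= maxTh) return 1;
        return maxP * (avgQueue - minTh) / (maxTh - minTh);
    }

    // Classifies by DSCP; returns false if a BE packet was dropped by RED.
    boolean enqueue(Packet p) {
        if (p.dscp() == EF_DSCP) {
            ef.addLast(p);
            return true;
        }
        avg = (1 - weight) * avg + weight * be.size();
        if (random.nextDouble() < dropProbability(avg)) return false;
        be.addLast(p);
        return true;
    }

    // Strict priority: EF first, then BE; null if both queues are empty.
    Packet dequeue() {
        Packet p = ef.pollFirst();
        return p != null ? p : be.pollFirst();
    }
}
```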

Figure 5. Core router queuing disciplines. (Ingress interface; FORWARDING; egress interface: CBQ qdisc with an EF class served by a FIFO queue, selected by filter F ef, and a BE class served by a RED queue.)

Egress routers have the same configuration as core routers. Border routers work as ingress or egress routers depending on the traffic direction, and their configuration is a composition of the ingress and egress router configurations.

PEP-specific data important to the PDP (like ClientSI in COPS) is conveyed in a request (REQ message). After sending the REQ, the PEP is ready to receive decisions (DEC messages). Decisions from the PDP do not reference data from any PIB or management information base (MIB); they are simply the parts of the SLA that are important to the PEP. Requests and decisions are XML data. From a DEC message, the PEP extracts the data for installing or deleting a filter on an ingress interface.
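Extracting filter data from a DEC can be sketched with the JDK's built-in DOM parser. The `<dec>` layout is a hypothetical format chosen for illustration: an `action` attribute plus `interface`, `sender`, `receiver` and `rate` elements.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.xml.sax.SAXException;

// Filter data the PEP needs from a decision; element and attribute names are hypothetical.
record FilterDecision(boolean install, String dev, String sender, String receiver, int rateKbps) {

    static FilterDecision parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            Element root = doc.getDocumentElement();
            Element rate = (Element) root.getElementsByTagName("rate").item(0);
            return new FilterDecision(
                    "install".equals(root.getAttribute("action")), // otherwise a delete
                    text(root, "interface"),
                    text(root, "sender"),
                    text(root, "receiver"),
                    Integer.parseInt(rate.getAttribute("kbps")));
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("malformed decision", e);
        }
    }

    private static String text(Element root, String tag) {
        return root.getElementsByTagName(tag).item(0).getTextContent().trim();
    }
}
```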

Conclusions

When talking about QoS in the Internet, we have to say that experimental results with the Premium IP service in GÉANT are promising [10]. However, much more has to be done before such services gain acceptance among commercial ISPs. There are still many open issues, not just for engineers, but also for economists, lawyers, politicians and sociologists. One of the main problems with Diffserv is whether it can provide satisfactory QoS at all. Its scalability comes at the price of coarse service classification, and the question is: "What is the optimal tradeoff between these two characteristics?" Articles dealing with that problem include [7] and [16].

Other important problems are interdomain and intradomain tariffing, monitoring of received and provided services, and unauthorized service usage. In our work we concentrated on providing end-to-end QoS guarantees, following IETF achievements in that direction. The disadvantages of this two-tiered PEP-PDP approach are outlined in [13], whose authors propose introducing a middle tier to serve as a proxy between PEP and PDP. Despite all the obstacles, we believe that the presented technologies are going to shape the future of our networked world.

References

[1] Allot Communications, "Policy-Based Network Architecture," White Paper; http://www.allot.com/media/ExternalLink/policymgmt.pdf

[2] Almesberger, W., "Linux Traffic Control – Next Generation," (Oct. 2002), http://tcng.sourceforge.net/doc/tcng-overview.pdf

[3] Almesberger, W. et al., "Differentiated Services on Linux," Internet draft, <draft-almesberger-wajhak-diffserv-linux-00.txt> (Feb. 1999).

[4] Balliache, L., "Differentiated Service on Linux HOWTO," (Aug. 2003), http://opalsoft.net/qos/DS.htm

[5] Blake, S. et al., "An Architecture for Differentiated Services," RFC 2475 (Dec. 1998).

[6] Braun, T. et al., "A Linux Implementation of a Differentiated Services Architecture for the Internet," Internet draft, <draft-nichols-diff-svc-arch-00.txt> (Nov. 1997).

[7] Christin, N., J. Liebeherr, "A QoS Architecture for Quantitative Service Differentiation," IEEE Comm. Magazine, Vol. 41, No. 6 (2003), pp. 38-45.

[8] Durham, D. et al., "The COPS (Common Open Policy Service) Protocol," RFC 2748 (Jan. 2000).

[9] Floyd, S., V. Jacobson, "Link-sharing and Resource Management Models for Packet Networks," IEEE/ACM Transactions on Networking, Vol. 3, No. 4 (1995).

[10] Giordano, S., S. Salsano, S. Van den Berghe, G. Ventre, D. Giannakopoulos, "Advanced QoS Provisioning in IP Networks: The European Premium IP Projects," IEEE Comm. Magazine, Vol. 41, No. 1 (2003), pp. 30-36.

[11] Hubert, B., "Linux Advanced Routing & Traffic Control," http://lartc.org

[12] Jha, S., M. Hassan, Engineering Internet QoS, Artech House (2002), pp. 69-212.

[13] Law, E., A. Saxena, "Scalable Design of a Policy-Based Management System and Performance," IEEE Comm. Magazine, Vol. 41, No. 6 (2003), pp. 72-79.

[14] Nichols, K. et al., "A Two-bit Differentiated Services Architecture for the Internet," Internet draft, <draft-nichols-diff-svc-arch-00.txt> (Nov. 1997).

[15] Wang, Z., Internet QoS: Architectures and Mechanisms for Quality of Service, Morgan Kaufmann Publishers (San Francisco, 2001), pp. 79-133.

[16] Welzl, M., M. Mühlhäuser, "Scalability and Quality of Service: A Trade-off?," IEEE Comm. Magazine, Vol. 41, No. 6 (2003), pp. 32-36.