COST733CAT - a database of weather and circulation type

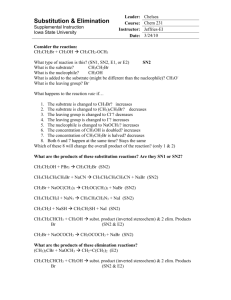

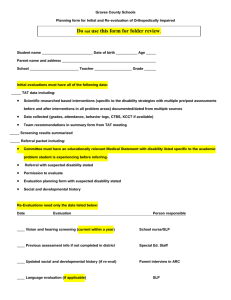

COST733CAT - a database of weather and circulation type classifications

Authors
Philipp A., J. Bartholy, C. Beck, M. Erpicum, P. Esteban, R. Huth, P. James, S. Jourdain, T. Krennert, S. Lykoudis, S. Michalides, K. Pianko, P. Post, D. Rassilla Álvarez, A. Spekat, F. S. Tymvios

Abstract
A new database of classification catalogs has been compiled during the COST Action 733 "Harmonisation and Applications of Weather Type Classifications for European regions" in order to evaluate different methods for weather and circulation type classification. This paper gives a technical description of the included methods and provides a basis for further studies dealing with the dataset. Even though the list of included methods is far from complete, it demonstrates the huge variety of methods and their variations. In order to allow a systematic overview, a new conceptual systemization of classification methods is presented, reflecting the way types are defined. Methods using predefined types include manual and threshold-based classifications, while methods producing derived types include those based on eigenvector techniques, leader algorithms and optimization algorithms like k-means cluster analysis. The different methods are discussed with respect to their intention and implementation.

Introduction
Classification of weather and atmospheric circulation states into distinct types is a widely used tool for describing and analyzing weather and climate conditions. The principal idea is to transfer multivariate information given on the metrical scale in an input dataset, e.g. a time series of daily pressure fields, to a univariate time series of type membership on the nominal scale, i.e. a so-called classification catalog. The advantage of such a substantial information compression is the straightforward use of the catalogs.
On the other hand, the loss of information caused by the classification process sometimes makes it difficult to relate the remaining information to other climate elements like temperature or precipitation, which in most cases is the main objective for applications of classifications. It may be a consequence of this tension that the number of different classification methods is huge and still increasing, in the hope of finding a classification method that produces a simple catalog but reflects the most relevant variability of the climate system. However, the number of classification methods and their results is a drawback, as it is hard to decide which one to use for a certain application. This was the reason to initiate COST Action 733, entitled "Harmonisation and Applications of Weather Type Classifications for European regions". The main goal of this network of European meteorologists and climate scientists is to systematically compare different classification methods and to evaluate whether there might be one or a few universally superior methods which can be recommended. Further, it should be evaluated whether it is possible to combine favorable properties of existing methods in order to develop a reference classification. The basis for this work has been established by producing a database of classification catalogs (called cost733cat) under unified conditions concerning the input dataset and the configuration of the classification procedure, in order to make the resulting catalogs as comparable as possible concerning the method alone. As a consequence, the included catalogs might be suboptimal for some applications, e.g. all methods have been applied to mean sea level pressure, while the 500 hPa geopotential height might be better for some applications.
However, the current version of the collection, which will be made available for free at the end of COST Action 733 in 2010, can be applied to more than intercomparison questions, and future versions might include more suitable configurations.
[COMMENT: a more detailed justification of the selection of fixed numbers of categories, in the first place, and in particular 9, 18 and 27, in the second stage, should be included unless this is covered in another paper, e.g. by WG3. The reason is that even among us there is still a discussion about the physical meaning and the "quality" (in terms of being appropriate for use in certain topics) of the classifications in the catalogue. So we need to stress the intended use, that is, intercomparison of the methods in terms of discriminating power/efficiency both of the patterns of key meteorological parameters (WG3) and in other applications (WG4). COMMENT] I tried to point this out above (48ff).
In order to describe the included methods, a new systemization has been developed within the COST Action and is used in the following.
Classification methods have long been divided into two main groups, e.g. by Yarnal (1993). The first group has been called "manual", while the second group is called "automated". An alternative distinction refers to "subjective" versus "objective" methods, which is not quite the same, since automated methods, which are often seen as objective, always include subjective decisions. A third group, called "hybrid" methods, has been established (e.g. Sheridan 2002), referring to methods that define the types subjectively but assign all observation patterns automatically.
Another distinction could be made between circulation type classifications (CTC), including only information on the atmospheric circulation like air pressure, and so-called weather type classifications (WTC), which also include information about other weather elements like temperature, precipitation, etc. However, apart from the subjective methods, WTCs are rare, and actually only one method using parameters other than pressure fields is included in cost733cat. The reason is probably the demand from the majority of applications to use CTCs for relating circulation to target variables (circulation-environment approach after Yarnal REF), e.g. for downscaling where only reliable circulation data are available.
With the growing availability of computing capacity during the last decades, the number of automated CTC methods has increased considerably, since it is now easy to modify existing algorithms and produce new classifications. This increased variety makes it necessary to find a new systematization of methods, especially accounting for the increased diversity of automated methods and their algorithms (see Huth et al. 2009 for further developments).
This paper is organized as follows: after the description of the classification input dataset, the new methodological systemization is presented and used as the structure for the description of the individual classification methods. Concluding remarks point out the differences and commonalities from the technical point of view.

Input data and configuration
In order to be able to evaluate differences between the resulting classification catalogs, all methods have been applied to daily 12 o'clock ERA40 reanalysis data (REF) within the period 09/1957 to 08/2002 covering all months, excluding discrepancies caused by different input datasets.
However, while most of the authors contributing to the catalog dataset originally used sea level pressure (SLP), some methods have been applied to other parameters like the geopotential height of the 500 hPa level or wind components, humidity and temperature (the three latter parameters only in one single method called WLK). Therefore, in order to further reduce sources of differences for comparisons, at least one variant of each method has been produced using SLP only.
Another important feature is the clipping of the ERA40 1° by 1° grid. Different spatial scales and regions are covered by a set of 12 unified domains throughout Europe, presented in Figure 1 and Table 1. The largest covers the whole of Europe at a reduced grid resolution (domain 0), while the smallest is confined to the greater Alpine area, comprising 12x18 grid points (domain 6). All methods have been applied to all 12 domains.
[Figure 1.]
[Table 1.]
While some methods allow an arbitrary number of types to be chosen (like cluster analysis), others are limited to one or a few numbers, either due to their concept (e.g. division into wind sectors) or for technical reasons (e.g. leading to empty classes). The original numbers of types vary between 4 and 43, which makes it rather difficult to compare the classifications. Therefore three reference numbers have been specified, namely 9, 18 and 27 types, in order to limit the departure from these numbers to a maximum of 2 types for each method. Accordingly, all methods have been run three times, once for each of the reference numbers of types.
An overview of the different variants of classifications is presented in Table 2.
[Table 2.]

Methods and systemization
From the methodological point of view, two main groups can be discerned concerning the way types are defined.
The first strategy is to establish a set of types prior to the process of assignment (called "predefined types" hereafter), while the second is to arrange the entities to be classified (daily patterns in this case) following a certain algorithm such that the types are, together with the assignment, the result of the process (called "derived types").
...
I. Methods using predefined types
Methods using predefined types include those with subjectively chosen circulation patterns and/or weather situations and those where the allocation of days to a type depends on thresholds. The latter thus define the types indirectly by declaring a boundary, e.g. a distinction is made between days with a westerly main flow direction over the domain and days with northerly, easterly or southerly direction, where the angle between the sectors serves as a threshold and boundary between the types.
...
I.1. Subjective definition of types
A common feature of the subjective classifications is their relatively high number of types, ranging between 29 and 43, except for the Peczely classification with 13 types.
...
HBGWL/HBGWT - Hess Brezowsky Grosswetterlagen/-typen
One of the most famous catalogs is surely the one founded by Baur and revised and developed by Hess and Brezowsky for central Europe. It is now maintained in Potsdam by Gerstengarbe and Werner and freely available at ...
The concept of type definition strongly follows the onflow direction of air masses onto central Europe, discerning zonal, mixed and meridional types, which are further divided on a second hierarchical level into a total of 10 "Großwettertypes" and on the last hierarchical level into 29 "Großwetterlagen" and one undefined type for transition patterns.
The subjective definition of types and assignment of daily patterns includes the knowledge of the authors about the importance of specific circulation patterns for temperature and precipitation conditions in central Europe. Therefore they are called weather types rather than circulation types, because weather elements other than circulation patterns are included in the classification process.

OGWL - Objective Grosswetterlagen
An objectivized version of the "Hess and Brezowsky Großwetterlagen" has been produced by James () using only circulation composites of the original classification and newly assigning the daily circulation patterns to the types by finding the minimum Euclidean distance.

PECZELY
Gyorgy Peczely, a Hungarian climatologist (1924-1984), originally published his macrocirculation system in 1957. The system was defined on the basis of the geographical location of cyclones and anticyclones over the Carpathian Basin. Altogether 13 types were defined.
The abbreviation, the corresponding number (used in the code file) and a short description of the 13 circulation types are:
Meridional, northern types
  mCc (1)  Cold front with meridional flow
  AB  (2)  Anticyclone over the British Isles
  CMc (3)  Cold front arising from a Mediterranean cyclone
Meridional, southern types
  mCw (4)  Warm front arising from a meridional cyclone
  Ae  (5)  Anticyclone located east of the Carpathian Basin
  CMw (6)  Warm front arising from a Mediterranean cyclone
Zonal, western types
  zC  (7)  Zonal cyclone
  Aw  (8)  Anticyclone located west of the Carpathian Basin
  As  (9)  Anticyclone located south of the Carpathian Basin
Zonal, eastern types
  An  (10) Anticyclone located north of the Carpathian Basin
  AF  (11) Anticyclone located over the Scandinavian Peninsula
Central types
  A   (12) Anticyclone located over the Carpathian Basin
  C   (13) Cyclone located above the Carpathian Basin
After the death of Gyorgy Peczely (professor at Szeged University, Hungary), one of his followers, Csaba Karossy (professor at Berzsenyi Daniel College, Szombathely, Hungary), continued the coding process.

PERRET

ZAMG

I.2. Threshold based methods
GWT - Grosswettertypen or Prototype classification
This classification approach is based on predefined circulation patterns determined according to the subjective classification of the so-called Central European Großwettertypes (Hess and Brezowsky, 1952; Gerstengarbe and Werner, 1993). It is assumed that these 10 Großwettertypes, resulting from a generalization of 29 large-scale weather patterns defined by the geographical position of major centres of action and the location and extension of frontal zones (Gerstengarbe and Werner, 1993), can be sufficiently characterized in terms of varying degrees of zonality, meridionality and vorticity of the large-scale SLP field over Europe.
On the basis of this assumption, the classification scheme consists of the following steps:
First, three prototypical SLP patterns are defined for the region 19°W-38°E and 36°N-64°N, representing idealized W-E, S-N and central low-pressure isobars over the European region.
Spatial correlations with these prototypical patterns are calculated for all monthly mean SLP grids; they will be addressed as coefficients of zonality (Z), meridionality (M) and vorticity (V), respectively.
The ten Central European Großwettertypes are defined by means of particular combinations of these three correlation coefficients: the Großwettertypes high and low pressure over Central Europe result from a maximum V coefficient (negative and positive, respectively). The remaining eight main circulation types are defined in terms of the Z and M coefficients corresponding to the main isobar directions over central Europe (e.g. Z = 1 and M = 0 for the W-E pattern, Z = 0.7 and M = 0.7 for the SW-NE pattern, and so on). Each monthly SLP grid outside the high- and low-pressure samples is assigned to one of these direction types according to the minimum Euclidean distance of its Z and M coefficients from those of the predefined prototypes.
A further subdivision of these eight circulation type samples into cyclonic and anticyclonic subsamples is achieved according to the sign of the corresponding V coefficient.

LITADVE/LITTC - Litynski advection and circulation types
In the following steps, the part after each slash applies to the variant using three indices.
1.) Calculate two / three indices: meridional Wp and zonal Ws / and sea level pressure at the central point of the domain, Cp. Wp and Ws are defined by the averaged components of the geostrophic wind vector and describe the advection of the air masses.
2.) Calculate the lower and upper boundary values for Wp, Ws / and Cp for each day.
3.) The component is N (Wp), E (Ws) / N (Wp), E (Ws), C (Cp) when the index is less than the lower boundary value.
4.) The component is 0 (Wp), 0 (Ws) / 0 (Wp), 0 (Ws), 0 (Cp) when the index is between the lower and upper boundary values.
5.) The component is S (Wp), W (Ws) / S (Wp), W (Ws), A (Cp) when the index is not less than the upper boundary value.
6.) Finally, the type is the superposition of these two / three components.

LWT2 - Lamb weather types version 2
The LWT2 method is a modified and improved version of the objective Jenkinson-Collison (JC) system for classifying daily MSLP fields into 26 flow categories, indicating flow direction and vorticity.
The LWT2 grid can be placed anywhere. The vorticity and flow strength thresholds (of JC) are modified dynamically so that exactly 33% of days (in ERA40) fall into each of the three vorticity classes Ax, Ux and Cx.
The size of the LWT2 grid is usually optimized so that the mean within-type pattern correlations are maximized. However, for the COST733 sub-domains, a fixed grid size is forced as a function of the sub-domain size. Thus, while free LWT2 grids normally have a typical north-south extent of about 24-30 degrees of latitude, the forced LWT2 grids for the WG2 sub-domains have extents of 12-18 degrees. The large domain's LWT2 grid is also forced and has an extent of 46 degrees, much larger than normal.

WLK - Wetterlagenklassifikation
1.) Calculate the main wind sector: the wind direction at 700 hPa indicates the flow and is derived from the u- and v-components of the true wind. 2/3 of all weighted wind directions have to fall into a sector of 10°. The 10° sector is then assigned to a quadrant.
2.) For each gridpoint, ∇²(geopotential) and its weighted area mean [gpdm2/km2*10.000] are calculated.
3.) The weighted area mean value of precipitable water (whole atmosphere) is compared to the area daily mean value (annual variation), indicating values above or below the daily mean.

II. Methods producing derived types
II.1. PCA based methods

TPCA - t-mode principal component analysis
Short description of the TPCA method
TPCA = principal component analysis in T-mode
a) Introduction and historical context
The potential of principal component analysis (PCA) to be used as a classification tool was suggested by Richman (1981), and the idea was developed and discussed more deeply by Gong and Richman (1995). The basic idea of using PCA as a classification tool consists in assigning each case to that principal component for which it has the highest loading. To classify circulation patterns (as well as patterns of other atmospheric variables), PCA should be used in T-mode (i.e., rows of the data matrix correspond to gridpoints and columns to days), not in S-mode (where rows correspond to days and columns to gridpoints). This was discussed and demonstrated, to varying extents, by Richman (1986), Drosdowsky (1993), Huth (1993, 1996a), and Compagnucci and Richman (2007). The first application of T-mode PCA to the classification of circulation patterns dates back to Compagnucci and Vargas (1986), who analyzed artificial data; the first analysis of this kind based on real data was conducted by Huth (1993). In order to obtain a real classification, rotated PCA must be used; usually, an oblique rotation yields better results than an orthogonal one (Huth 1993). Nevertheless, results of an unrotated analysis can also be interpreted, though not directly as circulation types (e.g., Compagnucci and Salles 1997).
The classification based on raw data yields better results than that based on anomalies, because the latter creates artificial types and has difficulties with classifying patterns close to the time-mean flow. The use of correlation rather than covariance as a similarity measure is recommended, since the latter would give more influence to the patterns with larger spatial variability, for which there is no reason; in fact, the difference in results between the correlation and covariance matrix is usually small (Huth 1996a). An important choice is the number of principal components to retain and rotate; Huth (1996a) showed that those numbers of PCs selected by the rule of O'Lenic and Livezey (1988), i.e., whose eigenvalue is well separated from the following one, tend to yield better solutions. When raw data are used, the number of types (classes) is identical to the number of principal components. A comparison of various circulation classification methods (Huth 1996b) demonstrated that TPCA is excellent in uncovering the real structure of data (i.e., it succeeds in reproducing a classification known in advance), however at the expense of a lower between-group separation. It is important to stress that the fact that the correlation / covariance matrix in TPCA in a typical climatological setting (more temporal than spatial points) is singular does not pose any limitations on the calculation procedure and does not lead to the instability of results, as alleged e.g. by Ehrendorfer (1987). Other applications of T-mode PCA as a pattern classification tool (not only of circulation fields) include Bartzokas et al. (1994), Bartzokas and Metaxas (1996), Huth (1997), De and Mazumdar (1999), Huth (2000, 2001), Compagnucci et al. (2001), Salles et al. (2001), Jacobeit et al. (2003), Müller et al. (2003), Brunetti et al. (2006), and Huth et al. (2007).
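The assignment rule at the core of this approach (each day goes to the principal component on which it has the highest loading) can be illustrated with a minimal sketch. The function name and the loading values are hypothetical and only serve as an illustration; computing and rotating the components themselves is not shown.

```python
def classify_by_highest_loading(loadings):
    """Return, for each day, the 1-based index of the PC with the highest loading.

    `loadings` holds one row per day; each row contains that day's loadings
    on the retained (rotated) principal components.
    """
    types = []
    for row in loadings:
        best = max(range(len(row)), key=lambda k: row[k])
        types.append(best + 1)
    return types


# Hypothetical loadings of four days on three rotated PCs.
example_loadings = [
    [0.82, 0.10, -0.05],
    [0.15, 0.67, 0.30],
    [-0.20, 0.25, 0.71],
    [0.55, 0.60, 0.01],
]
catalog = classify_by_highest_loading(example_loadings)
print(catalog)  # [1, 2, 3, 2]
```

The resulting list is a classification catalog in the sense used above: one type number per day.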
b) Settings of the method
Here we apply TPCA in a setting similar to Huth (2000). Since the calculation of the correlation matrix and principal components (the size of the correlation matrix in our case would be 16436 x 16436) is extremely demanding on computer resources and is practically intractable on a personal computer, we avoid it by calculating PCA on a subset of the data and subsequently projecting the obtained principal components onto the rest of the data. Such an approach was shown to be reliable. The procedure is therefore as follows:
1. Data preprocessing: the spatial mean is calculated for each pattern and subtracted from the data. The reason is that it is reasonable to treat patterns with the same spatial structure, differing only by a constant, as identical.
2. The dataset is divided into ten subsets by selecting the 1st, 11th, 21st, etc. day as the first subset; the 2nd, 12th, 22nd, etc. as the second subset, ..., and the 10th, 20th, 30th, etc. as the tenth subset. Steps 3 to 9 are repeated for each subset separately.
3. The correlation matrix is calculated.
4. Principal components (loadings and scores) are calculated.
5. The plausible numbers of principal components are selected: the criterion is that there is a pronounced drop between the selected and the next eigenvalue in the eigenvalue vs. PC number diagram. Usually two to three numbers are selected. (The plausible numbers of PCs are usually the same in all the analyses of the ten subsamples.)
6. The oblique rotation (direct oblimin method) is conducted for the numbers of components selected above.
7. The principal components are projected onto the rest of the data by solving the matrix equation $\Phi A^{T} = F^{T} Z$ (here $F$ and $\Phi$ are the matrices of PC scores and PC correlations, respectively, $Z$ is the full data matrix, and $A$ are the "PC loadings" (pseudo-loadings) to be determined).
8. The sign of principal components with prevalent negative sign is changed (the sign is assigned to the components to some extent randomly and has no real meaning).
9. Each day is classified with that PC (type) for which it has the highest loading.
10. Contingency tables are used to compare the resultant classifications based on the ten subsamples. The classifications are usually comparable (i.e., yield very similar types); if one (or several) of them differs considerably from the rest, it is not considered further.
11. The final classification (one for each selected number of PCs) is chosen at random from those passing step 10. That is, if e.g. three numbers of PCs were chosen as plausible for a given domain, three different final classifications are produced.
The following classifications are available:
domain  no. of types
00      7, 12
01      7, 9
02      7, 11
03      7, 9
04      7, 9
05      7, 9
06      7, 9
07      7, 9
08      7
09      7, 9
10      7, 9
11      7, 6
The classifications are denoted as follows: Huth_TPCAnn_Dxx.txt, where xx is the number of the domain (two digits) and nn is the number of types (two digits).
Note: Due to the split into a classification TPCA07 (7 PCs for all domains) and TPCA (varying PCs for the domains), the names have now been changed to Huth_TPCA_Dxx.txt without the numbers.

P27 - Kruizinga empirical orthogonal function types
P27 is a simple eigenvector-based classification scheme (Kruizinga 1978, 1979). The scheme uses daily 500 hPa heights on a regular grid (originally 6 x 6 points with a step of 5° in latitude and 10° in longitude). The actual 500 hPa height, $h_{tq}$, for day $t$ at gridpoint $q$ is first reduced by subtracting the daily average height, $h_t$, over the grid. This operation removes a substantial part of the annual cycle. The reduced 500 hPa heights $p_{tq}$ are given by:
$$p_{tq} = h_{tq} - h_t, \quad q = 1, \dots, n; \quad t = 1, \dots, N,$$
where $n$ is the number of gridpoints and $N$ the number of days.
The vector of reduced 500 hPa heights is approximated as:
$$p_t \approx s_{1t} a_1 + s_{2t} a_2 + s_{3t} a_3, \quad t = 1, \dots, N.$$
The $a_i$ are the first three principal component vectors (eigenvectors of the second-moment matrix of $p_t$) and the $s_{it}$ are their amplitudes or scores.
The flow pattern of a particular day is described by three amplitudes, $s_{1t}$, $s_{2t}$ and $s_{3t}$:
$s_{1t} a_1$ characterizes the east-west component of the flow,
$s_{2t} a_2$ the north-south component and
$s_{3t} a_3$ the cyclonicity (or anticyclonicity).
The range of each amplitude is divided into three equiprobable intervals; each pattern is then, on the basis of its amplitudes, uniquely assigned to one of the 3 x 3 x 3 = 27 possible interval combinations. The digits of the type number indicate the intervals to which the type belongs. The first digit shows the intensity of the westerly flow (1 is easterly or weak westerly, 2 medium and 3 strong westerly flow), the second digit shows the intensity of the southerly flow (1 is northerly and 3 is southerly). The third digit shows the direction of the vorticity (1 anticyclone, 3 cyclone, 2 something in between).

PCAXTR - principal component analysis extreme scores
The methodology is based on the well-known Rotated Principal Component Analysis, introducing some modifications:
- S-mode with previous standardization of the rows (spatial standardization)
- Correlation matrix
- Scree test and North's rule of thumb as selection methods
- Varimax (orthogonal) rotation
- Decision on the number of clusters and their centroids with the "extreme scores method" (Esteban et al. 2005, 2006, 2009)
- Assignment of the remaining cases to the groups by Euclidean distance to the nearest centroid.
In this way, the main novelty of this procedure is the so-called "extreme scores method", intended to facilitate the decision on the number of groups and the centroids needed for the classifications.
For this purpose, the spatial variation patterns (modes) established by the PCA, i.e. the principal components retained and rotated, are considered in their positive and negative phases as potential groups for the classification of circulation patterns. The centroids are calculated by averaging the days that fulfil the principle of the "extreme scores" for a certain pattern and phase: observations with high score values for a certain component (normally values higher than +2 for the positive phase, or lower than -2 for the negative phase) but with low score values for the remaining components (normally between +1 and -1) are selected. Using this technique, if there is no real case that can be assigned to the PC being checked (i.e., there is no day with a similar spatial structure), this component and phase are considered an artefact of the PCA, and the potential group (circulation pattern) is eliminated. In brief, the extreme scores procedure establishes the number of groups and their centroids for the clustering method, but also acts as a filter to avoid artefacts in the final classification if the Varimax rotation previously used in the PCA has not done so as well as expected.

II.2. Leader algorithm
Methods based on the so-called leader algorithm were established when computing capacity had become available but was still limited. They try to find key (or leader) patterns in the sample of maps, which are located in the center of high-density clouds of entities within the multidimensional phase space spanned by the variables, i.e. grid point values. Thus the aim is close to that of non-hierarchical cluster analysis, but the expensive iterations of the latter are avoided.

LUND
1.) Calculate Pearson correlation coefficients between all days,
2.) for each day count all correlation coefficients r > 0.7,
3.) define the day with the largest number of correlation coefficients r > 0.7 as key pattern day for type 1,
4.) remove from the data set the key pattern day for type 1 as well as all days with a correlation coefficient r > 0.7 to that key day,
5.) on the remaining data set apply steps 2.) to 4.) for the next types, until all types (of the predefined number of types) have a key day,
6.) assign days to a key day according to the highest correlation coefficient.

E - Erpicum et al.
Our classification algorithm works like this:
1. We compute the geometrical 3D direction of the vertical vector of the 500 hPa geopotential (Z500) / sea level pressure (SLP) surface for all grid points for each domain.
2. We compute the cosine of the angle between the vertical vectors of each pair of different days, for each grid point.
3. We sum these cosines to create a similarity index for all pairs of weather maps (of the ERA-40 period, i.e. 09/1957 to 08/2002). This index is normalized by the number of grid points. It equals 1 for a weather map compared with itself. It is near zero if the two weather maps (i.e. days) are very different.
4. For a given similarity index threshold (from 1 to 0), we compute for each weather map the number of similar weather maps (i.e. those whose similarity index is higher than the threshold). The reference weather map of the first class is the one with the maximum number of similar weather maps, the second one is that of the second largest class, and so on. The number of classes is fixed here to 10 or 30.
5. We decrement the similarity index threshold from 1 towards 0 until 99% of the weather maps are classified. Therefore, 1% of the weather maps are not classified.

KH - Kirchhofer types
1.) Calculate spatial correlation coefficients between all fields, as well as between single rows and single columns,
2.) assign to each pair of days the minimum of the whole-grid, single-column and single-row correlation coefficients,
3.) for each day count all correlation coefficients r > 0.4,
4.) define the day with the most correlations > 0.4 as key pattern for type 1,
5.) take out the key day and all days with r > 0.4 to the key day,
6.) on the remaining days proceed as in steps 2.) to 4.) for the following type numbers until all types (of the predefined number of types) have a key day,
7.) finally choose the type number of each day (using the whole sample again) by the highest correlation coefficient to any of the key days.

II.3. Optimisation algorithms

CKMEANS
CKMEANS is a k-means clustering algorithm with a few modifications, e.g., with respect to obtaining the starting partition:
1. Random selection of one day (daily data are used).
2. Computation of the distance measure to all remaining days using information from the 500 and 1000 hPa gridded geopotential reanalysis fields; the nine most dissimilar days are identified. Together with the randomly selected day from step 1, this gives a starting partition of 10. The number 10 is explained in Remark 1 below.

PCACA - k-means by seeds from hierarchical cluster analysis of principal components
This method is a combination of two well-known multivariate statistical techniques, Principal Component Analysis and clustering. Most of it follows the recommendations of Yarnal (1993).
Thus, for the large domain and each of the smaller subdomains, the analysis was initiated by running a Principal Component Analysis (S-mode) to reduce collinearity, simplify the numerical calculations and improve the performance of the subsequent clustering procedure.
In order to prevent the PCA from extracting the annual cycle of sea level pressure (strong/weak pressure gradients in winter/summer) as the first component, i.e. as the greatest source of common variance, while keeping the seasonality of the circulation patterns (e.g. more cyclonic in winter), the classification procedure was not performed season by season; instead the raw (original) sea level pressure data were filtered with a high-pass filter (Hewitson and Crane, 1992) to emphasize the synoptic variability over the other sources of variability. Day-to-day cycles in sea level pressure were obtained by submitting the daily time series of sea level pressure map means (the mean value of all grid points on the corresponding day's map) to a spectral and autocorrelation analysis. The results show that, on average, cycles longer than 13 days can be considered non-synoptic and can therefore be removed by subtracting a 13-day running mean of the grid (from day t - 6 to day t + 6, centered on day t) from each data point in each grid. This high-pass filter provides de-seasoned daily maps of sea level pressure, showing spatial differences in pressure from the surrounding 13-day mean map. The retained map patterns are identical to the unfiltered maps, except that the values vary about zero. Although seasonal and annual cycles in mean sea level pressure are removed, the seasonal frequency of the various patterns is not changed (e.g. more cyclonic patterns in winter).
The data matrix was arranged in S-mode, and the principal components were extracted from the correlation matrix. The significant components (mostly 3 components in all subdomains, 9 in the larger domain) were identified through several tests (e.g. scree test, Jolliffe's test, North's test) and subsequently rotated by the VARIMAX algorithm to avoid the classical spatial problems associated with this mode of decomposition (domain shape…).
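The high-pass filtering step described above (subtracting a centered 13-day running mean from each grid point) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' code: the function names `highpass_series` and `highpass_fields`, the list-of-lists data layout and the toy values are hypothetical, and the truncated window at the ends of the record is an assumption, since the text does not say how the first and last six days are treated.

```python
def highpass_series(series, half_window=6):
    """Subtract a centered running mean of 2*half_window + 1 days from a daily series."""
    n = len(series)
    filtered = []
    for t in range(n):
        # Assumption: near the ends of the record the window is truncated
        # to the available days; the source does not describe edge handling.
        lo = max(0, t - half_window)
        hi = min(n, t + half_window + 1)
        window = series[lo:hi]
        filtered.append(series[t] - sum(window) / len(window))
    return filtered


def highpass_fields(fields, half_window=6):
    """Filter every grid point separately; fields[t][g] is day t, grid point g."""
    n_days = len(fields)
    n_points = len(fields[0])
    columns = [
        highpass_series([fields[t][g] for t in range(n_days)], half_window)
        for g in range(n_points)
    ]
    # Transpose back to one list of grid-point values per day.
    return [[columns[g][t] for g in range(n_points)] for t in range(n_days)]


# Toy data (made up): 31 days, 2 grid points, a slow linear trend and a constant.
toy = [[1000.0 + t, 1015.0] for t in range(31)]
anomalies = highpass_fields(toy)
print(anomalies[15])  # interior day: the slowly varying signals are removed
```

With `half_window=6` the window covers days t - 6 to t + 6, i.e. 13 days. Slow variations such as a trend or a constant offset vanish in the interior of the record, while short-lived synoptic anomalies are retained as deviations about zero.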
The daily time series of component scores were the input of the clustering procedure. A two-stage procedure was used: a hierarchical algorithm (Ward's method) served to identify an appropriate number of clusters and to obtain initial "seed points", which were then refined by a non-hierarchical algorithm (k-means) to obtain a more robust classification. The primary partition was selected with the help of several statistical tests (R2, Pseudo-F, Pseudo-T2...), but, when the cutting points were not clear, several solutions were run, the resulting types were plotted, and the best solution was finally chosen manually by comparison with composite plots of remarkable monthly climatic episodes (temperature and precipitation) from each subdomain.

PETISCO - k-means
Each day in the sample is taken in turn as a seed, and the group of days similar to the seed is formed. The average of the group is then used as a new seed and the group of days similar to it is formed again; this is repeated until the group is stable. Finally, the average of the group containing the most days is selected as a subtype, and its days are removed. The process is repeated with the remaining days, selecting as each new subtype the average of the largest group that is not similar to the previously selected subtypes. The process continues until no new selection is possible or until the size of the group falls below a certain number.
The similarity measure used is the correlation coefficient between the gridded data fields of the two elements being compared, and it is calculated for the MSLP and for the 500 hPa geopotential fields.
To improve the similarity analysis, the domain is divided into two subdomains and the correlation in them is also calculated, so two elements are considered similar if the correlation values for both MSLP and 500 hPa are greater than a threshold in the whole domain and in each subdomain. We used a correlation value of 0.90 as the threshold to select the subtypes for each domain, in order to represent a large number of synoptic patterns.
These subtypes are then grouped into types: first, a number of dissimilar subtypes is selected, and then the remaining subtypes are grouped with the most similar of the previously selected subtypes. For this step we use 0.80 as the threshold value.
In our previous work we used a coarser resolution (5° x 5°); in this work the higher resolution can introduce a lot of redundant information inside the domain relative to the border, which might lead to a worse representation of the similarity at the domain border. Nevertheless, the method has been applied as originally designed, without a Principal Component Analysis.

PCAXTRKMS
These methods follow the same procedure as PCAXTR, changing only the last step: the remaining cases are assigned to the groups with the k-means non-hierarchical clustering method. Regarding these two ways of clustering the cases (without iterations in PCAXTR and with iterations in PCAXTRKM), some authors have pointed out that the iterations of the k-means method commonly tend to equalize the size of the groups (Huth, 1996), probably related to a strong underlying structure of the data in the form of a continuum. Recently, Philipp et al. (2007) highlighted some limitations of k-means regarding its dependence on the ordering of checks and reassignments. Consequently, different local optima can be obtained with different starting partitions or different k-means algorithms.
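The dependence of k-means on the starting partition can be illustrated with a toy example. The following is a minimal sketch, not the PCAXTRKM implementation: it uses a plain batch (Lloyd-type) k-means, the names `squared_distance` and `kmeans` are hypothetical, and the four 2D points are made up so that two different sets of starting centroids converge to two different stable partitions.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two points given as sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, start_centroids, max_iter=100):
    """Batch k-means: alternate assignment and centroid update until stable."""
    centroids = [list(c) for c in start_centroids]
    labels = None
    for _ in range(max_iter):
        # Assign every point to its nearest centroid.
        new_labels = [
            min(range(len(centroids)), key=lambda k: squared_distance(p, centroids[k]))
            for p in points
        ]
        if new_labels == labels:
            break  # no reassignment: a (possibly local) optimum is reached
        labels = new_labels
        # Recompute each centroid as the mean of its members.
        for k in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                centroids[k] = [sum(col) / len(members) for col in zip(*members)]
    sse = sum(squared_distance(p, centroids[lab]) for p, lab in zip(points, labels))
    return labels, centroids, sse


# Made-up data: the corners of a 4 x 1 rectangle.
points = [(0.0, 0.0), (0.0, 1.0), (4.0, 0.0), (4.0, 1.0)]

# Start A separates left from right; start B separates bottom from top.
labels_a, _, sse_a = kmeans(points, [(0.0, 0.5), (4.0, 0.5)])
labels_b, _, sse_b = kmeans(points, [(2.0, 0.0), (2.0, 1.0)])
print(labels_a, sse_a)
print(labels_b, sse_b)
```

Both runs stop because no point changes its cluster, yet the second partition has a much larger within-cluster sum of squares than the first. This is the kind of local optimum that motivates repeated runs from different starting partitions, or alternative optimization schemes such as simulated annealing.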
SANDRA/SANDRAS - simulated annealing and diversified randomization clustering
The number of clusters for COST733 is chosen subjectively in order to reach a compromise between a reasonably low number, which allows an overview, and the MSLP variation explained by the clustering. For all domains it is around 20, as also suggested by an external criterion for t-mode PCA. Fine adjustment is done by selecting elbows of the silhouette index.
Method: Simulated annealing clustering of 3-day sequences of daily Z925 and Z500 fields together, i.e. one day is described by Z925 and Z500 of the day itself and of the two preceding days.

NNW - neural network self organizing maps (Kohonen network)
The methodology used is Artificial Neural Networks, and the number of clusters chosen is considered to be proportional to the size of the domain. The results are in tabular form for the 500 hPa contour analyses.

Discussion and concluding remarks
Manual or subjective methods are still in use and have their value due to the integration of the experience of experts, which is often hard to formulate in precise rules for automated classification. Therefore 4 subjective catalogs have been included in the dataset for comparison (HBGWL, PECZELY, PERRET and ZAMG), even though it is unlikely that new manual classifications will be established in the future. Disadvantages are the sometimes imprecise definition of the types, which may lead to inhomogeneities, and the fact that they are made for distinct geographical regions and are not scalable. The former problem is overcome by the automatic assignment of days according to their pressure patterns, as done by the OGWL method; the latter problem, however, remains.
Manual methods are now mostly replaced by threshold based methods, which have the advantage of automatic processing and a lower degree of subjectivity. However, subjectivity is also present in the definition of the thresholds.
Even though some thresholds seem natural (like the symmetric partitioning of the wind rose), it is always an artificial or arbitrary decision to use certain thresholds and not others. Nevertheless, these methods are attractive for their clear and precise definition of types.

The list of methods included in the cost733 catalog database is far from complete. However, it is believed that the most common basic methods are represented with some of their variations. Methods not included are mostly those which are specialized for a certain target variable or do not fulfill the criterion of producing distinct and unambiguous classifications of the entities. An example of a method combining both aspects is the fuzzy classification method developed by Bardossy et al. (), which tries to find circulation types explaining the variations of a specified target variable, e.g. station precipitation time series, while the daily maps are members of more than one class. The consequence of optimizing a classification for one or a few target variables is that the resulting catalog is applicable to a small target region but not to climate variations on a larger, e.g. continental, scale. On the other hand, one of the attractive properties of classification methods is the transfer from the metric scale to the nominal scale, which is violated by the principle of fuzzy classification. These aspects should not diminish the value of the mentioned methods, since they are mostly superior to generalized and discrete methods, but their results are valid for distinct purposes only.
However, further studies of the presented classification dataset might show that the idea of generalized classifications capturing the main links between circulation and climate variability in different or large regions is unrealistic, and that the attempt to find generally "better" classification methods is like shooting at a moving target.
If a method is optimal for circulation it may be bad for explaining temperature variations, if it is optimal for temperature it may be bad for precipitation, etc. Thus a universally best method might be unreachable, which would explain the large number of attempts to develop more and more variations of existing methods or completely new ones. It is the hope of the authors that this database, reflecting the large variety of classification methods, will help to reach a conclusion on whether it is worthwhile to spend such large efforts on the search for the ultimate best method. However, if the results of intercomparison studies of the classifications only show that some of the methods are not useful in many respects, the aim of the work on this catalog collection has been reached.

References
Ekstrom M, Jonsson P, Barring L (2002) Synoptic pressure patterns associated with major wind erosion events in southern Sweden. Climate Research 23:51-66.
Esteban P, Jones PD, Martín-Vide J, Mases M (2005) Atmospheric circulation patterns related to heavy snowfall days in Andorra, Pyrenees. International Journal of Climatology 25:319-329.
Esteban P, Martín-Vide J, Mases M (2006) Daily atmospheric circulation catalogue for western Europe using multivariate techniques. International Journal of Climatology 26:1501-1515.
Esteban P, Ninyerola M, Prohom M (2009) Spatial modelling of air temperature and precipitation for Andorra (Pyrenees) from daily circulation patterns. Theoretical and Applied Climatology. In press. Online at: http://www.springerlink.com/content/r2728365g085q007/?p=0713eaeac536434ebc4b237b6677218a&pi=1
Hewitson B, Crane RG (1992) Regional climates in the GISS Global Circulation Model and Synoptic-scale Circulation. J Climate 5:1002-1011.
Yarnal B (1993) Synoptic climatology in environmental analysis. Belhaven Press, London.

Figure 1. (will be finished soon and made available on the wiki)

Table 1: Identification numbers, names and coordinates of spatial domains defined for classification input data. The number of grid points along each axis is given in parentheses.

id  name                   longitudes                 latitudes
00  Europe                 37°W to 56°E by 3° (32)    30°N to 76°N by 2° (24)
01  Iceland                34°W to 3°W by 1° (32)     57°N to 72°N by 1° (16)
02  West Scandinavia       06°W to 25°E by 1° (32)    57°N to 72°N by 1° (16)
03  Northeastern Europe    24°E to 55°E by 1° (32)    55°N to 70°N by 1° (16)
04  British Isles          18°W to 08°E by 1° (27)    47°N to 62°N by 1° (16)
05  Baltic Sea             08°E to 34°E by 1° (27)    53°N to 68°N by 1° (16)
06  Alps                   03°E to 20°E by 1° (18)    41°N to 52°N by 1° (12)
07  Central Europe         03°E to 26°E by 1° (24)    43°N to 58°N by 1° (16)
08  Eastern Europe         22°E to 45°E by 1° (24)    41°N to 56°N by 1° (16)
09  Western Mediterranean  17°W to 09°E by 1° (27)    31°N to 48°N by 1° (18)
10  Central Mediterranean  07°E to 30°E by 1° (24)    34°N to 49°N by 1° (16)
11  Eastern Mediterranean  20°E to 43°E by 1° (24)    27°N to 42°N by 1° (16)

Table 2: Methods and variants overview. Alphabetical list of abbreviations used to identify individual variants of classification configurations. Column 2 gives the number of types, column 3 the parameters used for classification, and column 4 the method group: sub (subjective), thr (threshold based), pca (based on principal component analysis), ldr (leader algorithm), opt (optimization algorithm).
abbreviation  types  parameters              method group
CKMEANSC09    9      SLP                     opt
CKMEANSC18    19     SLP                     opt
CKMEANSC27    27     SLP                     opt
EZ850C10      10     Z850                    ldr
EZ850C20      20     Z850                    ldr
EZ850C30      30     Z850                    ldr
ESLPC09       9      SLP                     ldr
ESLPC18       19     SLP                     ldr
ESLPC27       27     SLP                     ldr
GWT           18     SLP                     thr
GWTC10        10     SLP                     thr
GWTC18        18     SLP                     thr
GWTC26        26     SLP                     thr
KHC09         9      SLP                     thr
KHC18         18     SLP                     thr
KHC27         27     SLP                     thr
LITADVE       9      SLP                     thr
LITTC18       18     SLP                     thr
LITTC         27     SLP                     thr
LUND          10     SLP                     ldr
LUNDC09       9      SLP                     ldr
LUNDC18       18     SLP                     ldr
LUNDC27       27     SLP                     ldr
LWT2C10       10     SLP                     thr
LWT2C18       18     SLP                     thr
LWT2          26     SLP                     thr
NNW           9-30   Z500                    opt
NNWC09        9      SLP                     opt
NNWC18        18     SLP                     opt
NNWC27        27     SLP                     opt
P27           27     Z500                    pca
P27C08        8      SLP                     pca
P27C16        16     SLP                     pca
P27C27        27     SLP                     pca
PCACA         4-5    SLP                     opt
PCACAC09      9      SLP                     opt
PCACAC18      18     SLP                     opt
PCACAC27      27     SLP                     opt
PCAXTR        11-17  SLP                     pca
PCAXTRC09     9-10   SLP                     pca
PCAXTRC18     15-18  SLP                     pca
PCAXTRKM      11-17  SLP                     opt
PCAXTRKMC09   9-10   SLP                     opt
PCAXTRKMC18   15-18  SLP                     opt
PETISCO       25-38  SLP, Z500               opt
PETISCOC09    9      SLP                     opt
PETISCOC18    18     SLP                     opt
PETISCOC27    27     SLP                     opt
SANDRA        18-23  SLP                     opt
SANDRAC09     9      SLP                     opt
SANDRAC18     18     SLP                     opt
SANDRAC27     27     SLP                     opt
SANDRAS       30     Z925, Z500              opt
SANDRASC09    9      SLP                     opt
SANDRASC18    18     SLP                     opt
SANDRASC27    27     SLP                     opt
TPCAV         6-12   SLP                     pca
TPCA07        7      SLP                     pca
TPCAC09       9      SLP                     pca
TPCAC18       18     SLP                     pca
TPCAC27       27     SLP                     pca
WLKC733       40     U/V700, Z925/500, PW    thr
WLKC09        9      U700, V700              thr
WLKC18        18     U700, V700, Z925        thr
WLKC28        28     U700, V700, Z925/500    thr
HBGWL         29     -                       sub
HBGWT         10     -                       sub
OGWL          29     SLP, Z500               sub
OGWLSLP       29     SLP                     sub
PECZELY       13     -                       sub
PERRET        40     -                       sub
SCHUEPP       40     -                       sub
ZAMG          43     -                       sub