advertisement

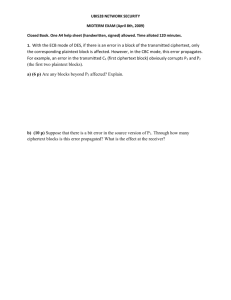

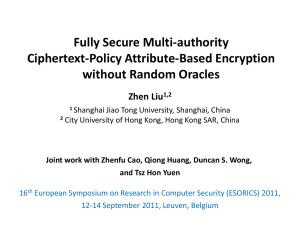

>> Vinod Vaikuntanathan: Okay. So today we're very pleased to have Allison Lewko speaking to us on decentralizing attribute-based encryption. Allison is a Ph.D. student at the University of Texas at Austin, and winner of the MSR Graduate Fellowship. >> Allison Lewko: Okay. So I'm going to be talking about decentralizing attribute-based encryption, also known as multi-authority attribute-based encryption or apparently known to Dan Boneh as adjective-based encryption. It's a mouthful. I'm going to try to explain slowly what all these different modifiers mean. Please ask a lot of questions. I like questions. Jump in if something doesn't make sense. This is joint work with my advisor Brent Waters at UT. In the regular setting of public key cryptography, of course, we have two users: we have user Alice, who would like to send a message to Bob but only has access to a public channel. So we have a solution to this problem by having Bob generate a public key and secret key pair. Alice retrieves his public key, uses this to encrypt her message, and sends it along to Bob, who can use the secret key to read it. In this setting we have very well-defined functionality. Very nice notions of security. But it's a bit limiting in that we have the two-party model, one user communicating with another user. So more generally what we'd like to do in the public key setting, we'd like to get beyond this notion of encryption as needing a single known recipient of data. So we'd like to be able to have unknown recipients, many recipients, people who may even join the system in the future who should have access to that data. And we'd also like to think more generally beyond all-or-nothing data sharing. So we may have different recipients who should be allowed to see different portions of the data instead of just having a set of users who can see the data and a set of users who cannot. 
This motivates the general notion of functional encryption, which is just a more general framework for flexible data sharing. So we'd like to imagine that a user at the time they encrypt their data can more generally ask: Who should have access to my data and what should they see? As a particular example of this, we have attribute-based encryption, which was introduced by Sahai [phonetic] and Waters. And in such a system, ciphertext will be associated with access formulas. So the user will describe the set of people who can read their data in terms of a formula over attributes. So maybe I'll encrypt my data so that only people who are students at the University of Texas should be able to read the ciphertext. And keys in such a system will be associated with attributes. So I'll have keys for the different attributes associated with my identity. Perhaps I'll have a key for student, a key for Texas, a key for other things. And decryption will allow my key to decrypt ciphertext if the set of my attributes satisfies the formula the ciphertext was encrypted under. Any questions about this basic setup? Yes? >>: This is going to be ciphertext policy attribute-based encryption. >> Allison Lewko: This is ciphertext policy. You could also have key policy where you sort of reverse these roles. So in that case keys would be associated with formulas and ciphertext would be associated with attributes and you would decrypt again if the attribute satisfies the formula. So you can sort of reverse this. For the multi-authority systems we're going to talk about today, we're going to stay in key policy land. Sorry. Ciphertext policy. So this is a framework we're going to stay in, but more generally you could reverse this. Other questions? Okay. So but in these attribute-based encryption systems, traditionally we have 1 authority who's giving out all the keys. 
So, for instance, as a concrete example a company may want to encrypt a job posting with a formula like this, so you need both a master's degree and more than two years of experience, say. And then the main security challenge in this setting is we want to prevent collusion attacks. So, for instance, we may have a user who has a master's but has no experience. And a user who has experience but doesn't have the degree. We don't want them to be able to get together and combine their information to read the ciphertext. We essentially want to prevent the transference of attributes from one user to another to be able to use together. So to do this, these systems heavily rely on the fact that there's one central authority who is giving out all the keys and this authority can coordinate things to avoid these collusion attacks. However, in the more general world, we have situations where we have multiple authorities and multiple parties who need to have access to something. So a very real life example is if you have sort of multiple companies or multiple parties who need to access a shared space but need to keep it locked, you can use something called a multiple lock. What this is is each party has their own individual lock and their own individual key. And opening any one lock will open the gate. But so if one party loses their key, or has their key stolen, you just need to replace their one lock. So it doesn't affect any of the other parties. So it's sort of a decentralized -- so this is decentralized process so you don't have to get together and coordinate every time you need to recover from a lost or stolen key. So this is a real life example of what we would like to be able to do in the data sharing world. So more data-driven example, suppose you had different companies sort of coordinating different services. 
You might have a service that encrypts its content so that someone who is either a subscriber to the service can read it or perhaps someone who is certified by other sponsors. So companies might get together and make deals to provide services to each other's customers or things like that, and so you may want these keys to be distributed by sort of different entities and different companies who even more generally may not trust each other and may not even know about each other. So for the most flexible and most functional system, we like to allow authorities to sort of arise at will, not have to coordinate with each other and not even have to know all the other authorities in the system. And they shouldn't have to rely on each other for the security of their own systems. >>: So what's the -- any of these authorities can generate a key for a user? >> Allison Lewko: Yeah. For the attributes that they control. So each authority will -- so imagine an attribute as being sort of concatenated with the identity of the authority who controls it. So each authority controls disjoint attributes. And so I can arise within an authority in the system, declare I'm giving out attributes that certify this, and so users can just come to me and start getting keys and other authorities may not know I exist. >>: I see. So in real life I would go to multiple authorities, get keys for different subsets of attributes and put that together. >> Allison Lewko: Right and use them together. So like you may go to the DMV get a key for driver's license, or go to your company and get a key for employee. And the DMV and your company don't have to coordinate. >>: What do they do to the collusion problem? >> Allison Lewko: So I'll talk about that. Yeah. So the main challenge in these systems is we still want to avoid collusion. So we still require that to be secure I shouldn't be able to go to the DMV and get a driver's license and use it in conjunction with a key that you get. Okay. 
So we'll talk about how we do that. Yeah. >>: So there's two themes to be some global partition among either parties, because there's I cannot argue on my own and generate keys on my own and decrypt the ciphertext. >> Allison Lewko: But the keys you generate will only be for the attributes that you've sort of publicly declared belong to you. So you can't generate keys for someone else's attributes. So if someone encrypts -- so you say I control the attribute A and someone encrypts a ciphertext saying they need to satisfy attribute A, yeah, you can decrypt it. But if I encrypt ciphertext saying someone needs to have attribute B controlled by Melissa then you can't generate a key for that. So you won't be able to decrypt that. >>: It's really -- it's a decentralized, the sense these guys can come in anytime. >> Allison Lewko: Yeah, so sort of the only centralization in our system, which I'll get to, is there's some global setup parameters, which is out there in public. >>: Like ->> Allison Lewko: So they have to be generated in a trusted way at the beginning, and I'll mention this. >>: Which hand the parties need to agree on something they mutually trust. >> Allison Lewko: Right. So there is a set of global parameters that everyone will need to mutually trust. But that would just be like published data somewhere you can go pick up. It won't be like a person you have to coordinate with. But there is a global trust point. This is true. We don't know how to get rid of that. >>: Even the parties will need to go to that center and get anything? >> Allison Lewko: No, they don't have to get anything. They just have to look up these public parameters. Yes? >>: The parameters are [inaudible] does that mean [inaudible] encrypt ciphertext? >> Allison Lewko: Yes. So you have to trust the person who created the parameters and we'll see why. You can see very specifically how it could be attacked, yes. 
>>: Do these parameters have a secret power that if leaked can compromise the system? >> Allison Lewko: No. The parameters are just published. >>: I can take New York Times. >> Allison Lewko: I'm sorry? >>: I can take yesterday's New York Times -- >>: That's not random. >>: Enough randomness for the job, I think. >> Allison Lewko: We'll see when we get to the setup algorithm but they have to be generated in a trusted way. But once they're generated they're out there. And that's fine. So as long as they're generated by someone who is not malicious and then published, then it's fine. And that person doesn't need to stay in the system. They just publish them and leave. >>: How do you ensure that authorities control disjoint sets of attributes? >> Allison Lewko: So you can think about maybe every authority has an ID or something, and their attributes concatenate their ID on the front. >>: The ID. >> Allison Lewko: So in our system, users also will have global IDs. So everyone in the system should have a global identifier. >>: And they are supposed to have some secret information corresponding to the global ID. >> Allison Lewko: No, there won't be secret information corresponding to the global ID. All right. Before I describe sort of our system let me start by saying how you can get -- you can get somewhat close to this by just using current single authority ABE and also a secure signature scheme. So to some extent this is done already. So if we're willing to have a central authority, so this will be a globally trusted entity who's always active in the system. So if we're willing to have such a thing, then we can have other authorities who don't need to coordinate with each other. So one central authority everyone needs to know about and trust, and then we have other authorities who can sort of do their own thing. And a user in this system will have a global ID. And we do need some sort of way of enforcing that users have distinct global IDs. 
So we're only going to protect against collusion up to and assuming that users have distinct global IDs. So a user can go get attributes in the following way. So authorities will arise by publishing a verification key for the signature scheme. So an authority will declare the attributes they control and publish a verification key, and so to get a key for an attribute, a user will go interact with an authority using their global ID. The authority will decide that the user deserves to have this attribute, and they will sign a certificate to that effect. And they will give the signature to the user. So a user can go at different times to different authorities using their same global ID, collect these signatures for all of its attributes, and then all at once it will take all these signatures to the central authority. The central authority will verify all the signatures and check the user's global ID, and will create the keys in the single-authority attribute-based system corresponding to those attributes and give these keys to the user. And then the user can use these to decrypt ciphertext. So this at least gives us a partially decentralized system, because these other authorities don't need to know about each other or interact with each other but we're still relying on a central authority who has to be constantly giving out keys. So the drawbacks to this approach are twofold. So, first of all, we have this globally trusted entity who is constantly active. So they're constantly in the system and potentially constantly a target of attack. And we also have a performance bottleneck. So, for instance, maybe someone can't attack the central authority but they can do a denial of service and make the central authority go down. So while that's happening, no new keys can be issued in the system at all. So authorities can be signing things but these are useless until you turn them into attribute-based decryption keys. 
>>: So why is this solution any better even in an engineering sense compared to sort of having just a single central authority, none of these like millions, right? Because so what you're saying is you're decoupling the, certifying the attribute spot from generating decryption key spot, that's what you're saying. >> Allison Lewko: Yeah. So what is slightly different here is that sort of an individual authority does sort of -- doesn't have to trust a different authority who is not the central authority to sort of create his key. So he doesn't have to trust anyone else to -- and even the central authority so as long as you trust a central authority you don't have to trust all the other authorities in the system yet they can still functionally give out keys. So it's certainly not an ideal solution. This is just -- I wanted to show you what sort of could be built without additional crypto. So now we'll see what more of the additional crypto can do. Okay. So the previous work on this, Melissa's nice work from 2007, which does have a central authority and has some limitations on the policies and you need a fixed set of authorities. But what's nice about this compared to the engineering approach, you don't have to collect all your things and go to the central authority all at once; you can sort of visit the central authority at any time. The central authority doesn't need to know about everything, all attributes you've had, and you can do this in any order. And then recently this was improved by Chase and Chow with an approach suggested by Waters to remove the central authority completely. But in this system there's still some restriction on the policies that you can get and you need a fixed set of authorities. >>: Limited? What's limited? What's the class of policies that -- >> Allison Lewko: So the class of policies doesn't encompass sort of all Boolean formulas or all linear secret sharing things, which is what we're going for. Okay. So our system in comparison to this. 
We do have a trusted setup. So we need these global parameters to be generated in a nonmalicious way. We don't have a central authority. So the person who does the trusted setup can just publish these parameters and disappear from the system. They don't remain active in the system. By expressive policies, I mean we can sort of handle all Boolean formulas over attributes, more generally anything that can be expressed as a linear secret sharing scheme. And our authorities, other than just looking up these global parameters, don't need to coordinate with each other at all. >>: You say Boolean formulas; if there's a negation, then I could hide a certificate that disbars me from doing something. >> Allison Lewko: I should say monotone Boolean formulas. Yes, good point. So ands and ors, no nots. The way our system works is that some trusted entity publishes a set of global parameters. And then authorities can arise at any time. So to arise as an authority you declare your attributes, and you publish a public key for each of those attributes that you control. So for simplicity in this talk I'm always just going to be associating each authority with one attribute, but more generally an authority could have more attributes but then we would need more public keys. So authorities arise and publish public keys. A user has a global ID associated to them and can go and start collecting keys from different authorities in any order at different times and will be able to use these keys together in decryption. And so our main security challenge is still to prevent users from colluding. So if two users have two different global IDs and one has attribute A and one has attribute B, we still don't want to allow these users to get together and decrypt ciphertext that's supposed to be enforcing policy A and B. >>: The size of the public parameters is equal to the number of attributes in the system? 
>> Allison Lewko: So the global public parameters don't need to know the total number of attributes in the system. So it's just for each authority, if I control six attributes, I'll have six times the size of my public parameters from someone who controls one attribute. But the global parameters aren't affected by this. Okay. So we still don't want to allow collusion. The second security challenge, which is new from single authority ABE, so we may have corrupt authorities. And we certainly want a model that handles this, otherwise we're trusting all authorities and we might as well make them one thing. These corrupt authorities can maliciously choose their public parameters perhaps, and they can also conspire with users, malicious users. So we want a security model that encompasses all of these kinds of bad behavior. So as usual formulate this as a game between the challenger and attacker, and we're going to think about the attacker as controlling both some corrupt authorities and some malicious users. So the game starts by publishing some global parameters produced in a trusted way by the challenger. And then the attacker will imagine some corrupt authorities it would like to control. This is a static corruption model for authorities. It has to decide them at the beginning. It's not going to be adaptively corrupting them to the game. It sends the challenger its set of corrupt authorities. At this point the challenger will create the good authorities and it will create the public keys and send these to the attacker. So now the attacker gets to do adaptive key queries. It will decide on some identity attribute pairs, and it will query the challenger for the corresponding keys for these. So it can use many different identities to model many different users, and it's going to make these queries adaptively. 
So it does as many of these as it wants and then at some point it has to decide on two messages for the challenge ciphertext and also declare a formula for the ciphertext to be encrypted to, and this formula can mix both attributes controlled by corrupt authorities and attributes controlled by good authorities. So for any attributes controlled by corrupt authorities, it also sends the challenger the corresponding public keys just to allow it to encrypt. And the restriction we place on this formula is that, for any single global ID, the keys that the attacker has collected, in addition to any keys that could be made from corrupt authorities, should not be sufficient to decrypt the ciphertext. This is a per user restriction. So for formula A and B, it could have a key for A for one user and a key for B for a different user and this is still allowed. As long as it doesn't have enough keys for a single user to decrypt then it's allowed. Yes? >>: Have you decided which attributes are controlled by which authorities? >> Allison Lewko: So this is sort of -- so I'm just encapsulating that in the semantic of an attribute. An attribute is a string, binary string or something, and it's uniquely associated to one authority. >>: For example, the public key is concatenated -- >> Allison Lewko: Yeah, something like that. Yeah, so the identifying information is considered part of the definition of the attribute. Other questions? Okay. So once the attacker has sent this to the challenger and it satisfies our constraint that it shouldn't be decrypted by keys for any single individual, the challenger will flip a random coin and use this to decide which message to encrypt. As usual, it sends the resulting ciphertext to the attacker. The attacker now gets to resume key queries. Again with the same restriction that these key queries shouldn't suddenly allow it to decrypt for any one user. It does it for as long as it wants and then finally it has to form a guess for this hidden bit B. 
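The per-user restriction on the challenge formula can be sketched in a few lines of Python. This is only an illustration of the rule as stated in the talk, not part of the scheme: the nested-tuple formula encoding and the names `satisfies` and `challenge_is_allowed` are assumptions made here, and the extra check that corrupt-authority attributes alone must not satisfy the formula is an assumed reading of the restriction for a global ID that has queried no keys.

```python
from typing import Dict, Set, Tuple, Union

# Hypothetical encoding: an attribute name, or ("and" | "or", sub, sub, ...).
Formula = Union[str, Tuple]


def satisfies(formula: Formula, attrs: Set[str]) -> bool:
    """Evaluate a monotone Boolean formula (ands and ors, no nots) on an attribute set."""
    if isinstance(formula, str):
        return formula in attrs
    op, *subformulas = formula
    if op == "and":
        return all(satisfies(sub, attrs) for sub in subformulas)
    if op == "or":
        return any(satisfies(sub, attrs) for sub in subformulas)
    raise ValueError(f"unknown operator: {op!r}")


def challenge_is_allowed(formula: Formula,
                         keys_by_gid: Dict[str, Set[str]],
                         corrupt_attrs: Set[str]) -> bool:
    """Per-user check: no single global ID, helped by every attribute of the
    corrupt authorities, may satisfy the challenge formula."""
    # Assumed reading: a fresh global ID still gets all corrupt attributes.
    if satisfies(formula, corrupt_attrs):
        return False
    return not any(satisfies(formula, attrs | corrupt_attrs)
                   for attrs in keys_by_gid.values())


policy = ("and", "masters", "experience")
# Two different users each holding one attribute: allowed by the model.
split_ok = challenge_is_allowed(
    policy, {"user1": {"masters"}, "user2": {"experience"}}, set())
# One user holding both attributes: not allowed.
single_bad = challenge_is_allowed(
    policy, {"user1": {"masters", "experience"}}, set())
```

The point of the example is that the check runs over each global ID separately, so keys held under different IDs are never pooled when deciding whether a challenge is legal.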
This is the usual model. We say it's secure, of course, if the guess for B is correct with probability negligibly close to a half. This is static in terms of the corrupt authorities, which are decided in the beginning, and it's adaptive in key queries. Any questions about the security model? >>: So what would be the most general security model? >> Allison Lewko: So the more general security model would be if you could allow it to sort of decide adaptively to corrupt an authority. So maybe the challenger creates all the authorities. At some point in the game I decide I'd like to corrupt that authority. And maybe that means, for instance, so the challenger has to send me that authority's secret key. And so maybe I could do this adaptively as we go. So we don't allow that. >>: Is it strictly worse than this? Because you're saying that the authority chooses the public key honestly. >> Allison Lewko: Yeah. >>: And then I can say, okay, I'm corrupting this guy and getting the secret key. But the public key is a valid public key. >>: Yes, it's incomparable to this. >> Allison Lewko: Right. But more generally you'd allow both. You could say I have corrupt authorities that maliciously generate and adaptively corrupt good authorities. So the most general is sort of allow corruption at any point. Sort of at the beginning or as you go. And so we can handle at the beginning. So the corrupt authorities can maliciously generate based on the global parameters. But we can't allow the as-you-go corruption. So that's an open problem. Okay? All right. So to talk about how we get collusion resistance, let me just first review how this is achieved in the single authority setting. So when we have one authority giving out all the keys, what that authority can do is it can bind the keys of a certain user together with some personalized randomness. 
So when a user comes to me as the authority, I sort of pick some randomness associated with that user and I'll refer to that in making every key I make for them. So every attribute key given to this user will be associated with this randomness. And since I'm sort of coordinating it, they all work together well, but it won't work with keys given to another user which has some different randomness. So you can sort of think about when users are decrypting, in the decryption process the blinding factor they need to recover will be personalized by this randomness. It will work fine for them because it's all the same randomness for all the different keys but it won't work by combining it with keys of other users. This is heavily using the fact we have this single authority that can remember my randomness each time I go and get a different key. This approach doesn't work when you have these different authorities who don't talk to each other. So they can't get together and decide on a randomness associated to me as a user because they're not supposed to need to know about each other. So what we do in the multi-authority setting is we replace this by a public hash function which we're going to model as a random oracle. So all of our results I should say up front are in the random oracle model. Okay. And so at a minimum, this hash function, of course, should be collision resistant which will prevent users with different global IDs from trivially breaking things. So we have this public hash function which maps global IDs into a group. And now, so for authorities to sort of personalize a user's key, when a user goes to get a key from an authority, the authority in the process of creating that key will look up the hash of that user's ID and will use this in personalizing the key to that user. And so when the user goes to a different authority to create a key the same thing will happen. 
This authority will apply the public function to the user's ID and will use this to personalize the key to that user. And this is how we will achieve collusion resistance in our system. >>: So you're saying you will compute the randomness as the hash of the ID. The hash is public, right? >> Allison Lewko: Yeah. So I'm not going to rely on hash of ID being a hidden value. I'm just going to rely on the hash of my ID is different from the hash of your ID. And it will be embedded in your keys in such a way that you know it, but it's stuck in there. And so you'll see when we do our decryption that if my value is different from your value, we won't be able to combine our keys. >>: Are you saying that, are you going to take an existing system that uses some randomness to bind the keys of a single user together? This randomness -- you can observe that this randomness it's okay to be public, and therefore you can generate it as a hash of ID. >> Allison Lewko: It's somewhat close to that. I don't think this is exactly what you'd get from taking an existing ABE system. But it's still on the same level of computation. It's not very far out from that. Okay. So before I present our exact construction, let me just review sort of the basic ingredients we use. So we'll be using bilinear groups. I'm going to first present a prime order version of our construction because it's easier. The security guarantees are much weaker in this case. So I'll get to a composite order version where we have better proofs. But the mechanics are the same. So let me start with the prime order version. So we have a bilinear group of prime order P. So E is going to denote our bilinear map. And our hash function, modeled as a random oracle, is going to map into this bilinear group. And the main other tool we're going to use is linear secret sharing schemes. So we're going to use this to take a secret which will be an exponent mod P and split it into shares according to our access policy. 
So shares in our system will each be associated with attributes. And so an authorized set of shares will be one that can reconstruct the secret as a linear combination of these shares. And these will correspond to sets of attributes that satisfy the formula, and an unauthorized set. So if you're given an unauthorized set of shares, then the secret will still be information theoretically hidden from you if you just have those shares, and this will correspond to a set of attributes that's not sufficient to satisfy the formula. Okay. So now I'm ready to present our actual system. So our global setup will just decide on this group of order P, it will fix this bilinear map, fix the generator G and will also decide on this public hash function. And these are our global parameters. Okay. Now, to become an authority for a particular attribute, what you'll do is you'll choose two random exponents, alpha I and YI. So the index I here will be associated with the attribute. And the public key will just be the pairing of G with itself raised to the alpha I and G to the YI, two group elements for the attribute, and the secret key will be these two exponents. Okay. So a key for an identity and an attribute will be a single group element, which is formed by taking G to the alpha I for the attribute and multiplying this by the hash of the ID to the YI. So to encrypt, what an encryptor will do, is they'll choose a random exponent S mod P, split it into shares according to the policy they want to encrypt under. And they'll also split zero into shares according to the same policy and the same way. And the zero sharing is going to help us achieve the collusion resistance. And so the ciphertext is formed by using the pairing of G with itself to the S as blinding factor for the message. I'm assuming here the message is in the target group so I can multiply these things. Then I'm going to give out the pairings of G with itself raised to these shares of S. 
These are additionally blinded by alpha X RX, where alpha X is for the attribute corresponding to that share. And RX is some local randomness, which I'm just choosing freshly each time. I'll additionally give out G to the RX and G to the YX RX plus omega X where here omega X and lambda X are these shares, so lambda X are shares of 0. Sorry. Lambda X are shares of S. Omega X are shares of zero. Alpha and the Y are corresponding to the attribute for that share and the R is random. So there's a lot of things here, but we'll see in decryption what role each of these things plays. Okay. So to decrypt in such a system, obviously we want to recover M. So M is blinded by this pairing raised to the S. So as a sub goal we want to recover this. So what do we have? Well, we have these shares of S in the exponent. If we had them by themselves, it would be easy. Because we know how to reconstruct S as a linear combination of these shares. So just do that linear combination in the exponent, we recover the blinding factor. So what we need to do is we need to strip off these extra factors to get at these shares of S. So we need to compute these terms alpha X RX. We won't be able to do this for every share. We'll only be able to do this for shares for attributes we have the keys for. But if we're allowed to decrypt, that will be enough shares to reconstruct the secret. So if we have the key corresponding to this alpha X, then we can pair our key with this G to the RX from the ciphertext and we get what we want just with an extra term. So we're getting this alpha X RX in the exponent that we want but we're additionally getting a term that now has the hash of our ID embedded in it. So now we've introduced the hash of our ID into our computation. And now we have this to the exponent YX RX and again we need to get rid of this. So at this point we can compute hash of our ID as a public function. 
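In symbols, the prime-order construction described so far can be written roughly as follows. This is a sketch reconstructed from the spoken description; the component labels $K_{x,\mathrm{GID}}$, $C_0$, $C_{1,x}$, $C_{2,x}$, $C_{3,x}$ are names assumed here for readability and are not given in the talk, and each index $x$ is identified with the attribute attached to that share, as the talk does.

$$
\begin{aligned}
\textbf{Global setup:}\quad & G \text{ of prime order } p,\quad e : G \times G \to G_T,\quad g \in G,\quad H : \{0,1\}^* \to G \\
\textbf{Authority, attribute } x:\quad & \mathrm{SK}_x = (\alpha_x,\, y_x) \in \mathbb{Z}_p^2,\qquad \mathrm{PK}_x = \big(e(g,g)^{\alpha_x},\; g^{y_x}\big) \\
\textbf{Key for } (\mathrm{GID}, x):\quad & K_{x,\mathrm{GID}} = g^{\alpha_x}\, H(\mathrm{GID})^{y_x} \\
\textbf{Encrypt } M \in G_T:\quad & s \leftarrow \mathbb{Z}_p,\quad \{\lambda_x\} \text{ shares of } s,\quad \{\omega_x\} \text{ shares of } 0,\quad r_x \leftarrow \mathbb{Z}_p \\
& C_0 = M \cdot e(g,g)^{s} \\
& C_{1,x} = e(g,g)^{\lambda_x}\, e(g,g)^{\alpha_x r_x} \\
& C_{2,x} = g^{r_x} \\
& C_{3,x} = g^{y_x r_x}\, g^{\omega_x}
\end{aligned}
$$

Note that the encryptor can form every component from public values only: $e(g,g)^{\alpha_x r_x} = \big(e(g,g)^{\alpha_x}\big)^{r_x}$ and $g^{y_x r_x} = \big(g^{y_x}\big)^{r_x}$ use just the two published group elements for attribute $x$.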
We can pair this with the G to the YX RX plus omega X from the ciphertext and again we get what we want just with an extra term. Now, though, this extra term has our hash of ID in it and it has an exponent which is the share of zero. Okay. So putting this back together for the shares that we have the key for, we get this lambda X that we want, the share of S, but additionally blinded by the share of zero which is an exponent on our hash of ID. Okay? Questions? >>: So this is all per attribute, you're not combining anything yet. >> Allison Lewko: Right. This is per attribute. >>: You're going to take that and you'll combine that. >> Allison Lewko: Right. So these are the pieces I'm going to combine. So I want to get them in this form first before I combine anything. So per attribute that I have, I'm going to pull out this E of G, G to the lambda X which is the share that I want, and I additionally have this term with my hash of ID raised to a share of zero. And now I want to pool my shares and I want to reconstruct the secret. So I'm going to do the linear combination in the exponent. And this is where the sharing of zero plays its role. Because when we do our recombination in the exponent, S will pop out and 0 will pop out. So that term will go away. Since these are shared in the same way for the same policy, a linear combination that's supposed to give S for the lambda X will also give us zero for the omega X and make that term go away. So the share of zero will cancel out. As a basic example, let's look at what happens with an and formula. To share S on an and formula, what am I going to do? Take two pieces that sum to S and I'll make lambda A my share for A and lambda B my share for B. So I share zero in the same way. I'm just going to take two random things that sum to 0. So I'll put omega A for A. Omega B for B. So someone who has both of these keys will be able to get at these two terms. 
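The per-share decryption steps just described can be checked term by term. The following is a sketch under assumed notation: $K = g^{\alpha_x} H(\mathrm{GID})^{y_x}$ is the user's key for the attribute of share $x$, and $C_{1,x} = e(g,g)^{\lambda_x} e(g,g)^{\alpha_x r_x}$, $C_{2,x} = g^{r_x}$, $C_{3,x} = g^{y_x r_x + \omega_x}$ are the ciphertext pieces for that share (these labels are introduced here, not in the talk).

$$
\begin{aligned}
e(K,\, C_{2,x}) &= e(g,g)^{\alpha_x r_x}\; e\big(H(\mathrm{GID}), g\big)^{y_x r_x} \\
e\big(H(\mathrm{GID}),\, C_{3,x}\big) &= e\big(H(\mathrm{GID}), g\big)^{y_x r_x}\; e\big(H(\mathrm{GID}), g\big)^{\omega_x} \\
\frac{C_{1,x}\cdot e\big(H(\mathrm{GID}),\, C_{3,x}\big)}{e(K,\, C_{2,x})} &= e(g,g)^{\lambda_x}\; e\big(H(\mathrm{GID}), g\big)^{\omega_x}
\end{aligned}
$$

If the coefficients $c_x$ reconstruct the secret, so that $\sum_x c_x \lambda_x = s$ and, because zero was shared the same way, $\sum_x c_x \omega_x = 0$, then

$$
\prod_x \Big( e(g,g)^{\lambda_x}\; e\big(H(\mathrm{GID}), g\big)^{\omega_x} \Big)^{c_x} = e(g,g)^{s}\cdot e\big(H(\mathrm{GID}), g\big)^{0} = e(g,g)^{s},
$$

which is exactly the blinding factor on the message. The cancellation in the last step needs the same $H(\mathrm{GID})$ in every factor.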
So through the process we did on the last slide, they'll get something with an exponent of lambda A and omega A and something with an exponent of lambda B and omega B. And this is assuming they have these two keys for the same identity. When they multiply these things together, so the reconstruction in this case is a linear combination that's just a sum, so you just multiply things together, and you get sums in your exponent. Okay. Well, now by the definition of the way the sharing worked, the sum of omega A and omega B was 0. So this term is just the identity element in the group. And the sum of lambda A and lambda B is S, so this is the blinding factor that we needed. >>: What is ID again? So if I go to different authorities, I'll use -- they'll use the same ID, that's the point. >> Allison Lewko: Yes, they'll use the same ID. An ID is something tied to you, like a Social Security number or something. Yes. >>: So I didn't get much intuition behind the intermediate steps. >> Allison Lewko: So the intermediate steps on the previous slide for how to get at these shares? Okay. So the point of the intermediate steps -- let me just go back a little bit. I don't know how to go back faster. Okay. So there's sort of -- okay. So you sort of see why the sharing of 0 is canceling out. Okay. So the point of the intermediate steps is to make you introduce your global, your hash into your computation. Because the ciphertext, it's not personalized to a user, right? All it knows is attributes. So the goal of the intermediate steps is to make you put your own hash of ID into the computation so that we can rely on that for collusion resistance. Yes? >>: Would it be fair to summarize it that you're taking secret sharing and pointing it with noise that only cancels out if all the pieces match. >> Allison Lewko: Exactly. That's exactly the idea. We want to force -- but we can't put that in the ciphertext. H of ID, it doesn't belong in the ciphertext.
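The cancellation in the AND example can be checked numerically with a small sketch. It models only the target-group terms and computes no real pairing. The toy group, the stand-in `GT` for e(g,g), and the made-up exponents `h_alice` and `h_bob` for two hashed identities are all assumptions for illustration.

```python
# Toy model of the target-group terms only; no real pairing is computed.
# Assumption: the order-Q subgroup of Z_P^* stands in for the pairing target
# group, GT stands in for e(g,g), and e(H(ID), g) is modeled as GT**h_id for
# a made-up exponent h_id.
P = 2039          # small prime with P = 2*Q + 1 (toy size, not secure)
Q = 1019          # prime order of the subgroup
GT = 4            # 2**2 mod P, an element of order Q

def and_shares(secret, first_share):
    """Two-out-of-two additive sharing mod Q: the shares sum to `secret`."""
    return first_share % Q, (secret - first_share) % Q

def per_attribute_term(lam, omega, h_id):
    """Models e(g,g)^lam * e(H(ID), g)^omega for one attribute."""
    return pow(GT, (lam + h_id * omega) % Q, P)

def combine(terms):
    """AND reconstruction: multiply the terms, which adds the exponents."""
    out = 1
    for t in terms:
        out = out * t % P
    return out

s = 777
lam_a, lam_b = and_shares(s, 123)   # shares of s
om_a, om_b = and_shares(0, 456)     # shares of zero
blinding = pow(GT, s, P)            # the factor that hides the message

h_alice, h_bob = 31, 57             # hypothetical exponents for two hashed IDs

same_id = combine([per_attribute_term(lam_a, om_a, h_alice),
                   per_attribute_term(lam_b, om_b, h_alice)])
mixed_ids = combine([per_attribute_term(lam_a, om_a, h_alice),
                     per_attribute_term(lam_b, om_b, h_bob)])

print(same_id == blinding)    # one identity: the zero shares cancel
print(mixed_ids == blinding)  # two identities: a leftover term remains
```

With a single identity the zero shares sit on the same base and sum away. With two identities they sit on different bases, so the product is off by a factor that depends on omega A.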
We don't know who you are when we are creating a ciphertext. We have to force you to put it in yourself. That's what we're doing with this intermediate randomness, sort of forcing you to use your key to get rid of these intermediate terms, and your key is tied to the hash of your ID. That's what we're going for at a higher level. Does that make sense? Yes. So we have to make you put it in there because it doesn't belong in the ciphertext. But the idea is exactly to do secret sharing where you're forced to introduce your own identity, so if you try to use inconsistent identities, these zero shares won't cancel out. So let me -- >>: Every single authority? >> Allison Lewko: Sorry? >>: You have described it for a single authority, right? >> Allison Lewko: No, this is actually for many authorities, because for the different attributes in the ciphertext formula, your keys can come from different people. Yeah, they can come from different people. Yeah. So one of your -- so in this example, I could be controlling attribute A and you could be controlling attribute B. So this would be the term they'd get from using the key from you. The other term they'd get from using the key from me. And then they multiply them together. >>: To decrypt I need to know the public keys of all the authorities. >> Allison Lewko: Just the ones you're using in your policy. >>: Right. >> Allison Lewko: Yes. So you just need -- to encrypt you just need to go collect the public keys for any of the attributes you're referring to in your formula. >>: So that's how you resolve conflicts, if two authorities rise up and start giving keys out for the same attribute, then it's up to the encryptor who you want to use. >> Allison Lewko: Right. It's up to the encryptor. So as an encryptor, I decide, I think that Vinod controls A and I trust his security. So I will encrypt under his public key for A. Yeah. Okay? Make sense? >>: So is it possible to have an exponential space of attributes on one authority?
Like if I want to give out keys for any arbitrary string. >> Allison Lewko: So in our system, you would need to have a public key for each one. So you'd have an exponentially large public key. But more generally you could try to adapt what's called a large universe ABE system. So we don't explicitly do that. But one could try to do that. You think the attribute could take exponentially many values? >>: Yes. >>: Why don't you write it down [inaudible] public keys. So then you make things a bit more -- >>: That wouldn't work because of [inaudible]. >> Allison Lewko: So we are implicitly assuming sort of a small universe ABE. And you can try to do it with large universe. >>: [inaudible]. >> Allison Lewko: As far as I know. I sort of haven't kept up with it. But as far as I know it's an open question. I sort of feel like the technology is probably out there, because there's large universe IBE. But we don't explicitly do it, so it's an open question as far as I know. >>: There was a lot of math on the board. I couldn't figure out what makes it not work with [inaudible], what makes you need pairings? >> Allison Lewko: What makes you need pairings? Other than I don't know how to do it, I'm not sure I have -- I'm not sure I have a good answer off the top of my head. >>: Did you try it with [inaudible]. >> Allison Lewko: Yeah. And certainly for the security proof, I mean, so for the prime order version we only have a generic group model proof. So to do the static security proof we need even more than this, we need composite order pairings, and we're using pairings in ways that are very necessary in my mind. But, yeah, I don't have a quick answer to that. >>: Small universe to infinite, is there a practical cutoff where you want to leverage in terms of normal attributes and so on? >> Allison Lewko: Yeah, I'm not sure. >>: Which might be the case in many applications. >>: Large universe. >>: [inaudible].
>> Allison Lewko: Yeah, and I guess also having the freedom to not commit yourself to an attribute space ahead of time. >>: This construction already achieves static security, the point with your composite order thing is to -- >> Allison Lewko: Well, actually for this construction, the security is only proven in the generic group model. So the point of our composite order thing is to get static assumptions. Ideally you would want a prime order version from things like DLIN, but the composite order version does have static assumptions. This is only a generic group proof at this point. >>: Static order does encryption. >> Allison Lewko: Yes, I'll get to that if I have time. So this is the mechanics of how the system works. So the idea for collusion resistance, as we've seen, is if you have different hashes of IDs, you have different bases on these exponents which are shares of 0. So they won't just sum in the exponent anymore and they won't cancel out. That's the intuition. So unfortunately I'm not going to talk about the generic group proof because it's not at all insightful. But to get a static security proof we're going to use dual system encryption and composite order groups. Let me just try to give you a brief overview of what dual system encryption is. So this was introduced by Waters in 2009. And the main idea is we're going to have two types of keys and two types of ciphertext. So you have normal keys and normal ciphertexts, which are used in the real system. And they decrypt as normal. So a key will decrypt a ciphertext if it's supposed to, and so on. Okay. But for the proof we additionally have these objects called semi-functional keys and semi-functional ciphertexts.
And these have the functionality that a normal key will still decrypt a semi-functional ciphertext and a normal ciphertext can still be decrypted by a semi-functional key, but suddenly, if I take a semi-functional key and try to decrypt a semi-functional ciphertext, things go horribly wrong. Decryption will always fail in this case. These are two sorts of objects that don't play nicely together. All right. And the idea for the proof is we're roughly going to show that these types of keys and these types of ciphertexts are indistinguishable to a certain point. Okay. So the proof -- so this has been used to do a bunch of stuff that I'm not going to talk about. So the idea for the hybrid security proof is we're going to start with a real game. So in the real game we have the real system, normal keys and a normal ciphertext. And so we're going to go through some transitions of games where first we just change the ciphertext to semi-functional. So at this point keys are still decrypting the semi-functional ciphertext, everything still sort of looks the same. We're going to argue the attacker can't change its advantage because they can't really distinguish between these cases. Then one by one, so we'll keep our ciphertext semi-functional, and one by one we're going to change our keys to be semi-functional. We're going to keep arguing the attacker can't change its advantage in a nonnegligible way, and we're going to end up in a game where everything is semi-functional. Now it's a lot easier for the simulator to run this game, because it doesn't have to produce things that are actually capable of decrypting each other. Now security is a lot easier to prove just directly. So that's the motivation. That's where we're trying to get to. Now, you should be a little skeptical about this framework I've just presented. So there's a catch. So at the point where -- so the ciphertext becomes semi-functional, keys are normal. Fine, everything would still decrypt in theory. Not that much has changed.
Okay. So now we're thinking about the step where the ciphertext has become semi-functional, and our first key is going to change from normal to semi-functional. So we want to create a simulator that's going to create this key in such a way that whether or not it's semi-functional depends on the answer to this hard problem the simulator is trying to solve. So the simulator should not know the nature of the key itself. So it will just be producing this key that's either normal or semi-functional, but it won't know which itself. So if the adversary's advantage changes, it can use this to answer the hard problem. Okay. So the challenge, though, is we're doing an adaptive key query model. So the simulator has to be able to make this key for anything. And it also needs to make this semi-functional ciphertext for anything. So the attacker can't ask for a key that's able to decrypt, but the simulator can try to do that, so maybe it makes this unknown key such that it should decrypt the ciphertext, and it seems it can test for itself by trying to decrypt. If it tries to decrypt and the key was normal, it would work, and if it tries to decrypt and it's semi-functional, then it will fail. Now it seems the simulator has answered its own question. Okay. So this is a paradox we need to avoid. So the way that we avoid this is by introducing something we call nominal semi-functionality. And what this means is that things will be sort of semi-functional in name, but it won't hurt decryption. So what will happen is the simulator will make a key that's either normal or nominally semi-functional. Then if it makes a ciphertext that the key can decrypt, even if the key was nominally semi-functional and has these sort of semi-functional components, they'll magically cancel out with the ciphertext and decryption will succeed anyway. Okay. So what we're going to have the simulator do is it will make a key and a ciphertext that are correlated.
So in the simulator's view decryption will always work because of this correlation. But we're going to hide this correlation from the attacker. So in the attacker's view it should look like these things are just distributed as regular semi-functional things. And so why is this possible? So what separates the attacker's view from the simulator's view is the attacker is not allowed to ask for a key that's actually capable of decrypting the ciphertext. So as long as you look at a key and a ciphertext that are not capable of decrypting, then it will look random in the semi-functional terms, but if the key actually could decrypt the ciphertext, this correlation would be revealed. Yes? >>: So if the ciphertext and key are correlated, then you can decrypt, otherwise not? >> Allison Lewko: Right. >>: Is it hard to come up with a semi-functional ciphertext on your own just from the public parameters? >> Allison Lewko: Depends on the system and what -- yeah, I think it should be. It should be hard, yeah, because if you could, then you could test it against the ciphertext and you could say, yes, it's going to be hard to come up with one on your own. So public parameters will sort of only allow -- public parameters will only specify things in the normal space, and the semi-functional space is really coming from the simulator of the game. Okay? Other questions? >>: Why do you keep changing the normal keys one by one? >> Allison Lewko: Yeah. >>: But you will -- >> Allison Lewko: So what the simulator is doing is actually changing it from normal to nominally semi-functional. In reality. So you can sort of think about it as the computational step will be changing it from normal to nominal. And then there's just an information theoretic step, where we argue it's actually identically distributed in the attacker's view to regular semi-functional. So it's really a two-step process. One of them is information theoretic, so we swallow it into the argument.
>>: Normally in these papers would you do dual system encryption? >> Allison Lewko: Yes. >>: Use dual system encryption in sort of a black box way, or do you have to go inside it and -- >> Allison Lewko: You have to go -- so to prove nominal. So the definition of nominal is tied to the decryption functionality of the system. Right? If I'm going to do this for ABE, or do this for HIBE, I have to go in and tailor it to the functionality of the system. It is a correlation that should only appear when I can actually decrypt. So it should heavily depend on what the definition of the decryption functionality is. So I have to tailor it to every system. But this framework we've used quite generally. This is the same framework we use for IBE, HIBE, lots of other things. >>: But is there a definition of a set of properties that a dual system encryption should have? For example, tomorrow I want to design one of these things based on pattern. >> Allison Lewko: Yeah. So we've written it as an abstraction a few times. Other times we haven't. We haven't been consistent about that. But, yeah, there are a few papers in which we have sort of specified these as properties. In one case we did it for leakage resilience as well, and a few times we've done it without leakage resilience, I think. >>: So does the [inaudible] necessarily give [inaudible] is it a drawback? >> Allison Lewko: No, I really don't think so. This is not necessarily evident every time it's used. But the original paper, for instance, that Brent did proves security for fully secure IBE and HIBE from DLIN and decisional bilinear Diffie-Hellman. And a lot of the dual system schemes have started out in composite order groups and have now migrated into prime order groups with proofs from DLIN.
>>: Do you use one assumption or -- >> Allison Lewko: Typically in the composite order systems we found it convenient to use a small number of assumptions, like three or four, sort of all variants of the same kind of assumption. Typically when these things get transferred to prime order groups, they all collapse to DLIN. But that's sort of an ongoing process of ongoing work. >>: Could it work in prime order groups? >> Allison Lewko: Oh, yeah. So this is sort of a meta conjecture that we have, that everything that we're doing in these composite order groups from these particular kinds of static assumptions really should be doable from DLIN in prime order groups with similar work. This has been done in some particular systems. So, for instance, it's been done for single authority ABE by Okamoto and Takashima. And it was done in the original dual system paper for IBE and HIBE. Okay. So why are composite order groups so good for dual system encryption? Let me give you a sense of why they're very convenient. But I do not believe this is inherent. This is just a nice tool for making things sort of prettier so you can understand what's going on. Okay. So we consider a composite order group whose order is going to be a product of three distinct primes. Okay. So you have a bilinear map. What these three distinct primes give us is subgroups of each prime order that are orthogonal to each other under the bilinear map. If I take an element from the blue subgroup and I pair it with something from the red subgroup, I always get the identity. So what it gives me is three orthogonal spaces to work in. This is a very nice setting for dual system encryption because I can do my main scheme in one space and my semi-functionality will live in another space. And then it's very easy for me to have normal and semi-functional things work together, because I have this extra space that's orthogonal, not hurting anything.
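The orthogonality just described can be seen in a toy model that works directly with exponents. This is only a sketch under stated assumptions: the primes are tiny, an element g^a is represented by a mod N, and the pairing e(g^a, g^b) is represented by a*b mod N, so 0 plays the role of the identity. The function names are illustrative.

```python
# Toy model in the exponent: a group element g**a is stored as a mod N and the
# pairing e(g**a, g**b) = e(g,g)**(a*b) is stored as a*b mod N (0 = identity).
# Assumption: tiny primes, chosen only so the arithmetic is easy to follow.
P1, P2, P3 = 5, 7, 11
N = P1 * P2 * P3

def subgroup_element(prime, k):
    """Exponent of an element of the order-`prime` subgroup (k not divisible by prime)."""
    return (N // prime) * k % N

def pair(a, b):
    """Exponent of the pairing of two elements, relative to e(g,g)."""
    return a * b % N

normal = subgroup_element(P1, 3)    # lives in the main-scheme subgroup
normal2 = subgroup_element(P1, 2)   # another main-scheme element
semi_a = subgroup_element(P2, 4)    # lives in a semi-functional subgroup
semi_b = subgroup_element(P2, 6)    # another element of that same subgroup

# An element with an extra semi-functional component (product in the group).
mixed = (normal + semi_a) % N

print(pair(normal, semi_a))                          # 0: different subgroups are orthogonal
print(pair(mixed, normal2) == pair(normal, normal2)) # extra component is invisible here
print(pair(semi_a, semi_b) != 0)                     # same subgroup: components interact
```

The second check is the "normal and semi-functional things work together" case: the extra component pairs to the identity against anything that lives only in the main subgroup.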
But suddenly when I have two semi-functional things, they'll interact in the semi-functional space and that will stop decryption. It's just a very convenient framework for dual system. >>: Similar abelian groups. >> Allison Lewko: Yeah, abelian groups. They're cyclic groups, actually. Yeah. >>: Cyclic? >> Allison Lewko: Yeah, it's still cyclic. So this is where, for complexity assumptions, we are going to assume that this factorization is secret. So it should be hard to find a nontrivial factor of N. For instance, if the public parameters were maliciously generated, if someone knew the factorization, they would be able to break things. So we do need that to stay secret. Okay. Okay. So what kind of problems do we rely on in these groups? So in general we rely on things that belong to the class of subgroup decision assumptions. And these are roughly saying that in the generic group model, if you're given random elements from different subgroups, the only way you can tell them apart is just by pairing them against each other, seeing if you get the identity or not. This is sort of testing for these orthogonalities. So as an example -- well, okay, so more generally we have a group order which is a product of many primes. So for each subset of the indices of these primes we have a corresponding subgroup whose order is the product of those primes. And so suppose you're given, say, a random element G of the subgroup of order P1 and a random element H of the subgroup of order P2 times P3, and you're trying to distinguish a challenge T that's either from P2 P4 or from P3 P4. So in the generic group model, what you are going to do is just pair these things together. If you pair your challenge element T against G, well, P1 doesn't appear in either case of T, so you will get the identity element both times. Okay. If you try to pair it against H, in one case you'll get a contribution from the P2 terms. In the other case you'll get a contribution from the P3 terms.
So each time you'll just get some random looking group element and you won't know which subgroup it's coming from, so you won't be able to distinguish these cases. So more generally, the general subgroup decision assumption says that if you're given a bunch of things which are random elements from different subgroups, so I'm illustrating subgroups as subsets of the different primes, and you're trying to distinguish a challenge which is either from one case or the other case, then as long as all the things you're given correspond to sets that intersect both cases, or neither case, whenever you try testing with pairings, you get something both times or you get nothing both times. So at least in the generic group model it should be a hard problem. Okay. So these are the kind of assumptions that we rely on. And we use a few, more than one, I think it's three or four specific instances of this. And I think for this work we have one static assumption that doesn't quite fall into this framework, but in general in dual system encryption you can usually work in this class of assumptions. >>: What do you mean by three, four specific instances, if you make this general assumption, that's enough. >> Allison Lewko: Right. So we don't make the general assumption explicitly. You could. >>: Everything gets done. >> Allison Lewko: Except for in this particular, for this system we actually have one that doesn't fall in the general, the static one that doesn't quite fall. But, yeah. Okay. So the way that our system in composite order groups works is subgroup one is really our main space, where the scheme is happening really just as it happened before. So everything in subgroup one is the same. And then our semi-functional things will just spread into these other spaces. So semi-functional ciphertexts have additional components in both spaces, and we'll have two types of semi-functional keys. Maybe I should ask at this point when should I stop?
How much time do I have? >>: Five minutes. >> Allison Lewko: Five minutes. Okay. So I'll try to tell you the main idea of how this nominal part works. Okay. So why do we have two types of semi-functional keys? So to do this step where I'm making things nominal, and then I'm arguing that the attacker can't tell information theoretically, what I'm going to do is I'm going to rely on the fact that, okay, so remember we had this sharing of zero in the ciphertext, right? So what's going to happen in the semi-functional ciphertext is the sharing of zero is going to spread into these subspaces, except it will be sharing something random. The semi-functional ciphertext is sharing something random in the red space and something random in the green space. So now if you try to decrypt with, say, a semi-functional key that has components in the red space, you'll be reconstructing something random in the red space, which will prevent you from decrypting. The way we're going to achieve nominal semi-functionality, so the simulator can't test things for itself, is we'll say we're sharing something random, but actually we'll be sharing zero again. So in the nominal ciphertext, for instance, we will be sharing something random in the green space but actually sharing zero in the red space. And our key that we're not going to know the form of is going to be coming into the red space, but since we're really sharing zero there, if we try to decrypt, we won't see the contribution of the red pieces. Okay. So we need to hide what we're sharing in a linear secret sharing scheme. So if you have only one key and one ciphertext, this is not too hard, because you don't have enough attributes to decrypt. So essentially the shares for the attributes you don't have keys for are blinded. And information theoretically, the public parameters are just in G P1, so they don't give out any information about what's going on in the red space.
What you really have is some blinded things, and some shares that are information theoretically revealed, but they're not enough to reconstruct the secret. If you really only have shares that are insufficient to reconstruct the secret, the fact you're sharing zero is information theoretically hidden. But now I have a collusion problem in the information theoretic world, because if you had several keys that were all different, information theoretically revealing different shares, you would see that you were sharing zero. So what I do in the hybrid organization, to get around this -- so let me skip ahead a little bit. Mostly just what I said. Okay. So the hybrid works by first my ciphertext will go semi-functional. And then my first key will be semi-functional of type one. This is living in the red space. Then I'll change it to be type two. And then my next key will be semi-functional of type one. I'll change it to be type two. So the important thing about this is I only have -- so when a key first becomes semi-functional, it does so in the red space, where it's sort of the only key that's happening there. So I'm bringing things in to be semi-functional where really I'm only dealing with one key and one ciphertext at a time. And this allows me to make my information theoretic argument go through. And then I change it to the other space so that I've sort of saved my work, making it semi-functional, and then I can move on. So because I only have one key coming into this red space at a time, that's where my information theoretic argument lives, then I change it to being semi-functional of the other form. So as I'm changing this third key, the keys behind it aren't leaking information about what's going on in the red space, because now they've been changed into the green space. >>: Other information [inaudible] versus where you say whatever type. >> Allison Lewko: The switch from type one to type two?
>>: Or even before that, from sharing zero to sharing the number. >> Allison Lewko: So the way the steps go is computationally I'm going to be sharing zero in the ciphertext in the red space and bringing in my key in the red space, and then information theoretically I argue I was really sharing random. And then I computationally change my key. So now it's random-random in the red space. Now I do a computational change to flip it to the green subgroup. And then I do an information -- then I'm really sharing 0 again in the ciphertext, I bring in the next key, and information theoretically I'm sharing random. And I iterate this. Okay? Is there a question? So this is really the main idea of how the proof works. And then when I get to the end and everything is semi-functional, it's not too hard. So the real challenge here is keeping the fact that you're giving out many keys from destroying your information theoretic security. So the way to do that is to organize your hybrid around it: bring things into one space, move them to the other space so they don't ruin things for the next key, and then keep moving forward. This can be accomplished with just a hybrid. There's one more slight issue with this, which is that the information theoretic argument is also sensitive to how often you've used an attribute. Okay. Because the shares are blinded by terms that are fixed with the attribute. So if you use an attribute several times in your formula, this information theoretic security will go away. So we need to specify that you can only use an attribute once in each formula. Now, you can design the system around this. If you really want to use an attribute six times, for instance, you just encode that attribute as six different things that really mean the same thing semantically but are formally different, and you can do this. But it's a bit of an efficiency issue. It would be nicer to get rid of it, but we don't know how at this point. >>: [inaudible]. >> Allison Lewko: Okay.
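The ordering of games in the hybrid described above can be listed mechanically. This is a minimal sketch, assuming illustrative labels and a hypothetical helper name `hybrid_games`; the nominal step for each key is folded into its move to type 1 rather than shown as a separate game.

```python
# Sketch of the order of games in the hybrid, as described in the talk.
# Assumption: the labels and the function name are illustrative, and each
# key's "nominal" step is folded into its move to type 1.
def hybrid_games(num_keys):
    """Return the games as (ciphertext_state, [key_state, ...]) tuples."""
    keys = ["normal"] * num_keys
    games = [("normal", list(keys))]                 # the real game
    games.append(("semi-functional", list(keys)))    # ciphertext changes first
    for i in range(num_keys):
        keys[i] = "type 1"                           # enters the red subgroup alone
        games.append(("semi-functional", list(keys)))
        keys[i] = "type 2"                           # parked in the green subgroup
        games.append(("semi-functional", list(keys)))
    return games

for ciphertext, keys in hybrid_games(2):
    print(ciphertext, keys)
```

In every game at most one key is of type 1, which is the property the information theoretic argument relies on: the keys changed earlier have already been moved out of the red space.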
So that's the system. That's the hybrid proof. Okay. This I've already sort of said. Okay. And so I'll skip over the summary and just go to the open questions because this is more interesting. So obviously prime order groups under simple assumptions is where you'd want this to go. There's ongoing work in this area for specific systems, and for transformations in general. So this is very promising. Adaptive corruption I think also would be a very interesting thing to try to get. So adaptive corruption of the authorities while the system is online. We don't know how to do that, but it would be a nice contribution. Also distributed setup. You could try to replace this trusted setup by multi-party computation, for instance, trying to make it efficient. So that's an interesting direction. Removing the random oracle is the one I find the most interesting and I have absolutely no idea how to do. So if people have thoughts on this, please let me know. I think that would be great. And we always have the question, can we do even more expressive functionality, can we go beyond linear secret sharing. I don't really have any ideas on that right now either. So I think these are the interesting questions to do next. >>: Big universe -- >> Allison Lewko: And large universe I didn't put up there, but it should be up there. That should be done. Yeah. And I'm not aware of it being done yet. >>: And maybe having the same attribute [inaudible] text. >> Allison Lewko: That's another one that should be up there. I didn't know that I'd have time to technically talk about it. But getting rid of this restriction would be a big deal. And it's in single authority ABE too. This is just inherited from single authority. So it's a problem there as well that we still don't know how to get around. You can get around it. So if you're willing to go to a static security in terms of queries, then you don't need any such thing.
But for fully secure ABE, I believe it's a restriction in sort of every system we have now. So that would be very nice to get rid of. >>: In other words, what this does is you place an a priori bound on the size of the formula that you can use. >> Allison Lewko: You place an a priori bound on the number of times an attribute can be used. So for small universe, it's equivalent to bounding the whole thing. For large universe, it may not be. >>: Sure. >> Allison Lewko: But, yes, you're correct. Okay. >>: When you have three primes, what is the -- security versus factorization attacks, how big should they be in order to stop -- >> Allison Lewko: Yeah, that's a very relevant question. I don't know. I sort of don't keep -- I mean, really we're thinking of this as a tool for eventually getting a system in prime order groups. I wouldn't want to live with that long term, really not advocating that as where you'd want to be. So the goal would be just, this is a nice conceptual idea that should be ported into prime order groups, where maybe it's more cumbersome. We just think it's a nicer process to do it as a two-part step. But if I'm really going to deploy the system, I would do step two and I would make it prime order first. >>: What is the secret thing in the setup of the prime order version that we've discussed? >> Allison Lewko: So there it's not as clear to me. So if you created some -- if you sort of knew something about the hash function, you know, that would be a problem. But if the hash function was something publicly agreed to be good, then it's less -- maybe so, yeah, the global setup is sort of less worrisome in the prime order version that we have now. But I should mention the way that these things are typically transferred from composite order to prime order. So what's more worrisome about the prime order version is not the setup but just the fact it's a generic group proof.
If I were going to do this, take this system and put it in prime order, the way I would typically start is I would say I want these orthogonal subspaces. So I'm going to build these into subspaces of exponent vectors in prime order groups, and then the global setup would have to establish bases for these subspaces in the exponent. And if someone knew the bases for the semi-functional spaces, that would be a bad thing. Probably in that transformation process I would introduce things that I want to keep secret into the global setup. The way it is now the global setup looks pretty good, it's a group and a hash function. But probably to get the static security I'd introduce things that I wanted to keep secret. But maybe not. It would depend on how you did the transformation. >> Vinod Vaikuntanathan: Let's thank our speaker. [applause]