COMPUTER SYSTEMS RESEARCH

Portfolio Update 2nd Quarter 2009-2010

Research Paper, Poster, Slides, Coding, Analysis and Testing of your project's program.

Name: Sam Zhang, Period: 5, Date: 1/27/10

Project title or subject: Dynamic word-sense disambiguation with semantic similarity

Computer Language: Python

Describe the updates you have made to your research portfolio for 2nd quarter.

1.

Research paper: Paste here new text you've added to your paper for 2nd quarter. Describe and include new images, screenshots, or diagrams you are using for 2nd quarter.

Specify text you've written for any of the following sections of your paper:

- Abstract

Given a list of words, how can we find the word least like the others? This question gains relevance when the other words are themselves ambiguous and must be cross-checked against one another for contextual clues. Through a heuristic search across a corpus of lexical relations, the most similar senses of words have been found to also be the most likely intended meanings, even when the only context given is the other words from which each word must be differentiated.

- Introduction or Background

How can similarity between words be measured? Semantics, traditionally taken to mark the difference between human and computer in the Turing Test, has increasingly become the domain of machines as technology improves its grasp of language. A database of relations between words is known as an ontology, a word derived from the philosophical term meaning "the study of beings and their relations." By using hypernym similarity within the WordNet corpus, this project disambiguates lexical semantics and discovers when a word occurs out of context.

- Development section(s) – the work you've actually done

Added to end of Theory: Through ontological semantics, the least common hypernym can be determined between different senses of different words. The most similar senses can then be determined through an algorithm based on the hypernym distance and the root distance.

Added to end of Description: The ontological algorithm used traces the words upstream to their least common hypernym, and compares the distance of the least common hypernym to the root. This number is factored together with the distance of the words to each other (i.e. the average distance to the least common hypernym). With an index relating the similarity between two words, a metric now exists for large scale comparison between different sets of different words.
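The upstream trace described above can be sketched in a few lines of Python. This is a minimal illustration, not the project's actual code: the `HYPERNYM` table is a hypothetical toy taxonomy standing in for WordNet, the function names are made up for this example, and distances are counted in edges (so the root is at distance 0 from itself).

```python
# Hypothetical toy taxonomy (not WordNet data): each concept maps to its hypernym.
HYPERNYM = {
    "entity": None,
    "animal": "entity",
    "person": "entity",
    "dog": "animal",
    "cat": "animal",
    "clown": "person",
}


def path_to_root(concept):
    """Return the chain [concept, hypernym, ..., root]."""
    path = []
    while concept is not None:
        path.append(concept)
        concept = HYPERNYM[concept]
    return path


def least_common_hypernym(a, b):
    """Return the deepest concept that subsumes both a and b."""
    ancestors_of_a = set(path_to_root(a))
    # Walking upward from b, the first shared concept is the deepest one.
    for concept in path_to_root(b):
        if concept in ancestors_of_a:
            return concept
    return None


def distances(a, b):
    """Return (lch, edges from a to lch, edges from b to lch, edges from lch to root)."""
    lch = least_common_hypernym(a, b)
    dist_a = path_to_root(a).index(lch)
    dist_b = path_to_root(b).index(lch)
    dist_root = len(path_to_root(lch)) - 1
    return lch, dist_a, dist_b, dist_root


print(distances("dog", "cat"))
print(distances("dog", "clown"))
```

In this toy table, "dog" and "cat" meet at "animal", while "dog" and "clown" meet only at the root, which is the kind of difference the similarity index is meant to capture.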

- Results – if you're reaching any preliminary conclusions

The strength of the project's ontological traversal will be measured in two aspects: accuracy and time.

Accuracy will be the initial focus, and optimizations can follow once accuracy is ensured. As a prototyping step, a graph of the semantic network could be created as the program conducts the bidirectional search. Currently, following the Wu and Palmer algorithm on which this project's algorithm is based, the project has an 88/100 accuracy rating for determining the most similar words out of a pool, as compared with human judgment.

- Additions to your bibliography

Wu & Palmer, "Verb Semantics and Lexical Selection"

- images, screenshots, or diagrams in your paper

No new ones

2.

Poster: Copy in new text you've added to your poster for 2nd quarter.

List the titles you're using for each of your subsections. Include new text you're adding

- Subsection heading: Abstract and text:

Revised: Through a heuristic search across the hypernym ontology, computational semantics can discover the contextual meaning of words, even when the only context given is the other words from which each word must be differentiated.

- Subsection heading: Background and Introduction and text:

Completely remade: To create a similarity metric for semantics, this author uses publicly available lexical ontologies such as WordNet. The relationships most relevant here are hypernymy and hyponymy, in which one word “is of the class of” another. See Fig 1.

- Subsection heading: Discussion and text:

To approach the issue, this project researched the feasibility of using semantic web techniques to perform algorithmic searches across the lexical ontology, using the hypernym depth, least common hypernym, and distance to the root. The current working implementation is in Python and will soon be ported to the Web Ontology Language, to support the transition of the Internet into the so-called Semantic Web, or Web 3.0.

Results:

The project disambiguates word sense at around the 90% benchmark, failing most often where human judges are themselves inconsistent about the similarity of different words. Semantic similarity thus appears to be an accurate, if cumbersome, test of word sense.

- images, screenshots, or diagrams in your poster.

3.

Coding: attach new code that you wrote 2nd quarter. Describe the purpose of this code in terms of your project's goal and research. Also provide clear commentary on the main sections of your code.

The program currently uses an implementation of the Wu and Palmer heuristic, detailed in their paper "Verb Semantics and Lexical Selection", which uses the hypernym distance to the "least common hypernym", the distance to the root, and the distance between words. The full algorithm is thus:

$$\mathrm{ConSim}(C_1, C_2) = \frac{2 \cdot N_3}{N_1 + N_2 + 2 \cdot N_3}$$

"C3 is the least common superconcept of C1 and C2. N1 is the number of nodes on the path from C1 to C3. N2 is the number of nodes on the path from C2 to C3. N3 is the number of nodes on the path from C3 to root."
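As a rough illustration of how the measure behaves, the sketch below evaluates it directly from the three counts, assuming the standard form of the Wu and Palmer measure, 2*N3 / (N1 + N2 + 2*N3). The function name `con_sim` and the sample counts are made up for this example and are not taken from the project's code or results.

```python
def con_sim(n1, n2, n3):
    """Wu and Palmer conceptual similarity from the three path counts.

    n1, n2: path lengths from each concept up to the least common superconcept.
    n3: path length from the least common superconcept to the root.
    """
    denominator = n1 + n2 + 2 * n3
    if denominator == 0:
        raise ValueError("all three counts are zero; similarity is undefined")
    return 2.0 * n3 / denominator


# Illustrative counts only (not measured from WordNet).
print(con_sim(1, 1, 3))  # close concepts sharing a deep superconcept: 0.75
print(con_sim(3, 3, 1))  # distant concepts meeting near the root: 0.25
print(con_sim(0, 0, 4))  # a concept compared with itself: 1.0
```

The score rises as the shared superconcept sits deeper in the hierarchy (larger N3) and falls as the two concepts move further from it (larger N1 and N2), so it always lies between 0 and 1.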

4.

Running your project – describe what your project's program actually does in its current stage.

Include current analysis and testing you're doing. Specifically what have you done this quarter.

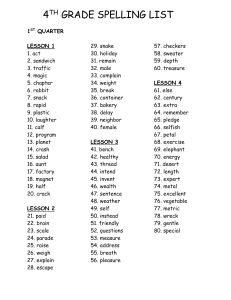

The results are shown in Figure 1 above.

The higher the number, the more similar the words are. Notice that the only set that failed the similarity test was "acrobat, clown, and man", with man scoring as more similar to each of the other two words than they scored to each other. This is due to the lack of a "Circus Performer" entry in WordNet, an issue which will be addressed in the following section (What do you expect to work on next quarter). The Metallica test doesn't count because it wasn't expected to work, as WordNet clearly doesn't include references from popular culture. Again, this will be addressed in the following section. The problem of moon, sun, and earth returning the wrong pairing under a direct hypernym relation is circumvented by the Wu-Palmer algorithm's inclusion of direct word distance.
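One way to turn pairwise scores like these into an "odd one out" decision is to pick the word whose total similarity to the rest of the set is lowest. The sketch below is only an assumption about how such a test could be run: the selection rule, the `PARENT` table (a hypothetical toy taxonomy, not WordNet), and all function names are illustrative. N1 and N2 are counted in edges and N3 in nodes including the root, which is a convention chosen for this example.

```python
from itertools import combinations

# Hypothetical toy taxonomy (not WordNet data): each concept maps to its hypernym.
PARENT = {
    "entity": None,
    "animal": "entity",
    "vehicle": "entity",
    "dog": "animal",
    "cat": "animal",
    "car": "vehicle",
}


def path_to_root(concept):
    """Return the chain [concept, hypernym, ..., root]."""
    path = []
    while concept is not None:
        path.append(concept)
        concept = PARENT[concept]
    return path


def similarity(a, b):
    """Wu and Palmer style score: 2*N3 / (N1 + N2 + 2*N3)."""
    path_a, path_b = path_to_root(a), path_to_root(b)
    # First concept on a's upward path that also lies on b's path.
    common = next(c for c in path_a if c in path_b)
    n1 = path_a.index(common)        # edges from a up to the common hypernym
    n2 = path_b.index(common)        # edges from b up to the common hypernym
    n3 = len(path_to_root(common))   # nodes from the common hypernym to the root
    return 2.0 * n3 / (n1 + n2 + 2 * n3)


def odd_one_out(words):
    """Return the word with the lowest summed similarity to the other words."""
    totals = {word: 0.0 for word in words}
    for a, b in combinations(words, 2):
        score = similarity(a, b)
        totals[a] += score
        totals[b] += score
    return min(words, key=lambda word: totals[word])


print(odd_one_out(["dog", "cat", "car"]))  # car
```

A missing intermediate concept changes the outcome of this rule in the same way as the "Circus Performer" gap described above: without a shared specific hypernym, two related words meet higher in the hierarchy and score lower against each other.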

5.

What is your focus for 3rd quarter for your program and project?

I plan to cover terms in the contemporary semiotic sphere by adding support for OpenCyc. OpenCyc is an ontology similar to WordNet, but it differs because 1.) its backbone is the Web Ontology Language (OWL), a true ontological language, 2.) it includes contemporary cultural terms in its corpus, and 3.) it requires its own programming language. Thus the transition of this project to OWL will 1.) be a complete port of the code, 2.) enable a greater inclusion of cultural terms (e.g. Metallica, as per the previous test), and 3.) facilitate the transition toward Semantic Web accessibility, the next step in AI development at large.