A Terabit Switch Fabric with Integrated High

advertisement

A Terabit Switch Fabric with Integrated High-Speed CMOS Transceivers

Walter Wang

Kewei Yang

Taylor Lin

HotRail Inc.

2075 Zanker Road

San Jose, CA 95129

{wwang, kyang, tlin}@hotrail.com

Abstract

This paper details a highly integrated, fixed length, cell-based terabit switch fabric that provides both high

performance cell switching and easy system implementation. Fundamental to the switch fabric design is the

integration of high-speed CMOS transceivers to provide low power, low pin-count and low chip-count system

solutions that have a raw switching capacity up to 2Tbps. The switch fabric can support user bandwidth up to

640Gbps with an internal speed up factor of 2 for 10Gbps Ethernet and ATM/IP switch applications (64x64x10Gbps

or 256x256x2.5Gbps). It uses a combined input and output queued (CIOQ) crossbar architecture to approximate the

performance of output-queued (OQ) switches to provide better quality of service (QoS) on a per class per port basis.

Multiple slower speed crossbar switching domains are used to overcome the short cell time problem associated with

high link rates. For ATM cells at a 10Gbps link rate, the cell time has been doubled to approximately 100ns which

simplifies both scheduling and system design. Fault tolerance is supported naturally with multiple independent

switching domains and the switch fabric supports the lossless back pressure protocol on a per class per port basis.

1. Introduction

Recent progress in low power, low pin-count, highly reliable CMOS transceiver technology [1] has greatly facilitated

the design of highly integrated, high capacity switch fabrics. High capacity switch fabrics (64x64x10Gbps or

256x256x2.5Gbps) require very high internal bandwidth, normally in the multi-terabits per second range. Lowpower, high-speed integrated CMOS transceiver technology makes it possible to aggregate large amounts of traffic

onto a single ASIC chip for switching, thus reducing the pin-count, chip-count and power consumption of a switch

fabric.

Utilizing a family of high-speed CMOS transceiver technologies, we designed a switch fabric that supports high

capacity, fixed length cell switching with the CIOQ crossbar as the underlying architecture. CIOQ crossbars are

defined to be input-queued (IQ) crossbars (with virtual output queues (VOQs) to remove head-of-line blocking) with

an internal speed up factor greater than 1.

One of our design goals was to provide the level of QoS that approximates what could be provided by an OQ switch.

Shared-memory based OQ switches can provide the best delay control on a per class per port basis with fair

queueing algorithms, [2][3][4] but these are not practical. Simulation shows that the average delay of practical CIOQ

crossbar switches with a speed up of 2 approximates that of OQ switches. [5].CIOQ allows the switch fabric to

provide much better delay control than the IQ crossbar switches [5][6] while at the same time only requires a modest

speed up for the memory bandwidth and internal links. These features make it possible for the CIOQ crossbars to be

used as the underlying architecture for a high capacity switch fabric that approximates the performance of an OQ

switch.

There are several difficult problems that must be solved before the CIOQ architecture can be used for commercial

high capacity switches with high link rates. First, the switch fabric must have at least 2Tbps of raw internal

bandwidth to support 640Gbps user switching capacity with a speed up of 2 due to overhead. This puts a great deal

of pressure on transceiver technology. Second, even with high-speed integrated transceiver technology, there is a

need for multiple ASIC chips to provide enough switching capacity, and there is also a need for a better way of

organizing the switching capacity (like fully bit-sliced [7] or other alternatives). Third, the switch fabric needs to

overcome the short cell time problem. A straightforward implementation of the CIOQ crossbar with a speed up of 2

could cause the cell time for an ATM cell (at 10Gbps link rate) to be only 25ns. There is no practical crossbar

scheduler that can operate this fast for switch sizes up to 64x64. A short cell time will also present challenges to

other aspects of the switch fabric design.

To solve the problem of internal bandwidth requirements, a family of high-speed, low power, low pin-count, highly

reliable CMOS transceivers [1] has been developed that are the key enabling technology in the switch fabric design.

Because these transceivers are done in CMOS, they can be easily integrated into the switch fabric to significantly

reduce power and pin count along with the overall component count in high-speed switching applications. For

example, a single crossbar chip with these integrated transceivers can provide 256Gbps aggregate bandwidth while

consuming less than 10W of power. To solve both the short cell time problem and the need to better organize

switching capacity, the switch fabric was designed with eight slower speed switching domains operating in parallel.

Each domain contains either a single or multiple crossbar chips to provide the necessary switching capacity. The

incoming traffic is queued at the ingress port and then dispatched uniformly to the switching domains. Traffic

coming from the switching domains is then aggregated at the egress port. Overall, the switch fabric approximates a

CIOQ crossbar switch with a speed up factor of 2 and at the same time doubles the cell time for ATM cells to

approximately 100ns. The relatively long cell time allows for the design of the crossbar scheduler to be much easier.

The multiple domain design also allows much easier system implementation of the switch fabric than a fully bitsliced architecture. Because all switching domains are independent, fault tolerance is supported naturally without the

need to provide extra redundant switching capacity.

In addition to trying to approximate OQ switches via simulation, there are theoretical approaches that look for

solutions that behave identically to an OQ switch under all traffic patterns.[8][9][10] Researchers have shown that with

certain scheduling algorithms and data structures, a CIOQ switch with a speed up factor of 2 can behave identically

to an OQ switch. [8] However, the results have been theoretical so far and there is no practical way of implementing

those approaches.

2. First Generation Integrated Terabit Switch Fabric

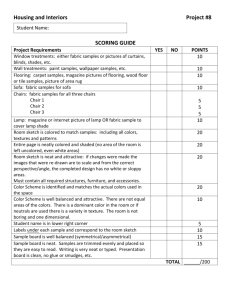

The switch fabric is protocol independent, scalable, and is implemented as a set of ASIC chips. The building blocks

of the switch fabric consist of high-speed integrated CMOS transceivers, queueing chips (Qchips), crossbar chips

(Xchips), and MUX chips (Mchips). The high-speed transceivers include 8Gbps parallel channels and 2.5Gbps

serial links that allow for low chip-count, low-pin count, low power and highly integrated implementations. The

switch fabric supports five system configurations: 40Gbps, 80Gbps, 160Gbps, 320Gbps and 640Gbps. Figure 2.1

shows the block diagram for a 320Gbps configuration and Figure 2.2 shows the block diagram for a 640Gbps

(2Tbps raw switching capacity) configuration. The 320Gbps configuration requires only 24 total chips while the

640Gbps configuration requires only 120 total chips. A single crossbar chip with integrated transceivers can provide

an aggregate throughput of 256Gbps with a pin-count of less than 650 pins. Compared to other popular solutions,

the switch fabric scales to larger port configurations (640Gbps), requires less than one-tenth the components and

uses only one-fourth the total power of an equivalent system.

2.5Gbps

SkyRail

8Gbps LiteRail

16x

Qchip

Xchip

16x

Qchip

Xchip

16x

16x

Qchip

Figure 2.1

8x

Xchip

320Gbps Router/Switch Configuration

2.5Gbps

SkyRail

8 Gbps LiteRail

8Gbps LiteRail

16x

Qchip

16x

Qchip

16x

Qchip

Mchip

8x

16x

Mchip

Xc

hi

p

Xchip

Scheduler

8 independent

switching domains

Qchip

Mchip

X

Xc

hi

Figure 2.2

640Gbps Router/Switch Configuration

pc

The switch fabric is designed to implement the CIOQ crossbar architecture with 8 slower speed switching domains

operating in parallel. Although the switch fabric has an internal speed uphi

of 2 for better QoS, the 8-switching

domain design allows each domain to operate at only half the link rate. To provide an internal speed up of 2 for the

user bandwidth, the raw switching capacity of the switch fabric is 3.2 times

p the link data rate. For example, for every

10Gbps link, the switch fabric allocates 32Gbps internal bandwidth. Of that, 20Gbps is used for the switching of

32x

16x

Xchip

8x

Qchip

Mchip

Scheduler

payload, the other 12Gbps bandwidth is used for overhead, which includes requests, grants, backpressure

information, and other control information. The switch fabric supports 8 priorities (classes) with a per port per class

based delay control. The chipset uses sideband signaling via a parallel channel as the control mechanism between

the components. The chipset switches fixed cell sizes, which is optimal for all protocols (IP, ATM, etc.) allowing

the architecture to be protocol independent.

2.1 Integrated High-Speed CMOS Transceiver Technology

The low power, low pin-count, high reliability, high-speed CMOS transceivers are suitable for chip-to-chip, box-tobox, and a variety of backplane implementations.

8Gbps LiteRail Parallel Channel. The CMOS parallel transceiver is derived from the HotRail Channel™ [1]. The

HotRail Channel is a 16Gbps full duplex chip-to-chip interconnect using only 22 signal pairs and provides the

underlying technology for the switch fabric. The same advanced high-speed mixed-signal technology of the HotRail

Channel has been applied to the high-speed LiteRail Channel, which provides 8Gbps bandwidth per direction at a

data rate of 1.6Gbps per differential signal pair with less than 10 -15 BER. The transceiver utilizes 24 signal pins and

6 power and ground pins and can drive 39” 50 ohm PCB trace with two connector crossings. It has a built-in selfcalibration circuit that optimizes the data transfer rate and corrects up to 1.2ns line-to-line data skew. Each

transceiver consumes less than 400mW which enables multiple instantiation of transceivers into a single ASIC chip

to dramatically improve the per chip bandwidth. Furthermore, the 8Gbps parallel channel requires no external

termination resistors. Latency through the link is less than 8ns because there is no need for data encoding and

decoding.

2.5Gbps SkyRail Serial Link. The CMOS serial link transceiver is designed to drive up to 30 meters of cable at

2.5Gbps per direction on a single differential pair. The transceiver is also designed in a standard 0.25u CMOS

process with power dissipation of 350mW. It has a transmitter side pre-equalization circuit for compensating high

frequency attenuation through PCB or cable. It supports both AC and DC coupled transmission, and both 50 ohm

and 75 ohm transmission lines. It has built-in comma detection and framing logic. The parallel interface is 20-bits

running at 125MHz. The transceiver can also operate in 1.25Gbps mode with the parallel interface running at

62.5MHz. An automatic locked to reference feature allows the receiver to tolerate very long streams of consecutive

1’s or 0’s. A frequency difference up to +/-200ppm between two ends of the serial link is tolerable. Multiple serial

link macros can be integrated into a single ASIC chip to achieve desired bandwidth. The transceiver has an internal

loopback mode for at-speed BIST.

2.2 Qchip – Ingress Port Processing and Egress Port Processing

On one side, the Qchip interfaces to a line card or the network processor with 16 high-speed serial links providing a

total of 32Gbps bandwidth. Each serial link runs at 2.5Gbps and provides effective bandwidth of 2Gbps. On the

other side, the Qchip interfaces with the 8 switching domains with 8 parallel links providing a total of 64Gbps

bandwidth. Each parallel link can provide 8Gbps bandwidth.

Internally the Qchip can be divided into two portions: The ingress processing portion and the egress processing

portion. Each Qchip can support either 2 OC-192 ports (10Gbps) or 8 OC-48 ports (2.5Gbps) or 1 OC-192 port and

4 OC-48 ports.

Qchip Ingress Processing. The Qchip contains on-chip SRAM which enables it to maintain 512 uni-casting VOQs

for every ingress port and 8 multicasting queues for every ingress port. The 512 uni-casting VOQs are divided into

64 groups targeting 64 egress ports. Each group has 8 VOQs for 8 priorities. The 8 multicasting queues are for the 8

priorities. The ingress port scheduler sits between the queues and the 8 outgoing parallel link ports and uniformly

dispatches cells from the queues to the Xchips via the 8 outgoing parallel links. The scheduling mechanism is fully

programmable and can support either strict or weighted priority.

Qchip Egress Processing. The Qchip maintains 8 OQs for each egress port on the Qchip. Each OQ corresponds to a

priority. The OQs are shared-memory based and are implemented as on-chip SRAMs. The egress port scheduler sits

between the OQs and the egress port and it uses a weighted round-robin algorithm to control delays of the cells for

each of the priorities.

2.3 Xchip – Crossbar Switch

The real cell switching is done in the 8 switching domains. The 8 domains are completely independent and each

operates at half the link rate. For an ATM cell with a 10Gbps incoming link data rate, the cell time is approximately

100ns. A scheduler can finish one cell scheduling for a 64x64 crossbar within one cell time. The Xchip has built-in

crossbars as the data path for the switch fabric. Each Xchip has 16 parallel channel interfaces. With 8Gbps per

parallel channel per direction, an Xchip has an aggregate throughput of 256Gbps.

The Xchip supports 1-> N multicasting and the ratio of bandwidth allocated for uni-casting vs. multicasting can be

programmed via configuration registers.

2.4 Mchip

Depending on system design requirements, the links between Qchips and Xchips are either 8Gbps parallel channels

or these 8Gbps parallel channels and a Muxing chip (Mchip). For smaller capacity systems (<=320Gbps), the

parallel channels are ideal and can result in a more compact design with dramatically fewer chips. For larger systems

(>320Gbps) the Mchips will be used for bit slicing and protocol conversion between the Qchips and the Xchips.

Figure 2.2 shows the system configuration using Mchips for connecting Qchips and Xchips while Figure 2.1

illustrates the use of only the parallel channels as the interconnect technology.

2.5 Fault Tolerance & Lossless Backpressure Protocol

Fault Tolerance. The switch fabric naturally supports fault tolerance without requiring additional redundancy logic.

A malfunctioning link, chip, or switching domain will be automatically disabled and the traffic will then be

automatically forwarded to the working links and domains. Even when one switching domain is disabled, the

remaining seven switching domains can still provide a speed up of 1.8 times that of the link data rate. In this case,

the switch fabric continues to provide good performance.

Lossless Backpressure Protocol. The switch fabric supports lossless backpressure protocol on a per class per port

basis. The backpressure information can be generated by the line card network processor at the egress port, the OQs,

or the VOQ in the Qchips and can be transferred all the way back to the line card network processor at the ingress

port.

3. Summary

The terabit switch fabric with integrated high-speed CMOS transceivers makes it possible to aggregate large

amounts of traffic onto a single ASIC. With raw switching capacity of 2Tbps and user bandwidth of up to 640Gbps,

the switch fabric provides for high performance, low power, low pin-count and low chip-count system solutions for

10Gbps Ethernet and ATM/IP switch applications.

4. References

[1] K. Yang, T. Lin, Y. Ke, “A Scalable 32Gb/s Parallel Data Transceiver with On-chip Timing Calibration Circuits”, IEEE ISSCC, Feb. 2000,

San Francisco, CA

[2] A. Demers, S. Keshav, S. Shenker, “Analysis and simulation of a fair queueing algorithms”, in Proc. SIGCOMM’89, vol. 19, no. 4, Sep.

1989, pp. 1-12.

[3] A. Parekh and R. Gallager, “A generalized processor sharing approach to flow control in integrated services networks: the single-node case”,

IEEE/ACM Transactions on Networking, vol. 1, no. 3, Jun. 1993, pp. 344-357.

[4] M. Shreedhar and G. Varghese, “Efficient fair queueing using deficit round-robin”, IEEE/ACM Transactions on Networking, Vol. 4 , Issue 3

(1996), pp. 375-385.

[5] N. McKeown, "A Fast Switched Backplane for a Gigabit Switched Router", Business Communications Review, December 1997.

[6] N. McKeown, "iSLIP: A Scheduling Algorithm for Input-Queued Switches", IEEE/ACM Transactions on Networking, Vol 7, No.2, April

1999.

[7] N. McKeown, M. Izzard, A. Mekkittikul, B. Ellersick, M. Horowitz, "The Tiny Tera: A Packet Switch Core", Hot Interconnects V, Stanford

University, August 1996.

[8] S. Chuang, A. Goel, N. McKeown, B. Prabhakar, "Matching Output Queueing with a Combined Input Output Queued Switch", IEEE Journal

Sel. Areas in Communications, Vol. 17, no. 6, pp. 1030-1039

[9] B. Prabhakar, N. McKeown "On the Speedup Required for Combined Input and Output Queued Switching", Automatica, Vol. 35, Dec. 1999,

pp.1909-1920.

[10] S. Iyer, A. Awadallah, N. McKeown "Analysis of a Packet Switch with Memories Running Slower than the Line Rate", IEEE Infocom

March 2000, Tel-Aviv, Israel.