Spectrogram Program


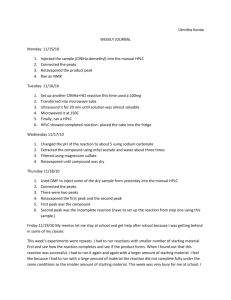

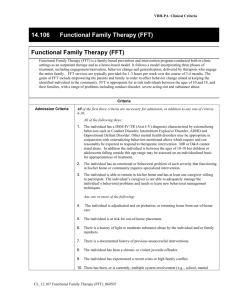

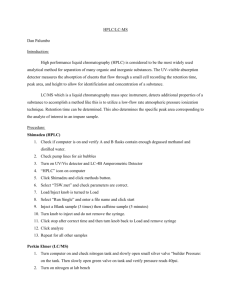

Development of a Voice Recognition Program
May 9, 2002
T2 Michael Kohanski, Anna Marie Lipski, Justin Tannir, Tony Yeung

ABSTRACT
The objective of the following experiment was to develop a digital voice recognition program, using LabView and MatLab 5.3R11, that can identify people uniquely by their voice. As seen from the demonstration, the programs developed equip us with the necessary tools to record, filter, and analyze different voice samples and compare them to an archived sample. Furthermore, any threshold value can be set, depending on the level of security wanted. The spectrogram program subtracts two fingerprints to obtain a relative difference; a large relative difference indicates little correlation between the voices. The results show that this program can accurately distinguish between a male and a female voice and, to a certain extent, differentiate between different male voices. The peak comparison program is able to differentiate between four speakers saying the word “open” on multiple occasions: it compares the multiple repeats of the word to stored fingerprints of the four speakers saying “open”. This program is a good foundation for allowing a user to identify a person by voice fingerprinting.

BACKGROUND
Voice recognition is not speaker-independent: it amplifies the idiosyncratic speech characteristics that individuate a person and suppresses the linguistic characteristics. The goal of this experiment was to identify, through comparative measures, the characteristic fingerprints of certain pitches and sounds. Speech recognition has been actively studied since the 1950s, but recent developments in computer and telecommunications technology have improved its capabilities. In a practical sense, speech recognition will solve problems, improve productivity, and change the way we run our lives.
Academically, speech recognition holds considerable promise, as well as challenges, for scientists and product developers in the years to come. Two applications of digital speech processing, pitch recognition and voice fingerprinting, were modeled in order to create a working program in MatLab 5.3R11 that could differentiate among different frequencies. Pitch recognition was modeled first, in order to create a program that could classify and distinguish between different notes and determine, by FFT analysis, the frequencies at which each note resonated. Building on this, we then developed the program further to analyze and determine the differences between human voices. The voice fingerprinting method was used to differentiate the voices of the participants. A voice fingerprint refers to the distinct manner in which air from an individual’s lungs passes through the vocal cords and resonates off the palate. Through noise filtering and Fast Fourier Transform (FFT) analysis, comprehensive comparisons can identify the owner of a voice sample. Graphical representations of the voiceprint took the form of a speech spectrogram: a representation of a spoken utterance with time on the abscissa (horizontal axis) and frequency on the ordinate (vertical axis). Voice recognition consists of two major tasks: feature extraction and pattern recognition. Feature extraction attempts to discover characteristics of the speech signal that are unique to the individual speaker. Pattern recognition refers to matching features so as to determine, within probabilistic limits, whether two sets of features come from the same individual or from different individuals (here, by peak-to-peak analysis of voices). Voices can be analyzed in either the time domain or the frequency domain. Features of a voice in the time domain are either evident in the raw signal or become more evident after deriving a secondary signal with particular properties.
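The sketch below illustrates the second case: it derives a secondary signal (a short-time energy envelope) from the raw waveform and reads the utterance duration and peak amplitude from it. It is a generic example, not one of the programs written for this experiment. The function names, the 441-sample frame (10 ms at the 44,100 Hz sampling rate used here) and the 10% relative-energy cutoff are all assumptions.

```python
import math


def energy_envelope(signal, frame_len):
    """Secondary signal: mean energy of each non-overlapping frame."""
    return [sum(s * s for s in signal[i:i + frame_len]) / frame_len
            for i in range(0, len(signal) - frame_len + 1, frame_len)]


def time_domain_features(signal, fs, frame_len=441, rel_threshold=0.1):
    """Estimate utterance duration (s) and peak amplitude from the envelope.

    Assumed heuristic: a frame belongs to the utterance when its energy
    exceeds rel_threshold times the largest frame energy.
    """
    peak_amplitude = max((abs(s) for s in signal), default=0.0)
    envelope = energy_envelope(signal, frame_len)
    if not envelope:
        return 0.0, peak_amplitude  # signal shorter than one frame
    cutoff = rel_threshold * max(envelope)
    active = [i for i, e in enumerate(envelope) if e > cutoff]
    if not active:
        return 0.0, peak_amplitude  # silence only
    duration = (active[-1] - active[0] + 1) * frame_len / fs
    return duration, peak_amplitude


# Usage: 0.2 s of a 200 Hz tone with 0.1 s of silence on each side.
fs = 44100
tone = [0.3 * math.sin(2 * math.pi * 200 * n / fs) for n in range(8820)]
signal = [0.0] * 4410 + tone + [0.0] * 4410
print(time_domain_features(signal, fs))
```

For this synthetic input the duration estimate is 0.2 s. The peak amplitude comes out just under the tone's nominal 0.3, because no sample falls exactly on a sine crest.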
The major time-domain parameters of interest are duration and amplitude. In the frequency domain, features of the voice are identified by spectral analysis. The FFT is the most commonly used technique for calculating the spectrum of a signal, and it was the method of choice in the following experiment. It measures the frequencies present in a given segment of a signal by decomposing the segment into its sinusoidal components. It also allows for pitch extraction and the identification of fundamental frequencies, which were essential components of the experiment. The areas of analysis were parameter extraction and distance measurement. Parameter extraction, as discussed above, consists of three steps:
● preprocessing an electrical signal to transform it into a usable digital form;
● applying algorithms to extract only speaker-related information from the signal;
● determining the quality of the extracted parameters.
The two parameters of choice were pitch and a frequency representation. Pitch allows a male voice to be distinguished from a female voice. The frequency representation describes the signal's frequency content in successive time frames, which is equivalent to a spectrogram in numerical form; this numerical spectrogram was computed with the FFT algorithm. The distance measurements determine how much two voices differ from each other. A threshold value can be chosen (any value desired, depending on the amount of security needed). If the distance between the unknown speaker and the known speaker is greater than the threshold, the unknown speaker is rejected.

THEORY & METHOD
Peak Analysis Program
The characteristic properties of one’s voice are a product of many parameters, including the dynamics of sound as it passes through the pharynx, the vibration of the larynx, the shape of the mouth, and how sound reverberates off the palate.
Because of this complexity, a voice has fingerprint qualities; that is, no two people’s voice patterns are exactly alike. FFT analysis maps the frequency fingerprint of a person’s voice, so that one can systematically determine whether another sample is or is not from the same subject. This program gathers the FFT fingerprint of the subject in question and compares it to four previously stored fingerprints to determine whether the subject’s voice matches one of the archived voices. The program is divided into five functions:
● wav_gather.m: the main function. It locates the subject’s file (*.wav) and checks whether the data are stereo. If so, it converts them to mono (i.e., keeps the input from only one channel). It then passes the data to wav_plot.m.
● wav_plot.m: sends the raw data to wav_filter.m to be filtered, then gathers the filtered data and plots the FFT, the spectral density, and the original waveform.
● wav_filter.m: filters the raw data with a high-pass, low-pass, bandpass, or bandstop filter, or applies no filter.
● peak_compare.m: sends the FFT to peak_finder.m to get the peaks, which are then returned and compared against the archived fingerprints.
● peak_finder.m: analyzes the filtered data and picks out peaks via a complex downward scan of the FFT, stopping at a preset threshold value.

Spectrogram Program
In addition to the frequency domain, analysis can also be performed in the time-frequency domain using the spectrogram function in MatLab. The spectrogram performs a short-time Fourier Transform (STFT) on the input signal in the time domain and transforms it into a plot of intensity against frequency and time. The STFT is based on the Fourier Transform, except that the input signal, x(t), is first multiplied by a shifted window function, w(t − τ). Equation 1 is the STFT:

$$X(\tau, f) = \int_{-\infty}^{\infty} x(t)\, w(t - \tau)\, e^{-j 2\pi f t}\, dt \qquad \text{(Eq. 1)}$$

Instead of performing a Fourier Transform on the whole signal, the signal is segmented into many sections and a Fourier Transform is performed on each windowed segment.
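Equation 1 can be sketched in discrete form as follows. This is a minimal illustration in Python, not the MatLab spectrogram function used in the project. The Hann window, the frame length, the hop size and the helper names are assumptions. A direct DFT keeps the example dependent only on the standard library; an FFT returns the same values faster.

```python
import cmath
import math


def hann(n_points):
    """Hann window w[n]; the window shape is an assumption, not from the report."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * n / (n_points - 1))
            for n in range(n_points)]


def dft(frame):
    """Direct O(N^2) discrete Fourier transform of one frame."""
    size = len(frame)
    return [sum(frame[n] * cmath.exp(-2j * math.pi * k * n / size) for n in range(size))
            for k in range(size)]


def stft_magnitude(signal, frame_len, hop):
    """Return |X[m][k]| for each frame m and bin k = 0 .. frame_len // 2.

    Frame m is x[m*hop + n] * w[n], the discrete counterpart of x(t) w(t - tau)
    in Eq. 1. Measuring time from the start of each frame changes only the
    phase of X, not its magnitude.
    """
    window = hann(frame_len)
    rows = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + n] * window[n] for n in range(frame_len)]
        spectrum = dft(frame)
        rows.append([abs(c) for c in spectrum[:frame_len // 2 + 1]])
    return rows


def dominant_frequencies(rows, fs, frame_len):
    """Frequency in Hz of the strongest bin in each frame (bin spacing fs / frame_len)."""
    return [max(range(len(row)), key=row.__getitem__) * fs / frame_len for row in rows]


# Usage: 0.1 s of a 440 Hz tone (the violin A string) at an assumed 8000 Hz rate.
fs = 8000
tone = [math.sin(2 * math.pi * 440 * n / fs) for n in range(800)]
rows = stft_magnitude(tone, frame_len=256, hop=128)
print(len(rows), dominant_frequencies(rows, fs, 256))
```

With these settings the sketch returns 5 frames. In each frame the strongest bin is at 437.5 Hz, the bin nearest 440 Hz when bins are spaced 8000/256 = 31.25 Hz apart. A steady tone therefore shows up as a horizontal band at a constant frequency across all frames.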
Figure 1 is a spectrogram of the A string (440 Hz) on a violin. The top panel shows the signal in a voltage-time plot and the bottom panel shows the spectrogram. Each point on the spectrogram indicates the intensity of a given frequency at a given time. Red pixels have the highest intensity and blue pixels the lowest.
Figure 1 Input signal in a voltage-time plot and in a spectrogram.
Since the spectrogram is essentially a “fingerprint” of a person’s voice, it shows features specific to the individual subject. For instance, the parallel bands in Figure 1 show the harmonics of the A note. By the same token, different subjects will produce different parallel bands, as well as other features such as delays and time shifts. In this project, the spectrograms of two different subjects are subtracted from each other, and the result is the relative difference in frequency intensity. The pair of subjects with the largest difference in frequency intensity are the two whose voices vary the most. Before subtraction, the two voices are aligned to obtain the best overlap between them. This is done by computing the cross-correlation between the two voices and finding the lag time that gives the best correlation coefficient.

The following parameters were selected for the peak compare program. Filter value is a normalized cutoff, where an input of 0.5 corresponds to 50% of the maximum frequency. Percent difference in peaks is the maximum allowed deviation in the total number of peaks between an archive and a test sound. It prevents false positives when the peaks of the test or of the archive are a subset of the other's. Maximum difference between peak frequencies is the maximum deviation along the frequency axis allowed between a peak in an archive and a peak in a test subject for the two to be considered the same peak.
In general, this is a direct function of the value used to define peak separation in the peak_finder.m function, which defaults to r/80, where r is the total number of data points in the FFT.

| Parameter | Value/setting used |
|---|---|
| filter type | high pass |
| filter value (normalized) | 0.2 |
| peak-compare | Yes |
| percent diff in peaks | 20 |
| maximum diff between peak frequencies | 100 |

Table 1 Parameter settings for the Peak Analysis programs

METHODS & MATERIALS
Materials
● 16-bit sound card
● Microphone
● LabView
● Tuning forks
● MatLab 5.3R11

Programs Created
● Wave Gather (wav_gath)
● Wave Filter (wav_filt)
● Wave Plot (wav_plot)
● Peak Finder (peak_find)
● Peak Compare (peak_compare)

Figure 2 Representation of the protocol.
The voice data collection protocol required each of the four participants to speak into the microphone while LabView was running. LabView data acquisition used a sampling rate of 44,100 Hz. Each of the four subjects spoke the chosen words, “subject” and “open,” into the microphone at different times. The data were saved in LabView, then re-opened, filtered, graphed, and analyzed in MatLab 5.3R11 by FFT analysis using the programs created for the experiment. The archived voice was compared to all other recorded voices. The overall method of data acquisition was as follows. The microphone transduced the sound wave created by a person’s speech into an analog electrical signal. The signal was then sampled and quantized, resulting in a digital representation of the analog signal.

Figure 3 Order of signal analysis (flowchart boxes: Wav_gather, wav_plot, Peak_comp, wave_filt, Peak_finder).
After signal collection, the analysis used the programs written for the experiment. The data collected in LabView are transferred to MatLab, where wave gather first loads the recorded signal.
Wave plot then passes the signal to wave filter and plots the FFT of the filtered signal. Finally, peak compare and peak finder analyze the signal against another signal to determine the owner of the voiceprint and whether the two signals match.

RESULTS
Peak Finder & Compare Program
Figure 4 is a raw wave file (voltage vs. time).
Figure 4 Raw signal in the time domain (plot title: m-open-normal2.wav Waveform; horizontal axis: time).
Table 2 is a sample of results from the peak compare program. Columns are the archived voice data; rows are the test samples.

| Test sample \ Archived voice data | Anna | Justin | Mike | Tony |
|---|---|---|---|---|
| Anna #2 | PASS | FAIL | FAIL | FAIL |
| Justin #2 | FAIL | PASS | PASS | FAIL |

Settings: deviation standard ±100; % peak difference 20.
Table 2 Sample of results from the peak compare program

Figure 5 shows the FFT of the word “open” spoken by Mike in trial #2. As labeled in the text box, the red markers indicate the peaks found in the FFT, which is plotted directly beneath them in blue. The red markers lie almost exactly on top of the peaks of the blue FFT. This shows that the peak finder program identifies the peaks of an FFT accurately.
Figure 5 An illustration of the red peaks identified by the peak finder program
Figure 6 shows the FFT of the word “open” spoken by Anna in trials #1 and #2. As labeled in the text box, red represents the peaks obtained from the FFT of trial #1 and blue is the FFT of trial #2. The red peaks overlap to a great extent with the blue peaks of the FFT directly beneath them.
Figure 6 The red peaks of Anna in trial #1 are compared to the blue peaks from trial #2
Figure 7 shows the FFT of the word “open” spoken by Anna in trial #2 and by Justin in trial #1. The red peaks from Justin’s FFT do not match Anna’s FFT peaks (in blue) as closely as in Figure 6.
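The report does not list the code of peak_finder.m or peak_compare.m. The following is therefore only a hypothetical reconstruction of the two rules described under Peak Analysis Program and Table 1, which produce PASS/FAIL entries like those in Table 2:
● a downward scan that accepts peaks above a threshold with a minimum separation of r/80 bins;
● a PASS/FAIL test using the percent difference in peak count and the maximum difference between peak frequencies.
The function names find_peaks and peaks_match, the greedy largest-first scan order, and measuring the percent difference relative to the archive's peak count are all assumptions.

```python
def find_peaks(magnitude, threshold, min_sep=None):
    """Hypothetical peak finder: a greedy downward scan of an FFT magnitude.

    Assumption: bins are visited from largest to smallest magnitude. A bin is
    accepted as a peak if it lies at least min_sep bins from every peak already
    accepted; the scan stops at the first bin whose magnitude is below threshold.
    """
    if min_sep is None:
        # Default separation of r/80 bins, as stated in the report.
        min_sep = max(1, len(magnitude) // 80)
    peaks = []
    for k in sorted(range(len(magnitude)), key=lambda i: magnitude[i], reverse=True):
        if magnitude[k] < threshold:
            break
        if all(abs(k - p) >= min_sep for p in peaks):
            peaks.append(k)
    return sorted(peaks)


def peaks_match(test, archive, max_pct_diff=20.0, max_freq_diff=100.0):
    """Hypothetical PASS/FAIL rule using the two Table 1 tolerances.

    Assumptions: the percent difference is taken relative to the archive's peak
    count, and every test peak must lie within max_freq_diff of some archive peak.
    """
    if not test or not archive:
        return False
    pct_diff = abs(len(test) - len(archive)) / len(archive) * 100.0
    if pct_diff > max_pct_diff:
        return False  # count check guards against one peak set being a subset of the other
    return all(min(abs(t - a) for a in archive) <= max_freq_diff for t in test)


# Usage: a synthetic 800-bin spectrum with three narrow triangular peaks.
spectrum = [0.0] * 800
for center, height in [(100, 10.0), (200, 6.0), (350, 4.0)]:
    for d in range(-3, 4):
        spectrum[center + d] = height * (1 - abs(d) / 4)

test_peaks = find_peaks(spectrum, threshold=2.0)  # min_sep defaults to 800 // 80 = 10
print(test_peaks)                                                            # [100, 200, 350]
print(peaks_match(test_peaks, [105, 198, 352], max_freq_diff=10))            # True
print(peaks_match(test_peaks, [100, 200, 350, 500, 600], max_freq_diff=10))  # False: counts differ by 40%
```

In the demo, peak positions are FFT bin indices and the tolerance is 10 bins. The report does not state the units of its value of 100, so the defaults simply copy Table 1's numbers. Requiring every test peak to find a nearby archive peak mirrors how a PASS in Table 2 corresponds to closely overlapping red and blue peaks.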
Figure 7 Red peaks from Justin are compared to blue peaks from Anna

Spectrogram Program
The relative difference in frequency intensity between two speakers is presented in Figure 8. A positive relative difference shows that the first subject (listed below each column) has higher frequency intensity than the second subject. However, the difference can also result from delays, shifts, or the pitch at which the word is voiced. The error bars are the standard deviations of the measurement.
Figure 8 Relative difference in frequency intensity between speakers (bar chart; vertical axis: relative difference in frequency intensity; horizontal axis: speaker pair, Tony-Tony, Justin-Tony, Mike-Tony, Anna-Tony).

ANALYSIS
FFT fingerprints for the word “open” are presented in Figures 5 through 7. Because the word is simple to pronounce and has a simple syllable structure, its FFT representations are correspondingly simple, which makes them well suited to graphical display. More complex words often contain many more peaks, and the resulting FFTs become increasingly difficult to analyze by eye. Furthermore, a slightly different inflection of a word will give rise to a different FFT. The adjustable parameters in the peak compare program (percent difference in peaks and maximum difference between peak frequencies [Table 1]) exist to compensate for slight deviations in voice between trials. They proved effective for at least two of the subjects. The other two subjects did not exhibit such similar FFT fingerprints between trials; possible causes range from the distance and angle of the microphone to constriction of the vocal passage. Particularly good results were found in the trials of subject “Anna”. Her second trial matches the first with good precision but produces no extraneous matches (Figure 6).
Subject “Justin” also demonstrates correspondence between the first and second trials, but he also matches subject “Mike”; examining the FFTs shows that the two voices are quite similar. This similarity can be explained by the simplicity of the word (i.e., the small number of peaks in the FFT) and by the fact that the program is still at a relatively early stage of development. The FFT fingerprinting in this experiment could also be extended in other directions, such as expressing voice matches as percentage values. Yet another application is on-the-fly analysis of subjects for voice authentication, which could be coupled with spectrogram analysis to strengthen security.
The relative difference in frequency intensity between speakers is plotted in Figure 8. The word “subject” was spoken by all speakers, and the average relative difference over ten trials is shown. The difference can be a combination of different voice patterns and different pitches at which the word was spoken. For example, the delay between “sub” and “ject” may differ between two speakers, or one speaker may say the whole word at a higher or lower pitch. The difference between Tony and himself was the smallest of the four pairs. The relative difference increased significantly for the other three pairs: Justin-Tony, Mike-Tony, and Anna-Tony. The comparison of “subject” between Anna and Tony showed the largest difference. This is reasonable, since it is the only female-male pair, and it is consistent with speech patterns in real life. It was also interesting that the program was able to differentiate between male speakers. It found the smallest difference between Tony and himself and a significantly larger relative difference between Tony and each of the other male speakers, Mike and Justin.
Thus, spectrogram subtraction is sensitive enough to differentiate the main speaker from the other male speakers. As discussed above, one disadvantage of the subtraction method is that it cannot distinguish differences in voice pattern from differences in the pitch of the word. An improvement to the present spectrogram analysis would therefore be to use other algorithms that can detect these differences separately. One possible method is to identify two areas of very high frequency intensity on a spectrogram and determine the change in time between these two points. This time interval can then be compared with the corresponding interval on other spectrograms. A method like this would be able to distinguish speakers by their speech patterns. With this improvement, the voice recognition program should be able to differentiate speakers of the same sex much more accurately, based on each speaker's distinctive way of speaking.

CONCLUSION
As seen from the demonstration, the programs developed equip us with the necessary tools to record, filter, and analyze different voice samples and compare them to an archived sample. Furthermore, any threshold value can be set, depending on the level of security wanted. The spectrogram program subtracts two fingerprints to obtain a relative difference; a large relative difference indicates little correlation between the voices. The results show that this program can accurately distinguish between a male and a female voice and, to a certain extent, differentiate between different male voices. The peak comparison program is able to differentiate between four speakers saying the word “open” on multiple occasions: it compares the multiple repeats of the word to stored fingerprints of the four speakers saying “open”. This program is a good foundation for allowing a user to identify a person by voice fingerprinting.