Doc - Andre Trudel - Acadia University

advertisement

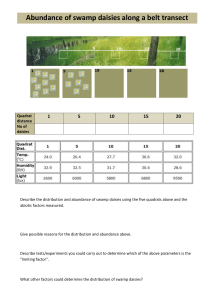

HOW BIG IS THE WORLD WIDE WEB? Darrell Rhodenizer & André Trudel Jodrey School of Computer Science Acadia University Wolf Ville, Nova Scotia, Canada, B0P 1X0 andre.trudel@acadiau.ca ABSTRACT The World Wide Web is one of the most popular and quickly growing aspects of the Internet. Ways in which computer scientists attempt to estimate its size vary from making educated guesses, to performing extensive analyses on search engine databases. We present a new way of measuring the size of the World Wide Web using “Quadrat Counts”, a technique used by biologists for population sampling. KEYWORDS WWW size estimation using biological techniques. 1. INTRODUCTION How much of the Internet does the World Wide Web (WWW or simply Web) actually populate? Are web servers abundant throughout the ‘Net, or is the vastness of cyber space still a relatively empty void? Unfortunately, due to the size and dynamic nature of the WWW, it is infeasible to conduct an exhaustive census. Instead, recent studies have attempted to estimate its size using a variety of techniques. We take an interdisciplinary approach to the problem of estimating the size of the WWW by adopting a technique utilized by biologists who conduct population sampling using quadrat counts – a new, and intuitive way of looking at the problem. There are many ways in which the Web can be measured. Other studies have treated it as the total number of web pages, or disk space used to store the web pages. We count the number of web servers available on port 80 across the Net. 2. PREVIOUS STUDIES Gone are the days when we had only to count the computers hard wired into the ARPANET to know the exact number of hosts on the Internet. Now it is a difficult task to estimate even a relatively small population, such as the number of computers running web servers, let alone to guess at the number of computers connected to the ‘Net in total. There have been numerous studies and surveys conducted which try to estimate the population of the Web, and we present a few of the approaches taken. Search engines are a logical tool to use when trying to estimate the size of the web. A search engine’s index size can be used as a lower bound estimate of the Web’s size. Steve Lawrence and C. Lee Giles [Giles, Lawrence, 1999a] [Giles, Lawrence, 1999b] [Giles, Lawrence, 1998] used the overlap between search engines to estimate N, the size of the “Indexable Web”. The Indexable Web is considered to be those pages on the Web, which are available for search engines to find and catalog. N na n0 nb Figure 1. Search Engine Coverage [Giles, Lawrence, 1998] Let a and b be two search engines that search the Web independently. As illustrated in Figure 1, n a is the number of results returned by Search Engine a, while nb is the number of results returned by Search Engine b. The value n0 is the area of overlap where the two search engines return the same results. Lawrence and Giles use n0 / nb as an estimate of the fraction of the Indexable Web, p a, covered by Search Engine a. The size of this Indexable Web could then be estimated by dividing s a by pa, where sa was the total number of pages indexed by search engine a. The fact that this study was limited to the “Indexable Web” poses a problem. Search engine results are not random and are often skewed since pages can be submitted to them, rather than just being found by a spider program. Search engines will tend to favour more popular pages as a result of this. In a scientific study on the size of the Web we would prefer truly random samples, rather than those already chosen for being popular. Search engines also have the disadvantage of not being able to gain access to large amounts of pages, such as those which may require form input in order to be viewed. Lawrence and Giles’ technique is equivalent to Peterson’s method for population estimation. Peterson’s method involves capturing individuals, marking them, and then releasing them. Individuals are then recaptured and checked for marks. The formula used for population estimation is identical to the one used by Lawrence and Giles. In the latter work, the pages indexed by search engine a are the marked individuals. The documents returned by engine b are the individuals found during the re-capture phase. Krebs [Krebs, 1999] points out several problems with Peterson’s method. The first is that it tends to overestimate the actual population; especially with small samples. Also, random sampling is critical. As described above, this is not the case with search engines. It is possible that the “Indexable Web” could be similarly mapped using the databases of Domain Name Servers, where each domain entry could be considered a web server. Unfortunately this approach also has the problem of not considering those sites that may not want to be found (and so have not registered a domain name), as well as virtual domains, which do not have real entries in the DNS database. 3. WHAT WE ARE MEASURING IP Addresses are 32 bit numbers, each of which corresponds to a network interface somewhere on the Internet. They are broken up into four octets, which are commonly represented in a decimal notation as: aaa.bbb.ccc.ddd. Each octet contains eight bits of binary information and can thus be represented as a decimal number between 0 and 255. Because the addresses have 4 octets, this scheme is sometimes referred to as IPv4. This scheme gives us 4,294,967,296 possible addresses. Not all of these addresses are useable, however, because there are several ranges of addresses that are reserved for special purposes. Moreover, the addresses are divided into network classes, and additional addresses may be reserved depending on what class of network the address belongs to. The network class is determined by the first octet. Addresses starting with 0, 127, and anything over 223 are all reserved for special purposes. If the first octet is a number between 1 and 126, then the address belongs to a Class A network. If the first octet of the address is between 128 and 191, then it belongs to a Class B network. Finally, if the first octet of the address lies between 192 and 223, then it belongs to a Class C network. In a Class A network the first octet (first 8 bits) of the address is used to identify the network, while the remaining three octets (last 24 bits) are used to identify the host. We therefore have 126 Class A networks. Each of these networks can have up to 16,777,216 hosts. Likewise, in a Class B network the first two octets (first 16 bits) of the address are used to identify the network and the remaining two octets (last 16 bits) identify the host, and in a Class C network, the first three octets (first 24 bits) of the address identify the network, while the remaining octet (last 8 bits) identifies the host. For example, the IP address of Acadia’s Dragon server is 131.162.200.56. 131 corresponds to a Class B network, the first two octets 131.162 identify Acadia’s network, while the remaining octets, 200.56, identify the host (i.e. Dragon). There are 16,384 Class B networks, each with 65,536 hosts. For Class C, there are 2,097,152 networks, each with 256 hosts. As mentioned above, certain addresses are reserved. The first octet cannot contain all 0’s or 1’s (0 or 255 in decimal notation). Nor can it be 127 as this is used for loopback. Also, the first octet cannot be a decimal value higher than 223. Values between 224 and 255 consist of the Class D and E networks, but these are experimental and are not currently useable. Aside from the restrictions on the first octet, the host address cannot contain all 0’s, or all 1’s (0 or 255 in decimal notation). The octets corresponding to the host address vary depending on the class of the network. In addition to the above restrictions, the ranges 10.(0 255).(0 - 255).(0 - 255), 172.(16 - 31).(0 - 255).(0 - 255), and 192.168.(0 - 255).(0 - 255) are reserved for private networks and are not available for routing over the Internet. We are therefore left with 3,702,423,846 unique available IP addresses. 4. Population Sampling by Quadrats A straightforward method of determining an organism’s population in a certain area is to count it. If the population we are attempting to measure is too large to count in total then we may consider counting the organisms in subsections of the total area and using that to help us estimate a total value. In the real world this can be done using quadrat counts. A quadrat is a fixed size area that is surveyed. By surveying multiple quadrats and using a formula to estimate distribution probabilities, we can estimate the total population size. In our case, the total area is the Internet, a quadrat is a set of IP addresses, and the population we wish to estimate is web servers. There are only two basic requirements to be met when using quadrat count sampling techniques. First, the total area we are considering is known. The total size of the ‘Net was previously calculated to be 3,702,423,846 excluding reserved address values and 4,294,967,296 including reserved address values – so that quantity is known. Second, it is necessary that the population we are sampling remains relatively immobile during the sampling period. This is also mostly true. Although we certainly cannot confirm it for every web server on the Net, it is common for web servers to remain at a fixed IP address in order to make best use of the DNS service (Domain Name Servers can take a long time to propagate a change through all of their system, so for a web server to be persistently accessible using a domain name, it needs to have a static IP address). We need to decide on the size of a quadrat. There are formulas available to help determine the optimal size and shape of the quadrats based on obstacles such as distance and cost. Since these obstacles are not applicable to the Internet, we arbitrarily decided to use quadrats that consist of 256 unique IP addresses. Originally, the quadrat scanning software was written to scan subnets of the form a.b.c.(0 - 255), where a, b, and c were randomly generated numbers between 0 and 255. This seemed to be an intuitive solution, but quadrats are ideally long and narrow, so that they are able to provide a larger cross section of the environment, while lacking the homogeneity of a more localized area. IP ranges of the form a.b.c.(0 255), all being in the same subnet seemed to violate that principle since commonly they would be owned by a single person or group and be subject to the homogeneity that we were trying to avoid. In order to correct this, the software was altered to scan blocks of IP addresses of the form (0 - 255).a.b.c, where a, b, and c are still randomly generated numbers between 0 and 255, but the quadrats would span entire networks, virtually ensuring that no two addresses in a single quadrat would be owned by the same person and also that no two addresses would belong to the same subnet. Changing the shape of the quadrats had the added benefit of making the scanning software seem less like an attack on a given subnet. If a network administrator were to see 256 unsolicited probes on his/her network, even if they were only on port 80 (the standard HTTP port), he/she could become concerned. This was something we wished to avoid, and the new quadrat shape, in addition to offering a better cross section and eliminating the homogeneity concern, ensured that an individual subnet would only receive one port 80 probe. Unfortunately, by changing the quadrat shape we introduced a new problem. Since the quadrat increments across the first octet of the address n.a.b.c, any reserved addresses which are identified solely by the first octet (as is the case with the entire Class D and Class E networks) will appear within every quadrat in the same relative position. This is not particularly troubling since the distribution of web servers can still be considered random outside of these restricted areas. They can never exist, however, in those locations where the address is rendered invalid solely by the first octet. We allowed this for our purposes, and although we recorded these addresses as invalid, in our final estimate they were treated as unrestricted addresses that simply did not have a web server. Alternatively, we could have omitted these addresses from our results entirely. IP addresses are uniquely suited to quadrat counts. There is no standard address at which to place a web server within a subnet, so occurrences of a web server are random. Moreover, there is no correlation between the subnets. One could infer that the concept of ‘nearness’ exists within a subnet, in as much as all the addresses will often be owned by a common entity, but there are no similar concepts between the different subnets. By taking a vertical cross section as our quadrat rather than a horizontal one, we can eliminate virtually all homogeneity in our sample and we are left with a truly random distribution. Although there may be some relationship within the addresses of a subnet there is no relationship between the subnets, so distribution in our quadrat is totally random. By incrementing across the first octet, we are taking the widest possible leap across the IP addressing system (as there are 255 * 255 = 65025 subnets between a.b.c.(0 - 255) and (a + 1).b.c.(0 - 255). When sampling in the real world, there can be cases of biases or organisms can be missed altogether. Biases occur when an individual is observed to be partially within the quadrat being surveyed, the biologist would naturally be inclined to include it in the results in order to not ‘waste’ data. This can lead to greedy estimates that may overestimate total populations. With IP addresses there is no ‘half way’, and we don’t need to worry about a web server being only partially within the quadrat we are studying. An address either lies inside the specified range, or it does not and will not be considered. We have crisp boundaries between quadrats. The other concern is the possibility of missing an individual within the area being studied. This case can occur while sampling IP addresses. Using quadrat-scanning software however, it is less a case of “missing” a web server and more a case of the web server not replying to our attempt at communication in a timely manner. Nevertheless, with a reliable and fast ‘Net connection and a fair timeout value, this small chance of error can be minimized. Other individuals we may miss, such as web servers that are not listening for requests, or those that are being blocked by firewalls, are not considered in this study as they are not publicly accessible. Since they cannot be detected or utilized by the majority of the Net’s users, we do not treat them as part of the true World Wide Web. Since the distribution of web servers across the Net is random, we can use the “Poisson Distribution Method” to estimate the probability of encountering a given number of web servers within a quadrat. This method is simple because the only parameter we are concerned with is the mean. Using the Poisson method we can compute the probability of finding 0, 1, 2, …, n individuals within a quadrat, and we can consider individuals to be either web servers, non-web servers, or invalid addresses. We use the Poisson distribution Px = e-μ * (μ x / x!) where: Px = the probability of observing x organisms in a quadrat. x = an integer counter (0, 1, 2, 3…) μ = the mean of the distribution e = the base of natural logarithms (2.71828…) The Poisson distribution assumes that all quadrats are equally likely to contain individuals, which is why a random distribution is necessary. It is through its near perfect randomness and the ease with which it can be surveyed that the ‘Net distinguishes itself as being ideal for this form of population sampling. 5. Implementation In order to survey a great number of quadrats in a short amount of time, we decided that a computer program would conduct the actual surveying, store the results, and analyze them dynamically. All programs were written in Perl and most output is in the form of HTML files which allows the programs to be executed, and the results to be viewed through a web-based front end (shown in figure 2). This front end displays a list of scanned quadrats on the left, from which one can view the detailed results for a given quadrat. It also prints out the total number of quadrats it has scanned, the total number of addresses within those quadrats, and the total number of open, closed, and invalid addresses it has encountered. Inside another table it prints dynamically calculated values, such as the estimated number of open, closed and invalid addresses on the entire Net as well as a bar graph displaying the probabilities of encountering 0, 1, 2, 3 or 4 web servers within a quadrat. At the bottom of the page are links for starting new instances of the quadrat-scanning program and for checking the integrity of the currently stored quadrat counts. Figure 2. The web-based front end 6. Results The quadrat-scanning program was allowed to complete 100 runs for a total of 100 quadrats, or 25600 total IP addresses. Although this is a large number, it is still extremely small relative to the entire ‘Net, and is in fact only approximately 0.0006% of the total number of possible IP addresses available on the Internet. Of these 25600 addresses, 111 (approximately 0.43359375%) allowed us to form a connection on port 80, which means there is a webserver running at that address. 21980 (approximately 85.859375%) of those addresses did not reply to a connection request on port 80, implying that there were either no web servers at the interfaces identified by those addresses, or no interfaces at those addresses at all. Additionally, 3509 (approximately 13.70703125%) addresses were identified as being invalid by the rules governing reserved addresses. The mean number of web servers found per quadrat was 1.11. By multiplying this by the total number of possible quadrats (256 * 256 *256 = 16,777,216) we get an estimate of approximately 18,622,710 web servers across the entire Internet. Meanwhile, the mean number of non-web servers found per quadrat was 219.8. This value yields an estimate of approximately 3687632077 non web-servers across the entire Internet. Finally, the mean number of invalid addresses found per quadrat was 35.09, yielding an estimate of 588712509 invalid addresses across the entire Internet. The number of estimated invalid addresses (588,712,509) was compared to the known value of 592,543,450. The difference was taken to be 3830941, which was then calculated as a percentage of the total ‘Net – approximately 0.089%. The results computed also included the Poisson distribution estimate for the total number of web servers on the ‘Net. By using a linear spline to estimate the values between our actual points (where x values are the number of web servers we may find in a quadrat, and y values are the probability that the number will occur), we are able to plot a graph showing the likelihood of discovering a given number of individuals within a single quadrat. The probability curve for discovering a specified number of web servers within a quadrat is illustrated in Figure 3. Figure 3. Probability curve for the number of web servers within a quadrat This curve peaks around the values 0, 1, and 2, leading us to conclude that we have a high probability of discovering 0, 1, or 2 web servers in a quadrat. After that, the probabilities drop to zero quickly, indicating that it is highly unlikely to find more than 3 or 4 web servers within a single quadrat. Although the numbers were too large to deal with using Perl, we are also able to compute probability curves for both the likelihood of finding non-web servers and the likelihood of finding invalid addresses within a quadrat by using Maple. Note that we must be careful in what we infer from these results, particularly with the invalid addresses, as they are not truly random, and so are not ideal for use with the Poisson distribution method. The value of approximately 18.5 million web servers seems reasonable given the rapid growth of the Internet in recent years. Of course, this does not take into account that many servers do not actually have any content behind them, or that some servers may in fact be masquerading as multiple servers through virtual hosts or the use of additional ports. 7. Conclusion Although only 100 out of a possible 16,777,216 quadrats were scanned, the quadrat counting method of estimating the size of the World Wide Web appears reasonable. An estimate of 18.5 million web servers seems neither too high, nor too low and is probably as valid, if not better, an estimate than any previously devised method could provide. By repeating this study after given time intervals, we would be able to make further estimates on the growth of the Web relative to time, and by increasing the level of information gathered by our software, we would be able to speculate on the distribution of server types across the Web. Testing to determine how well the Poisson distribution fit our data, and to establish confidence limits (to determine how many quadrats will provide a good estimate) would also increase the validity of the study. If future research were to be conducted in this area it may be beneficial to change the nature of the quadrats yet again. Originally, our problem was that the quadrats we had chosen were of the form a.b.c.(0 255). This allowed a high level of homogeneity within the quadrats, and it was possible to stumble upon a server farm subnet as a quadrat (giving us an extremely abundant quadrat), while another quadrat could be a subnet composed entirely of unused addresses (giving us an extremely sparse quadrat). Additionally, quadrats of this “shape” meant that all of our probes would be received by the same subnet and could be interpreted as an attack by a zealous System Administrator. To counteract these problems we changed the quadrats to be of the form (0 - 255).a.b.c. This eliminated concerns engendered by homogeneity and the problem of sending 256 unsolicited port 80 requests to a single subnet. But it also introduced a new problem. Since we were iterating through the first octet to form our quadrat, any reserved IP addresses determined by the first octet (such as when the first octet is 0, or greater than 223) would appear in all of our quadrats. This is a problem because it detracts from the random nature of our web server distribution (since a web server can never be found within these ranges). One possible solution to this situation is to iterate along the second or third octet (quadrat addresses of the form a.(0 - 255).b.c and a.b.(0-255).c respectively) rather than the first. The second octet is a better choice than the third, since the third would still yield homogenous quadrats in some Class A networks. By defining our quadrat in terms of the second octet, we retain our lack of heterogeneity, as well as prevent ourselves from appearing to launch an attack against a subnet. We also prevent invalid addresses from appearing in the same locations in all of our quadrats. Unfortunately, this method still has problems. Instead of having the reserved addresses clumped together in the same positions in all quadrats, we have quadrats with either an extremely low number of invalid addresses or which are comprised of entirely invalid addresses. This is because the first octet is now fixed throughout the quadrat, and if it determines the address to be invalid, then all addresses within that quadrat will be invalid. A better solution would be to continue with our current scheme, but simply discount all addresses where the first octet renders the address invalid. Thus, our quadrats would be 32 values smaller. Addresses beginning with 0, 10, and 224 - 255 would be eliminated from our calculations. We would then need to compensate for this in our final estimations by adding the removed values back to the total estimates. We would still have invalid addresses within our quadrats, but they would not appear with the frequency and relative stability that they do in our current quadrats. Another way the results could be improved would be to increase the timeout value when attempting to establish a connection to port 80. We used a timeout value of 5 seconds, and if a web server had not replied within that time then we would not consider it in our results. To be more precise and certain that virtually all web servers are counted, a higher time-out value should be used – perhaps in the range of 60 seconds. Finally, it should be pointed out that although being able to estimate the number of web servers on the Internet is one way of estimating the total size of the Web, it does not take other factors, such as the content of those servers, into account. It is also possible that there are a sizeable number of web servers running on ports other than 80, but these were also not identified by our software. The scanning software would also be rendered incapable of detecting a web server on any machine behind a firewall that was blocking port 80. As a result of these facts our estimate is likely to be a lower bound on the actual web server population of the Internet. In the worst case, the ipasat.pl program will take 5 seconds per address to determine whether or not that address is open, closed or invalid. If we multiply that by 256 (the number of addresses within a quadrat), the longest it can take to complete a run through a quadrat is 1275 seconds, or a little more than 21 minutes. Of course, it never actually takes this long in its current form because 32 of those addresses are certain to be invalid and are identified almost instantly. Therefore, if we were to run this program continuously to scan the total number of possible quadrats (256 * 256 * 256 = 16777216) it would take 21390950400 seconds, or approximately 678 years. With a higher time out value this would take even longer. But what if, however, we had an entire server farm running this program and gathering results? What if this program could query other IP addresses while waiting for another to reply? And what if each server could run multiple copies of ipasat.pl day and night? It may be that we could actually conduct a census on the entire Internet in a reasonable amount of time, and not have to do any estimation at all! The ability to estimate the size of the Web using quadrat counts will become even more important in the next few years, when the new IPv6 protocol is rolled out to replace the increasingly inadequate IPv4. Despite the apparent vastness of the Internet, there is a growing shortage of available IP addresses, primarily due to the large number of non-computers now using IP addresses, such as cell phones and Internet appliances. IPv6 is much the same as the current IPv4 protocol, but uses 6 octets instead of the traditional 4. These additional 2 octets give us an extra 281,470,681,743,360 addresses, for a total of 281,474,976,710,656 possible addresses available under IPv6 (including reserved addresses). For comparison’s sake, using ipasat.pl to scan every possible quadrat in the IPv6 format would take us approximately 44 million years, rather than the 678 years it would take under the current format. This means the ability to estimate the size of the Web using smaller sample portions (such as quadrats) will become more and more important as the Internet becomes too large to feasibly quantify it in any other way. ACKNOWLEDGEMENT The second author is supported by an NSERC research grant. REFERENCES Adamic, L. and Huberman, B., 2001. “The Web’s Hidden Order” Communications of the ACM, September 2001/Vol. 44, No. 9. Berners-Lee, T., 2000. “Weaving the Web” HarperCollins. Giles, C.L. and Lawrence, S., 1998. “Searching the World Wide Web” Science Magazine, April 3, 1998 Volume 280. Giles, C.L. and Lawrence, S., 1999a. “Searching the Web: General and Scientific Information Access” IEEE Communications Magazine, January. Giles, C.L. and Lawrence, S., 1999b. “Accessibility of Information on the Web” Nature Science Journal, July 8, 1999 Volume 400. Hildrum, J., 1999. “Jon Hildrum’s IP Addressing Page” Web Site, http://www.hildrum.com/IPAddress.htm. Krebs, C., 1999. “Ecological Methodology 2 nd Edition” Addison-Welsey Educational Publishers, Inc. Moschovitis, C, et al, 1999. “History of the Internet” ABC-CLIO, Inc.