Compilers, and Compiler Optimization


Instruction Sets

Instruction set architectures fall into four classes:

Stack

Accumulator

Register-Memory

Register-Register

As an example, consider adding two numbers from memory and storing the result back in memory.

On a stack-based machine only the operands at the top of the stack can be accessed. Typically the top two elements are operated on and popped off the stack, and the result is then pushed back on.

PUSH A

PUSH B

ADD

POP C
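A minimal sketch in C of how a stack machine might execute the four instructions above. The opcode names, the `Instr` struct and the 8-entry stack are illustrative assumptions, not a real instruction set. Memory is modelled as an array of ints, with A, B and C at hypothetical addresses 0, 1 and 2.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical opcodes for a toy stack machine (not a real ISA). */
typedef enum { PUSH, ADD, POP } Op;

typedef struct {
    Op op;
    int addr;   /* memory address used by PUSH and POP; ignored by ADD */
} Instr;

/* Run a program against memory `mem`, using a small operand stack. */
void run_stack_machine(const Instr *prog, size_t n, int *mem) {
    int stack[8];
    int sp = 0;                     /* number of items on the stack */
    for (size_t i = 0; i < n; i++) {
        switch (prog[i].op) {
        case PUSH:                  /* copy a memory word onto the top of the stack */
            assert(sp < 8);
            stack[sp++] = mem[prog[i].addr];
            break;
        case ADD:                   /* pop the top two items, push their sum */
            assert(sp >= 2);
            stack[sp - 2] = stack[sp - 2] + stack[sp - 1];
            sp--;
            break;
        case POP:                   /* pop the top of the stack into memory */
            assert(sp >= 1);
            mem[prog[i].addr] = stack[--sp];
            break;
        }
    }
}
```

For example, with `mem = {5, 7, 0}` the program `{{PUSH, 0}, {PUSH, 1}, {ADD, 0}, {POP, 2}}` leaves 12 in `mem[2]`. Note that the ADD instruction names no operands at all: they are implied by the stack.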

This is neat: even the most complex mathematical calculations can be performed using only the top two locations on the stack. The Intel floating-point co-processor (the x87) uses an 8-element stack in this way.

For example, to compute E=(A*B)+(C*D):

fld A     ; load A
fld B     ; load B
fmul      ; multiply A*B
fld C     ; load C
fld D     ; load D
fmul      ; multiply C*D
fadd      ; A*B+C*D at the top
fstp E    ; store the result in E and pop it off
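The sketch below models an 8-entry register stack in C to show that although every arithmetic operation touches only the top two entries, the stack still has to hold intermediate results. The helper names `fp_ld`, `fp_mul`, `fp_add` and `fp_stp` (store and pop) are hypothetical stand-ins for the x87 instructions, and the model ignores the real x87's rounding, tag bits and exceptions.

```c
#include <assert.h>

/* A toy model of an 8-entry floating-point register stack, loosely in the
   spirit of the x87; names and behaviour are simplified assumptions. */
#define STACK_SIZE 8

typedef struct {
    double reg[STACK_SIZE];
    int depth;       /* items currently on the stack */
    int max_depth;   /* deepest the stack has been */
} FpStack;

static void fp_ld(FpStack *s, double v) {      /* push a value */
    assert(s->depth < STACK_SIZE);             /* a ninth push would overflow */
    s->reg[s->depth++] = v;
    if (s->depth > s->max_depth) s->max_depth = s->depth;
}

static void fp_mul(FpStack *s) {               /* replace top two with product */
    assert(s->depth >= 2);
    s->reg[s->depth - 2] *= s->reg[s->depth - 1];
    s->depth--;
}

static void fp_add(FpStack *s) {               /* replace top two with sum */
    assert(s->depth >= 2);
    s->reg[s->depth - 2] += s->reg[s->depth - 1];
    s->depth--;
}

static double fp_stp(FpStack *s) {             /* pop the top value off */
    assert(s->depth >= 1);
    return s->reg[--s->depth];
}

/* E = (A*B) + (C*D), following the instruction sequence in the text. */
double eval_e(double a, double b, double c, double d, int *max_depth) {
    FpStack s = {{0}, 0, 0};
    fp_ld(&s, a); fp_ld(&s, b); fp_mul(&s);
    fp_ld(&s, c); fp_ld(&s, d); fp_mul(&s);
    fp_add(&s);
    double e = fp_stp(&s);
    *max_depth = s.max_depth;
    return e;
}
```

Running `eval_e(2, 3, 4, 5, &depth)` returns 26 with `depth` equal to 3: the product A*B waits on the stack while C and D are loaded.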

The very earliest machines were accumulator-based, with basically only one register: the accumulator. All arithmetic was done between memory and the accumulator, and the result always ended up in the accumulator.

Load  A
Add   B
Store C
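A minimal C sketch of an accumulator machine running the three instructions above, assuming memory is an int array with A, B and C at hypothetical addresses 0, 1 and 2. It also counts data-memory accesses, since every instruction on such a machine has to touch memory.

```c
#include <assert.h>

/* Toy accumulator machine: one register, every operation pairs it with
   memory. Opcode names are illustrative, not a real ISA. */
typedef enum { LOAD, ADD, STORE } AccOp;

typedef struct {
    AccOp op;
    int addr;
} AccInstr;

/* Runs the program and returns the number of data-memory accesses made. */
int run_accumulator(const AccInstr *prog, int n, int *mem) {
    int acc = 0;
    int mem_accesses = 0;
    for (int i = 0; i < n; i++) {
        switch (prog[i].op) {
        case LOAD:  acc = mem[prog[i].addr];  break;   /* acc <- mem       */
        case ADD:   acc += mem[prog[i].addr]; break;   /* acc <- acc + mem */
        case STORE: mem[prog[i].addr] = acc;  break;   /* mem <- acc       */
        }
        mem_accesses++;   /* each of these instructions touches memory once */
    }
    return mem_accesses;
}
```

With `mem = {5, 7, 0}`, running `{{LOAD, 0}, {ADD, 1}, {STORE, 2}}` leaves 12 in `mem[2]` and reports 3 memory accesses for 3 instructions.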

Both stack- and accumulator-based architectures are now virtually extinct. They generate too much memory traffic and make compiler optimisation difficult. It’s quicker to keep program variables in registers if at all possible, and the more registers the better.

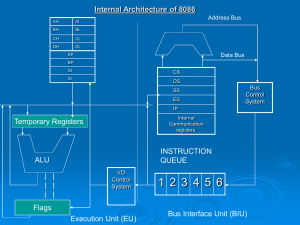

All new processors are GPR (General Purpose Register) machines. The 8086/Pentium has too few registers, and many of them are not truly general purpose. The ARM has 13 general-purpose registers (r0 to r12); 32 general-purpose registers would be common.

Optimizing compilers like lots of registers.

The 8086 allows most instructions to access memory (a register-memory architecture):

mov ax,[bx]

add ax,[bx+2]

mov [bx+4],ax

However, most modern processors are register-register, more commonly known as load-store architectures:

LDR r1,A
LDR r2,B
ADD r3,r2,r1
STR r3,C

Only the special load and store instructions can access memory. All other ALU operations act only on registers.

Compilers love this kind of architecture!

Remember that every instruction needs to be “encoded” in binary, ideally in a way that makes it easy for the CPU to decode it and determine exactly what the instruction requires to be done.

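For example the 8086 instruction

add al,3

encodes as two bytes: 00000100 00000011

The first is the opcode, which identifies the instruction (add an 8-bit constant to al). The second is simply the binary for 3.

However

add ax,3547

encodes as three bytes: 00000101 11011011 00001101 (05 DB 0D in hex). Here the opcode means "add a 16-bit constant to ax", and the constant 3547 (0DDB in hex) follows it, low byte first.

Obviously the number 3 or 3547 must appear in the instruction somewhere.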
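To make the byte layout concrete, the following C sketch builds the two encodings by hand. It covers only two opcodes, 04h (add an 8-bit constant to al) and 05h (add a 16-bit constant to ax, in 16-bit code), and is not a general assembler; the function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* "add al,imm8": opcode 04h followed by the 8-bit constant. */
size_t encode_add_al_imm8(uint8_t imm, uint8_t out[2]) {
    out[0] = 0x04;      /* opcode: ADD AL, imm8 */
    out[1] = imm;       /* the constant itself */
    return 2;           /* bytes written */
}

/* "add ax,imm16": opcode 05h followed by the 16-bit constant. */
size_t encode_add_ax_imm16(uint16_t imm, uint8_t out[3]) {
    out[0] = 0x05;                   /* opcode: ADD AX, imm16 */
    out[1] = (uint8_t)(imm & 0xFF);  /* low byte first (little-endian) */
    out[2] = (uint8_t)(imm >> 8);    /* then the high byte */
    return 3;                        /* bytes written */
}
```

Encoding 3 with the first function gives `04 03`; encoding 3547 with the second gives `05 DB 0D`. The larger constant costs one extra byte.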

Here's another one:

cmp fred[ebp],20

This encodes as five bytes (in hex): 83 3E FF A4 14

The 83 3E part identifies it as the "compare using ebp" instruction. The FF A4 is the offset to be added to ebp to find the address of "fred". This is a negative number (can you tell why?). The 14 is simply hex for 20.
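Reading FF A4 as a single 16-bit two's-complement offset, as the text does, the C sketch below converts the raw field to a signed value. The function name `signed16` is hypothetical; the conversion avoids casting to `int16_t` so its behaviour does not depend on the compiler.

```c
#include <assert.h>
#include <stdint.h>

/* Interpret a raw 16-bit field as a two's-complement signed value:
   patterns with the top bit set represent raw - 65536. */
int32_t signed16(uint16_t raw) {
    return raw >= 0x8000 ? (int32_t)raw - 0x10000 : (int32_t)raw;
}
```

`signed16(0xFFA4)` gives -92, so under this reading fred lies 92 bytes below the address held in ebp, while the immediate byte 14 (hex) is just 20.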

The 8086 uses variable-length encoding. The number of bytes used depends on the complexity of the instruction, and the need to include constants or memory addresses increases the code size.

An important design goal is good code density. Encoding should ideally not be wasteful, and variable-length encoding is good in this regard.

However, most modern processors are load-store and use the same fixed-size codeword for every instruction, typically 32 bits. Since memory addresses do not appear outside of load-store instructions, more bits can be used to encode more registers, so three-operand instructions are commonly used.

add ax,bx       ; 8086: ax = ax + bx (two operands)
add r1,r2,r3    ; ARM:  r1 = r2 + r3 (three operands)

Memory addressing

Memory in a 32-bit PC is normally accessed in 32-bit chunks. However, memory is normally byte-addressed. So:

mov eax,[200]   ; good. Reads 1 word from 200-203
mov eax,[201]   ; bad! Misaligned. Must read 2 words

Since the architecture always reads in 32-bit chunks, in the misaligned case 32 bits must be read from both 200 and 204, and the result constructed from the two. This is much slower.
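A small C sketch of the arithmetic, assuming memory is read in aligned 4-byte words as described: it counts how many such words an access overlaps. The function name is hypothetical.

```c
#include <assert.h>

/* Number of aligned 4-byte words overlapped by an access of `size` bytes
   (size >= 1) starting at byte address `addr`. */
unsigned words_touched(unsigned addr, unsigned size) {
    unsigned first = addr / 4;               /* word holding the first byte */
    unsigned last  = (addr + size - 1) / 4;  /* word holding the last byte  */
    return last - first + 1;
}
```

`words_touched(200, 4)` is 1 but `words_touched(201, 4)` is 2, matching the two `mov` examples: the misaligned read straddles the words at 200 and 204.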

Misaligned data can slow down a program considerably. It's normally a job for the compiler to ensure that data alignment is OK.
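One place to see the compiler doing this job is C struct layout: the compiler inserts padding so that each member is suitably aligned. The exact offsets depend on the platform ABI, so this sketch relies only on what C guarantees, namely that the `int` member's offset is a multiple of `_Alignof(int)`. The struct and function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* On typical 32- and 64-bit ABIs `i` lands at offset 4, leaving 3 padding
   bytes after `c`, but the exact layout is ABI-specific. */
struct Example {
    char c;
    int  i;
};

size_t offset_of_i(void)   { return offsetof(struct Example, i); }
size_t int_alignment(void) { return _Alignof(int); }
```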

Addressing modes

Early processors allowed very elaborate memory addressing modes. Note, however, that in optimised 8086 code only two were actually used, immediate addressing and displacement addressing, and these are the only ones really needed.

mov eax,3547      ; immediate addressing
mov eax,100[bx]   ; displacement addressing

Of course the immediate value or displacement must be encoded in the instruction, so its size is often limited; 16 bits is common on a 32-bit processor. If larger constants or displacements are needed, a combination of instructions must be used, but hopefully this will be rare.
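A sketch, assuming a machine with 16-bit immediate fields, of how a 32-bit constant might be built from two instructions. The split mirrors the MIPS pairing of `lui` (load upper immediate) followed by `ori` (OR in the low 16 bits); the C function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Does v fit in a signed 16-bit immediate field? */
int fits_imm16(int32_t v) {
    return v >= -32768 && v <= 32767;
}

/* Split a 32-bit constant into the two 16-bit halves that two
   instructions could each carry. */
void split32(uint32_t v, uint16_t *hi, uint16_t *lo) {
    *hi = (uint16_t)(v >> 16);
    *lo = (uint16_t)(v & 0xFFFF);
}

/* What the instruction pair computes: shift the upper half into place,
   then OR in the lower half. */
uint32_t rebuild32(uint16_t hi, uint16_t lo) {
    return ((uint32_t)hi << 16) | lo;
}
```

A constant such as 3547 fits in one instruction, while 0x12345678 splits into 0x1234 and 0x5678 and needs the pair.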

Instruction Sets

“Make the common case fast.” Ten simple instructions account for 96% of optimised compiler-generated code for the 8086/Pentium.

Operation                 Example        % of total
Load from memory          mov ax,[bx]    22%
Conditional branch        jne fred       20%
Compare                   cmp ax,1       16%
Store                     mov [bx],ax    12%
Add                       add ax,dx       8%
And                       and ax,bx       6%
Sub                       sub ax,bx       5%
Move register-register    mov ax,bx       4%
Subroutine call           call fred       1%
Subroutine return         ret             1%

Clearly it makes sense to optimise these instructions, and that’s exactly what Intel did when they progressed from the 8086 to the Pentium.

Consider branch or jump instructions, which change the contents of the PC (program counter). There are four types:

Conditional branches

Unconditional jumps

Subroutine calls

Subroutine returns

Of these, the conditional branch is by far the most important (Figure 2.19).

Now the destination of the branch normally needs to be encoded somehow in the instruction. To achieve “relocatability” or “position independence”, the distance of the branch is normally encoded relative to the current address: we branch forward or back a certain distance from the current PC value. The exception is the subroutine return, which commonly finds the return address on the top of the stack.

To help decide how many bits should be dedicated to encoding the displacement, it helps to analyse some code and look at the distribution of branch displacements. As Figure 2.20 shows, 8 bits is enough for most cases, since we tend to branch short distances.
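Whether 8 bits is enough comes down to whether a displacement fits in the signed two's-complement range of the field. A minimal C check, with a hypothetical function name:

```c
#include <assert.h>

/* Does a branch displacement fit in a signed field of `bits` bits, i.e. in
   the range -2^(bits-1) .. 2^(bits-1)-1? Valid for bits from 1 to 31. */
int fits_signed(long disp, unsigned bits) {
    long lo = -(1L << (bits - 1));
    long hi =  (1L << (bits - 1)) - 1;
    return disp >= lo && disp <= hi;
}
```

An 8-bit field covers -128 to 127, so a displacement of 16 fits, while 200 would need a wider field such as 16 bits.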

For example, on the 8086 the instruction jge fred in this listing

002B  jge fred
002D  ………
      ………
003D  fred:

encodes as 7D 10, where the 7D identifies it as a jge instruction. If the branch is taken, execution continues from 002D + 10 = 003D (all values in hex): the displacement is added to the address of the next instruction, not to the address of the branch itself.

Note that the bytes 7D and 10 are stored in memory locations 002B and 002C respectively.
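The target calculation can be sketched in C, assuming a 2-byte short branch whose 8-bit displacement is sign-extended and added to the address of the following instruction. The function name is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Target of a 2-byte short branch (opcode byte + 8-bit displacement). */
uint16_t short_branch_target(uint16_t branch_addr, uint8_t disp8) {
    int disp = disp8 >= 0x80 ? disp8 - 0x100 : disp8;  /* sign-extend */
    uint16_t next = (uint16_t)(branch_addr + 2);       /* skip opcode + disp */
    return (uint16_t)(next + disp);
}
```

`short_branch_target(0x002B, 0x10)` gives 0x003D as in the listing, and a displacement byte of F0 (-16) would branch backwards to 0x001D.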

How is the branch condition specified? The 8086 uses condition codes (flags) set by an earlier instruction, typically the zero, carry, overflow and sign (negative) flags.
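A simplified C model of how a 16-bit compare sets the flags and how a few 8086 conditional jumps test them. It ignores the auxiliary-carry and parity flags, and the struct and function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Flags after "cmp a,b", which computes a - b and keeps only the flags. */
typedef struct {
    int zf;  /* zero: a == b */
    int sf;  /* sign: the 16-bit result is negative */
    int cf;  /* carry (borrow): a < b as unsigned numbers */
    int of;  /* overflow: the signed subtraction overflowed */
} Flags;

Flags cmp16(uint16_t a, uint16_t b) {
    uint16_t r = (uint16_t)(a - b);
    Flags f;
    f.zf = (r == 0);
    f.sf = (r >> 15) & 1;
    f.cf = (a < b);
    /* overflow: a and b differ in sign, and r's sign differs from a's */
    f.of = (((a ^ b) & (a ^ r)) >> 15) & 1;
    return f;
}

int je_taken(Flags f)  { return f.zf; }           /* je / jz: equal       */
int jne_taken(Flags f) { return !f.zf; }          /* jne / jnz: not equal */
int jge_taken(Flags f) { return f.sf == f.of; }   /* signed a >= b        */
int jc_taken(Flags f)  { return f.cf; }           /* unsigned a < b       */
```

Note that jge is a signed test (sign flag equals overflow flag), while jc is an unsigned one, so the same compare can drive either kind of branch.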

In compiler-generated code a conditional branch normally follows a compare instruction, and most of these comparisons are comparisons with zero. So on the 8086, for example, je (jump if equal) or jge would be more common than jc (jump if carry), and of the many varieties je or jne would be most common. Typically over 50% of integer compares in branches are simple tests for equality with zero.

An alternative to condition codes is to integrate the comparison with the branch in one instruction. It may make sense to limit the range of possible comparisons, perhaps to comparison with zero only.

Type and size of operands

A 32-bit computer should be able to handle bytes, half-words (16-bit), words (32-bit) and 64-bit floating-point numbers. A 64-bit computer should be able to handle 64-bit integers as well. In Intel-world the types are BYTE, WORD (16-bit), DWORD (32-bit) and QWORD (64-bit).

Encoding the Instruction set

The instruction format varies from instruction to instruction: some require an indication of which registers are to be used, others need a memory address, and others need a branch displacement.

One part of the encoding is fixed: the op-code. This specifies the instruction type, which in turn determines how the rest of the encoding is formatted.

Assume that there are at most 64 instructions in the instruction set. Then the op-code can be encoded in the first six bits, since 2^6 = 64.
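As a sketch, assuming a 32-bit instruction word whose op-code sits in the top six bits (as it does in MIPS), decoding the op-code is just a shift and a mask. The constant and function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* With a 6-bit op-code there are 2^6 = 64 possible instruction types. */
enum { OPCODE_BITS = 6, MAX_OPCODES = 1 << OPCODE_BITS };

/* Extract the op-code from bits 31..26 of a 32-bit instruction word. */
unsigned opcode_of(uint32_t instr) {
    return (unsigned)(instr >> (32 - OPCODE_BITS)) & (MAX_OPCODES - 1);
}
```

The decoder can then switch on this value to decide how to interpret the remaining 26 bits.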

As for what happens next, various approaches have been suggested (Figure 2.23). For convenience, whatever method is used, the encoding should be a multiple of 8 bits. Variable-length encoding gives good code density: very short instructions can be encoded in 1 byte. (It is a common strategy for data compression algorithms to associate the shortest code word with the most frequently encountered piece of text.) Complex addressing modes take up lots of space.

The 8086 uses a variable-length encoding (Figure D.8). The VAX took this idea even further: a VAX instruction could take from 1 to 53 bytes. For example

addl3 r1,737(r2),(r3)

This instruction adds the contents of r1 to the contents of memory address [737+r2], and stores the result at the memory address held in r3 (VAX instructions put the destination last). The op-code takes one byte, and each operand needs a one-byte specifier saying which register, and which addressing mode, to use; the 737 is stored in two further bytes. So the whole instruction encodes as 1 + 1 + (1 + 2) + 1 = 6 bytes.

Many RISC computers, following the KISS philosophy (Keep It Simple, Stupid), use a fixed-length encoding. The MIPS processor, for example, uses a fixed-length 32-bit encoding. This clearly limits the variety of addressing modes, and effectively enforces a simple load-store architecture: all operations take place on registers, and the only access to memory is via simple load/store instructions which support only simple addressing modes (Figure 2.27).

Which is best? Consider a head-to-head comparison between the CISC VAX and the RISC MIPS processors. The VAX went for high code density, powerful addressing modes, powerful instructions, efficient instruction encoding and relatively few registers. The MIPS went with simplicity of hardware, aggressive pipelining, simple instructions, simple addressing modes, fixed-length encoding and lots of registers.

The MIPS takes twice as many instructions to do a typical job, but the VAX CPI is six times that of the MIPS because of its complex instructions. So, at similar clock rates, the MIPS has a performance lead of 3:1. Furthermore, it is a lot cheaper to build, since much less hardware is needed (Figure 2.41). This spelt the end for the VAX, and ultimately for DEC, which was taken over by Compaq, itself later taken over by Hewlett-Packard. But before that, DEC did see the error of its ways and developed the lovely 64-bit RISC Alpha processor.
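The 3:1 figure follows from CPU time = instruction count x CPI x clock cycle time. The C sketch below plugs in the relative numbers from the text and assumes both machines run at the same clock rate; that equal-clock assumption is what lets the cycle time cancel out, and the function names are hypothetical.

```c
#include <assert.h>

/* CPU time = instruction count x CPI x clock cycle time. */
double cpu_time(double instr_count, double cpi, double cycle_time) {
    return instr_count * cpi * cycle_time;
}

/* Relative figures from the text: MIPS executes twice as many
   instructions, but the VAX needs six times as many cycles for each.
   Both cycle times are set to 1.0 (assumed equal clock rates). */
double mips_speedup(void) {
    double vax  = cpu_time(1.0, 6.0, 1.0);
    double mips = cpu_time(2.0, 1.0, 1.0);
    return vax / mips;   /* 6 / 2 */
}
```

If the clock rates differed, their ratio would multiply this result directly.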

Compilers

Compilers have two main jobs:

To produce correct code.

To produce fast code.

Correct code is produced first. Then it is optimised on a subsequent pass. Compiler writers like to make the frequent cases fast, while keeping the rare cases correct.

What do compiler writers look for in an instruction set?

Regularity (orthogonality). All registers should be created equal. The 8086 is not orthogonal: certain registers can be used in some contexts but not in others (for example, only cx can hold the count for the loop instruction).

Simple instructions. It was originally thought that as computers developed, their instruction sets would become more and more high-level; it was even suggested that a future architecture might have C as its instruction set. This closing of the semantic gap, bringing the hardware up to the level of the language, never happened. Compilers understand simple instructions and can deploy them near-optimally.

Less is more in instruction set design.

Optimisers use all kinds of tricks:

Common subexpression elimination: replace two or more instances of the same calculation with just one.
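A minimal C illustration of common subexpression elimination, written out by hand; an optimising compiler performs the same rewrite internally. The function names are hypothetical.

```c
#include <assert.h>

/* Before: a + b is computed twice. */
int before_cse(int a, int b, int c, int d) {
    return (a + b) * c + (a + b) * d;
}

/* After: a + b is computed once and the result reused. */
int after_cse(int a, int b, int c, int d) {
    int t = a + b;
    return t * c + t * d;
}
```

Both versions return the same value, but the second performs one addition fewer; in a loop or a larger expression the saving adds up.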

Take an invariant calculation out of a loop. Provided y is not changed inside the loop, replace

for (i=0;i<20;i++)
{
    x=cos(y);
    /* ... rest of loop body ... */
}

with

x=cos(y);
for (i=0;i<20;i++)
{
    /* ... rest of loop body ... */
}
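A runnable C sketch of the same transformation. To avoid depending on the maths library, a hypothetical pure function `expensive` stands in for cos, and a counter records how often it runs. The counter is itself a side effect, so a compiler could not hoist this particular call automatically; it is there only to make the saving visible.

```c
#include <assert.h>

static int calls = 0;   /* counts evaluations of expensive() */

/* Hypothetical stand-in for cos(y): any function of y alone would do. */
static double expensive(double y) {
    calls++;
    return y * y * 0.5 + 1.0;
}

double loop_before(double y, int *n_calls) {
    calls = 0;
    double total = 0.0;
    for (int i = 0; i < 20; i++) {
        double x = expensive(y);   /* same value on every iteration */
        total += x * i;
    }
    *n_calls = calls;
    return total;
}

double loop_after(double y, int *n_calls) {
    calls = 0;
    double total = 0.0;
    double x = expensive(y);       /* hoisted: computed once */
    for (int i = 0; i < 20; i++) {
        total += x * i;
    }
    *n_calls = calls;
    return total;
}
```

Both functions return the same total, but the first calls `expensive` 20 times and the second only once.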

Loop unrolling. Replace

for (i=0;i<20;i++)
{
    x+=a[i];
}

with

x+=a[0];
x+=a[1];
x+=a[2];
/* ... */
x+=a[19];

which saves on the loop-management overhead (and avoids pipeline-unfriendly conditional branches).
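Full unrolling is practical only for short loops with a known trip count. A common variant is partial unrolling; the C sketch below unrolls by a factor of 4 (an arbitrary choice that divides 20 exactly), so the loop test and branch run 5 times instead of 20. The function names are hypothetical.

```c
#include <assert.h>

/* Rolled: one add plus one compare-and-branch per element. */
int sum_rolled(const int a[20]) {
    int x = 0;
    for (int i = 0; i < 20; i++) {
        x += a[i];
    }
    return x;
}

/* Unrolled by 4: four adds per compare-and-branch.
   This relies on 20 being a multiple of 4. */
int sum_unrolled4(const int a[20]) {
    int x = 0;
    for (int i = 0; i < 20; i += 4) {
        x += a[i];
        x += a[i + 1];
        x += a[i + 2];
        x += a[i + 3];
    }
    return x;
}
```

For a trip count that is not a multiple of the unroll factor, a short clean-up loop would handle the leftover elements.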

In-line expansion of a function, which eliminates call/return overhead. This is good for small functions.

Replace a multiplication by a small constant with shifts and adds.
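For example, since 10 = 8 + 2, x*10 can be computed as (x<<3) + (x<<1). A small C sketch follows; unsigned arithmetic keeps the shifts well defined for every input, and the function names are hypothetical.

```c
#include <assert.h>

/* x * 10 = x * 8 + x * 2 */
unsigned times10(unsigned x) {
    return (x << 3) + (x << 1);
}

/* x * 9 = x * 8 + x */
unsigned times9(unsigned x) {
    return (x << 3) + x;
}
```

Whether this beats a hardware multiply depends on the processor; compilers choose between the two based on the target's instruction costs.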

Re-order instructions to avoid pipeline stalls.

Optimise register allocation