2005-12-06 Ridge regression


BIOS 2063 2005

Ridge Regression


Linear regression is sometimes unstable because of approximate collinearity of the predictors. Manifestations:

- Very large standard errors for the coefficients.
- Low standard errors for some linear combinations of the coefficients.
- $\det(X^T X)$ is close to zero.
- $X^T X$ has a very small eigenvalue.
- $X^T X$ has a very large "condition number" (the ratio of its largest to its smallest eigenvalue).
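A minimal R sketch of these symptoms, on simulated data that mimics the example later in these notes. The seed is an assumption, not the author's setting. The coefficient standard errors come out huge while the standard error of $\hat\beta_1 + \hat\beta_2$ stays small, and the two eigenvalues of $X^T X$ differ by several orders of magnitude.

```r
## Sketch: symptoms of near-collinearity (simulated data; seed assumed)
set.seed(2063)
n  <- 50
s  <- rnorm(n)                           # shared factor
x1 <- s + rnorm(n) * 0.001
x2 <- s + rnorm(n) * 0.001
y  <- x1 + x2 + rnorm(n)

fit <- lm(y ~ x1 + x2)
V   <- vcov(fit)[c("x1", "x2"), c("x1", "x2")]
se_coef <- sqrt(diag(V))                 # SEs of b1hat and b2hat: very large
se_sum  <- sqrt(sum(V))                  # SE of b1hat + b2hat, i.e. sqrt(1' V 1): small

XTX  <- crossprod(cbind(x1, x2))         # X^T X (no intercept column)
ev   <- eigen(XTX, symmetric = TRUE)$values
cond <- ev[1] / ev[2]                    # condition number: largest / smallest eigenvalue

print(se_coef)
print(se_sum)
print(det(XTX))                          # tiny compared with the product of the diagonal
print(ev)
print(cond)
```

The contrast $\hat\beta_1 + \hat\beta_2$ is estimated well because it points along the large-eigenvalue direction $(1, 1)$, while $\hat\beta_1 - \hat\beta_2$ lies along the tiny-eigenvalue direction.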

The principle: add a "ridge" of size $\lambda$ to the diagonal of $X^T X$ to stabilize the matrix inverse:

$$\hat\beta_{\text{ridge}} = (X^T X + \lambda I)^{-1} X^T Y$$
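A direct transcription of this formula as a small R function. `ridge_closed_form` is a hypothetical helper name, and `X` is assumed to hold only the predictor columns (no intercept), matching $X^T X$ above. Solving the linear system with `solve(A, b)` rather than forming the inverse explicitly is the usual numerically safer choice.

```r
## Sketch: ridge estimate from (X^T X + lambda I)^{-1} X^T Y.
## ridge_closed_form is a hypothetical helper; X must not contain an intercept column.
ridge_closed_form <- function(X, Y, lambda) {
  p <- ncol(X)
  A <- crossprod(X) + lambda * diag(p)   # X^T X + lambda I
  drop(solve(A, crossprod(X, Y)))        # solve A b = X^T Y instead of inverting A
}

set.seed(1)                              # assumed simulated data
n <- 50
s <- rnorm(n)
X <- cbind(x1 = s + rnorm(n) * 0.001, x2 = s + rnorm(n) * 0.001)
Y <- drop(X %*% c(1, 1)) + rnorm(n)

b_ols   <- ridge_closed_form(X, Y, 0)    # lambda = 0: ordinary least squares, no intercept
b_ridge <- ridge_closed_form(X, Y, 1)
print(b_ols)
print(b_ridge)
```

With $\lambda = 0$ the function reduces to OLS through the origin; any $\lambda > 0$ shrinks the squared norm of the estimate.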

Another view: penalized likelihood:

$$\hat\beta_{\text{ridge}} = \arg\min_{\beta}\; (Y - X\beta)^T (Y - X\beta) + \lambda \|\beta\|^2$$
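To check numerically that this penalized criterion is minimized by the closed form, one can hand the objective and its gradient to base R's general-purpose optimizer `optim()` with method `"BFGS"`. This is a sketch on assumed simulated data with an assumed $\lambda = 1$; the two answers should agree up to the optimizer's tolerance.

```r
## Sketch: minimize the penalized criterion numerically, compare with the closed form.
set.seed(1)                              # assumed simulated data
n <- 50
s <- rnorm(n)
X <- cbind(s + rnorm(n) * 0.001, s + rnorm(n) * 0.001)
Y <- drop(X %*% c(1, 1)) + rnorm(n)
lambda <- 1                              # assumed penalty

## (Y - X b)^T (Y - X b) + lambda * ||b||^2 and its gradient
penalized_rss  <- function(b) sum((Y - X %*% b)^2) + lambda * sum(b^2)
penalized_grad <- function(b) drop(-2 * crossprod(X, Y - X %*% b) + 2 * lambda * b)

fit <- optim(c(0, 0), penalized_rss, penalized_grad, method = "BFGS",
             control = list(reltol = 1e-12))
b_closed <- drop(solve(crossprod(X) + lambda * diag(2), crossprod(X, Y)))
print(fit$par)
print(b_closed)
```

Setting the gradient to zero gives $(X^T X + \lambda I)\beta = X^T Y$, which is exactly the closed form.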

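Another view: maximizing a Bayesian posterior. If $Y \mid \beta \sim N(X\beta, \sigma^2 I)$ and the prior is

$$\beta \sim N\!\left(0, (\lambda/\sigma^2)^{-1} I_p\right),$$

then the posterior mode (for this normal model also the posterior mean) is $\hat\beta_{\text{ridge}}$.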
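Assuming $Y \mid \beta \sim N(X\beta, \sigma^2 I)$ and the prior $\beta \sim N(0, \tau^2 I_p)$, the posterior for $\beta$ is normal with mean $(X^T X/\sigma^2 + I/\tau^2)^{-1} X^T Y/\sigma^2$. A quick numerical check, with arbitrary assumed values of $\sigma^2$ and $\lambda$, that this posterior mean coincides with the ridge estimate when $\tau^2 = \sigma^2/\lambda$:

```r
## Sketch: the normal-normal posterior mean equals the ridge estimate
## when tau^2 = sigma^2 / lambda (sigma2 and lambda values are assumed).
set.seed(1)                              # assumed simulated data
n <- 50
s <- rnorm(n)
X <- cbind(s + rnorm(n) * 0.001, s + rnorm(n) * 0.001)
Y <- drop(X %*% c(1, 1)) + rnorm(n)

sigma2 <- 1.5                            # error variance, treated as known
lambda <- 0.1
tau2   <- sigma2 / lambda                # prior variance implied by lambda

post_prec <- crossprod(X) / sigma2 + diag(2) / tau2   # posterior precision matrix
post_mean <- drop(solve(post_prec, crossprod(X, Y) / sigma2))
ridge     <- drop(solve(crossprod(X) + lambda * diag(2), crossprod(X, Y)))
print(cbind(post_mean, ridge))
```

Multiplying the posterior precision and the right-hand side by $\sigma^2$ turns them into $X^T X + \lambda I$ and $X^T Y$, which is why the two columns agree.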

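Implementation: data augmentation. Let

$$X_{\text{aug}} = \begin{pmatrix} X \\ \sqrt{\lambda}\, I_p \end{pmatrix}, \qquad Y_{\text{aug}} = \begin{pmatrix} Y \\ 0 \end{pmatrix}.$$

Then OLS on the augmented data will yield $\hat\beta_{\text{ridge}}$, because $X_{\text{aug}}^T X_{\text{aug}} = X^T X + \lambda I_p$ and $X_{\text{aug}}^T Y_{\text{aug}} = X^T Y$.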
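A minimal sketch of the augmentation trick on assumed simulated data: append $\sqrt{\lambda}\, I_p$ below $X$ and $p$ zeros below $Y$, run plain least squares (here via base R's `qr()` and `qr.coef()`), and compare with the closed form. The predictor matrix has no intercept column; if one were present, the pseudo-rows would need a 0 in that column to leave the intercept unpenalized.

```r
## Sketch: OLS on augmented data reproduces the ridge closed form.
set.seed(1)                              # assumed simulated data
n <- 50
s <- rnorm(n)
X <- cbind(s + rnorm(n) * 0.001, s + rnorm(n) * 0.001)
Y <- drop(X %*% c(1, 1)) + rnorm(n)
lambda <- 0.01
p <- ncol(X)

X_aug <- rbind(X, sqrt(lambda) * diag(p))    # sqrt(lambda) * I_p appended below X
Y_aug <- c(Y, rep(0, p))                     # p zeros appended below Y

b_aug   <- qr.coef(qr(X_aug), Y_aug)         # least squares via QR, no intercept
b_ridge <- drop(solve(crossprod(X) + lambda * diag(p), crossprod(X, Y)))
print(rbind(b_aug, b_ridge))
```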

Yet another view: solve a constrained optimization problem (restricting the model space):


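$$\hat\beta = \arg\min_{\beta}\; (Y - X\beta)^T (Y - X\beta) \quad \text{restricted to the set } \{\beta : \|\beta\|^2 \le K\}$$

will yield $\hat\beta_{\text{ridge}}$ for a suitable $K$: each $\lambda > 0$ corresponds to one constraint level $K$, with larger $\lambda$ matching smaller $K$.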
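For a given $\lambda$, taking $K = \|\hat\beta_{\text{ridge}}(\lambda)\|^2$ makes the ridge estimate the constrained minimizer (a Lagrange-multiplier argument with multiplier $\lambda$). A rough numerical check in two dimensions, on assumed simulated data: the unconstrained OLS fit has a larger norm, so the constrained minimum sits on the circle $\|\beta\| = \sqrt{K}$, and scanning that circle should recover the ridge estimate.

```r
## Sketch: ridge estimate as the minimizer of RSS over ||b||^2 <= K,
## with K set to ||b_ridge||^2 (simulated data; settings assumed).
set.seed(1)
n <- 50
s <- rnorm(n)
X <- cbind(s + rnorm(n) * 0.001, s + rnorm(n) * 0.001)
Y <- drop(X %*% c(1, 1)) + rnorm(n)
lambda <- 1

XtX <- crossprod(X)
XtY <- drop(crossprod(X, Y))
b_ridge <- drop(solve(XtX + lambda * diag(2), XtY))
K <- sum(b_ridge^2)                      # constraint level matched to lambda

## OLS lies outside the ball, so the constrained minimum is on the boundary
## circle ||b|| = sqrt(K); evaluate RSS on a fine grid of angles.
theta <- seq(0, 2 * pi, length.out = 1e5)
B   <- sqrt(K) * rbind(cos(theta), sin(theta))           # 2 x m candidate points
rss <- sum(Y^2) - 2 * colSums(XtY * B) + colSums(B * (XtX %*% B))
b_constrained <- B[, which.min(rss)]
print(rbind(b_constrained, b_ridge))
```

The RSS is expanded as $Y^T Y - 2\beta^T X^T Y + \beta^T X^T X \beta$ so that all grid points are evaluated with a few matrix operations.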

```r
##### Ridge regression
## make some data, with a nearly collinear design matrix
sharedfactor = rnorm(50)
x1 = sharedfactor + rnorm(50) * 0.001
x2 = sharedfactor + rnorm(50) * 0.001
b1 = 1
b2 = 1
y = b1*x1 + b2*x2 + rnorm(50)
originaldata = data.frame(y=y, x1=x1, x2=x2)
```


###### Standard linear model.

original.lm = lm(y ~ x1 + x2, data = originaldata)

original.lm

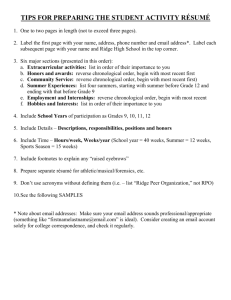

pairs(originaldata)

designmatrix = as.matrix(originaldata[, -1])

XTX=t(designmatrix)%*%designmatrix

svd(XTX)$d

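Eigenvalues: badly conditioned matrix. The two eigenvalues of `XTX` (repeated as `eig1` and `eig2` in the `ml` row of the results below) are about 86.68 and 5.4e-05, a condition number of roughly 1.6e+06.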

```r
##Ridge regression
lambdalist = 10^(-6:2)
results = matrix(NA, nrow=length(lambdalist)+1, ncol=5)
dimnames(results) = list(c("ml", as.character(lambdalist)),
                         c("b1hat", "b2hat", "deviance", "eig1", "eig2"))
## row "ml": ordinary least squares fit on the original data
results[1,1:2] = coef(original.lm)[2:3]
results[1,3] = deviance(original.lm)
results[1,4:5] = svd(XTX)$d
for(lambda in lambdalist) {
  ## two pseudo-observations: y = 0 and sqrt(lambda) on the diagonal
  pseudodata = data.frame(y=rep(0,2),
                          x1=c(sqrt(lambda),0), x2=c(0,sqrt(lambda)))
  ridgedata = rbind(originaldata, pseudodata)
  ## caution: lm() also puts an intercept value of 1 in the pseudo-rows, so this
  ## fit minimizes RSS + (a + sqrt(lambda)*b1)^2 + (a + sqrt(lambda)*b2)^2
  ## (a = intercept), only approximately ridge with an unpenalized intercept
  ridge.lm = lm(y ~ x1 + x2, data = ridgedata)
  designmatrix = as.matrix(ridgedata[, -1])
  XTX = t(designmatrix) %*% designmatrix    # equals X^T X + lambda I
  print(svd(XTX)$d)
  results[as.character(lambda),1:2] = coef(ridge.lm)[2:3]
  results[as.character(lambda),3] = deviance(ridge.lm)
  results[as.character(lambda),4:5] = svd(XTX)$d
}
results
```

```
> results
             b1hat      b2hat  deviance      eig1          eig2
ml     -86.2259534 88.3468528  41.06907  86.67610 5.407039e-005
1e-006 -84.8413923 86.9627434  41.09845  86.67610 5.507039e-005
1e-005 -72.6883121 74.8122337  41.21327  86.67611 6.407039e-005
0.0001 -29.4760827 31.6091417  41.62145  86.67620 1.540704e-004
0.001   -3.3865433  5.5250951  41.87684  86.67710 1.054070e-003
0.01     0.6014741  1.5376947  41.96154  86.68610 1.005407e-002
0.1      1.0210714  1.1160084  42.25016  86.77610 1.000541e-001
1        1.0529404  1.0628409  44.48155  87.67610 1.000054e+000
10       0.9613129  0.9625357  62.95771  96.67610 1.000005e+001
100      0.5010925  0.5013048 148.94035 186.67610 1.000001e+002
```

The augmentation adds $\lambda I$ to $X^T X$, shifting both eigenvalues by $\lambda$. As $\lambda$ grows, the opposed OLS estimates (-86.2 and 88.3) are pulled toward the true values b1 = b2 = 1, and by $\lambda = 100$ both are shrunk to about 0.5.
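The augmented `lm()` fit in the ridge code gives the two pseudo-rows an intercept value of 1 as well, so its penalty is not exactly $\lambda\|\beta\|^2$ with a free intercept. Below is a minimal sketch of an exact alternative, assuming the common convention that the intercept is not penalized: center $Y$ and the predictors, apply the closed form, then recover the intercept from the means. It follows the notes' simulation recipe with an assumed seed, so its numbers will not match the table; `ridge_centered` and `ridge_path` are hypothetical names.

```r
## Sketch: ridge path with an unpenalized intercept, via centering.
## Simulation follows the notes' recipe; the seed is an assumption.
set.seed(2063)
sharedfactor <- rnorm(50)
x1 <- sharedfactor + rnorm(50) * 0.001
x2 <- sharedfactor + rnorm(50) * 0.001
y  <- x1 + x2 + rnorm(50)

X  <- cbind(x1, x2)
Xc <- scale(X, center = TRUE, scale = FALSE)   # centered predictors
yc <- y - mean(y)                              # centered response

ridge_centered <- function(lambda) {
  b  <- drop(solve(crossprod(Xc) + lambda * diag(2), crossprod(Xc, yc)))
  b0 <- mean(y) - sum(colMeans(X) * b)         # intercept recovered from the means
  c(intercept = b0, b)
}

lambdalist <- 10^(-6:2)
ridge_path <- t(sapply(lambdalist, ridge_centered))
rownames(ridge_path) <- as.character(lambdalist)
print(ridge_path)

## Cross-check for one lambda: augmentation with a 0 in the intercept column
lambda <- 0.01
Z     <- rbind(cbind(1, X), cbind(0, sqrt(lambda) * diag(2)))
b_aug <- qr.coef(qr(Z), c(y, 0, 0))
print(rbind(b_aug, ridge_path["0.01", ]))
```

Centering works because, for any fixed slopes, the best intercept is $\bar y - \bar x^T \beta$; substituting it leaves a pure ridge problem in the centered data.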