Journal of Postsecondary Education and Disability

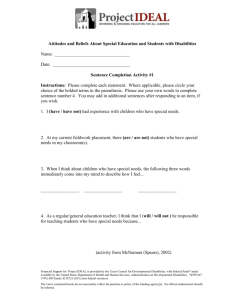
