avoiding hidden errors and organizational accidents in strategic


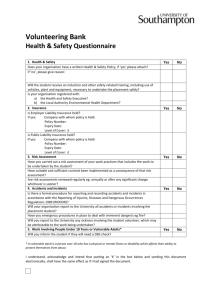

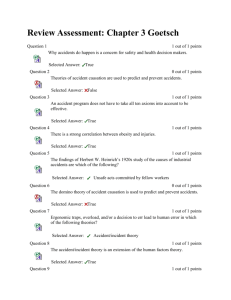

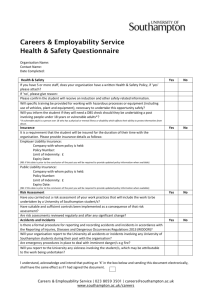

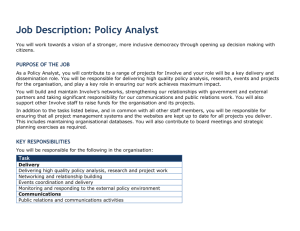

Tom Hanén
Border and Coast Guard Academy, Finland

AVOIDING HIDDEN ERRORS AND ORGANISATIONAL ACCIDENTS IN STRATEGIC MANAGEMENT

1. General

Things have been moving fast on the highway of safety and security throughout the 21st century. For example, the harmful effects of globalisation, trends in international politics, and several disasters and natural catastrophes have placed the concepts of safety and security on a pedestal. They have also become a favourite among consultants and a target state for organisations, individuals and commercial enterprises, for whom, as the media confirms, insecurity is the only alternative on offer. The state of “safe and secured” has become a trend and fashion phenomenon of our age. An enormous quantity of material and debate exists from different angles, and distinguishing what is essential for research has therefore become challenging, even with source criticism.

It is clear that understanding safety and security and their management is important for security authorities. The principal work of security authorities, producing safety and security for society, is nevertheless only one side of the matter. The other side is that security authorities also form organisations through which they operate. The management and protection of these organisations also require investment, so that their societal effectiveness is not jeopardised by malfunctions within the organisation.

This article is based on my ongoing research into safety and security management in various organisations. In the course of my research, I have sought to discover the most central paradigms of safety and security research via bibliometric analysis. The aim of this article is to highlight one important paradigm in modern safety/security research and to estimate its effects on strategic management in different kinds of organisations.
The focus of the article lies on understanding the main principles of this organisational paradigm and the role of strategic management in it. First, the article presents the method employed in my research, bibliometric analysis. After this, I define the most central concepts that I employ. Next, I demonstrate on the basis of the bibliometric analysis how safety and security concepts are connected to management, and then move on to consider the organisational paradigm discovered in the analysis. Finally, the article presents observations on the significance of the organisational paradigm for strategic management and for safety and security research.

2. About the method: bibliometric analysis

The purpose of my bibliometric analysis was to discover the scientific core of safety and security and their management. The analysis employed the American ISI Web of Science database, containing article data from over 8,000 scientific publications since 1986. The articles found with the aid of search words were subjected to citation and network analysis¹. This finally enabled me to identify which researchers the scientific community refers to most in its articles on safety and security. With the aid of the filtering feature, it was also possible to create network images showing only the researchers who gained the most references, and their works. The following is an example of this kind of network image:

Figure 1: Scientific network image of “security management” according to bibliometric analysis. The figure shows only those researchers referred to more than 12 times in hundreds of articles.

With the aid of bibliometric analysis, I examined broadly the concepts of safety and security and their management, also including the concepts of risk and accident. Terms relating to management, such as prevention and leadership, were also added to all the concepts.

¹ The citation and network analysis was performed using Ucinet and Sitkis software developed at Helsinki University of Technology.

3. About the concepts of safety, security, risk and accident

Safety/security research consists in practice of four main concepts: safety, security, risk and accident. Next, I will consider briefly how these have been defined in the sources indicated by the analysis.

The word safety, with the basic form safe, has generally been employed with reference to accidents and their prevention. Safety incorporates humanity and lack of intent, but not “malicious intent” (Johnston 2004, p. 245–248). Security, on the other hand, incorporates negligence or a systematic approach (for example terrorism and criminal activity). Security is derived from the Latin word securus (se (without) and cura (concern, care, protection, supervision)), and so the etymological basis for the concept can be positive or negative (without concern, or without care). It is a commonly employed word when talking about the prevention of violence and crimes by security firms, or about the security of organisations or states in the area of international politics.

Risk originates from the sphere of shipping, where insurance activity is also held to have originated. Babylonian traders took bottomries in order to finance the sea voyages of vessels that were important for their trading. If a voyage succeeded without losses, the lender was paid a separate remuneration in addition to the loan amount, which can be regarded as an insurance premium corresponding to the lender's risk. The Code of Hammurabi, compiled in 1700 BC in Babylon, included articles on bottomries, according to which a loan did not need to be repaid if a ship was lost. The word risk is held to be derived from the Greek word rhizikon, meaning a rock, and the vulgar Latin word risicare, which means avoiding a rock.
In addition to these words, it has been suggested that the origin of the word risk lies in early Italian, in which risicare signifies daring. On this basis, the concept of risk can be etymologically both negative and positive: after daring, one encounters either loss or profit (e.g., Bernstein 1996, p. 8).

An accident refers to an unexpected and random event in which an individual or system goes off the rails. An accident is a complex concept, which Aristotle already defined in his Metaphysics. As a consequence of industrialisation, the concept of accident frequently took on a purely physical form (for example, a rail accident), but modern research is again moving closer to the original significance of the concept as a marker of any random and surprising turn².

4. How these concepts are linked scientifically to management

According to the analysis, the scientifically most tenable concept of safety/security management is safety management. This concept has been the subject of decades of diverse and multidisciplinary research. The same applies to the concept of accident prevention, in which the researchers are partly the same. By contrast, the concept of security management appeared in the analysis solely as an occasional object of research in information technology (cf. Figure 1). Risk management is also a concept that has been employed widely in science, particularly in the technical sciences and, for example, in the insurance sector. Safety leadership and security leadership did not generate any search results, and so they are not concepts generally used in scientific publications.

Figure 2: Scientific network image of “safety management” according to bibliometric analysis. The figure shows only those researchers referred to more than 12 times in hundreds of articles.

It can be observed from the safety management network image that research in this sector focuses considerably on occupational safety issues.
Nevertheless, the figure also highlights one central paradigm of safety management, whose most important researcher has been the English psychology professor James Reason. He in fact appears twice in the network image. It has also been possible to make extensive use of Reason's studies of the organisational paradigm in research relating to the security concept.

² For example Hamilton, Ross: Accident, a Philosophical and Literary History. University of Chicago Press 2007.

5. The principles of the organisational paradigm

In 1931, Heinrich presented the domino theory of safety. This theory was based on the idea that the emergence of an accident can be described as a chain or series of successive events. In the prevention of accidents, Heinrich turned his gaze to man instead of machine: attention ought to be paid principally to hazardous acts performed by people and to hazards in the physical and social conditions of the work environment (Heinrich et al. 1980, p. 55).

Many researchers have developed the domino theory by also linking the prevention of accidents to management. Shortly after the domino theory, studies of a number of accidents concluded that the cause was to be found in the control of activities. As a consequence, a significant phase in prevention began: analysis of the effects of safety management and of organisations' activity on the development of accidents. Reason has made a significant contribution to this development, in particular through his model of organisational accidents.

Reason classifies accidents in two categories: individual (or separate) and organisational accidents. Individual accidents touch upon a narrow environment, in which the cause and the victim are often the same person. The effects of organisational accidents are much broader: bankruptcies, environmental disasters or numerous fatalities.
As his basic point of departure, Reason holds that two elements are common to all sociotechnical organisations: production and defence. Whatever the product or production (goods or services), defences are needed to avoid recognised hazards and to protect people and property. Nevertheless, the defence of organisations involves problems. In an ideal situation, the defences placed to protect people and property from dangers have no holes in them and provide the said protection, but in reality a variety of holes are created continuously in defences. These holes move and change their size constantly. When the holes that have developed in the different defence levels line up, an accident can happen (Reason 1997, p. 8–9). Reason's idea is illustrated in the following image:

Figure 3: Reason's famous “Swiss Cheese model”. (www.coloradofirecamp.com/swiss-cheese/index.htm)

6. Active and latent errors

According to Reason, the holes in defences are created by active and latent errors. Active errors are employees' errors or negligence which, combined with a failure of defences, can lead directly to accidents. Latent errors are decisions, incorrect instructions and other measures by management or legislators, the effect of which becomes apparent only over time and often in combination with active errors. Reason also points out that the existence of latent errors is not tied to accidents that have occurred: latent errors arise continuously, even when no accident actually occurs (Reason 1990, p. 173–212; Reason 1997, p. 6–20).

Reason classifies human error as a consequence rather than a cause, contrary to what he regards as the usual practice. This is a significant observation as far as accident investigation is concerned, since we have often seen or heard of investigation reports in which human error has been recorded as the reason for an accident.
As a psychologist, Reason nevertheless rejects this kind of thinking: people make mistakes, and the best people are sometimes capable of the worst mistakes. People's fallibility can be reduced, but never eliminated completely. For this reason, organisations ought to break free of the “wheel of accusations” and focus on measures in which the possibility of errors has been taken into consideration (Reason 1997, p. 125–128).

7. Navigating in Reason’s safety space

According to Reason, the safety of an organisation depends on its position and movement in the theoretical safety space, shown in the following image:

Figure 4: Reason's safety space, in which organisations navigate or drift between increasing resistance and increasing vulnerability (Reason 1997, p. 111 and 2008, p. 269).

Only very few organisations remain stationary in the safety space. They are either moving decisively towards better resistance (through safety development) or drifting passively towards the opposite extreme. When the extreme of considerable vulnerability approaches, an accident can occur. An accident gives a major impulse to enhance safety, and the organisation embarks on a journey towards the opposite extreme. When an organisation is close to the extreme of resistance in the safety space, false conclusions can be drawn about the permanence of safety or defences, and the organisation again starts to slide towards vulnerability. False conclusions of this kind are drawn when an organisation's management fails to understand the effects on the overall situation of the decisions that it takes or of changes in its operating environment (Reason 1997, p. 110–112).

An organisation's movement in the safety space can be controlled. Reason clarifies the matter using shipping terms: the “organisation ship” requires an engine, fuel (the driving force) and navigation devices in order to be able to move upstream as well, and in the right direction. Prior to departure, the destination port naturally has to be decided.
The organisation's management has to understand that accidents cannot be managed: management can be targeted only at the measures that can lead to accidents in the organisation. Effective safety management can be compared to a long-term keep-fit programme, in which subareas that are felt to be important are developed in one go without getting out of breath too much. In fact, it is about matters that managers are hired to manage: workplace arrangements, training, work processes, planning, budgeting, communication, disputes and upkeep. These subareas have often been linked to the causes of organisational accidents (Reason 1997, p. 115).

The driving forces, which at the same time form the core of organisational safety, consist of three Cs: commitment, competence and cognisance. Without them, the development of safety is not possible. In commitment, Reason pays particular attention to senior management and safety culture: the continuous changing of senior managers that is typical of the present day can partly be compensated for with a strong safety culture. The second element in the core, competence, signifies the correct way to gather safety information on the right matters and, in addition, to react to that information correctly. The third element, cognisance, is the utilisation of information and continuous attention to safety matters, a “guerrilla war without a final victory”. Organisations should possess information on the dangers that threaten their processes. An organisation that takes safety matters into account correctly understands the nature of safety, and does not look upon an absence of accidents as a sign of final victory, but continues to develop its safety (Reason 1997, p. 114–124).

Aids to navigation consist of measurements of the safety situation. The “organisation ship” uses these measurements to determine its place in the safety space in order to be able to correct its course if necessary. Measurements are divided into reactive and proactive measurements.
Reactive measurements can be performed only once an accident has occurred, and proactive measurements already prior to an accident. Effective safety management requires the support of both. Lessons gained from earlier accidents help in the prevention of future accidents, provided that accidents that have occurred are investigated correctly and people are able to “adapt them to the present”. This means seeing earlier accidents as an entity, rather than drawing exaggerated conclusions and unleashing forces for change on the basis of an individual accident alone.

Experiences gained from near miss situations can be harnessed in proactive measurements. Reason nevertheless urges us to treat with caution the various reporting systems whose purpose is the reporting of near miss situations or deviations in order to prevent accidents. Because these systems are based on employees' voluntary declarations, and the person making the declaration is often also involved in the situation, the content of reports can be open to interpretation. Reason nevertheless regards these kinds of systems as important, since they are the only way to learn from accidents before they happen. He guides the developers of the systems to pay attention to the safety culture prevailing in the organisation, the simplicity of reporting, the verification and protection of the reporter's identity, and the avoidance of negative consequences arising from reporting (Reason 1997, p. 196–205).

8. Conclusions: the effects of the organisational paradigm on strategic management

The evolution of an organisational accident can be detected only in an area in which the entire organisation's operations are united. It is thus the organisation's highest level, i.e., what is commonly called strategic management, which, in addition to managing the overall situation, is in the best position to make decisions that halt the development.
The activities of strategic management ought to be developed so that signals indicative of an organisational accident can be detected and interpreted. The organisation's management can itself define the events whose impact can be fateful and irreversible (for example, branding in the eyes of interest groups, embezzlement of large sums of money and so forth) and direct the entire organisation's interest to following precisely these events. A requirement of unification imposed directly on lower organisation levels can instead burden these levels with overall management requirements that they are not actually in a position to fulfil. The lower levels of the organisation are nevertheless management tools, whose interest can where necessary be directed at subareas serving the whole.

It is thus particularly important to observe that the concept of accident has broadened. Reason's accident does not refer solely to physical injuries, but to any event that can suddenly cause an entire system to collapse. Nowadays, this can mean, for example, becoming a scapegoat of the media, an act contrary to public morals, management problems, or prolonged abuse by an employee that is not noticed and ultimately leads to insuperable consequences³. Many organisations fail to take “new accidents” of this kind into account in their risk assessments; instead, risk surveys focus on the control of traditional individual accidents.

As can be noted, what is especially challenging for the organisation is detecting latent errors before they accumulate into a whirlwind or “infernal spiral”⁴ that breaks up the organisation. This ought to be considered in strategic management. What makes the matter especially difficult is the fact that the latent error can often lie in management itself, too.

³ There are numerous examples of all of these. The oldest bank in England, Barings, collapsed in 1995 due to the abuses of just a single employee, because management failed to spot the situation in time. In 2006, the Tallink shipping company became the target of hostile media coverage in Finland because it released sewage into the Baltic Sea. The activity was lawful, but contrary to growing environmental responsibility. The shipping company was boycotted but did not collapse.

⁴ A concept employed by France Telecom's management, which emerged when latent errors became uncontrollably active as a consequence of the suicides of 22 employees. Since then, the company has been capable only of reacting and, for example, 100 people have been hired by the company's personnel department to observe employees.

9. Afterword: has the organisational paradigm already become obsolete from the viewpoint of strategic management?

The most central work on the organisational paradigm is James Reason's “Managing the Risks of Organizational Accidents”, published in 1997. Even though the work is now over ten years old, its central principles are still topical. When we look into the matter, we constantly notice examples of organisational accidents around us. The current complex and postmodern operating environment of strategic management, whose most central concepts are continuous change, a hectic pace and fragmentation, calls for ever more sensitive and more continuous sounding of damaging signals and ever faster countermeasures. In this environment, the incubation period for organisational accidents has shortened dramatically from what it was in a rational and stable environment.

The newest paradigm of safety management is resilience, whose most central researcher is Professor Erik Hollnagel⁵. This paradigm emphasises the organisation's tolerance instead of provision for accidents, in other words the development of the organisation's flexibility and “elasticity” in such a way that surprising events are withstood better. This paradigm also relates in other respects to prevailing ideas about the effect of chance and surprise, and the difficulty of preventing accidents (see e.g.
Taleb’s “Black Swan”, 2007).

It can nevertheless be observed that Reason's organisational paradigm was the first paradigm of safety management that started to link safety research closely to organisation research and theories, and thus to detach it from merely technical research approaches. The resilience paradigm continues this trend, as it links up ever more tightly with organisation theories, in particular the decision and system theories included within strategic management. In his latest work, Reason has also approached resilience thinking⁶.

One can conclude that safety/security research has now started to approach the problems that organisation research has been considering for a long time: in other words, activity in a complex and postmodern world, in which complexity, scale-free networks and chance are the key words.

⁵ The most central work is Hollnagel, Woods & Leveson (eds.): Resilience Engineering. Ashgate 2006.

⁶ Reason, James: The Human Contribution. Ashgate 2008.

REFERENCES

Reason, James: Managing the Risks of Organizational Accidents. Ashgate Publishing Company 1997.

Reason, James: The Human Contribution. Ashgate Publishing Company 2008.

Johnston, R.G.: Adversarial Safety Analysis: Borrowing the Methods of Security Vulnerability Assessments. Journal of Safety Research 35 (3), 2004, p. 245–248.

Taleb, Nassim Nicholas: The Black Swan. The Impact of the Highly Improbable. The Random House Publishing Group 2007.

Bernstein, Peter L.: Against the Gods: The Remarkable Story of Risk. John Wiley & Sons, Inc. New York 1996.

Heinrich, H.W. & Petersen, Dan & Roos, Nestor: Industrial Accident Prevention: a Safety Management Approach. 5th ed. McGraw-Hill. New York 1980.

Reason, James: Human Error. University of Manchester, Department of Psychology. Cambridge University Press. New York 1990.