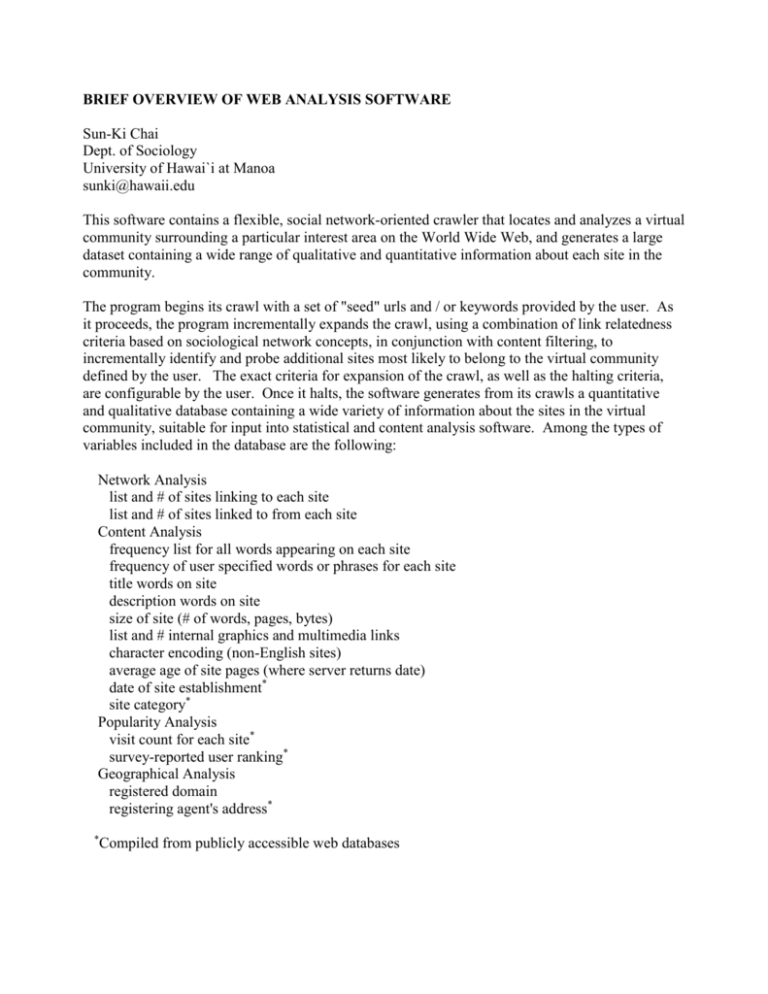

BRIEF OVERVIEW OF WEB ANALYSIS SOFTWARE

advertisement

BRIEF OVERVIEW OF WEB ANALYSIS SOFTWARE Sun-Ki Chai Dept. of Sociology University of Hawai`i at Manoa sunki@hawaii.edu This software contains a flexible, social network-oriented crawler that locates and analyzes a virtual community surrounding a particular interest area on the World Wide Web, and generates a large dataset containing a wide range of qualitative and quantitative information about each site in the community. The program begins its crawl with a set of "seed" urls and / or keywords provided by the user. As it proceeds, the program incrementally expands the crawl, using a combination of link relatedness criteria based on sociological network concepts, in conjunction with content filtering, to incrementally identify and probe additional sites most likely to belong to the virtual community defined by the user. The exact criteria for expansion of the crawl, as well as the halting criteria, are configurable by the user. Once it halts, the software generates from its crawls a quantitative and qualitative database containing a wide variety of information about the sites in the virtual community, suitable for input into statistical and content analysis software. Among the types of variables included in the database are the following: Network Analysis list and # of sites linking to each site list and # of sites linked to from each site Content Analysis frequency list for all words appearing on each site frequency of user specified words or phrases for each site title words on site description words on site size of site (# of words, pages, bytes) list and # internal graphics and multimedia links character encoding (non-English sites) average age of site pages (where server returns date) date of site establishment* site category* Popularity Analysis visit count for each site* survey-reported user ranking* Geographical Analysis registered domain registering agent's address* * Compiled from publicly accessible web databases As mentioned, the software is meant to be extremely versatile, and can be used to address a variety of topics across different disciplines. Here are some examples of possible applications for the data that are generated by the program: (1) Examining how a site's popularity is influenced by its pattern of links to and from other sites within a virtual community. (This issue is examined in the demonstration paper within a set of sites on Korean-American affairs). (2) Using regression analysis to test which types of site content characteristics (subject matter, size, graphic intensity) are most significant in determining a site's popularity. (3) Determining which types of other sites are most likely to link to a given site, and what types of interest groups these sites represent. (4) Determining how closely sites representing two different interest groups are linked to one another (crawling over communities on two different topics, then examining overlap between the final results. The software is motivated by the existing and growing demand for a product that can provide a simple, quick, and inexpensive way for researchers to collect information on the nature of virtual communities and the sites that make them up. Research about the Internet, and particularly the World Wide Web, is perhaps the fastest-growing empirical field of study in the social sciences, and is gaining great prominence in the government policy and business arenas as well. In particular, researchers are anxious to know more information about the characteristics of a community of sites relating to a particular topic, the relationships between sites in such a community, and the type of audience to which such sites appeal. However, one notable thing about existing studies of the social structure of the Web is that few make any use of systematic quantitative or qualitative data, particularly data drawn from the Web itself. This shortage is not due to any lack of interest in systematic analysis within the academic, business, and government communities. Indeed, statistics, content analysis, and other systematic techniques are pervasive throughout each of the aforementioned professions. Nor is problem the inaccessibility of information from which such data could be generated, since one of the notable characteristic of the Web is the fact that much of the information on it is publicly accessible to anyone with an Internet connection. The real problem is the lack of a readily available means for extracting systematic data on virtual communities from available information, and putting in usable form. The current software project seeks to provide a general-purpose solution to this problem.