Video Stabilization Algorithm Structure - ECE


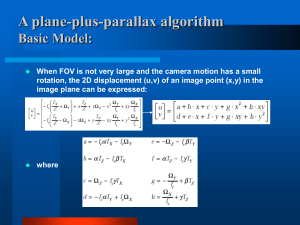

Video Stabilization for Pocket PC Integrated with Wireless Application

Hamed Alaghemand (hamed@glue.umd.edu), Hsiu-huei Yen (tamiyen@glue.umd.edu), Yuan-heng Lo (ylo@glue.umd.edu)

ENEE 408G Group 4 Final Project Report, May 17, 2002
Electrical & Computer Science Engineering, University of Maryland

Abstract

We integrate our design, a video stabilization scheme based on a global motion estimation algorithm, with the portability of the Pocket PC. In this way we can remove the annoying hand-shaking effect and keep the video images stable and clear. The algorithm can therefore be applied to wireless video conferencing on the Pocket PC, without the images being obscured while the user is walking. Users are no longer limited by location and can hold a conference anywhere.

Motivation

The Pocket PC is becoming more and more popular, and people are realizing the convenience its portability brings. However, few applications exist for it. That is why we want to combine its portability with an interesting application. In the near future, you may be able to walk down the street and hold a video conference wirelessly on a Pocket PC. Video conferencing is likely to be one of the most attractive future uses of this device. However, there is a drawback: the hand-shaking effect, which makes the video image annoyingly obscured. In this regard, stabilization plays an important role in video coding and dynamic image processing. We want to use global stabilization techniques (Motion Estimation, Motion Smoother and Motion Correction) to achieve stabilized, high-quality video at a low bit rate on the Pocket PC.

Specification

We assume that the hand-shaking effect consists of translational motion only.
The hardware and software we use and the algorithm we adopt are specified as follows:

Hardware
•Compaq iPAQ Pocket PC H3760 Series
•Creative PC camera with resolution 640x480 pixels

Software
•Embedded Visual C++

Test pattern
•Translational camera motion with resolution 160x160 pixels in RGB format
•Captured frames saved as BMP files
•Frame rate: 10 frames/sec

Algorithm
•Based on Global Stabilization Algorithm: Motion Estimation, Motion Smoother and Motion Correction

Video Stabilization Algorithm Structure

Fig. 1 Block diagram of the stabilization architecture: Input Video Sequence, Motion Estimation, Motion Smoother, Motion Correction, Output Stabilized Video Sequence

Fig. 1 shows the block diagram of our motion stabilization architecture. After reading in the video frame sequence, the motion estimator compares every two consecutive frames, estimates the possible motion, and generates motion vectors. These motion vectors are then sent to the motion smoother, which removes the unwanted motion. Finally, the motion corrector adjusts the current frames according to the smoothed motion vector sequence and outputs the stabilized frames.

(i) Motion Estimation

Motion estimation is used to obtain the motion vectors between consecutive frames. Because of the limitations of the Pocket PC, we adopt a block-matching method with a local 3-step search strategy as a tradeoff between image quality and frame rate. When a frame is received, it is first divided into N x N (here, 10 x 10) blocks, so each block is m x m (16 x 16) pixels. Then we choose the P x P (6 x 6) blocks located in the middle part of the frame and apply the three-step search to them, with search ranges of (16, 8, 4) for the three steps. Fig. 2 illustrates the algorithm. To decide the motion vector for each block, we use the minimum mean absolute distortion (MAD) as the criterion.
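The block-matching step described here can be sketched in C++ as follows. This is a minimal sketch under stated assumptions, not the report's implementation: the `Frame` type (8-bit grayscale rather than RGB), the function names `blockMad` and `threeStepSearch`, and the sign convention for the displacement are all hypothetical. At each step the search evaluates the nine candidates spaced `step` pixels around the current best displacement and keeps the one with the smallest MAD.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <limits>
#include <vector>

// Hypothetical 8-bit grayscale frame (the report stores RGB BMP frames).
struct Frame {
    int width;
    int height;
    std::vector<std::uint8_t> pixels;  // row-major, one byte per pixel
    std::uint8_t at(int x, int y) const { return pixels[y * width + x]; }
};

struct MotionVector { int dx; int dy; };

// Mean absolute distortion between the m x m block at (bx, by) in `cur`
// and the block at (bx + dx, by + dy) in `ref`.
// Returns +infinity when the displaced block leaves the reference frame.
double blockMad(const Frame& cur, const Frame& ref,
                int bx, int by, int m, int dx, int dy) {
    assert(m > 0);
    assert(bx >= 0 && by >= 0 && bx + m <= cur.width && by + m <= cur.height);
    const int rx = bx + dx;
    const int ry = by + dy;
    if (rx < 0 || ry < 0 || rx + m > ref.width || ry + m > ref.height)
        return std::numeric_limits<double>::infinity();
    long sum = 0;
    for (int j = 0; j < m; ++j)
        for (int i = 0; i < m; ++i)
            sum += std::abs(int(cur.at(bx + i, by + j)) - int(ref.at(rx + i, ry + j)));
    return double(sum) / (m * m);
}

// Three-step search: for each step size, test the 3 x 3 grid of candidates
// around the current best displacement and keep the minimum-MAD candidate.
MotionVector threeStepSearch(const Frame& cur, const Frame& ref,
                             int bx, int by, int m,
                             const std::vector<int>& steps) {
    MotionVector best{0, 0};
    double bestMad = blockMad(cur, ref, bx, by, m, 0, 0);
    for (int step : steps) {
        const MotionVector centre = best;
        for (int sy = -1; sy <= 1; ++sy) {
            for (int sx = -1; sx <= 1; ++sx) {
                const int dx = centre.dx + sx * step;
                const int dy = centre.dy + sy * step;
                const double mad = blockMad(cur, ref, bx, by, m, dx, dy);
                if (mad < bestMad) {  // strict: ties keep the earlier candidate
                    bestMad = mad;
                    best = MotionVector{dx, dy};
                }
            }
        }
    }
    return best;
}
```

With the report's parameters, the call would be made once for each of the 6 x 6 central blocks with `m = 16` and `steps = {16, 8, 4}`. Each block then costs at most 1 + 3 x 9 = 28 MAD evaluations, far fewer than an exhaustive search over the same range.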
MAD is the mean, taken over all pixels of a block, of the absolute difference between the block in the current frame and the displaced candidate block in the previous frame.

Fig. 2 Illustration of the 3-step motion estimation method

After obtaining the local motion vector of each block, we have to decide the global motion vector of the frame. We assume that the motion is translational, which means there is no zoom-in, zoom-out or rotation. Thus, as illustrated in Fig. 3a, most of the local motion vectors are the same. From Fig. 3b, we find that the global motion vector can be decided by choosing the median of the local motion vectors.

Fig. 3 (a) Quiver plot of motion vectors (b) Histograms of motion vectors

(ii) Motion Smoother

Since the position of objects in two consecutive frames should not differ greatly, rapid changes in the global motion can be attributed to unintentional movements such as hand shaking. To remove these rapid changes (high-frequency components), we apply a low-pass filter to the global motion vectors. In our algorithm, we use a moving average (MA) filter, given in equation 1. The output Y[n] is the convolution of the input motion vectors x[n] with the MA filter h[n], and N is the length of the MA filter:

$$ Y[n] = \sum_{n'} x[n']\, h[n - n'], \qquad h[n] = \begin{cases} 1/N, & n = 0, 1, \ldots, N-1 \\ 0, & \text{otherwise} \end{cases} \tag{1} $$

(Here, we choose N = 5 for the length of the smoothing filter.)

Fig. 4 shows the original and smoothed motion vectors over a frame sequence.

Fig. 4 Original and smoothed motion vectors

Although N can be any natural number, its choice involves a trade-off. A small N shortens the processing time, but the output frame quality is not as good. A large N gives better image quality, but it sacrifices processing speed and consumes more memory for storing motion vectors.

(iii) Motion Correction

The motion corrector performs motion compensation and constructs the smoothed frames.
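Before the correction step is described in detail, the global-vector selection of step (i) and the moving-average smoothing of step (ii) can be sketched in C++ as follows. This is a minimal sketch, not the report's implementation. Taking the median separately for each component is an assumption, since the report only says "the median of local motion vectors". The names `globalMotion`, `smoothMotion` and `SmoothedVector` are hypothetical. The filter is a direct, causal evaluation of equation 1, with the input treated as zero before the first frame.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct MotionVector { int dx; int dy; };
struct SmoothedVector { double dx; double dy; };

// Median of a non-empty list (the upper median when the size is even).
int median(std::vector<int> values) {
    assert(!values.empty());
    auto mid = values.begin() + static_cast<std::ptrdiff_t>(values.size() / 2);
    std::nth_element(values.begin(), mid, values.end());
    return *mid;
}

// Global motion vector, assumed here to be the component-wise median
// of the local (per-block) motion vectors.
MotionVector globalMotion(const std::vector<MotionVector>& local) {
    std::vector<int> dxs;
    std::vector<int> dys;
    for (const MotionVector& mv : local) {
        dxs.push_back(mv.dx);
        dys.push_back(mv.dy);
    }
    return MotionVector{median(dxs), median(dys)};
}

// Moving-average filter of length N, applied to each component:
// y[n] = (1/N) * sum over k = 0..N-1 of x[n - k], with x[m] = 0 for m < 0.
std::vector<SmoothedVector> smoothMotion(const std::vector<MotionVector>& x,
                                         std::size_t N) {
    assert(N > 0);
    std::vector<SmoothedVector> y(x.size());
    for (std::size_t n = 0; n < x.size(); ++n) {
        double sumDx = 0.0;
        double sumDy = 0.0;
        for (std::size_t k = 0; k < N && k <= n; ++k) {
            sumDx += x[n - k].dx;
            sumDy += x[n - k].dy;
        }
        y[n] = SmoothedVector{sumDx / N, sumDy / N};
    }
    return y;
}
```

Because the input is taken as zero before the first frame, the first N - 1 outputs are scaled down (a start-up transient). Only the last N global motion vectors need to be kept in memory, which is the storage cost that grows with N.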
The corrector constructs smoothed frame 2 by applying smoothed motion vector 1 to original frame 1. Then it constructs smoothed frame 3 by applying smoothed motion vector 2 to smoothed frame 2, and so on. Fig. 5 shows the block diagram of the whole algorithm, with the motion correction process marked by thick lines.

Fig. 5 Block diagram of motion correction: original frames O1 to O5, motion vectors MV1 to MV4, MA filter (smoother), smoothed motion vectors SMV1 to SMV4

From this block diagram, we can see that all of the stabilized frames are constructed from the first original frame and the smoothed motion vectors. This means that if the first original frame is blurred, or an error occurs in any motion vector, the error propagates and corrupts the rest of the output frame sequence. Thus, we must adopt some error propagation control techniques.

The first error propagation control technique we use is a threshold value. Equation 2 shows the concept of the whole process, where u and v are the two components of a motion vector, and the subscripts o and s denote the original and the stabilized (smoothed) vectors.

/* for each frame i */
if

$$ \left| \sum_{j=1}^{i-1} u_{j,o} - \sum_{j=1}^{i-1} u_{j,s} \right|^2 + \left| \sum_{j=1}^{i-1} v_{j,o} - \sum_{j=1}^{i-1} v_{j,s} \right|^2 < \text{threshold}^2 \tag{2} $$

    stabilized frame(i+1) = MC(original frame i, smoothed MV i)
else
    stabilized frame(i+1) = MC(smoothed frame i, smoothed MV i)

For each frame i, if the difference between the accumulated smoothed motion vectors and the accumulated original motion vectors is smaller than the threshold value, we use original frame i and smoothed motion vector i to generate stabilized frame i+1. Since the difference between the stabilized frame and the original frame is small in this case, using the original frame gives more accurate image information. Otherwise, if the difference is larger than the threshold value, we use the stabilized frame to get a better image. The choice of threshold value involves a tradeoff.
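The threshold test of equation 2 can be sketched in C++ as follows. This is a minimal sketch under stated assumptions: the sums are taken over the motion vectors preceding frame i, so frame 1 always uses the original frame, and the names `Vec2`, `Source` and `chooseSources` are hypothetical. The function only decides which frame the motion compensation MC() should be applied to. It does not perform the compensation itself.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec2 { double u; double v; };

enum class Source { OriginalFrame, SmoothedFrame };

// For each frame i (element i - 1 of the result), decide whether stabilized
// frame i + 1 is built from original frame i or from smoothed frame i.
// original[j] and smoothed[j] hold motion vector j + 1 before and after the
// MA filter. The accumulated difference is compared with the threshold.
std::vector<Source> chooseSources(const std::vector<Vec2>& original,
                                  const std::vector<Vec2>& smoothed,
                                  double threshold) {
    assert(original.size() == smoothed.size());
    std::vector<Source> sources;
    double diffU = 0.0;  // sum of (u_o - u_s) over the vectors before frame i
    double diffV = 0.0;  // sum of (v_o - v_s) over the vectors before frame i
    for (std::size_t i = 0; i < original.size(); ++i) {
        const bool closeToOriginal =
            diffU * diffU + diffV * diffV < threshold * threshold;
        sources.push_back(closeToOriginal ? Source::OriginalFrame
                                          : Source::SmoothedFrame);
        // Include motion vector i + 1 before testing the next frame.
        diffU += original[i].u - smoothed[i].u;
        diffV += original[i].v - smoothed[i].v;
    }
    return sources;
}
```

For example, with original vectors (4, 0), (4, 0), (0, 0), smoothed vectors (1, 0), (1, 0), (0, 0) and a threshold of 5, the accumulated difference is 0, 3 and 6 pixels for frames 1, 2 and 3, so the first two frames use the original frame and the third uses the smoothed frame.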
If we choose a small threshold, we get smoother frames, since the difference between the motion vectors often exceeds the threshold. On the other hand, if we choose a large threshold, we get more accurate (lower-error) frames, since the output frames will always be generated from original frames rather than smoothed frames.

Another way to control error propagation is to synchronize the output frame with the original sequence at a fixed period. In our algorithm, we choose a period of 8 frames. Fig. 6 illustrates the concept (with the period set to 5).

Fig. 6 Illustration of the synchronization concept (period set to 5): original frames O1 to O5, motion vectors MV1 to MV4, MA filter (smoother), smoothed motion vectors SMV1 to SMV4

Performance

The quality achieved by our algorithm is good. However, because of the limited CPU processing speed of the Pocket PC, we still have to find ways to reduce the processing time. The reference performance values are as follows:

Frame rate of test pattern
•10 frames/sec

Processing time
Old version:
•Frames reading time: 180 sec
•Frames processing time: 120 sec
•Total processing time: 300 sec for 30 frames (10 sec/frame)
New version:
•Total processing time: 40 sec for 30 frames (about 1.3 sec/frame)

We have two versions here. The old version takes 5 minutes to process 30 frames, while the new version takes only 40 seconds. We improved the reading time by using API functions instead of reading the frames pixel by pixel. In the new version the reading time is almost negligible. The program works on both the desktop PC and the Pocket PC.

Demo

Here are two video sequences: the original one and the one reconstructed by our algorithm. The hand-shaking effect has been removed from the reconstructed video sequence. Analyzing frame by frame, we find that the blur in each frame is eliminated as well.
Fig. 7 (a) The original frame (b) The frame reconstructed by our algorithm

Conclusions & Future Work

We have verified that our global motion estimation algorithm, based on a 3-step search model, removes the hand-shaking effect with good performance for translational video motion. We have also implemented the algorithm successfully with Embedded Visual C++ on a physical Pocket PC device.

In the future, to overcome the constraints of the Pocket PC, we want to speed up our algorithm and achieve dynamic, real-time stabilization. We also want the algorithm to stabilize video under various conditions: not only the translation-only motion we assume now, but also translation with rotation, panning movement and so on.

With a video stabilization algorithm developed for the Pocket PC, we can integrate it with a wireless network to hold conferences wherever you are. However, given the limited transmission bandwidth and processing speed of the Pocket PC, video conferencing requires a much lower bit rate for the video sequences. One approach is to compress the image further and adopt MPEG-7 technology to extract the part of the image we want, i.e. the human face, from the background. Since the background is less important than the face, we do not have to send its information in every frame.

In addition, video stabilization can be used in consumer electronics such as camcorders. The hand-shaking effect is hard to avoid while shooting, but this algorithm can remove it afterwards. This improves video quality and lets people enjoy the multimedia world more.