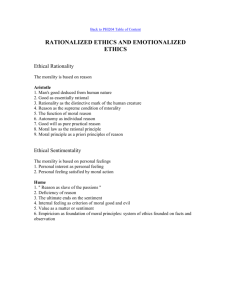

Morality as Intuition and Deliberation and Much More
