Rotation Invariant Iris Texture Verification


Amol D. Rahulkar and R. S. Holambe

Department of Instrumentation and Control Engineering, AISSMS’ Institute of Information Technology, Pune

amolrahulkar_000@yahoo.com

Abstract: An iris recognition system mainly consists of iris segmentation, normalization, feature extraction, and matching. Feature extraction plays a very important role in such a system. This paper presents a rotation-invariant iris texture feature extraction method that uses the DWT and DT-CWT jointly. The proposed approach divides the normalized iris image into six sub-regions, and the combined transform is applied separately to each region to capture information in nine directions. The rotation-invariant feature vector is formed by estimating the energy and standard deviation of each sub-band. The Euclidean distance is used to match the feature vectors of the template and test iris images. The performance of iris recognition with the combined approach on the UBIRIS and MMU1 databases is compared with Gabor, DWT, and DT-CWT in terms of FAR, FRR, and computational complexity. The experiments show that the proposed method improves performance.

Index Terms- Iris recognition, DWT, DT-CWT, Gabor, Biometrics.

I. INTRODUCTION

Biometric authentication is an automated method for verifying an individual, based on the physiological or behavioral characteristics of a human being. Among various biometric traits (fingerprint, signature, face, palm-print, palm-vein, iris, etc.), the iris is found to be the most reliable and accurate for high-security applications, because it is unique, stable, and can be acquired noninvasively. Iris recognition is the process of identifying an individual by analyzing his or her iris pattern. The iris is a muscle within the eye that regulates the size of the pupil. It is the colored portion of the eye, with coloring based on the pigmentation within the muscle. The basic block diagram of an iris recognition system is shown in Fig. 1. The whole system consists of segmentation, normalization, feature extraction, and matching.

Segmentation is the separation of the iris from an eye image. In 1993, Daugman [1], a pioneer of iris recognition, developed an integro-differential operator to localize the inner and outer boundaries of the iris. In Wildes [2], the image intensity information is first converted into a binary edge map, and the edge points then vote to instantiate particular contour parameter values. The edge map is recovered via gradient-based edge detection, and the voting uses the circular Hough transform. Li Ma et al. [3], [4] first project the image in the vertical and horizontal directions to approximately estimate the center coordinates of the pupil. The center coordinates of the iris are then estimated with the help of a threshold, and the Canny edge detector and Hough transform are used to calculate the parameters of the iris. Normalization is used to simplify further processing. Feature extraction is the heart of the iris recognition system; it extracts features from the normalized iris image. Feature extraction methods are generally classified into three main categories: phase-based methods, zero-crossing detection, and texture-based methods. Gabor and Log-Gabor wavelets are used to extract phase information by Daugman [1], Meng and Xu [5], L. Masek [6], Proenca and Alexandre [7], and M. Vatsa et al. [8]. Boles and Boashash [9] and Sanchez-Avila [10] used the DWT to compute a zero-crossing representation at different resolutions of concentric circles. Wildes [2] proposed characterizing iris texture through a Laplacian pyramid with four different resolutions. Other researchers extracted texture features using a bank of spatial filters [3] or the Haar wavelet [11]. The Hamming distance, Euclidean distance, weighted Euclidean distance, and signal correlation are used for matching. A detailed review of the iris recognition literature can be found in [12].

The rest of this paper is organized as follows. Section II provides an overview of the proposed approach. Detailed descriptions of iris preprocessing, feature extraction, the feature vector, and matching are given in Sections III, IV, V, and VI, respectively. Section VII reports the experimental results. Finally, the conclusion is given in Section VIII.

II. OVERVIEW OF PROPOSED APPROACH

We selected two noisy iris databases and observed that the iris consists of irregular characteristics such as freckles, crypts, radial streaks, radial furrows, the collarette, and rings [1]. The verification rate in the presence of noise is improved by dividing the iris into six regions and extracting features from each region using Gabor, DWT, and DT-CWT separately. To improve the performance further, we derive scale- and rotation-invariant features from the combination of DWT and DT-CWT, which extracts information in nine different directions.

Fig. 1. Block diagram of Iris Recognition system.

III. PREPROCESSING


Segmentation: The iris can be segmented by detecting the

limbic boundary (between sclera and iris) and the pupillary

boundary (between pupil and iris). In this paper, we have used

Where

the integro-differential operator [1] as

max G (r ) *

( r , x 0, y 0)

I ( x, y )

ds

r x 0, y 0,r 2r

(5)

x0, y0 specify position in the image, ( , )

specify the effective width and length, and (u 0, v0) specify

the modulation, which has the spatial frequency

(1)

This operator searches over the image domain (x, y) for the

maximum change in the blurred partial derivative with

respective to increasing radius r of the normalized contour

integral of I(x, y) along a circular arc ds of radius r and center

coordinate(x0,y0). Gσ(r) is Gaussian filter and * is convolution

operator.

0 u 0 2 v0 2

. In this work, Gabor is implemented

with 4 scales and 6 orientations.

Normalization : The size of the iris may change due to the

illumination variation caused pupil dilation and constriction.

Such elastic stretching or constriction of trabecular meshwork

of iris affect on the iris recognition accuracy. In order to

achieve accurate recognition we have used the Daughman’s

rubber sheet model [1] where the circular portion of iris ring

is unwrapped in anticlockwise direction into rectangular fixed

block by (2).

I (r , ) I [ x(r , ), y (r , )]

(2)

where,

x(r , ) (1 r ).xp( ) r.xl( )

(3)

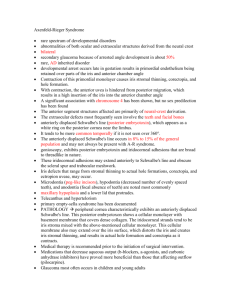

(a)

(b)

(c)

y (r , ) (1 r ). yp( ) r. yl ( )

(4)

xp(θ), yp(θ) referred the pupillary boundary points and xl (θ),

yl (θ) denoted the limbic boundary points. The values of r lies

in [0 1] and θ locates in the range of [0 2π]. Hence, it will

become scale, translation invariant and also subsequent

processing becomes very easy.

(d)

(e)

Enhancements: The normalized image still has low intensity

contrast and may have non uniform brightness caused by the

position of light sources. Li Ma et. al. [3,4] proposed the

method divides into two steps. First is background brightness

estimation and the second is histogram equalization. We used

these two steps to obtain well distributed texture image which

suit very effective for feature extraction. The results are

shown in Fig.2.

(f)

4. FEATURE EXTRACTION

Gabor Transform: Gabor Transform is constructed by the

modulation of Gaussian function with complex sinusoidal

function. It provides the optimum conjoint localization in

space and frequency. It is because sine wave perfectly

localized in frequency, but not localized in space. So

modulation of sine wave with Gaussian provides the

localization in space. The centre frequency of the filter is

specified by the frequency of the sine wave, and the

bandwidth of the filter is specified by the width of the

Gaussian.

(g)

Fig.2. Iris Preprocessing (a).Original eye image. (b) Separated iris

region (c).Original normalized image. (d) Brightness estimation. (e).

Enhanced image.(f), (g)Partitioned image
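The Gabor filter described above, a Gaussian envelope modulating a complex sinusoid, can be sampled on a small grid as in the following minimal sketch. The bank uses 4 scales and 6 orientations as stated in the text, but the kernel size and the frequency and width assigned to each scale are illustrative assumptions, not values from this paper.

```python
import cmath
import math


def gabor_kernel(half_width, alpha, beta, u0, v0):
    """Sample a complex 2-D Gabor function centred at the origin.

    The grid covers x, y in [-half_width, half_width]. alpha and beta set the
    width and length of the Gaussian envelope; (u0, v0) is the modulation.
    """
    kernel = []
    for y in range(-half_width, half_width + 1):
        row = []
        for x in range(-half_width, half_width + 1):
            envelope = math.exp(-math.pi * ((x / alpha) ** 2 + (y / beta) ** 2))
            carrier = cmath.exp(-2j * math.pi * (u0 * x + v0 * y))
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


def gabor_bank(half_width=4, scales=4, orientations=6):
    """Build scales x orientations kernels (parameter schedule is assumed)."""
    bank = []
    for s in range(scales):
        frequency = 0.25 / (2 ** s)   # assumed: halve the frequency per scale
        width = 2.0 * (2 ** s)        # assumed: double the envelope per scale
        for o in range(orientations):
            theta = math.pi * o / orientations
            u0 = frequency * math.cos(theta)
            v0 = frequency * math.sin(theta)
            bank.append(gabor_kernel(half_width, width, width, u0, v0))
    return bank


bank = gabor_bank()
print(len(bank), len(bank[0]), len(bank[0][0]))
```

Each kernel would then be convolved with the normalized iris image (or one of its sub-regions) before features are computed from the filter responses.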

AKGEC JOURNAL OF TECHNOLOGY, vol. 1, no.1

Discrete Wavelet Transform (DWT): The DWT decomposes an image $f(x,y) \in L^2(\mathbb{R}^2)$ into a set of dilations and translations of the wavelet functions $\psi^l(x,y)$ and the scaling function $\phi(x,y)$:

$$f(x,y) = \sum_{k \in Z^2} s_{j_0,k}\,\phi_{j_0,k}(x,y) + \sum_{l} \sum_{j \ge j_0} \sum_{k \in Z^2} w^{l}_{j,k}\,\psi^{l}_{j,k}(x,y) \qquad (6)$$

where $\phi_{j_0,k}(x,y) = 2^{j_0}\,\phi(2^{j_0}(x,y) - k)$, $\psi^{l}_{j,k}(x,y) = 2^{j}\,\psi^{l}(2^{j}(x,y) - k)$, and $l \in \{0^\circ, 90^\circ, \pm 45^\circ\}$.

The standard DWT is implemented with appropriately designed quadrature mirror filters (QMF). These consist of low-pass coefficients g[n] and high-pass coefficients h[n], where $n = 0, \ldots, L$. The two impulse responses are related to the scaling and wavelet functions as:

$$\phi(x) = \sqrt{2} \sum_{n \in Z} g[n]\,\phi(2x - n) \qquad (7)$$

$$\psi(x) = \sqrt{2} \sum_{n \in Z} h[n]\,\phi(2x - n) \qquad (8)$$

The products of the above 1-D wavelet and scaling functions give the 2-D scaling and wavelet functions in (9)-(12):

$$\phi(i,j) = \phi(i)\,\phi(j) \qquad (9)$$

$$\psi^{1}(i,j) = \phi(i)\,\psi(j) \qquad (10)$$

$$\psi^{2}(i,j) = \psi(i)\,\phi(j) \qquad (11)$$

$$\psi^{3}(i,j) = \psi(i)\,\psi(j) \qquad (12)$$

Equation (9) gives the approximation coefficients (LL), and the remaining three give the detail coefficients (LH, HL, HH). The LH, HL, and HH sub-bands give information in the horizontal, vertical, and diagonal directions, respectively. Thus, the filters divide the input image into four non-overlapping multi-resolution sub-bands LL1, LH1, HL1, and HH1. The sub-band LL1 represents the coarse-scale DWT coefficients, while the sub-bands LH1, HL1, and HH1 represent the fine-scale DWT coefficients. To obtain the next coarser scale of wavelet coefficients, the sub-band LL1 is further decomposed into four sub-bands, as shown in Fig. 3(a).

Fig. 3(a). Sub-band decomposition of real DWT

Dual Tree-Complex Wavelet Transform (DT-CWT): The real DWT suffers from poor directional selectivity, lack of phase invariance, and lack of shift invariance. These problems can be solved by using the complex wavelet transform, which introduces limited redundancy [15]. In this transform, two parallel fully decimated trees with real filter coefficients are used to achieve perfect reconstruction (PR) and good frequency response characteristics, as shown in Fig. 3(b). The DT-CWT decomposes a signal f(x) in terms of complex shifted and dilated mother wavelets $\psi(x)$ and scaling functions $\phi(x)$:

$$f(x) = \sum_{l \in Z} s_{j_0,l}\,\phi_{j_0,l}(x) + \sum_{j \ge j_0} \sum_{l \in Z} c_{j,l}\,\psi_{j,l}(x) \qquad (13)$$

where $s_{j_0,l}$ is the scaling coefficient and $c_{j,l}$ is the complex wavelet coefficient, with $\phi_{j_0,l}$ and $\psi_{j,l}$ complex as

$$\phi_{j_0,l} = \phi^{r}_{j_0,l} + \sqrt{-1}\,\phi^{i}_{j_0,l}; \qquad \psi_{j,l} = \psi^{r}_{j,l} + \sqrt{-1}\,\psi^{i}_{j,l} \qquad (14)$$

The DT-CWT gives information in six directions, $\{+75^\circ, +45^\circ, +15^\circ, -15^\circ, -45^\circ, -75^\circ\}$.

Fig. 3(b). 1-D DT-CWT

It is always desirable that an iris recognition system be scale, translation, and rotation invariant. Our system achieves scale and translation invariance by normalizing the iris ring into a rectangle of fixed size. Rotation invariance is achieved by unwrapping the iris ring at five different initial angles: -5°, -10°, 0°, 5°, and 10°. We thus create five templates, one per rotation angle, for each iris.

V. FEATURE VECTOR

In this work, each iris image is decomposed up to the fourth level. The energy (using the L1 norm) and the standard deviation [16] of each sub-band are computed as the texture features from (15) and (16):

$$E_k = \frac{1}{M \times N} \sum_{i=1}^{M} \sum_{j=1}^{N} \left| c_k(i,j) \right| \qquad (15)$$

$$\sigma_k = \left[ \frac{1}{M \times N} \sum_{i=1}^{M} \sum_{j=1}^{N} \left( c_k(i,j) - \mu_k \right)^2 \right]^{1/2} \qquad (16)$$

where $E_k$ and $\sigma_k$ are the energy and standard deviation of the kth sub-band of dimension M × N with coefficients $c_k(i,j)$, and $\mu_k$ is the mean of the kth sub-band.

Hence, at each scale and direction, energy and standard deviation based features are computed, and the resultant feature vector is given by (17):

$$f = \left[ f_{E_1}, f_{\sigma_1}, f_{E_2}, f_{\sigma_2}, f_{E_3}, f_{\sigma_3}, \ldots \right] \qquad (17)$$

Each iris image is decomposed using Gabor, DWT, and DT-CWT. Thus four different sets of feature vectors are derived: set1 using the Gabor transform, set2 using DWT, set3 using DT-CWT, and set4 using the combination of DWT and DT-CWT.
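The energy and standard deviation features of (15)-(17) can be sketched as below. To stay dependency-free, this sketch swaps in a one-level Haar filter pair for the db3 wavelet used in the paper and covers only the real DWT branch; the function names and the sub-band naming (first letter for the row filter, second for the column filter) are assumptions for illustration.

```python
import math


def haar_dwt2(image):
    """One level of a 2-D Haar DWT on a list-of-lists with even dimensions.

    Returns the sub-bands (LL, LH, HL, HH), each half the input size.
    """
    s = 1 / math.sqrt(2)
    half_cols = len(image[0]) // 2
    # Filter along each row: low-pass is a scaled sum, high-pass a difference.
    low = [[s * (r[2 * j] + r[2 * j + 1]) for j in range(half_cols)] for r in image]
    high = [[s * (r[2 * j] - r[2 * j + 1]) for j in range(half_cols)] for r in image]

    def filter_columns(rows, sign):
        return [[s * (rows[2 * i][j] + sign * rows[2 * i + 1][j])
                 for j in range(len(rows[0]))]
                for i in range(len(rows) // 2)]

    return (filter_columns(low, +1), filter_columns(low, -1),
            filter_columns(high, +1), filter_columns(high, -1))


def energy_and_std(band):
    """Energy (mean absolute value, eq. 15) and standard deviation (eq. 16)."""
    coeffs = [c for row in band for c in row]
    n = len(coeffs)
    energy = sum(abs(c) for c in coeffs) / n
    mean = sum(coeffs) / n
    std = math.sqrt(sum((c - mean) ** 2 for c in coeffs) / n)
    return energy, std


def feature_vector(image, levels=4):
    """Concatenate (E_k, sigma_k) of every detail sub-band over all levels."""
    features = []
    approx = image
    for _ in range(levels):
        approx, lh, hl, hh = haar_dwt2(approx)
        for band in (lh, hl, hh):
            features.extend(energy_and_std(band))
    return features


print(feature_vector([[1, 2], [3, 4]], levels=1))
```

With four levels the image dimensions must be divisible by 16, and the real DWT branch alone contributes 4 × 3 × 2 = 24 features per region. For complex DT-CWT coefficients, the magnitude would be the natural input to the same two statistics.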

VI. MATCHING

The Euclidean distance (18) is used as the classifier: the feature vector of an unknown test iris is compared with a set of known iris feature vectors.

$$ED = \left[ \sum_{i=1}^{N} \left( E_i - T_i \right)^2 \right]^{1/2} \qquad (18)$$

where N is the dimension of the feature vector, $E_i$ is the ith component of the unknown iris feature vector, and $T_i$ is the ith component of the known iris feature vector.
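The matching step in (18) can be sketched as follows. Taking the minimum distance over the enrolled templates follows the description in the experimental section; the acceptance threshold and the function names are assumptions added for illustration.

```python
import math


def euclidean_distance(test, template):
    """Euclidean distance between two feature vectors of equal length."""
    if len(test) != len(template):
        raise ValueError("feature vectors must have the same dimension")
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(test, template)))


def match_score(test, enrolled_templates):
    """Smallest distance between the test vector and any enrolled template."""
    return min(euclidean_distance(test, t) for t in enrolled_templates)


def verify(test, enrolled_templates, threshold):
    """Accept the claimed identity if the best score is within the threshold."""
    return match_score(test, enrolled_templates) <= threshold


templates = [[3.0, 4.0], [1.0, 0.0]]
print(match_score([0.0, 0.0], templates))
```

Because each enrolled iris is stored at several initial unwrapping angles, taking the minimum over the templates is what lets a rotated test image still match its own class.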

VII. EXPERIMENTAL RESULTS

The approach is implemented in MATLAB 7.0 on an Intel dual-core P4 processor at 2.0 GHz with 1 GB RAM. To evaluate the performance of the proposed scheme, we selected two databases, UBIRIS [13] and MMU1 [14]. The UBIRIS database consists of 1877 iris images from 241 persons, captured in two different sessions using a Nikon E5700 camera. The images in this dataset have artifacts in the form of reflection, contrast, natural luminosity, focus, and eyelid/eyelash obstructions. The MMU1 database contains 45 subjects with 5 images of the left eye and 5 images of the right eye each, giving 450 iris images from 90 classes. The images in this database were captured with an LG IrisAccess camera and contain, almost exclusively, iris obstructions by eyelids/eyelashes and specular reflections. To make the comparison between the two databases convenient, we randomly selected 90 subjects from the UBIRIS dataset, covering session 1 and session 2, with 5 images per person. The images selected from the UBIRIS dataset for our work were mostly captured under non-ideal environmental conditions.

Daugman's integro-differential operator is used to segment the iris. Daugman's rubber sheet model is then used to normalize the iris ring into a rectangle of fixed size 64×360 with five initial angles (-5°, -10°, 0°, 5°, 10°) to achieve scale, translation, and rotation invariance. Thus, two images, each at five different angles, are enrolled in the database for each person. Because the aim was a robust test under a noisy environment, we partitioned the normalized images into six sub-regions, as shown in Fig. 2(f) and (g).
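The normalization and enrolment steps in this paragraph can be sketched as below. This is a minimal sketch that assumes circular pupillary and limbic boundaries, nearest-neighbour sampling, and a 2 × 3 grid for the six sub-regions; the actual partition is the one shown in Fig. 2(f) and (g), and all function names are hypothetical.

```python
import math


def rubber_sheet(image, pupil, limbus, start_deg=0.0, radial=64, angular=360):
    """Unwrap the iris ring into a radial x angular rectangle.

    image is a list of rows of grey levels; pupil and limbus are
    (x_centre, y_centre, radius) circles. start_deg is the initial angle.
    """
    xp0, yp0, rp = pupil
    xl0, yl0, rl = limbus
    height, width = len(image), len(image[0])
    out = []
    for i in range(radial):
        r = i / (radial - 1) if radial > 1 else 0.0
        row = []
        for k in range(angular):
            theta = math.radians(start_deg) + 2 * math.pi * k / angular
            # Image rows grow downwards, so subtracting sin() runs anticlockwise.
            xp, yp = xp0 + rp * math.cos(theta), yp0 - rp * math.sin(theta)
            xl, yl = xl0 + rl * math.cos(theta), yl0 - rl * math.sin(theta)
            x = (1 - r) * xp + r * xl
            y = (1 - r) * yp + r * yl
            col = min(max(int(round(x)), 0), width - 1)
            line = min(max(int(round(y)), 0), height - 1)
            row.append(image[line][col])
        out.append(row)
    return out


def rotation_templates(image, pupil, limbus, angles=(-10, -5, 0, 5, 10), **kwargs):
    """One normalized template per initial unwrapping angle (in degrees)."""
    return {a: rubber_sheet(image, pupil, limbus, start_deg=a, **kwargs)
            for a in angles}


def partition(normalized, rows=2, cols=3):
    """Split a normalized image into rows x cols sub-regions (assumed layout)."""
    rh, cw = len(normalized) // rows, len(normalized[0]) // cols
    return [[line[c * cw:(c + 1) * cw] for line in normalized[r * rh:(r + 1) * rh]]
            for r in range(rows) for c in range(cols)]


eye = [[x for x in range(41)] for _ in range(41)]
strip = rubber_sheet(eye, (20, 20, 5), (20, 20, 15), radial=4, angular=8)
print(strip[0][0], strip[3][0])
```

With the default 64 × 360 output, the assumed 2 × 3 grid yields six regions of 32 × 120 pixels each.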

Each sub-region is decomposed using Gabor, DWT (db3), DT-CWT, and DWT with DT-CWT jointly, up to four levels. The local discriminating feature vectors are derived for each region by estimating the energy and standard deviation of each sub-band. The same process is repeated for the test iris. The test iris vector is compared with the 10 enrolled iris feature vectors, and the minimum score is selected. The total numbers of inter-class and intra-class comparisons on each sub-region are 200250 and 4500, respectively. The same experiment is carried out on the whole normalized iris image to extract the global features. Thus, we performed eight experiments in total. The performance is measured in terms of FAR, FRR, and accuracy. Overall, Tables 1, 2, and 3 show that the combination of DWT with DT-CWT performs better than DWT, DT-CWT, or Gabor used individually. This is because Gabor is highly redundant, DWT suffers from limited directionality, lack of shift invariance, and lack of phase invariance, and DT-CWT extracts information in only six directions. The combination of DWT and DT-CWT overcomes these individual limitations and extracts information in nine directions, $\{0^\circ, +15^\circ, -15^\circ, +75^\circ, -75^\circ, +45^\circ, -45^\circ, \pm 45^\circ, 90^\circ\}$, with limited computational complexity.
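The error rates used in this section can be computed from the intra-class and inter-class distance scores as in the following minimal sketch. The threshold and the example scores are illustrative assumptions. The accuracy formula, 100 − (FAR + FRR)/2, is not stated in the text; it is inferred here because it reproduces the tabulated values (for example FAR 6.56 and FRR 6.67 give 93.385).

```python
def far_frr(genuine, impostor, threshold):
    """Return (FAR, FRR) in percent for distance scores at a given threshold.

    genuine holds intra-class distances, impostor holds inter-class distances.
    A comparison is accepted when its distance is at or below the threshold.
    """
    false_rejects = sum(1 for d in genuine if d > threshold)
    false_accepts = sum(1 for d in impostor if d <= threshold)
    far = 100.0 * false_accepts / len(impostor)
    frr = 100.0 * false_rejects / len(genuine)
    return far, frr


def accuracy(far, frr):
    """Accuracy in percent, assuming the relation 100 - (FAR + FRR) / 2."""
    return 100.0 - (far + frr) / 2.0


far, frr = far_frr([0.1, 0.2, 0.9], [0.5, 0.8, 1.2, 1.5], threshold=0.6)
print(far, frr, accuracy(far, frr))
```

Sweeping the threshold over the range of observed scores and plotting FAR against FRR gives an ROC curve of the kind shown in Fig. 6.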

7.1 Results

TABLE 1. Comparison of the Computational Complexity

| Method | Feature extraction (ms) |
|---|---|
| Gabor Transform | 848 |
| DWT | 33 |
| DT-CWT | 97 |
| DWT+DT-CWT | 186 |

TABLE 2. Comparison for UBIRIS Database

| Features | Method | FAR (%) | FRR (%) | Accuracy (%) |
|---|---|---|---|---|
| Global | DWT | 8.44 | 8.49 | 91.53 |
| Global | DT-CWT | 6.56 | 6.67 | 93.385 |
| Global | DWT+DT-CWT (proposed) | 6.62 | 6.44 | 93.47 |
| Global | Gabor | 7.00 | 7.11 | 92.95 |
| Proposed (local) | DWT | 3.91 | 4.00 | 96.04 |
| Proposed (local) | DT-CWT | 3.54 | 3.56 | 96.45 |
| Proposed (local) | DWT+DT-CWT (proposed) | 2.45 | 3.56 | 96.995 |
| Proposed (local) | Gabor | 2.40 | 2.44 | 97.77 |

TABLE 3. Comparison for MMU1 Database

| Features | Method | FAR (%) | FRR (%) | Accuracy (%) |
|---|---|---|---|---|
| Global | DWT | 16.01 | 14.44 | 84.77 |
| Global | DT-CWT | 16.31 | 16.89 | 83.40 |
| Global | DWT+DT-CWT (proposed) | 15.21 | 15.31 | 84.74 |
| Global | Gabor | 15.66 | 15.66 | 84.34 |
| Proposed (local) | DWT | 7.25 | 7.33 | 92.71 |
| Proposed (local) | DT-CWT | 6.77 | 6.89 | 93.17 |
| Proposed (local) | DWT+DT-CWT (proposed) | 6.18 | 6.89 | 93.46 |
| Proposed (local) | Gabor | 9.11 | 9.33 | 90.78 |

Fig. 6(a). ROC plot showing the performance of the algorithms on the UBIRIS database

Fig. 6(b). ROC plot showing the performance of the algorithms on the MMU1 database

VIII. CONCLUSION

In this paper, we have presented an effective approach for iris feature extraction. The method is based on the decomposition of an iris image using DWT (db3) and DT-CWT jointly, which extracts information in nine orientations at limited additional computational cost. Furthermore, the proposed method divides the image into six sub-images and derives local features separately from each region. The experimental analysis shows that, relative to global features, the suggested method reduced the FAR and FRR by 62.99% and 44.72%, respectively, on the UBIRIS database and by 59.36% and 54.99%, respectively, on the MMU1 database. It is also observed that the proposed method slightly improved the performance over the individual transforms. The proposed method is scale, translation, and rotation invariant and also performs well in the presence of noise, since no noise elimination technique was applied during iris segmentation. The feature extraction time of the proposed method (186 ms) is also about 4.5 times lower than that of the Gabor transform (848 ms), which makes it better suited to real-time applications. For future work, the HH sub-band of the DWT should be removed from DWT+DT-CWT to overcome the redundancy while still capturing information in a number of directions, so as to improve the FAR and mainly the FRR.

ACKNOWLEDGEMENT

The authors would like to acknowledge Dr. Nick Kingsbury, who helped us by providing material on the DT-CWT. We take this opportunity to express our gratitude to Dr. P. B. Mane, Principal, AISSMS IOIT, Pune (MS), India, for his constant encouragement and support.

IX. REFERENCES

[1]. John G. Daugman, "High confidence visual recognition of persons by a test of statistical independence", IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 15, no. 11, November 1993.

[2]. R. P. Wildes, "Iris Recognition: An Emerging Biometric Technology", Proceedings of the IEEE, vol. 85, no. 9, September 1997.

[3]. Li Ma, Tieniu Tan, Yunhong Wang, Dexin Zhang, "Personal Identification Based on Iris Texture Analysis", IEEE Trans. on Pattern Analysis and Machine Intelligence, vol. 25, no. 12, December 2003.

[4]. Li Ma, Tieniu Tan, Yunhong Wang, Dexin Zhang, "Efficient Iris Recognition by Characterizing Key Local Variations", IEEE Trans. on Image Processing, vol. 13, no. 6, June 2004.

[5]. Hao Meng and Cuiping Xu, "Iris Recognition Algorithm Based on Gabor Wavelet Transform", Proc. of the 2006 IEEE International Conference on Mechatronics and Automation, June 25-28, 2006, Luoyang, China.

[6]. L. Masek, "Recognition of Human Iris Patterns for Biometric Identification", M. thesis, The University of Western Australia, 2003.

[7]. Hugo Proenca and Luis A. Alexandre, "Toward Noncooperative Iris Recognition: A Classification Approach Using Multiple Signatures", IEEE Trans. on Pattern Analysis and Machine Intelligence, Special Issue on Biometrics, July 2007, vol. 9, no. 4, pp. 607-612.

[8]. Mayank Vatsa, Richa Singh, Afzel Noore, "Improving Iris Recognition Performance Using Segmentation, Quality Enhancement, Match Score Fusion, and Indexing", IEEE Trans. on Systems, Man, and Cybernetics, Part B: Cybernetics, vol. 38, no. 4, August 2008.

[9]. W. W. Boles and B. Boashash, "A Human Identification Technique Using Images of the Iris and Wavelet Transform", IEEE Trans. on Signal Processing, vol. 46, no. 4, April 1998.

[10]. C. Sanchez-Avila, "Iris-Based Biometric Recognition Using Dyadic Wavelet Transform", IEEE AESS Systems Magazine, pp. 3-6, October 2002.

[11]. Shinyoung Lim, Kwanyong Lee, Okhwan Byeon, Taiyun Kim, "Efficient Iris Recognition through Improvement of Feature Vector and Classifier", ETRI Journal, vol. 3, no. 2, June 2001.


[12]. Kevin W. Bowyer, Karen Hollingsworth, and Patrick J. Flynn, "Image Understanding for Iris Biometrics: A Survey", Computer Vision and Image Understanding, 110(2), 281-307, May 2008.

[13]. [Online] www.iris.di.ubi.pt.

[14]. [Online] Multimedia University iris database, http://www.pesona.mmu.edu.my/~ccteo/

[15]. Ivan W. Selesnick, R. G. Baraniuk, Nick G. Kingsbury, "The Dual-Tree Complex Wavelet Transform", IEEE Signal Processing Magazine, [123], November 2005.

[16]. Manesh Kokare, P. K. Biswas, B. N. Chatterji, "Rotation-Invariant Texture Image Retrieval Using Rotated Complex Wavelet Filters", IEEE Trans. on Systems, Man, and Cybernetics, Part B: Cybernetics, vol. 36, no. 6, December 2006.

Amol D. Rahulkar is currently working as Assistant Professor in the Department of Instrumentation and Control Engineering, AISSMS’ Institute of Information Technology, Pune, affiliated to the University of Pune. He is working towards his Ph.D. at S.G.G.S.I.E. & T., SRTMU, Nanded. He received his B.E. from the same institute and M. Tech. from IIT, Kharagpur. He has seven years of experience in teaching undergraduate students. His research interests are in the areas of digital image processing, biometrics, and reliability engineering.