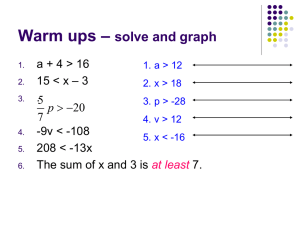

Inequalities by Amanda Wicks


An inequality is defined as "a statement of relative size or order of two objects" [1]. Inequalities arose from the question of what happens when two objects or expressions are not equal to each other, and people use inequalities to measure or compare two objects. In mathematics, inequalities are used to bound quantities that are difficult to express exactly in a formula. An inequality usually has a set of solutions rather than a single solution. There are two main domains of inequalities: the first is connected to the shape of a triangle, while the second is associated with means. We will examine the first domain, related to the triangle, first. Although the triangle inequality was probably known centuries earlier, the person credited with proving it is Euclid. Euclid of Alexandria, as he was known in his time so as not to be confused with the philosopher Euclid of Megara, was "the most prominent mathematician of antiquity best known for his treatise on mathematics The Elements" [2]. Because little is known about Euclid, many assumptions are made about his life and work. It is thought that he lived during the time of the first Ptolemy, in Alexandria, Egypt. One such assumption is that he must have been a pupil at Plato's Academy in Athens, given his extensive knowledge of the geometry of Eudoxus and Theaetetus. Although The Elements is Euclid's most notable work, his other works include Data, On Divisions, Optics, and Phaenomena. The following works of Euclid's have all been lost: Conics, Book of Fallacies, Surface Loci, Porisms, and Elements of Music. Euclid's most famous work, The Elements, consists of thirteen books. Its importance may be attributed to the fact that it is the first book to create an axiomatic system.
The logical reasoning demonstrated in The Elements was the first of its kind and was later extremely influential in other areas such as science. The Elements is regarded as one of the most successful textbooks ever written, with a number of editions in print second only to the Bible. Euclid begins the work with definitions and five postulates. It is thought that Euclid drew on earlier sources when writing The Elements, because he introduces numerous definitions that are never used afterwards, such as oblong, rhomboid, and rhombus. The subject of Books 1 through 6 is plane geometry. The topics of plane geometry discussed in Books 1 and 2 are "basic properties of triangles, parallels, parallelograms, rectangles, and squares" [2]. The remaining subject matter of Books 3 through 13 includes properties of circles, number theory, the Euclidean algorithm for computing the greatest common divisor of two numbers, irrational numbers, and three-dimensional geometry. One of the greatest attributes of The Elements is how clearly Euclid states the theorems and their proofs.

The triangle inequality is discussed and proved under Proposition 20 in Book 1 of The Elements. The following is a recreation by David Joyce, a Professor of Mathematics at Clark University, of the triangle inequality as it appears in Euclid's The Elements [3].

[Figure: triangle ABC, with side BA extended through A to a point D.]

In any triangle the sum of any two sides is greater than the remaining one. Let ABC be a triangle. We say that in the triangle ABC the sum of any two sides is greater than the remaining one, that is, the sum of BA and AC is greater than BC, the sum of AB and BC is greater than AC, and the sum of BC and CA is greater than AB. Extend BA through A to a point D such that DA equals CA, and join DC. Since DA equals AC, the angle ADC also equals the angle ACD. Since the angle ACD is part of the angle BCD, the angle BCD is greater than the angle ADC.
Since DCB is a triangle having the angle BCD greater than the angle BDC (which is the same angle as ADC, because D, A, and B lie on one line), and the side opposite the greater angle is greater, DB is greater than BC. But DA equals AC, so DB equals the sum of BA and AC; therefore the sum of BA and AC is greater than BC. Similarly we can prove that the sum of AB and BC is also greater than CA, and the sum of BC and CA is greater than AB. Therefore in any triangle the sum of any two sides is greater than the remaining one.

There are several proofs of the triangle inequality, which we will progress through during this paper. The first one to be discussed is the proof of the triangle inequality for real numbers.

Triangle Inequality for Real Numbers

For any $a, b \in \mathbb{R}$, $|a+b| \le |a| + |b|$.

We know that $|ab| = |a||b|$ because of these four cases:
1. $a \ge 0,\ b \ge 0$. Then $|ab| = ab = |a||b|$.
2. $a \ge 0,\ b < 0$. Then $|ab| = -(ab) = a(-b) = |a||b|$.
3. $a < 0,\ b \ge 0$. Then $|ab| = -(ab) = (-a)b = |a||b|$.
4. $a < 0,\ b < 0$. Then $|ab| = ab = (-a)(-b) = |a||b|$.

Since $ab \le |ab| = |a||b|$, we have $2ab \le 2|a||b|$. Thus
$$a^2 + 2ab + b^2 \le a^2 + 2|a||b| + b^2.$$
Because $a^2 = |a|^2$ (and likewise $b^2 = |b|^2$), this becomes
$$a^2 + 2ab + b^2 \le |a|^2 + 2|a||b| + |b|^2,$$
which can be written as $(a+b)^2 \le (|a| + |b|)^2$.

We can say that $|a| = \sqrt{a^2}$ because $|a|^2 = a^2$, and taking the positive square root gives $\sqrt{|a|^2} = \sqrt{a^2}$; since $|a| \ge 0$, $\sqrt{|a|^2} = |a|$, so $|a| = \sqrt{a^2}$. Both sides of $(a+b)^2 \le (|a| + |b|)^2$ are nonnegative, so taking square roots preserves the inequality, and again using $\sqrt{x^2} = |x|$ gives $|a+b| \le |a| + |b|$. This proves the triangle inequality for real numbers.

From real numbers, the natural progression is to move on to the proof of the triangle inequality for complex numbers.
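Before moving on, the facts used in the real-number proof can be spot-checked numerically. The sketch below is a minimal sanity check, not a proof: it samples random pairs $(a, b)$ covering all four sign cases and tests $|ab| = |a||b|$, $|a| = \sqrt{a^2}$, the squared form $(a+b)^2 \le (|a|+|b|)^2$, and the inequality itself. The function name `check_real_triangle` and its sampling range are hypothetical choices for illustration.

```python
import math
import random


def check_real_triangle(trials: int = 10_000, seed: int = 0) -> bool:
    """Spot-check the steps of the real-number triangle inequality proof.

    Samples random pairs (a, b) in [-100, 100], so all four sign cases
    occur; this is a floating-point sanity check, not a proof.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        a = rng.uniform(-100.0, 100.0)
        b = rng.uniform(-100.0, 100.0)
        # |ab| = |a||b|, the identity established by the four sign cases
        if not math.isclose(abs(a * b), abs(a) * abs(b)):
            return False
        # |a| = sqrt(a^2), used when taking square roots at the end
        if not math.isclose(abs(a), math.sqrt(a * a)):
            return False
        # (a + b)^2 <= (|a| + |b|)^2, the squared form of the inequality
        if (a + b) ** 2 > (abs(a) + abs(b)) ** 2:
            return False
        # |a + b| <= |a| + |b|, the triangle inequality itself
        if abs(a + b) > abs(a) + abs(b):
            return False
    return True


print(check_real_triangle())  # True
```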
The proof of the triangle inequality for complex numbers is as follows.

Triangle Inequality for Complex Numbers

The triangle inequality states that if $z$ and $w$ are complex numbers, then $|z+w| \le |z| + |w|$.

First, $|z+w|^2 = (z+w)\overline{(z+w)}$, because $z\bar{z} = |z|^2$ for every complex number $z$: let $z = a + bi$; then $\bar{z} = a - bi$, so $z\bar{z} = (a+bi)(a-bi) = a^2 + b^2$. But $|z| = \sqrt{a^2+b^2}$, so $|z|^2 = a^2 + b^2 = z\bar{z}$.

Next, $\overline{z+w} = \bar{z} + \bar{w}$: let $z = a + bi$ and $w = c + di$. Then $z + w = (a+c) + i(b+d)$, so $\overline{z+w} = (a+c) - i(b+d) = (a - bi) + (c - di) = \bar{z} + \bar{w}$.

Substituting and expanding (FOIL),
$$|z+w|^2 = (z+w)(\bar{z}+\bar{w}) = z\bar{z} + z\bar{w} + w\bar{z} + w\bar{w} = |z|^2 + z\bar{w} + \overline{z\bar{w}} + |w|^2 = |z|^2 + 2\,\mathrm{Re}(z\bar{w}) + |w|^2.$$
Here $w\bar{z} = \overline{z\bar{w}}$, and the last step uses $u + \bar{u} = 2\,\mathrm{Re}(u)$: if $u = a + bi$, then $u + \bar{u} = (a+bi) + (a-bi) = 2a = 2\,\mathrm{Re}(u)$.

Because $\mathrm{Re}(u) \le |u|$ for all $u \in \mathbb{C}$ (since $a \le \sqrt{a^2+b^2}$),
$$|z+w|^2 \le |z|^2 + 2|z\bar{w}| + |w|^2.$$
The term $|z\bar{w}|$ can be rewritten as $|z||w|$ because
$$|z\bar{w}|^2 = (z\bar{w})\overline{(z\bar{w})} = z\bar{w}\,\bar{z}w = (z\bar{z})(w\bar{w}) = |z|^2|w|^2,$$
and taking the square root of each side gives $|z\bar{w}| = |z||w|$. (Equivalently, $|\bar{w}| = |w|$: if $w = c + di$, then $\bar{w} = c - di$ and $|\bar{w}| = \sqrt{c^2 + (-d)^2} = \sqrt{c^2 + d^2} = |w|$.) Therefore
$$|z+w|^2 \le |z|^2 + 2|z||w| + |w|^2 = (|z| + |w|)^2.$$
Since $|z+w| \ge 0$ and $|z| + |w| \ge 0$, taking the square root of both sides yields $|z+w| \le |z| + |w|$. (All of the above information came from the Heriot-Watt University Scholar Math website [4].)

Both of these proofs are very similar. Each relies on the fact that the absolute value of a number, whether real or complex, is greater than or equal to the number itself (for a complex number, greater than or equal to its real part). However, the complex-number proof is a little more involved because one must also know and understand the properties of complex numbers and their conjugates.

So far we have discussed the triangle inequality only for single numbers. Now we will look at the triangle inequality in $\mathbb{R}^n$. In $\mathbb{R}^n$, the triangle inequality is closely tied to the Cauchy-Schwarz inequality, named after two famous mathematicians, Augustin Louis Baron de Cauchy and Hermann Amandus Schwarz.
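Returning briefly to the complex-number proof: each of its steps can be spot-checked with Python's built-in `complex` type, whose `conjugate()` method and `abs()` give $\bar{z}$ and $|z|$. This is a minimal sketch, assuming a floating-point tolerance of `1e-9`; the function name `spot_check_complex` is hypothetical, and passing the check illustrates the identities rather than proving them.

```python
import cmath
import math
import random


def spot_check_complex(trials: int = 10_000, seed: int = 1) -> bool:
    """Numerically spot-check each step of the complex-number proof.

    Equalities are compared with a tolerance because of floating-point
    rounding; this is a sanity check, not a proof.
    """
    rng = random.Random(seed)
    tol = 1e-9
    for _ in range(trials):
        z = complex(rng.uniform(-10, 10), rng.uniform(-10, 10))
        w = complex(rng.uniform(-10, 10), rng.uniform(-10, 10))
        zc, wc = z.conjugate(), w.conjugate()
        # z * conj(z) = a^2 + b^2 = |z|^2
        if not math.isclose((z * zc).real, abs(z) ** 2, rel_tol=tol):
            return False
        # conj(z + w) = conj(z) + conj(w)
        if not cmath.isclose((z + w).conjugate(), zc + wc, abs_tol=tol):
            return False
        # z + conj(z) = 2 Re(z)
        if not cmath.isclose(z + zc, 2 * z.real, abs_tol=tol):
            return False
        # Re(z) <= |z|
        if z.real > abs(z) + tol:
            return False
        # |z + w|^2 = |z|^2 + 2 Re(z conj(w)) + |w|^2
        lhs = abs(z + w) ** 2
        expanded = abs(z) ** 2 + 2 * (z * wc).real + abs(w) ** 2
        if not math.isclose(lhs, expanded, rel_tol=tol, abs_tol=tol):
            return False
        # |z conj(w)| = |z||w|
        if not math.isclose(abs(z * wc), abs(z) * abs(w), rel_tol=tol, abs_tol=tol):
            return False
        # |z + w|^2 <= (|z| + |w|)^2 and |z + w| <= |z| + |w|
        if lhs > (abs(z) + abs(w)) ** 2 + tol:
            return False
        if abs(z + w) > abs(z) + abs(w) + tol:
            return False
    return True


print(spot_check_complex())  # True
```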
Augustin Cauchy (1789 – 1857) was born in Paris, France, during the French Revolution. When Cauchy was still young, Lagrange took an interest in his mathematical education and urged Cauchy's father to enroll him in the Ecole Centrale du Pantheon to study languages. After the Ecole Centrale du Pantheon, Cauchy entered the Ecole Polytechnique and then the engineering school Ecole des Ponts et Chaussees. After graduating from engineering school, Cauchy began work on the Ourcq Canal project. In 1810 he went to Cherbourg, where he worked "on port facilities for Napoleon's English invasion fleet" [5]. In 1811, Cauchy proved "that the angles of a convex polyhedron are determined by its faces" [5]. He left Cherbourg in 1813 for health reasons and returned to Paris, where he applied for several positions; he was offered none of them until 1815, when he was offered the assistant professorship of analysis at his alma mater, the Ecole Polytechnique. During his period of unemployment, Cauchy devoted himself to mathematical research. From the Ecole Polytechnique, Cauchy went on to a position at the College de France in 1817. There he "lectured on methods of integration which he had discovered, but not published, earlier" [5]. As well as defining an integral, "Cauchy was the first to make a rigorous study of the conditions of convergence of infinite series" [5]. Later, the deposed king Charles X of France offered Cauchy the position of tutor to his grandson, the Duke of Bordeaux. This position allowed Cauchy to travel extensively, and in exchange for his services Charles X made Cauchy a baron. In 1838, upon returning to Paris, Cauchy reclaimed his position at the Academy, but he was not allowed to teach because he refused to take an oath of allegiance. After losing the position of mathematics chair at the College de France, Cauchy's mathematical output included "work on differential equations and applications to mathematical physics" [5].
Cauchy also wrote a four-volume work entitled Exercises d'analyse et de physique mathematique, which discussed mathematical astronomy. Once Louis Philippe was overthrown, Cauchy returned to his position at the Ecole Polytechnique. An argument with Duhamel involving "a priority claim regarding a result on inelastic shocks" overshadowed Cauchy's remaining years [5]. On May 23, 1857, Cauchy died calmly with his family by his side. Cauchy wrote 789 mathematical papers and is associated with many items in mathematics, including the famous inequality we are studying. Some of these items are the theory of series, calculus with the aid of limits and continuity, and the "Cauchy-Kovalevskaya existence theorem that offers a solution of partial differential equations" [5].

The second mathematician in our inequality is Hermann Schwarz (1843 – 1921). He studied at the Gewerbeinstitut, later known as the Technical University of Berlin, with the goal of obtaining a degree in chemistry. Not long after arriving at the Gewerbeinstitut, Schwarz was convinced to change his program of study to mathematics, and he continued to study there until he attained his doctorate. Schwarz researched minimal surface areas, a typical problem of the calculus of variations. In 1865, he described a minimal surface whose boundary consists of "four edges of a regular tetrahedron," which is known today as the Schwarz minimal surface [6]. Upon obtaining his certificate to teach, Schwarz held positions at the University of Halle, the Eidgenossische Technische Hochschule, then Gottingen University, and finally the University of Berlin in 1892. While at these institutions, Schwarz researched "the subjects of function theory, differential geometry, and the calculus of variations" [7]. Another area Schwarz researched was conformal mappings; his achievement in this area was connected with the Riemann mapping theorem.
Schwarz's process incorporated the Dirichlet problem, which mapped "polygonal regions to a circle" [6]. Using this method, Schwarz was able to provide a thorough proof of the Riemann mapping theorem. Schwarz's most significant work was answering the "question of whether a given minimal surface really yields a minimal area" [6]. Another great achievement of Schwarz's was the Schwarz function. In this work, Schwarz characterized "a conformal mapping of a triangle with arcs of circles as sides onto the unit disc" [6]. The Schwarz function was one of the first examples of an automorphic function, and it helped Klein and Poincare to originate the theory of automorphic functions. According to O'Connor and Robertson, it is not surprising that Schwarz discovered a "special case of the general result now known as the Cauchy-Schwarz inequality," since a great deal of Schwarz's work is characterized by analyzing specialized, narrow questions and applying much more general methods to solve them.

A third mathematician's name can be added to the Cauchy-Schwarz inequality: Viktor Bunyakovsky (1804 – 1889), who was born in Ukraine. Bunyakovsky was a pupil of Cauchy's in Paris, where he remained until 1826. Upon returning to St. Petersburg, Bunyakovsky became a central figure in bringing mathematics to Russia and the Russian Empire; he brought to Russia Cauchy's theories as well as the probabilistic theories of the French. Bunyakovsky taught mathematics and mechanics at several institutions in St. Petersburg, including the First Cadet Corps, the Communications Academy, and the Naval Academy. From 1846 to 1880, Bunyakovsky held a position at the University of St. Petersburg. He did most of his research at the St. Petersburg Academy of Sciences, where he held the position of vice-president until his death.
Although not always credited, Bunyakovsky is "best known for his discovery of the Cauchy-Schwarz inequality" some twenty-five years before Schwarz's own discovery [8]. His other work, in number theory, applied mathematics, and geometry, includes a "new proof of Gauss' law of quadratic reciprocity" [8]. Bunyakovsky's work Foundations of the mathematical theory of probability is credited with providing the foundation of Russian probabilistic vocabulary. Over his life, Bunyakovsky published 150 works on mathematics and mechanics.

Before tackling the Cauchy-Schwarz inequality, we need to understand some of the vocabulary involved. The Cauchy-Schwarz inequality is used in $\mathbb{R}^n$, also known as Euclidean n-space, which has n dimensions (possibly more than three). According to Anton and Rorres' Elementary Linear Algebra [9], the dot product in $\mathbb{R}^2$ or $\mathbb{R}^3$ generalizes to the Euclidean inner product in $\mathbb{R}^n$, which is defined for two vectors $\mathbf{u} = (u_1, u_2, \ldots, u_n)$ and $\mathbf{v} = (v_1, v_2, \ldots, v_n)$ as
$$\mathbf{u} \cdot \mathbf{v} = u_1v_1 + u_2v_2 + \cdots + u_nv_n.$$
Another term is the Euclidean norm, or Euclidean length, of a vector $\mathbf{u} = (u_1, u_2, \ldots, u_n)$ in $\mathbb{R}^n$:
$$\|\mathbf{u}\| = (\mathbf{u} \cdot \mathbf{u})^{1/2} = \sqrt{u_1^2 + u_2^2 + \cdots + u_n^2}.$$
There are some properties of length in $\mathbb{R}^n$ that we should also know. According to Elementary Linear Algebra [9], if $\mathbf{u}$ and $\mathbf{v}$ are vectors in $\mathbb{R}^n$ and $k$ is any scalar, then:
(a) $\|\mathbf{u}\| \ge 0$
(b) $\|\mathbf{u}\| = 0$ if and only if $\mathbf{u} = \mathbf{0}$
(c) $\|k\mathbf{u}\| = |k|\,\|\mathbf{u}\|$
(d) $\|\mathbf{u} + \mathbf{v}\| \le \|\mathbf{u}\| + \|\mathbf{v}\|$.
The last property should look familiar because it is the triangle inequality. A visualization of the triangle inequality with respect to vector length is:

[Figure: vectors u and v placed tip to tail, with u + v forming the third side of the triangle.]

Triangle Inequality for Euclidean n-Space

A proof of the triangle inequality for Euclidean n-space is shown below, and it works in general.
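$$\begin{aligned}
\|\mathbf{u}+\mathbf{v}\|^2 &= (\mathbf{u}+\mathbf{v})\cdot(\mathbf{u}+\mathbf{v}) = (\mathbf{u}\cdot\mathbf{u}) + 2(\mathbf{u}\cdot\mathbf{v}) + (\mathbf{v}\cdot\mathbf{v})\\
&= \|\mathbf{u}\|^2 + 2(\mathbf{u}\cdot\mathbf{v}) + \|\mathbf{v}\|^2\\
&\le \|\mathbf{u}\|^2 + 2|\mathbf{u}\cdot\mathbf{v}| + \|\mathbf{v}\|^2\\
&\le \|\mathbf{u}\|^2 + 2\|\mathbf{u}\|\,\|\mathbf{v}\| + \|\mathbf{v}\|^2\\
&= (\|\mathbf{u}\| + \|\mathbf{v}\|)^2.
\end{aligned}$$
The second inequality is the Cauchy-Schwarz inequality $|\mathbf{u}\cdot\mathbf{v}| \le \|\mathbf{u}\|\,\|\mathbf{v}\|$, which is proved later in this section. Taking square roots of both (nonnegative) sides gives $\|\mathbf{u}+\mathbf{v}\| \le \|\mathbf{u}\| + \|\mathbf{v}\|$.

Once we have the norm, or length, of a vector, we can define the distance between two vectors as follows. The Euclidean distance between two points $\mathbf{u}$ and $\mathbf{v}$ in $\mathbb{R}^n$ is defined as
$$d(\mathbf{u},\mathbf{v}) = \|\mathbf{u}-\mathbf{v}\| = \sqrt{(u_1-v_1)^2 + (u_2-v_2)^2 + \cdots + (u_n-v_n)^2}.$$
The properties of distance in $\mathbb{R}^n$ are similar to the properties of length. The following properties hold if $\mathbf{u}$, $\mathbf{v}$, and $\mathbf{w}$ are vectors in $\mathbb{R}^n$:
(a) $d(\mathbf{u},\mathbf{v}) \ge 0$
(b) $d(\mathbf{u},\mathbf{v}) = 0$ if and only if $\mathbf{u} = \mathbf{v}$
(c) $d(\mathbf{u},\mathbf{v}) = d(\mathbf{v},\mathbf{u})$
(d) $d(\mathbf{u},\mathbf{v}) \le d(\mathbf{u},\mathbf{w}) + d(\mathbf{w},\mathbf{v})$.
Once again, the last property should look familiar: it is the triangle inequality, this time applied to distance. The proof works in complete generality whenever we have a norm with properties (a) through (d) of length; we can then define the distance, and the triangle inequality follows:
$$d(\mathbf{u},\mathbf{v}) = \|\mathbf{u}-\mathbf{v}\| = \|(\mathbf{u}-\mathbf{w}) + (\mathbf{w}-\mathbf{v})\| \le \|\mathbf{u}-\mathbf{w}\| + \|\mathbf{w}-\mathbf{v}\| = d(\mathbf{u},\mathbf{w}) + d(\mathbf{w},\mathbf{v}).$$
A visualization of this is similar to the one for length, except that the sides of the triangle are segments rather than rays.

[Figure: triangle with sides labeled u, v, and u + v.]

Both $\|\mathbf{u}+\mathbf{v}\| \le \|\mathbf{u}\| + \|\mathbf{v}\|$ and $d(\mathbf{u},\mathbf{v}) \le d(\mathbf{u},\mathbf{w}) + d(\mathbf{w},\mathbf{v})$ generalize the triangle inequality of Euclidean geometry. For length, $\|\mathbf{u}+\mathbf{v}\| \le \|\mathbf{u}\| + \|\mathbf{v}\|$ may simply be restated as: the sum of the lengths of two sides of a triangle is greater than or equal to the length of the third side. For distance, $d(\mathbf{u},\mathbf{v}) \le d(\mathbf{u},\mathbf{w}) + d(\mathbf{w},\mathbf{v})$ may be summed up by stating that the shortest distance between two points is a straight line.

Now we are ready for the Cauchy-Schwarz inequality in $\mathbb{R}^n$, which states that if $\mathbf{u} = (u_1, u_2, \ldots, u_n)$ and $\mathbf{v} = (v_1, v_2, \ldots, v_n)$ are vectors in $\mathbb{R}^n$, then $|\mathbf{u}\cdot\mathbf{v}| \le \|\mathbf{u}\|\,\|\mathbf{v}\|$. In other words, the absolute value of the inner product of $\mathbf{u}$ and $\mathbf{v}$ is less than or equal to the norm (length) of $\mathbf{u}$ multiplied by the norm of $\mathbf{v}$, which is written out as
$$|u_1v_1 + u_2v_2 + \cdots + u_nv_n| \le (u_1^2 + u_2^2 + \cdots + u_n^2)^{1/2}\,(v_1^2 + v_2^2 + \cdots + v_n^2)^{1/2}.$$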
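The vector definitions above, the distance properties (a) through (d), and the Cauchy-Schwarz inequality just stated (together with the discriminant condition $b^2 - 4ac \le 0$ used in its proof) can be spot-checked numerically. This is a minimal sketch that represents vectors as plain Python lists; the helper names `dot`, `norm`, `dist`, and `spot_check_vectors` are hypothetical, and a passing check is evidence under floating-point tolerances, not a proof.

```python
from __future__ import annotations

import math
import random


def dot(u: list[float], v: list[float]) -> float:
    """Euclidean inner product u . v = u1*v1 + ... + un*vn."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(ui * vi for ui, vi in zip(u, v))


def norm(u: list[float]) -> float:
    """Euclidean norm ||u|| = (u . u)^(1/2)."""
    return math.sqrt(dot(u, u))


def dist(u: list[float], v: list[float]) -> float:
    """Euclidean distance d(u, v) = ||u - v||."""
    return norm([ui - vi for ui, vi in zip(u, v)])


def spot_check_vectors(n: int = 5, trials: int = 2_000, seed: int = 2) -> bool:
    """Check the triangle inequality, distance properties, and Cauchy-Schwarz."""
    rng = random.Random(seed)
    eps = 1e-9
    for _ in range(trials):
        u = [rng.uniform(-10, 10) for _ in range(n)]
        v = [rng.uniform(-10, 10) for _ in range(n)]
        w = [rng.uniform(-10, 10) for _ in range(n)]
        s = [ui + vi for ui, vi in zip(u, v)]
        # ||u + v|| <= ||u|| + ||v||
        if norm(s) > norm(u) + norm(v) + eps:
            return False
        # (a) d >= 0, (b) d(u, u) = 0, (c) symmetry, (d) triangle inequality
        if dist(u, v) < 0 or dist(u, u) != 0.0:
            return False
        if not math.isclose(dist(u, v), dist(v, u)):
            return False
        if dist(u, v) > dist(u, w) + dist(w, v) + eps:
            return False
        # Cauchy-Schwarz: |u . v| <= ||u|| ||v||
        if abs(dot(u, v)) > norm(u) * norm(v) + eps:
            return False
        # Discriminant form from the proof: b^2 - 4ac <= 0
        a, b, c = dot(u, u), 2 * dot(u, v), dot(v, v)
        if b * b - 4 * a * c > eps * (1 + 4 * a * c):
            return False
    return True


print(norm([3.0, 4.0]))             # 5.0
print(dot([3.0, 4.0], [4.0, 3.0]))  # 24.0
print(spot_check_vectors())         # True
```

In the small example, $|\mathbf{u}\cdot\mathbf{v}| = 24 \le \|\mathbf{u}\|\,\|\mathbf{v}\| = 25$, so the Cauchy-Schwarz inequality holds with room to spare; it becomes an equality only when one vector is a scalar multiple of the other.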
The proof of the Cauchy-Schwarz inequality is shown below.

Cauchy-Schwarz Inequality

If $\mathbf{u} = \mathbf{0}$, then $\mathbf{u}\cdot\mathbf{v} = 0$ and $\|\mathbf{u}\| = 0$, so the two sides are equal to each other. Assume $\mathbf{u} \ne \mathbf{0}$. Let $a = \mathbf{u}\cdot\mathbf{u}$, $b = 2(\mathbf{u}\cdot\mathbf{v})$, and $c = \mathbf{v}\cdot\mathbf{v}$, and let $t$ be any real number. By the positivity axiom, the Euclidean inner product of any vector with itself is always nonnegative, so
$$0 \le (t\mathbf{u}+\mathbf{v})\cdot(t\mathbf{u}+\mathbf{v}) = (\mathbf{u}\cdot\mathbf{u})t^2 + 2(\mathbf{u}\cdot\mathbf{v})t + (\mathbf{v}\cdot\mathbf{v}).$$
Using substitution, we can write $(\mathbf{u}\cdot\mathbf{u})t^2 + 2(\mathbf{u}\cdot\mathbf{v})t + (\mathbf{v}\cdot\mathbf{v}) = at^2 + bt + c$. Since $a > 0$ and $at^2 + bt + c \ge 0$ for every real $t$, the quadratic polynomial $at^2 + bt + c$ has either no real roots or a double root. Thus its discriminant must satisfy $b^2 - 4ac \le 0$. Expressing the coefficients $a$, $b$, and $c$ in terms of the vectors $\mathbf{u}$ and $\mathbf{v}$ gives
$$[2(\mathbf{u}\cdot\mathbf{v})]^2 - 4(\mathbf{u}\cdot\mathbf{u})(\mathbf{v}\cdot\mathbf{v}) \le 0,$$
which simplifies to $4(\mathbf{u}\cdot\mathbf{v})^2 - 4(\mathbf{u}\cdot\mathbf{u})(\mathbf{v}\cdot\mathbf{v}) \le 0$. This may be rewritten as $4(\mathbf{u}\cdot\mathbf{v})^2 \le 4(\mathbf{u}\cdot\mathbf{u})(\mathbf{v}\cdot\mathbf{v})$, which simplifies to $(\mathbf{u}\cdot\mathbf{v})^2 \le (\mathbf{u}\cdot\mathbf{u})(\mathbf{v}\cdot\mathbf{v})$. Taking the square root of both sides and using the fact that $\|\mathbf{u}\| = (\mathbf{u}\cdot\mathbf{u})^{1/2}$ yields $|\mathbf{u}\cdot\mathbf{v}| \le \|\mathbf{u}\|\,\|\mathbf{v}\|$.

From the Cauchy-Schwarz inequality, which deals with vectors having a finite number of components, we can now move to a discussion of a special type of vector space called a sequence space. The elements of a sequence space are vectors with an infinite number of components, represented by $\mathbf{v} = (v_1, v_2, \ldots)$, with no last component. There are two inequalities that extend the Cauchy-Schwarz inequality into sequence space. The first of these is the Hölder inequality, named after the German mathematician Otto Hölder (1859 – 1937). Hölder studied at the polytechnic in Stuttgart and later at the University of Berlin, where he became interested in algebra because of the influence of Kronecker. In 1882, Hölder presented his dissertation on "analytic functions and summation procedures by arithmetic means" at the University of Tubingen [10]. In 1884, Hölder took the position of lecturer at Gottingen.
At Gottingen, Hölder discovered the inequality that is now named for him, began his "work on the convergence of the Fourier series," and developed a fascination with group theory [10]. Hölder's lifetime work includes the Jordan-Hölder theorem, which deals with the "uniqueness of the factor groups in a composition series," other contributions to group theory, and the introduction of inner and outer automorphisms.

A proof of Hölder's inequality is given below as reconstructed by Professor Gabriel Nagy of Kansas State University, who uses the method of induction [11].

Hölder's Inequality. Let $a_1, a_2, \ldots, a_n, b_1, b_2, \ldots, b_n$ be nonnegative numbers. Let $p, q > 1$ be real numbers with the property $\frac{1}{p} + \frac{1}{q} = 1$. Then
$$\sum_{j=1}^{n} a_jb_j \le \Big(\sum_{j=1}^{n} a_j^p\Big)^{1/p}\Big(\sum_{j=1}^{n} b_j^q\Big)^{1/q}.$$
Moreover, equality holds only when the sequences $(a_1^p, \ldots, a_n^p)$ and $(b_1^q, \ldots, b_n^q)$ are proportional.

The proof of Hölder's inequality is as follows. The case $n = 1$ is trivial.

Case n = 2. Assume $(b_1, b_2) \ne (0,0)$; otherwise everything is trivial. Define the number
$$r = \frac{b_1}{(b_1^q + b_2^q)^{1/q}}.$$
Notice that $r \in [0,1]$. Here is why $r \le 1$: we were given that $b_1$ and $b_2$ are nonnegative numbers. If $b_2 = 0$, the denominator becomes $(b_1^q + 0)^{1/q} = b_1$, so $r = b_1/b_1 = 1$. If $b_2 > 0$, then $b_1^q + b_2^q > b_1^q$, so the denominator is larger than $b_1$ and $r < 1$. Therefore 1 is the greatest value $r$ can take, and $r \le 1$. We also have
$$\frac{b_2}{(b_1^q + b_2^q)^{1/q}} = (1 - r^q)^{1/q}.$$
Notice also that, upon dividing by $(b_1^q + b_2^q)^{1/q}$, the desired inequality
$$a_1b_1 + a_2b_2 \le (a_1^p + a_2^p)^{1/p}(b_1^q + b_2^q)^{1/q}$$
reads
$$a_1r + a_2(1 - r^q)^{1/q} \le (a_1^p + a_2^p)^{1/p}.$$
This is obviously an equality when $a_1 = a_2 = 0$. Assume $(a_1, a_2) \ne (0,0)$ and set up the function $f(t) = a_1t + a_2(1 - t^q)^{1/q}$, $t \in [0,1]$. We now apply the following Lemma.

Lemma. Let $p, q > 1$ be such that $\frac{1}{p} + \frac{1}{q} = 1$, and let $u$ and $v$ be two nonnegative numbers, at least one being non-zero.
Then the function $f : [0,1] \to \mathbb{R}$ defined by
$$f(t) = ut + v(1 - t^q)^{1/q}, \quad t \in [0,1],$$
has a unique maximum point at
$$s = \Big(\frac{u^p}{u^p + v^p}\Big)^{1/q}.$$
The maximum value of $f$ is $\max_{t \in [0,1]} f(t) = (u^p + v^p)^{1/p}$.

The proof of this Lemma is as follows. If $v = 0$, then $f(t) = tu$, $t \in [0,1]$ (with $u > 0$), and in this case the Lemma is trivial. Likewise, if $u = 0$, then $f(t) = v(1 - t^q)^{1/q}$, $t \in [0,1]$ (with $v > 0$), and using the inequality $(1 - t^q)^{1/q} < 1$ for $t \in (0,1]$, we immediately get $f(t) < f(0)$ for $t \in (0,1]$, and the Lemma again follows. For the remainder of the proof we assume that $u, v > 0$.

We concentrate on the first assertion. Obviously $f$ is differentiable on $(0,1)$, so the "candidates" for the maximum points are 0, 1, and the solutions of the equation $f'(t) = 0$. With $s$ defined as above and $u, v > 0$, we clearly have $0 < s < 1$. We first prove that $s$ is the unique solution in $(0,1)$ of the equation $f'(t) = 0$. We have
$$f'(t) = u - v\,t^{q-1}(1 - t^q)^{\frac{1}{q} - 1},$$
so, since $\frac{q-1}{q} = \frac{1}{p}$, the equation $f'(t) = 0$ reads
$$\Big(\frac{t^q}{1 - t^q}\Big)^{1/p} = \frac{u}{v}, \quad t \in (0,1).$$
Raising both sides to the power $p$ gives $\frac{t^q}{1 - t^q} = \frac{u^p}{v^p}$, so $t^q v^p = u^p(1 - t^q)$, that is, $t^q = \frac{u^p}{u^p + v^p}$, which gives $t = s$.

We have now shown that the "candidates" for the maximum point are 0, 1, and $s$. Let us show that $s$ is the only maximum point. For this purpose, we go back to
$$f'(t) = u - v\,\frac{t^{q-1}}{(1 - t^q)^{(q-1)/q}}, \quad t \in (0,1),$$
and observe that $f'$ is continuous on $(0,1)$. Since $\lim_{t \to 0^+} f'(t) = u > 0$ and $\lim_{t \to 1^-} f'(t) = -\infty$, and the equation $f'(t) = 0$ has exactly one solution in $(0,1)$, namely $s$, this forces $f'(t) > 0$ for $t \in (0,s)$ and $f'(t) < 0$ for $t \in (s,1)$. This means that $f$ is increasing on $[0,s]$ and decreasing on $[s,1]$, so the maximum value of $f$ is
$$\max_{t \in [0,1]} f(t) = f(s) = (u^p + v^p)^{1/p}.$$
(Indeed, $f(s) = \dfrac{u^{1+p/q} + v^{1+p/q}}{(u^p + v^p)^{1/q}}$ and $1 + \frac{p}{q} = p$, so $f(s) = (u^p + v^p)^{1 - 1/q} = (u^p + v^p)^{1/p}$.)

Applying the Lemma with $u = a_1$ and $v = a_2$ immediately gives
$$a_1r + a_2(1 - r^q)^{1/q} \le (a_1^p + a_2^p)^{1/p}.$$
Let us examine when equality holds.
If $a_1 = a_2 = 0$, equality obviously holds, and in this case $(a_1^p, a_2^p) = (0,0)$ is trivially proportional to $(b_1^q, b_2^q)$. Assume $(a_1, a_2) \ne (0,0)$. Again by the Lemma, equality holds in
$$a_1r + a_2(1 - r^q)^{1/q} \le (a_1^p + a_2^p)^{1/p}$$
exactly when $r = s$, that is, when
$$r = \Big(\frac{a_1^p}{a_1^p + a_2^p}\Big)^{1/q},$$
equivalently
$$\frac{b_1^q}{b_1^q + b_2^q} = \frac{a_1^p}{a_1^p + a_2^p}.$$
Obviously this forces $\frac{b_2^q}{b_1^q + b_2^q} = \frac{a_2^p}{a_1^p + a_2^p}$, so indeed $(a_1^p, a_2^p)$ and $(b_1^q, b_2^q)$ are proportional.

Having proven the case n = 2, we now must prove the implication: case n = k implies case n = k + 1. Start with two sequences $(a_1, a_2, \ldots, a_k, a_{k+1})$ and $(b_1, b_2, \ldots, b_k, b_{k+1})$. Define the numbers
$$a = \Big(\sum_{j=1}^{k} a_j^p\Big)^{1/p} \quad\text{and}\quad b = \Big(\sum_{j=1}^{k} b_j^q\Big)^{1/q}.$$
Using the assumption that the case n = k holds, we have
$$\sum_{j=1}^{k+1} a_jb_j = \sum_{j=1}^{k} a_jb_j + a_{k+1}b_{k+1} \le ab + a_{k+1}b_{k+1}.$$
Using the case n = 2, we also have
$$ab + a_{k+1}b_{k+1} \le (a^p + a_{k+1}^p)^{1/p}(b^q + b_{k+1}^q)^{1/q} = \Big(\sum_{j=1}^{k+1} a_j^p\Big)^{1/p}\Big(\sum_{j=1}^{k+1} b_j^q\Big)^{1/q}.$$
Combining the two, we see that the desired inequality
$$\sum_{j=1}^{k+1} a_jb_j \le \Big(\sum_{j=1}^{k+1} a_j^p\Big)^{1/p}\Big(\sum_{j=1}^{k+1} b_j^q\Big)^{1/q}$$
holds for n = k + 1.

Assume now that we have equality. Then we must have equality in both of the inequalities above. On the one hand, equality in $\sum_{j=1}^{k} a_jb_j \le ab$ forces $(a_1^p, \ldots, a_k^p)$ and $(b_1^q, \ldots, b_k^q)$ to be proportional (since we assume the case n = k). On the other hand, equality in $ab + a_{k+1}b_{k+1} \le (a^p + a_{k+1}^p)^{1/p}(b^q + b_{k+1}^q)^{1/q}$ forces $(a^p, a_{k+1}^p)$ and $(b^q, b_{k+1}^q)$ to be proportional (by the case n = 2). Since $a^p = \sum_{j=1}^{k} a_j^p$ and $b^q = \sum_{j=1}^{k} b_j^q$, it is clear that $(a_1^p, \ldots, a_k^p, a_{k+1}^p)$ and $(b_1^q, \ldots, b_k^q, b_{k+1}^q)$ are proportional.
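The Lemma and the induction above can be spot-checked numerically. The sketch below compares the Lemma's closed-form maximum $f(s) = (u^p + v^p)^{1/p}$ with a grid search over $[0,1]$, tests Hölder's inequality on random nonnegative sequences, and checks the equality case $b_j^q = a_j^p$ (which makes the two sequences proportional). It is a minimal sketch under floating-point tolerances; the function names `conjugate_exponent`, `lemma_f`, `holder_sides`, and `spot_check_holder` are hypothetical.

```python
from __future__ import annotations

import math
import random


def conjugate_exponent(p: float) -> float:
    """Return q with 1/p + 1/q = 1 (requires p > 1)."""
    if p <= 1:
        raise ValueError("p must be greater than 1")
    return p / (p - 1)


def lemma_f(t: float, u: float, v: float, q: float) -> float:
    """The Lemma's function f(t) = u*t + v*(1 - t^q)^(1/q) on [0, 1]."""
    return u * t + v * (1 - t ** q) ** (1 / q)


def holder_sides(a: list[float], b: list[float], p: float) -> tuple[float, float]:
    """Return (sum a_j b_j, (sum a_j^p)^(1/p) * (sum b_j^q)^(1/q))."""
    q = conjugate_exponent(p)
    lhs = sum(x * y for x, y in zip(a, b))
    rhs = sum(x ** p for x in a) ** (1 / p) * sum(y ** q for y in b) ** (1 / q)
    return lhs, rhs


def spot_check_holder(trials: int = 500, seed: int = 3) -> bool:
    rng = random.Random(seed)
    for _ in range(trials):
        p = rng.uniform(1.1, 5.0)
        q = conjugate_exponent(p)
        # Lemma: the maximum of f is attained at s, with value (u^p + v^p)^(1/p)
        u, v = rng.uniform(0.1, 10), rng.uniform(0.1, 10)
        s = (u ** p / (u ** p + v ** p)) ** (1 / q)
        peak = lemma_f(s, u, v, q)
        if not math.isclose(peak, (u ** p + v ** p) ** (1 / p), rel_tol=1e-9):
            return False
        grid_max = max(lemma_f(i / 1000, u, v, q) for i in range(1001))
        if grid_max > peak * (1 + 1e-12):
            return False
        # Hölder's inequality for random nonnegative sequences
        n = rng.randint(1, 8)
        a = [rng.uniform(0, 10) for _ in range(n)]
        b = [rng.uniform(0, 10) for _ in range(n)]
        lhs, rhs = holder_sides(a, b, p)
        if lhs > rhs * (1 + 1e-12):
            return False
        # Equality case: b_j = a_j^(p/q), so b_j^q = a_j^p (proportional)
        b_eq = [x ** (p / q) for x in a]
        lhs, rhs = holder_sides(a, b_eq, p)
        if not math.isclose(lhs, rhs, rel_tol=1e-9):
            return False
    return True


print(holder_sides([1.0, 2.0], [3.0, 4.0], 2.0))  # left side 11.0, right side about 11.18
print(spot_check_holder())                        # True
```

With $p = q = 2$, `holder_sides` compares exactly the two sides of the Cauchy-Schwarz inequality for nonnegative vectors, which is why Hölder's inequality is described as an extension of it.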
Thus Hölder's inequality, $\sum_{j=1}^{n} a_jb_j \le \big(\sum_{j=1}^{n} a_j^p\big)^{1/p}\big(\sum_{j=1}^{n} b_j^q\big)^{1/q}$, has been proven in general.

We use Hölder's inequality to prove the second inequality that extends the Cauchy-Schwarz inequality into sequence space. This inequality is the Minkowski inequality, named after the mathematician Hermann Minkowski (1864 – 1909). The Minkowski inequality is the triangle inequality for $L^p$ spaces.

Minkowski was born the second son of Lewin Minkowski and Rachel Taubmann. When he was eight, Minkowski and his family moved from Russia back to Konigsberg, Germany, where his father's business was. Upon returning to Konigsberg, Minkowski began his studies at the Gymnasium, and it was there that his interest in mathematics was first discovered. Minkowski studied at the University of Konigsberg and the University of Berlin; it was at the University of Konigsberg that he became friends with Hilbert. Early in his university studies, Minkowski became fascinated by quadratic forms. So when the Academy of Sciences announced in 1881 "that the Grand Prix for mathematical science to be awarded in 1883 would be for a solution to the problem of the number of representations of an integer as the sum of five squares," Minkowski was intrigued [12]. The problem stemmed from Eisenstein's 1847 "formula for the number of such representations"; however, Eisenstein never gave a proof of it [12]. When the problem was announced, the Academy of Sciences was unaware that a mathematician named Henry Smith had already solved it. At the age of eighteen, Minkowski reconstructed Eisenstein's theory of quadratic forms and produced a solution to the problem, launching his mathematical career. The Academy of Sciences decided to award the prize to both mathematicians. In 1885, Minkowski received his doctorate for a dissertation that extended the Grand Prix problem.
Minkowski held positions at several institutions, including the University of Bonn, Konigsberg, the Eidgenossische Polytechnikum Zurich, and finally the University of Gottingen. At the University of Gottingen, Minkowski was reunited with Hilbert, who had arranged for the position there to be created especially for Minkowski. Minkowski remained at the University of Gottingen until his death. One of Minkowski's greatest achievements was his idea of the space-time continuum, which significantly influenced Einstein's theory of relativity. Until Minkowski, space and time were thought to be independent of each other; Minkowski joined the two together in a four-dimensional space-time continuum. Another triumph of Minkowski's was his "geometry of numbers," which led to his work "on convex bodies and to questions about packing problems, the ways in which figures of a given shape can be placed within another given figure" [12]. Sadly, Minkowski died from a ruptured appendix in 1909 at the age of 44.

Now we are ready to tackle the proof of the Minkowski inequality in its most general form for $\mathbb{R}^n$, following PlanetMath [13]. For $p = 1$ the result follows immediately from the triangle inequality, so we may assume $p > 1$. We have
$$|a_k + b_k|^p = |a_k + b_k|\,|a_k + b_k|^{p-1} \le (|a_k| + |b_k|)\,|a_k + b_k|^{p-1}$$
by the triangle inequality. Distributing $|a_k + b_k|^{p-1}$ over $|a_k|$ and $|b_k|$, we therefore have
$$|a_k + b_k|^p \le |a_k|\,|a_k + b_k|^{p-1} + |b_k|\,|a_k + b_k|^{p-1}.$$
Set $q = \frac{p}{p-1}$. Then $\frac{1}{p} + \frac{1}{q} = 1$, so by Hölder's inequality we have
$$\sum_{k=0}^{n} |a_k|\,|a_k + b_k|^{p-1} \le \Big(\sum_{k=0}^{n} |a_k|^p\Big)^{1/p}\Big(\sum_{k=0}^{n} |a_k + b_k|^{(p-1)q}\Big)^{1/q},$$
$$\sum_{k=0}^{n} |b_k|\,|a_k + b_k|^{p-1} \le \Big(\sum_{k=0}^{n} |b_k|^p\Big)^{1/p}\Big(\sum_{k=0}^{n} |a_k + b_k|^{(p-1)q}\Big)^{1/q}.$$
Adding these two inequalities, dividing by the factor common to the right sides of both (if that factor is zero, then every $a_k + b_k = 0$ and the result is trivial), and observing that $(p-1)q = p$, we have
$$\Big(\sum_{k=0}^{n} |a_k + b_k|^p\Big)^{1 - \frac{1}{q}} \le \Big(\sum_{k=0}^{n} |a_k|^p\Big)^{1/p} + \Big(\sum_{k=0}^{n} |b_k|^p\Big)^{1/p}.$$
Finally, observe that $1 - \frac{1}{q} = \frac{1}{p}$, and the result follows as required. Thus the Minkowski inequality is proven in its most general form in $\mathbb{R}^n$:
$$\Big(\sum_{k=0}^{n} |a_k + b_k|^p\Big)^{1/p} \le \Big(\sum_{k=0}^{n} |a_k|^p\Big)^{1/p} + \Big(\sum_{k=0}^{n} |b_k|^p\Big)^{1/p}.$$

The same argument, with sums replaced by integrals, proves the Minkowski inequality for $L^p$ space. In order to understand what $L^p$ space is, we need to familiarize ourselves with some vocabulary. $L^p$ spaces can be defined as "spaces of p-power integrable functions, and corresponding sequence spaces" [14]. According to MathWorld, the $L^p$ norm of a function $f$ on a measure space $X$ with measure $\mu$ is
$$\|f\|_p = \Big(\int_X |f|^p\,d\mu\Big)^{1/p},$$
and $L^p$ functions are functions for which this integral converges. A measure space is a measurable space possessing a nonnegative measure. Examples of measure spaces include n-dimensional Euclidean space with Lebesgue measure and the unit interval with Lebesgue measure (i.e., probability), as defined by MathWorld [15]. Norms, we recall, are the lengths of vectors. Thus, by using counting measure, the $L^p$ norm is a generalization of vector p-norms. A measure $\mu$ on $X$ is called a counting measure if $\mu(A) = n$ whenever $A$ has exactly $n$ elements, and $\mu(A) = \infty$ when $A$ is infinite [16]. A p-norm is defined as
$$\|\mathbf{x}\|_p = (|x_1|^p + \cdots + |x_n|^p)^{1/p}, \quad p \ge 1,\ \mathbf{x} \in \mathbb{R}^n,$$
and denoted by $\|\cdot\|_p$ [17]. The three most common p-norms are the 1-norm, the 2-norm, and the $\infty$-norm, defined as follows:
1-norm: $\|\mathbf{x}\|_1 = |x_1| + |x_2| + \cdots + |x_n|$
2-norm: $\|\mathbf{x}\|_2 = \sqrt{|x_1|^2 + |x_2|^2 + \cdots + |x_n|^2} = \sqrt{\mathbf{x}^T\mathbf{x}}$
$\infty$-norm: $\|\mathbf{x}\|_\infty = \max_{1 \le i \le n} |x_i|$
We are already familiar with the 2-norm because it is the Euclidean vector norm, which generates the "Euclidean distance between any two vectors" [17]. In finite-dimensional spaces, the three p-norms above, as well as all other p-norms, are equivalent. $L^p$ space is an example of a Banach space for every $p \ge 1$, and such spaces have applications in finite element analysis. The special case $p = 2$ is a Hilbert space. Another special case of $L^p$ space is $\ell^p$ space.
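Because an $\ell^p$ sequence may have infinitely many terms, a program can only work with finite vectors, so the following is a minimal sketch under that assumption. It implements the p-norms defined above (including the $\infty$-norm), spot-checks the Minkowski inequality for several values of $p$, and illustrates the equivalence of norms in $\mathbb{R}^n$ through the standard chain $\|\mathbf{x}\|_\infty \le \|\mathbf{x}\|_2 \le \|\mathbf{x}\|_1 \le n\|\mathbf{x}\|_\infty$. The names `p_norm` and `spot_check_minkowski` are hypothetical.

```python
from __future__ import annotations

import math
import random


def p_norm(x: list[float], p: float) -> float:
    """||x||_p = (|x_1|^p + ... + |x_n|^p)^(1/p) for p >= 1; max |x_i| for p = inf."""
    if p == math.inf:
        return max(abs(xi) for xi in x)
    if p < 1:
        raise ValueError("p-norms require p >= 1")
    return sum(abs(xi) ** p for xi in x) ** (1 / p)


def spot_check_minkowski(trials: int = 2_000, seed: int = 4) -> bool:
    rng = random.Random(seed)
    rel = 1 + 1e-12  # small allowance for floating-point rounding
    for _ in range(trials):
        n = rng.randint(1, 10)
        a = [rng.uniform(-10, 10) for _ in range(n)]
        b = [rng.uniform(-10, 10) for _ in range(n)]
        s = [x + y for x, y in zip(a, b)]
        for p in (1.0, rng.uniform(1.0, 6.0), 2.0, math.inf):
            # Minkowski: ||a + b||_p <= ||a||_p + ||b||_p
            if p_norm(s, p) > (p_norm(a, p) + p_norm(b, p)) * rel:
                return False
        # Norm equivalence: ||x||_inf <= ||x||_2 <= ||x||_1 <= n * ||x||_inf
        one, two, top = p_norm(a, 1), p_norm(a, 2), p_norm(a, math.inf)
        if top > two * rel or two > one * rel or one > n * top * rel:
            return False
    return True


x = [3.0, -4.0]
print(p_norm(x, 1), p_norm(x, 2), p_norm(x, math.inf))
print(spot_check_minkowski())  # True
```

For $\mathbf{x} = (3, -4)$ the three norms are 7, 5, and 4, in line with the ordering $\|\mathbf{x}\|_\infty \le \|\mathbf{x}\|_2 \le \|\mathbf{x}\|_1$.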
In $\ell^p$ space, the measure space is discrete and "the measure used in the integration in the definition is a counting measure." $\ell^p(S)$ is defined as the "set of sequences $x = (x_i)$, $i$ in $S$, for which the quantity $\|x\|_p^p = \sum_{i \in S} |x_i|^p$ is finite" [14].

We now turn to the second domain of inequalities, which focuses on means. There are several types of means, such as the geometric, arithmetic, and harmonic means. Their applications can be seen in statistics, genetics, the stock market, computer science, and other areas. The first inequality we will discuss compares the arithmetic mean with the geometric mean (the AM-GM inequality).

Before we turn to the inequality itself, let us define the arithmetic mean and the geometric mean. The arithmetic mean is the "sum of all the members of a set divided by the number of items in the set." The geometric mean can be described as "the product of all the members of the set, raised to a power equal to the reciprocal of the number of members" [18], [19]. Mathematically, for the members $x_1, x_2, \ldots, x_n$ of a set, the arithmetic mean is denoted by

$$a = \frac{x_1 + x_2 + \cdots + x_n}{n},$$

and the geometric mean is denoted by

$$g = \sqrt[n]{x_1 x_2 \cdots x_n}.$$

Now let us prove the AM-GM inequality. The original proof of this inequality is credited to Augustin Louis Cauchy, whom we discussed earlier, in his Cours d'analyse. The AM-GM inequality states that for any positive real numbers $x_1, x_2, \ldots, x_n$,

$$a = \frac{x_1 + x_2 + \cdots + x_n}{n} \ge g = \sqrt[n]{x_1 x_2 \cdots x_n},$$

and if $\frac{x_1 + x_2 + \cdots + x_n}{n} = \sqrt[n]{x_1 x_2 \cdots x_n}$, then $x_1 = x_2 = \cdots = x_n$.

The proof of the AM-GM inequality as reconstructed by Korovkin in Inequalities [20] follows. Start from $g = \sqrt[n]{x_1 x_2 \cdots x_n}$. If we divide both sides by $g$, then

$$1 = \frac{g}{g} = \sqrt[n]{\frac{x_1}{g} \cdot \frac{x_2}{g} \cdot \frac{x_3}{g} \cdots \frac{x_n}{g}}.$$

Raising both sides to the $n$th power, this can be rewritten as

$$\frac{x_1}{g} \cdot \frac{x_2}{g} \cdot \frac{x_3}{g} \cdots \frac{x_n}{g} = 1.$$

It therefore suffices to prove the following statement: if the product of $n$ positive numbers equals 1, then their sum is not less than $n$. Stated mathematically, we need

$$\frac{x_1}{g} + \frac{x_2}{g} + \frac{x_3}{g} + \cdots + \frac{x_n}{g} \ge n.$$

We prove this statement by induction on $n$. For $n = 1$ it holds trivially, since then $x_1 = 1$. Suppose that for $n = k$, $x_1 + x_2 + \cdots + x_k \ge k$ whenever $x_1 x_2 \cdots x_k = 1$. We need to prove the statement for $n = k + 1$, so let $x_1 x_2 \cdots x_k x_{k+1} = 1$. There are two cases.

1.) All the terms are equal, so $x_1 = x_2 = \cdots = x_k = x_{k+1} = 1$. Then their sum equals $k + 1$:

$$x_1 + x_2 + \cdots + x_k + x_{k+1} = k + 1.$$

2.) The terms are not all equal. Then some terms are less than 1 and some are greater than 1; after renumbering, we may assume $x_1 < 1$ and $x_{k+1} > 1$. Grouping these two factors gives

$$(x_1 x_{k+1}) x_2 x_3 \cdots x_k = 1.$$

If $y_1 = x_1 x_{k+1}$, we may rewrite this as $y_1 x_2 x_3 \cdots x_k = 1$, a product of $k$ positive numbers equal to 1. So by our assumption their sum is not less than $k$:

$$y_1 + x_2 + x_3 + \cdots + x_k \ge k.$$

But

$$x_1 + x_2 + \cdots + x_k + x_{k+1} = (y_1 + x_2 + \cdots + x_k) + x_{k+1} - y_1 + x_1 \ge k + x_{k+1} - y_1 + x_1,$$

and $k + x_{k+1} - y_1 + x_1 = (k+1) + x_{k+1} - y_1 + x_1 - 1$. So

$$x_1 + x_2 + \cdots + x_{k+1} \ge (k+1) + x_{k+1} - y_1 + x_1 - 1.$$

Substituting $x_1 x_{k+1}$ for $y_1$ gives

$$x_1 + x_2 + \cdots + x_{k+1} \ge (k+1) + x_{k+1} - x_1 x_{k+1} + x_1 - 1.$$

The right-hand side can be written as $(k+1) + (x_{k+1} - 1)(1 - x_1)$, so the inequality becomes

$$x_1 + x_2 + \cdots + x_{k+1} \ge (k+1) + (x_{k+1} - 1)(1 - x_1).$$

Because we assumed $x_1 < 1$ and $x_{k+1} > 1$, we have $(x_{k+1} - 1)(1 - x_1) > 0$. Hence $(k+1) + (x_{k+1} - 1)(1 - x_1) > k + 1$. Thus $x_1 + x_2 + \cdots + x_k + x_{k+1} \ge k + 1$, with strict inequality in this case, which completes the induction.

Recall that the last step of our AM-GM argument required

$$\frac{x_1}{g} + \frac{x_2}{g} + \frac{x_3}{g} + \cdots + \frac{x_n}{g} \ge n.$$

Multiplying both sides by $g$, the geometric mean, gives $x_1 + x_2 + \cdots + x_n \ge gn$. Dividing both sides by $n$ gives

$$\frac{x_1 + x_2 + \cdots + x_n}{n} \ge g.$$

The left-hand side is the arithmetic mean. Thus $a \ge g$: the arithmetic mean is greater than or equal to the geometric mean. Moreover, case 2 gives a strict inequality, so equality holds only when every $\frac{x_i}{g} = 1$, that is, when all the $x_i$ are equal.

The AM-GM inequality can also be represented geometrically using the Pythagorean Theorem.
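The following minimal Python sketch checks the AM-GM inequality numerically. It also checks the normalization step in Korovkin's proof, where each $x_i$ is divided by $g$ so that the product becomes 1. The helper names are our own. Computing $g$ through logarithms is an implementation choice we assume in order to avoid overflow on long lists; it is not part of the proof.

```python
import math


def arithmetic_mean(xs):
    """a = (x_1 + ... + x_n) / n."""
    return sum(xs) / len(xs)


def geometric_mean(xs):
    """g = nth root of x_1 * ... * x_n, computed as exp(mean of logs); xs must be positive."""
    if any(x <= 0 for x in xs):
        raise ValueError("the geometric mean is used here only for positive numbers")
    return math.exp(sum(math.log(x) for x in xs) / len(xs))


xs = [1.0, 4.0, 16.0]
a, g = arithmetic_mean(xs), geometric_mean(xs)
print(a, g)  # a = 7.0, g is approximately 4.0, so a >= g

# Korovkin's normalization: the ratios x_i / g multiply to 1 and sum to at least n.
ratios = [x / g for x in xs]
print(math.prod(ratios), sum(ratios))  # approximately 1.0 and 5.25 (at least n = 3)
```

When all the numbers are equal (for example, four copies of 5), the two means coincide, which matches the equality condition.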
For this version of the inequality, there are only two positive real numbers $a$ and $b$, with $a \ge b$. Below is an illustration of a right triangle whose legs have lengths $\frac{a-b}{2}$ and $x$ and whose hypotenuse has length $\frac{a+b}{2}$.

[Figure: right triangle with legs $\frac{a-b}{2}$ and $x$ and hypotenuse $\frac{a+b}{2}$]

By the Pythagorean Theorem, the sum of the squares of the two legs of a right triangle equals the square of the hypotenuse. Thus,

$$\left(\frac{a-b}{2}\right)^2 + x^2 = \left(\frac{a+b}{2}\right)^2.$$

Expanding the squares (FOILing) gives

$$\frac{a^2 - 2ab + b^2}{4} + x^2 = \frac{a^2 + 2ab + b^2}{4}.$$

Subtracting $\frac{a^2 - 2ab + b^2}{4}$ from both sides gives

$$x^2 = \frac{a^2 - a^2 + 2ab + 2ab + b^2 - b^2}{4}.$$

Combining like terms, $x^2 = \frac{4ab}{4}$, which simplifies to $x^2 = ab$. Taking the square root of both sides, $x = \sqrt{ab}$.

Because the hypotenuse is the longest side of a right triangle,

$$\frac{a+b}{2} \ge \sqrt{ab},$$

with equality only in the degenerate case $a = b$, where the leg $\frac{a-b}{2}$ vanishes. The left side represents the arithmetic mean, while the right side represents the geometric mean. Thus the arithmetic mean is greater than or equal to the geometric mean.

If the arithmetic mean is never less than the geometric mean, why would one use the geometric mean? The geometric mean is the appropriate average when one is working with percentages and rates of change. One example is the yearly growth rate of a company's profits over a number of years. To decide when the geometric mean is appropriate, one should ask the question, "if all the quantities had the same value, what would that value have to be in order to achieve the same product" [19].

An extension of the AM-GM inequality is the arithmetic mean-geometric mean-harmonic mean inequality. First we need to know that the harmonic mean $h$ of the positive real numbers $x_1, x_2, \ldots, x_n$ is

$$h = \frac{n}{\frac{1}{x_1} + \frac{1}{x_2} + \cdots + \frac{1}{x_n}}.$$

Archytas of Tarentum, a Greek mathematician, is credited with coining the term harmonic mean. The mean was originally called the sub-contrary mean. Archytas renamed it the harmonic mean because "the ratio proved to be useful for generating harmonious frequencies on string instruments" [21].
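The following minimal Python sketch gives two short numerical illustrations of this passage. First, it checks that legs $\frac{a-b}{2}$ and $\sqrt{ab}$ really give hypotenuse $\frac{a+b}{2}$. Second, it shows why the geometric mean suits growth rates. The yearly growth factors below (+10%, -20%, +25%) are made-up values chosen only for illustration.

```python
import math


def hypotenuse(a, b):
    """Hypotenuse of the right triangle with legs (a - b)/2 and x = sqrt(a*b)."""
    return math.hypot((a - b) / 2, math.sqrt(a * b))


a, b = 9.0, 1.0
print(hypotenuse(a, b), (a + b) / 2)  # both approximately 5.0, the arithmetic mean
print(math.sqrt(a * b))               # 3.0, the geometric mean, shorter than the hypotenuse

# Hypothetical yearly growth factors: +10%, -20%, +25%.
factors = [1.10, 0.80, 1.25]
total = math.prod(factors)             # overall growth over three years, about 1.10
g = total ** (1 / len(factors))        # geometric mean factor, about 1.0323
a_mean = sum(factors) / len(factors)   # arithmetic mean factor, about 1.05
print(g ** 3, a_mean ** 3)  # about 1.10 (matches the total) vs about 1.158 (overstates it)
```

Applying the geometric mean factor in every year reproduces the actual three-year product. The arithmetic mean overstates it, which is exactly the "same product" criterion quoted above.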
A useful application of the harmonic mean is as "a measure of central tendency for data which consists of rates or frequencies" [21]. The harmonic mean is never greater than the arithmetic mean or the geometric mean; this is the arithmetic-geometric-harmonic means inequality. The proof of the arithmetic-geometric-harmonic means inequality as reconstructed by PlanetMath [22] is shown below.

The arithmetic-geometric-harmonic means inequality states that for any positive real numbers $x_1, x_2, \ldots, x_n$,

$$a = \frac{x_1 + x_2 + \cdots + x_n}{n} \ge g = \sqrt[n]{x_1 x_2 \cdots x_n} \ge h = \frac{n}{\frac{1}{x_1} + \frac{1}{x_2} + \cdots + \frac{1}{x_n}}.$$

We have already proven that $a \ge g$, so it remains to prove that the geometric mean is greater than or equal to the harmonic mean. Let $t_i = \frac{1}{x_i}$. By the AM-GM inequality,

$$\frac{t_1 + t_2 + \cdots + t_n}{n} \ge \sqrt[n]{t_1 t_2 t_3 \cdots t_n}.$$

The left-hand side is the arithmetic mean of the $t_i$, and the right-hand side is their geometric mean. Substituting $\frac{1}{x_i}$ for $t_i$ gives

$$\frac{\frac{1}{x_1} + \frac{1}{x_2} + \frac{1}{x_3} + \cdots + \frac{1}{x_n}}{n} \ge \sqrt[n]{\frac{1}{x_1} \cdot \frac{1}{x_2} \cdot \frac{1}{x_3} \cdots \frac{1}{x_n}}.$$

Multiplying both sides of the inequality by the reciprocal of the left-hand side, we obtain

$$1 \ge \frac{n}{\frac{1}{x_1} + \frac{1}{x_2} + \cdots + \frac{1}{x_n}} \sqrt[n]{\frac{1}{x_1} \cdot \frac{1}{x_2} \cdots \frac{1}{x_n}}.$$

Then dividing both sides by $\sqrt[n]{\frac{1}{x_1} \cdot \frac{1}{x_2} \cdots \frac{1}{x_n}}$ gives

$$\frac{1}{\sqrt[n]{\frac{1}{x_1} \cdot \frac{1}{x_2} \cdots \frac{1}{x_n}}} \ge \frac{n}{\frac{1}{x_1} + \frac{1}{x_2} + \cdots + \frac{1}{x_n}}.$$

By the properties of exponents, $\frac{1}{x_1} \cdot \frac{1}{x_2} \cdot \frac{1}{x_3} \cdots \frac{1}{x_n} = (x_1 x_2 x_3 \cdots x_n)^{-1}$. So the denominator of the left-hand side can be rewritten as $\left((x_1 x_2 x_3 \cdots x_n)^{-1}\right)^{\frac{1}{n}} = (x_1 x_2 x_3 \cdots x_n)^{-\frac{1}{n}}$. Again by the laws of exponents, the left-hand side becomes $\frac{1}{(x_1 x_2 x_3 \cdots x_n)^{-\frac{1}{n}}} = (x_1 x_2 x_3 \cdots x_n)^{\frac{1}{n}} = \sqrt[n]{x_1 x_2 x_3 \cdots x_n}$. Thus,

$$\sqrt[n]{x_1 x_2 x_3 \cdots x_n} \ge \frac{n}{\frac{1}{x_1} + \frac{1}{x_2} + \frac{1}{x_3} + \cdots + \frac{1}{x_n}}.$$

In other words, the geometric mean is always greater than or equal to the harmonic mean. Combined with $a \ge g$, our proof is complete:

$$a = \frac{x_1 + x_2 + \cdots + x_n}{n} \ge g = \sqrt[n]{x_1 x_2 \cdots x_n} \ge h = \frac{n}{\frac{1}{x_1} + \frac{1}{x_2} + \cdots + \frac{1}{x_n}}.$$
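The following minimal Python sketch mirrors the substitution $t_i = \frac{1}{x_i}$ from the proof above numerically. The harmonic mean of the $x_i$ is the reciprocal of the arithmetic mean of the $t_i$. Likewise, the geometric mean of the $x_i$ is the reciprocal of the geometric mean of the $t_i$. Taking reciprocals in AM-GM for the $t_i$ therefore reverses the inequality into $g \ge h$. The helper names are our own; the standard library's `statistics.geometric_mean` and `statistics.harmonic_mean` could be used instead.

```python
import math


def arithmetic_mean(xs):
    """a = (x_1 + ... + x_n) / n."""
    return sum(xs) / len(xs)


def geometric_mean(xs):
    """g = (x_1 * ... * x_n) ** (1/n) for positive x_i."""
    return math.prod(xs) ** (1 / len(xs))


def harmonic_mean(xs):
    """h = n / (1/x_1 + ... + 1/x_n) for positive x_i."""
    return len(xs) / sum(1 / x for x in xs)


xs = [2.0, 3.0, 6.0]
a, g, h = arithmetic_mean(xs), geometric_mean(xs), harmonic_mean(xs)
print(a, g, h)  # about 3.667, 3.302, 3.0, so a >= g >= h

# The proof's substitution t_i = 1/x_i: apply AM-GM to the t_i, then take reciprocals.
ts = [1 / x for x in xs]
assert arithmetic_mean(ts) >= geometric_mean(ts)  # AM-GM applied to the t_i
assert math.isclose(h, 1 / arithmetic_mean(ts))   # h is the reciprocal of AM(t)
assert math.isclose(g, 1 / geometric_mean(ts))    # g is the reciprocal of GM(t)
```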