The Presidential Lexicon – Linguistic Analysis of State of the Union

advertisement

Jak Marshall

The Presidential Lexicon – Linguistic Analysis of State of

the Union Addresses 1790-2008

Introduction

The goal of this analysis is to study various aspects of speeches given by US Presidents.

In particular we look at State of the Union Addresses here. We will look at summary

statistics such as the number of sentences in the speeches and the average words per

sentence and how these attributes have varied among the presidents and over time. We

will also compare the distribution of individual words between presidents.

Methods

From a raw text file containing all of the State of the Union Addresses I obtained various

summary statistics. For each speech I counted both the number of individual sentences

made and the average number of words per sentence. For each speech I also counted the

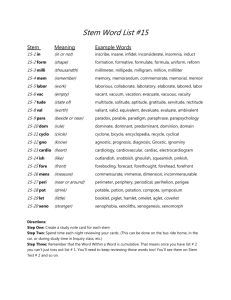

frequency of stems. A stem is a common root word to various words in the speech. For

example, for the words ‘runs’ and ‘running’ the stem ‘run’ is the appropriate stem. By

counting stems we avoid classing very similar words as being entirely different words

altogether, which is both inefficient and unnecessary.

I wrote some computer code in the programming language ‘R’, which first separated the

text file into ‘blocks’ of text where each ‘block’ contained only one speech made by one

president at one time. This was possible because each speech was separated by three

asterisks ‘***’ in the raw text file so the computer was able to recognize when one

speech ended and another began.

To get the number of sentences in each speech, or each ‘block’ the code then separated

portions of the text by periods or ellipsis using a similar process and then the code simply

counted the amount of these smaller blocks of text to give the amount of sentences in

each speech.

To get the number of words in a sentence, the code took each of the sentences in the

speech (these were broken down earlier from the raw text to count the amount of

sentences) and separated them individually by spaces, commas and various other

punctuation marks to return a collection of words. For each sentence, the amount of such

words were counted and the mean number of words was taken by simply adding all of

these values together and dividing by the number of sentences in the speech which was

calculated earlier.

By attributing each speech with the president that made it and the year in history it was

made, I was able to generate a colour coded plot which displays the number of sentences

in the State of the Union addresses against time (colours used to identify the presidents)

and a similar plot which does the same for mean number of words per sentence. I did not

Jak Marshall

produce the code to generate the plot itself. I only supplemented the data for the plot to

use.

For the stem counting I first converted the all of the words in the speeches into lower case

using a command in the R language so that identical words were not classed differently

because of capitalization. I then used a purpose-built piece of code called wordStem (not

written by myself) to convert the entirety of each individual speech into stem form. This

essentially returned each speech where every word was replaced by its stem.

With some further code I created a list of all the unique stems that appear across all of the

speeches.

So now I essentially have for each speech a list of stems, including repeats. I also have a

dictionary of stems that have appeared at least once in at least once speech. I was then

able to use R to look at each individual speech and obtain the frequency of the all the

stems in my stem dictionary that appear in that speech. A lot of the time frequency for a

given stem will be zero in most speeches as it may only appear once in one speech only.

So now for each speech I now had a distribution of the stems in that speech.

Having obtained a list of stem frequencies for each speech (or the stem distribution for

each speech). I was able to feed this data into a piece of code (again, not written by

myself) to take all of these distributions and perform a multi-dimensional scaling

operation on them to compute the distance between each of the distributions from each

other. This multi-dimensional approach is required because each of the distributions is

essentially a vector of the counts for each stem that appeared at least once in one of the

speeches. These vectors will have been of length 13888 so we would need to perform an

operation on 218 such vectors (there were 218 speeches) in R13888. Once these distances

had been computed the same piece of code then produced a plot to represent the distances

in stem distributions between the presidents by grouping the speeches by orator.

I also wrote some code to count the overall frequency of the stems across all of the

speeches (rather than within each individual speech) and ordered the list in decreasing

order to see the top 25 words used by US Presidents in the State of the Union Addresses.

Results

The colour coded plots for the number of sentences and mean number of words per

sentence for all of the speeches are plotted against time below.

Jak Marshall

The plot shows us that the number of sentences in State of the Union Addresses tended to

increase over time from George Washington’s first address and then dropped sharply

with the arrival of Woodrow Wilson and then generally the number of sentences either

increased slightly above or remained steady at this level until the present day. This is

with the exception of the unusually long speeches given by Harry S. Truman and Jimmy

Carter.

Another noticeable trend is the decrease in mean sentence length over time from George

Washington to George Bush. The patterns shows us that as we approach the modern

presidents, the length of sentences become smaller and smaller. Although the mean

sentence length can be quite varied and erratic to look at, the plot clearly shows a decline

in mean sentence length over time. A ‘best fit line’ mode of thinking will show this.

Now I turn to look at the results of the multidimensional scaling operation, which

compared the stem distributions of the various presidents’ speeches. A colour code has

also been used to identify the time period of the presidents displayed here.

Jak Marshall

Looking at the plot it would seem that presidents who are closer together in history also

seem to be closer together in stem distributions. George Washington seems to have the

most similar stem distribution to any other president from his era. This is generally true

for every president. However, the older presidents seems to have more conformity with

the stem distribution among older presidents being more clustered that a collection of

modern presidents, whose stem distributions are not so clustered together. There is almost

a parabolic shape to this plot and there seems to be a very clear divide between post

1950s presidents and pre 1950s presidents with each group occupying one side of the plot

a considerable distance away from the other group.

The top 25 words used across all the speeches are as follows:

1 – the, 2 – of, 3 – to, 4 – and, 5 – in, 6 – a, 7 – that, 8 – it, 9 – be, 10 – for, 11- our, 12 –

is, 13 – by, 14 – have, 15 – which, 16 – as, 17 – with, 18 – this, 19 – we, 20 – will, 21 – I,

22 – on, 23 – has, 24 – state, 25 – not.

Conclusions

From my analysis of the State of the Union Addresses I have learned how various aspects

of the presidential speeches have changed. Sentences have gotten shorter and whilst the

length of the addresses seemed to get longer and longer at one stage, it seemed that the

presidents post Wilson decided to stick to a certain amount of sentences in their speeches

which is not as low as George Washington’s but not as high as Truman’s post war

speech.

I have also found that the most commonly used words in these speeches are simple

syntactical words such as ‘the’ and ‘is’ that are among the most ubiquitous words in most

written documents. The only notable non-syntactical word is ‘state’ which is unsurprising

Jak Marshall

given the nature of the documents being studied here. Specific words such as

‘Afghanistan’ and ‘terrorist’ don’t get used by all of the presidents and the presidents that

do use these words don’t tend to repeat them to the extent of everyday words like ‘and’

and ‘as’ would be. In further research I’d like to see which stems are most popular other

than these very common syntactical words to see which ‘meaningful’ words are most

commonly used. The criterion for which words should be omitted is also a problem to be

considered.

Harry S. Truman’s speech came shortly after the end of the Second World War whilst

Jimmy Carter presided over a severe oil crisis. This may be why these addresses are so

long but this is just a theory of mine.

Sentences have definitely gotten a lot shorter in modern speeches from the first plot and

the stem distributions of the modern speeches are very different to the older ones. The

stem distribution difference may be a general trend seen in all documents from these

times, as the change in language and choice of words and styles over time is well-known

phenomenon. However, the shortening of sentences may have various explanations.

Perhaps the introduction of mass media has persuaded more presidents to adopt more

succinct, sharp and perhaps even more theatrical ways of delivering their addresses or

this could be just another phenomenon caused by the passage of time. This would be

something worth considering in future studies.

Appendix – R code

#State of the Union Analysis

#Graded Assignment 4

#Jak Marshall

# - Here I read in the text file from bspace which first contains all

of the State of the Union Addresses after a list of all the presidents

and dates of the speeches.

options(max.print=10000000)

alltext<readLines(con="/class/u/s133/s133ap/Desktop/stateoftheunion17902008.txt")

# - Using indexing I strip out the lines which list the presidents and

the dates of the speeches

prestext<-alltext[11:228]

# - I use the same regular expression with two different back

references to create first a vecotr

Pres<-gsub(pattern="^ ([^,]+), [^,]+, ([^,]+, [[:digit:]]+)", "\\1",

prestext)

RawDates<-gsub(pattern="^ ([^,]+), [^,]+, ([^,]+, [[:digit:]]+)",

"\\2", prestext)

# - Using strptime and another regular expression I can convert dates

from the given format to the POSIXt format.

Dates<-strptime(RawDates, format= "%B %d, %Y")

#----------------------------

Jak Marshall

#The following code creates the vectors meanwords and nsentences

required to produce the first plot

speech.sentences<-speech.words<- vector("list", length(Pres))

meanwords<- rep(NA, times= length(Pres))

nsentences<- rep(NA, times= length(Pres))

speechtext<-alltext[233:168851]

a<- c(grep("\\*\\*\\*", speechtext),length(speechtext))-2

b<- c(5,grep("\\*\\*\\*", speechtext)+6)

for(i in 1:218){

x<-speechtext[b[i]:a[i]]

x<-paste(x, sep="", collapse=" ")

speech.sentences[i]<-strsplit(x, split="\\.+")

speech.words[i]<-strsplit(x, split="[[:punct:] +]+")

nsentences[i]<-length(speech.sentences[[i]])

meanwords[i]<length(speech.words[[i]])/length(speech.sentences[[i]])

}

#----------------------#This code produces the first plot

par(mfrow = c(2, 1), mar = c(0, 4, 1, 1), oma = c(12, 0, 2, 0),

xpd = NA)

plot(Dates, nsentences, type = "l", col = "grey",

xaxt = "n", ylab = "Number of Sentences")

title(main = "Summary Statistics for State of the Union Addresses",

line = 1)

points(Dates, nsentences, pch = 16, col = match(Pres, unique(Pres)))

plot(Dates, meanwords, type = "l",

col = "grey", ylab = "Avg Words per Sentence")

points(Dates, meanwords, pch = 16, col = match(Pres, unique(Pres)))

text(Dates[!duplicated(Pres)], 5, unique(Pres),

srt = -90, col = 1:length(Pres), adj = 0, srt = -90)>

dev.print(pdf, file = "SoUplot.pdf", height = 8, width = 10)

# - I install the library I need and create the vector of stems in

speeches speech.stems and the stem dictionary all.stems

library(Rstem,lib.loc="~/Rlibs")

speech.stems<-lapply(lapply(speech.words,tolower), wordStem)

all.stems<-unique(unlist(speech.stems))

# - I create the matrix of stem frequencies for all the speeches

stem.count<-matrix(nrow=length(all.stems), ncol=218)

for(i in 1:218){

stem.count[,i]<-tabulate(factor(speech.stems[[i]],levels=all.stems)

,length(all.stems))

}

# - this code used stem.count to give the second plot.

source("/class/u/s133/s133ap/Rlibs/SJD.R")

Jak Marshall

presfreq <- function(president, stem.count, Pres){

submat <- stem.count[,Pres == president, drop = FALSE]

wordcounts <- apply(submat, 1, sum)

return(wordcounts/sum(wordcounts))

}

word.freq <- sapply(unique(Pres), presfreq, stem.count, Pres)

SJ.mat <- computeSJDistance(terms = all.stems,

df = apply(word.freq > 0, 1, sum),

tf = word.freq)

mds <- cmdscale(SJ.mat)

require(fields)

lim <- range(mds[,1] + c(-0.15, 0.15) * diff(range(mds[,1]))) # Make

room for names

par(oma = c(3, 0, 0, 0))

plot(mds, type = "n", xlim = lim, bty = "l", xlab = "", ylab = "")

text(mds, label = unique(Pres), col = tim.colors(length(unique(Pres))))

title(main = "Distances Between Presidentional Word Frequency

Distributions")

image.plot(zlim = c(1790, 2008), col = tim.colors(40), legend.only =

TRUE,

horizontal = TRUE, legend.mar = 0, legend.shrink = 0.7)

dev.print(pdf, file = "mds.pdf", height = 7, width = 10)

# - This code gives the total amount of uses of each stem

stem.sums<-apply(stem.count, MARGIN=1, sum)

# - This code gives each element its associated word as the names of

the vector

names(stem.sums)<-all.stems

# - This puts them in order.

sort(stem.sums, decreasing=TRUE)

#----------------